What’s the Real Cost of Building Your Own Generative AI?

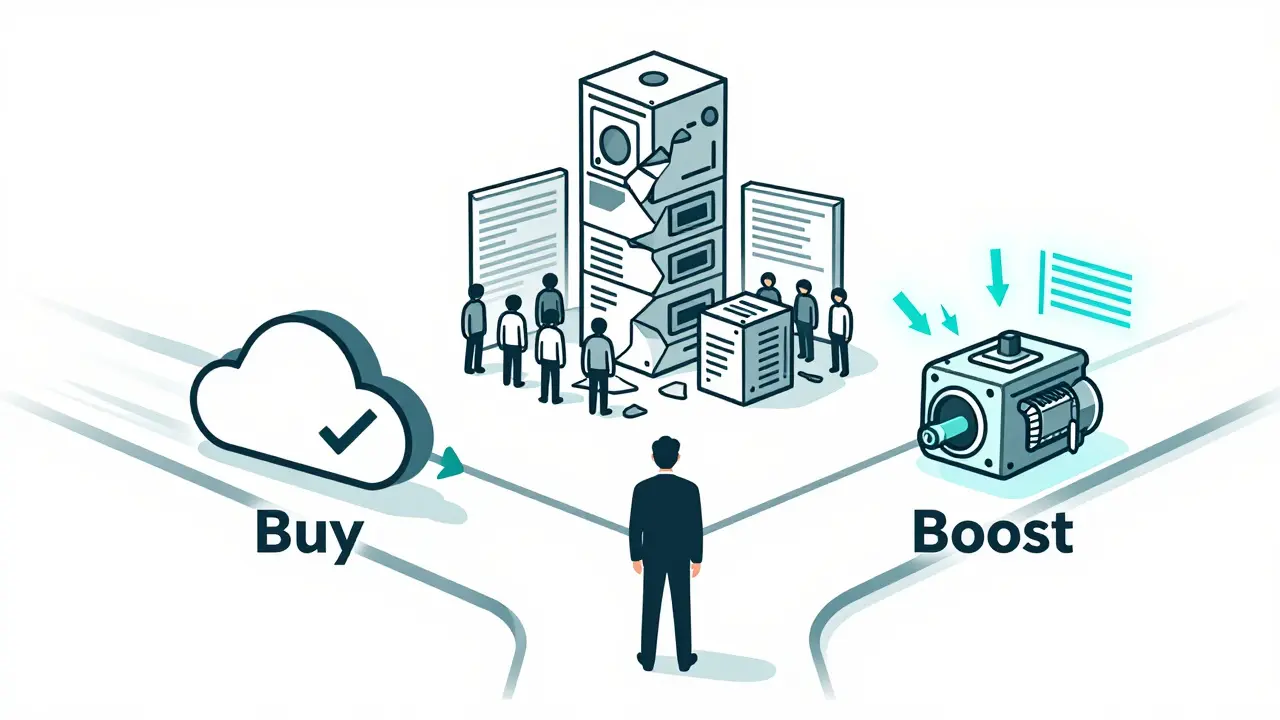

Most CIOs start with a simple question: Should we build our own generative AI model, or buy one? It sounds like a technical choice, but it’s really a business one. The wrong decision can cost millions, delay critical projects, or even expose your company to legal risk. The truth? Only 5% of organizations need to build from scratch. The rest are better off buying, or boosting (fine-tuning a purchased platform with their own data), and focusing their engineering talent where it matters most.

Let’s cut through the noise. In 2024, Gartner found that 72% of commercial AI tools meet only 60-70% of enterprise needs out of the box. That doesn’t mean they’re bad. It means you’re asking them to do too much. The real question isn’t whether you can build it. It’s whether you should.

When Buying Makes Sense - And When It Doesn’t

Buying a generative AI platform isn’t just cheaper. It’s faster. Microsoft’s Azure OpenAI Service, Anthropic’s Claude Enterprise, and Google’s Vertex AI can be up and running in 2-8 weeks. You don’t need a team of 15 ML engineers. You need a business analyst, an IT integrator, and a clear use case.

Take customer service. A Fortune 500 bank spent $4.2 million and nine months building a custom AI to handle customer inquiries. After launch, they realized the commercial solution they’d rejected could’ve handled 85% of those same queries - at 30% of the cost. They switched. Saved $3.1 million in the first year.

Commercial platforms work best when:

- The task is well-defined (e.g., drafting emails, summarizing reports, generating product descriptions)

- The cost of an error is low (a bad draft can be edited)

- You need speed, not uniqueness

But here’s the catch: usage-based pricing can sneak up on you. Azure OpenAI bills per token, and rates vary by model. At $0.0001 per 1,000 input tokens and $0.0003 per 1,000 output tokens, the cost sounds tiny - until you’re running 500,000 queries a day. One healthcare provider saw their monthly bill jump from $12,000 to $89,000 after rolling out AI to all patient intake forms. They had to cap usage. That’s not a failure. It’s a lesson.
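A quick back-of-the-envelope estimate shows how per-token pricing scales with volume. The sketch below uses the per-1,000-token rates and the 500,000 queries a day mentioned above. The tokens-per-query figures and the 30-day month are illustrative assumptions, not measurements from any real deployment.

```python
# Rates quoted in the text above (USD per 1,000 tokens).
INPUT_RATE_PER_1K = 0.0001
OUTPUT_RATE_PER_1K = 0.0003


def monthly_token_cost(queries_per_day, input_tokens, output_tokens, days=30):
    """Estimate a monthly usage bill in USD for a per-token priced API."""
    per_query = (
        (input_tokens / 1000) * INPUT_RATE_PER_1K
        + (output_tokens / 1000) * OUTPUT_RATE_PER_1K
    )
    return queries_per_day * days * per_query


# Assumed workload: 1,500 input and 500 output tokens per query (hypothetical).
estimate = monthly_token_cost(500_000, input_tokens=1500, output_tokens=500)
print(f"Estimated monthly bill: ${estimate:,.2f}")
```

The bill grows linearly with both query volume and prompt length, so it is worth running this kind of estimate with your own token counts and your vendor's current rates before a company-wide rollout.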

When Building Is the Only Real Option

There are cases where buying isn’t enough: when the stakes are high, the context is messy, and the model must understand your business like a seasoned employee.

Think healthcare diagnostics for rare diseases. A misdiagnosis can cost over $1 million in lost time, lawsuits, or patient harm. Commercial models are trained on public data - they’ve never seen your hospital’s specific patient records, lab protocols, or drug interactions. You need a model fine-tuned on your own data. That means building.

Or consider financial risk modeling. A bank’s internal fraud detection system must adapt to evolving fraud patterns that no public dataset captures. A vendor’s model won’t know your customer behavior, your transaction history, or your compliance thresholds. Custom is the only way.

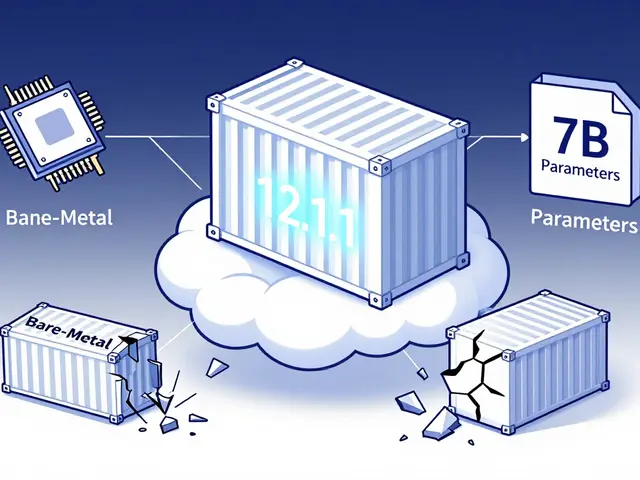

But building comes with brutal costs:

- Hardware: Training a 70B-parameter model needs 2,000 NVIDIA A100 GPUs - $20-30 million upfront

- Team: 15-20 specialized roles (ML engineers, MLOps, data scientists) - $2.5-3.5 million in annual salaries

- Time: 6-12 months just to reach production readiness

- Risk: 68% of custom projects exceed budget by 40% or more (EY, 2024)
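To put those figures side by side, here is a rough first-year tally. It uses only the hardware and salary ranges and the 40% overrun figure from the list above. Applying the overrun to the whole budget, hardware included, is a simplifying assumption made for illustration.

```python
# Figures quoted in the list above, in millions of USD.
HARDWARE = (20.0, 30.0)  # upfront GPU cost range
SALARIES = (2.5, 3.5)    # annual team cost range
OVERRUN = 0.40           # overrun level cited for 68% of custom projects


def first_year_cost(hardware, salaries, overrun=0.0):
    """Return the (low, high) first-year cost, optionally inflated by an overrun."""
    low = (hardware[0] + salaries[0]) * (1 + overrun)
    high = (hardware[1] + salaries[1]) * (1 + overrun)
    return low, high


planned = first_year_cost(HARDWARE, SALARIES)
with_overrun = first_year_cost(HARDWARE, SALARIES, OVERRUN)
print(f"Planned:      ${planned[0]:.1f}M - ${planned[1]:.1f}M")
print(f"With overrun: ${with_overrun[0]:.1f}M - ${with_overrun[1]:.1f}M")
```

Under these assumptions, the planned range is $22.5-33.5 million before a single production query is served.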

And it’s not over when you launch. Model drift hits 78% of custom AI systems within 18 months. Your model starts giving weird answers because your data changed. You need a team to monitor, retrain, and fix it - constantly.
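Monitoring for drift usually starts with comparing live inputs against the data the model was trained on. One common way to do that, shown here purely as an illustration and not as something the cited figures are based on, is the Population Stability Index (PSI). The bin count, the sample data, and the 0.25 alert threshold below are all assumptions. The threshold is a widely used rule of thumb, not a standard.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny value so the logarithm stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical feature values: training-time baseline vs. shifted live traffic.
baseline = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]

score = psi(baseline, live)
print(f"PSI = {score:.2f}", "-> investigate or retrain" if score > 0.25 else "-> stable")
```

A check like this only flags that inputs have shifted. Someone still has to decide whether the shift matters and whether to retrain, which is the ongoing staffing cost described above.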

The Middle Ground: Boosting

There’s a third option most people overlook: boosting. That means buying a commercial platform and fine-tuning it with your own data. You get the speed of a pre-built model, plus the customization you need.

Microsoft’s Azure OpenAI Studio now lets you upload your internal documents, training data, and workflows - then fine-tune GPT-4 on them without training or hosting the base model yourself. Same with Anthropic’s Claude Enterprise Custom. You’re not building from scratch. You’re adapting a proven engine to your needs.

Boosting cuts development time to 30-45% of a full build. It costs less than hiring a team. And it avoids the security gaps that plague custom builds - because the vendor still manages the core infrastructure.

Companies using boosting report:

- 40% faster deployment than full builds

- 70% higher accuracy on domain-specific tasks

- 25-35% higher operational cost than standard commercial use (due to more tokens)

That’s a fair trade if your use case is high-value and unique.

Security, Compliance, and Hidden Risks

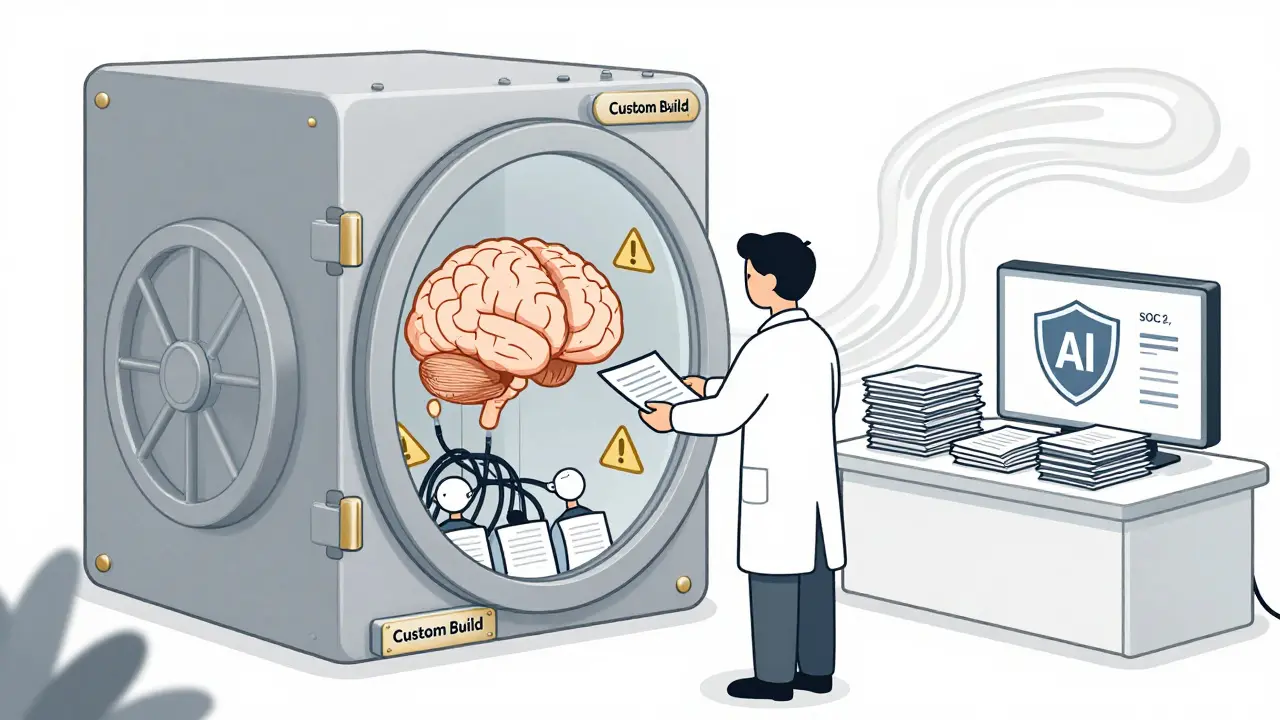

Building your own AI sounds powerful - until you realize you’re now responsible for everything: data encryption, access controls, audit logs, GDPR compliance, HIPAA alignment.

Commercial platforms come with SOC 2 Type II, GDPR, HIPAA, and 47+ pre-certified compliance frameworks built in. Deloitte found it takes 12-18 months to achieve that level of certification on your own - if you can do it at all.

EY’s 2024 security audit found that 63% of organizations building in-house AI had critical vulnerabilities during early deployment. One financial firm accidentally exposed customer PII because their fine-tuning pipeline didn’t properly anonymize the training data. The vendor they bought from? Their model never had access to raw data.
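As a rough idea of what an anonymization step in a fine-tuning pipeline might look like, the sketch below masks a few obvious identifiers before text is used as training data. The patterns, labels, and sample record are hypothetical and deliberately minimal. Real PII detection needs far broader coverage (names, addresses, account numbers) and usually a dedicated tool.

```python
import re

# Illustrative patterns only; these cover a small subset of real-world PII.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text):
    """Replace each matched identifier with a bracketed placeholder label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


record = "Contact Jane at jane.doe@example.com or 555-123-4567, SSN 123-45-6789."
print(redact(record))
```

Note that the name in the sample record passes through untouched. Regex-based masking like this is a first line of defense, not a guarantee.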

And don’t forget vendor lock-in. 58% of CIOs worry about being tied to one provider. But if you build your own, you’re locked into your own team’s skills, your own infrastructure, your own maintenance burden. Which is really more flexible?

Who Should Do What? A Simple Decision Tree

Here’s how to decide - no PhD required.

- Is the task standardized and low-risk? (e.g., drafting internal memos, summarizing meeting notes, generating marketing copy) → Buy

- Is the task high-stakes and unique to your business? (e.g., diagnosing rare diseases, detecting fraud in your transaction network, interpreting your proprietary contracts) → Build

- Is the task somewhere in between? (e.g., processing loan applications, answering HR policy questions, analyzing internal support tickets) → Boost

Ask these follow-up questions:

- Can we measure ROI within 6 months? If not, avoid building.

- Do we have 15+ top-tier AI engineers on staff? If not, avoid building.

- Are we in a regulated industry? If yes, buy or boost - don’t build.

- Is our data too sensitive to send to a vendor? If yes, build - but only if you can afford the security team.
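The decision tree and follow-up questions above can be sketched as a small function. The field names and the order in which the rules are applied are assumptions made for illustration, since the text doesn't say which rule wins when two conflict. Here, data that can't go to a vendor forces a build, and any other failed follow-up check downgrades a build to a boost.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    standardized: bool         # well-defined, routine task
    high_stakes: bool          # errors are costly
    unique_to_business: bool   # depends on proprietary data or context
    roi_within_6_months: bool
    top_tier_ai_engineers: int
    regulated_industry: bool
    data_too_sensitive_for_vendor: bool


def recommend(uc: UseCase) -> str:
    """Return 'buy', 'boost', or 'build' for a use case."""
    # First pass: the three-way decision tree.
    if uc.standardized and not uc.high_stakes:
        choice = "buy"
    elif uc.high_stakes and uc.unique_to_business:
        choice = "build"
    else:
        choice = "boost"

    # Assumed precedence: data that cannot leave the company forces a build.
    if uc.data_too_sensitive_for_vendor:
        return "build"

    # The other follow-up questions veto a build; falling back to 'boost'
    # (and not 'buy') is an assumption.
    build_blocked = (
        not uc.roi_within_6_months
        or uc.top_tier_ai_engineers < 15
        or uc.regulated_industry
    )
    if choice == "build" and build_blocked:
        return "boost"
    return choice


memo_drafting = UseCase(True, False, False, True, 0, False, False)
fraud_big_team = UseCase(False, True, True, True, 20, False, False)
fraud_small_team = UseCase(False, True, True, True, 5, False, False)

print(recommend(memo_drafting), recommend(fraud_big_team), recommend(fraud_small_team))
```

Treat the output as a conversation starter for the steering committee. The thresholds are the article's rules of thumb, and your own risk appetite may reorder them.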

What’s Happening in 2026?

The market is shifting fast. Gartner predicts 60% of current AI vendors will disappear by 2027. That means buying today carries risk - but so does building. The winners will be those who use a hybrid, composable approach: buy the foundation, build the differentiator.

Microsoft, Google, and AWS are making it easier than ever to plug custom logic into their platforms. You can now train a model on your data, deploy it behind your firewall, and still get automatic updates, security patches, and support.

Meanwhile, companies that tried to build everything themselves are now scrambling to buy - and paying premium prices to migrate. The lesson? Don’t be the company that spent $5 million to reinvent the wheel, only to realize the wheel was already better.

Final Thought: AI Is a Tool, Not a Trophy

Building your own generative AI isn’t a badge of honor. It’s a massive investment with high risk and narrow payoff. Most companies don’t need to do it. They need to use AI to solve real problems - faster, cheaper, and safer.

The best CIOs aren’t the ones with the most engineers. They’re the ones who know exactly when to say ‘no’ to building - and when to say ‘yes’ to buying, boosting, or both.

Is it cheaper to build or buy a generative AI platform?

Buying is almost always cheaper upfront. Building requires $20-30 million in hardware, $2.5-3.5 million in annual salaries, and 6-12 months of development. Commercial platforms start at under $10,000/month with pay-as-you-go pricing. Only organizations with unique, high-stakes needs (like healthcare diagnostics or financial fraud detection) justify the cost of building.

How long does it take to deploy a generative AI platform?

Buying a commercial platform takes 2-8 weeks. Boosting (fine-tuning a bought model) takes 4-12 weeks. Building from scratch takes 6-12 months. Most organizations see ROI within 90 days of buying, while custom builds typically take 18+ months to pay off.

Can I trust commercial AI vendors with my data?

Yes - if you choose the right vendor. Enterprise platforms like Azure OpenAI, Google Vertex AI, and Anthropic’s Claude Enterprise offer data isolation, encryption at rest and in transit, and contractual guarantees that your data won’t be used to train public models. Always ask for a data processing agreement (DPA) and confirm they’re SOC 2 and GDPR compliant. Never use free or consumer-grade tools like ChatGPT for business data.

What’s the biggest mistake companies make with AI?

Trying to use one approach for everything. Some companies force commercial tools into complex, high-risk workflows - and get bad results. Others build custom models for simple tasks like email drafting, wasting millions. The winning strategy is context-driven: buy for routine tasks, boost for semi-custom needs, build only for mission-critical, unique applications.

Should I use open-source models like Llama or Mistral instead of buying?

Only if you have deep AI engineering expertise and the budget to maintain it. Open-source models don’t come with support, security patches, compliance certifications, or uptime guarantees. You’ll need to host them, fine-tune them, monitor them, and fix them - all in-house. For most companies, that’s more expensive and riskier than buying a managed enterprise service.

What’s the future of build vs buy for AI?

The future is hybrid. Cloud providers are making it easier to customize their platforms with your data - without building your own models. By 2027, most enterprises will use a mix: commercial platforms for 80% of tasks, and custom-built systems only for the 20% that truly require proprietary intelligence. The companies that thrive will be those who treat AI as a strategic lever - not a technology project.
