AI-generated code isn’t coming. It’s already here. Teams are typing prompts like "build a login form with password reset" and getting working code in seconds. But if you don’t set clear rules, that speed turns into chaos. One junior developer at a fintech startup accidentally pushed Stripe API keys into client-side JavaScript. The breach cost them $87,000 in fraud losses and three weeks of emergency fixes. That’s not an outlier. It’s what happens when you skip governance.

What Vibe Coding Actually Means (And Why It’s Not Magic)

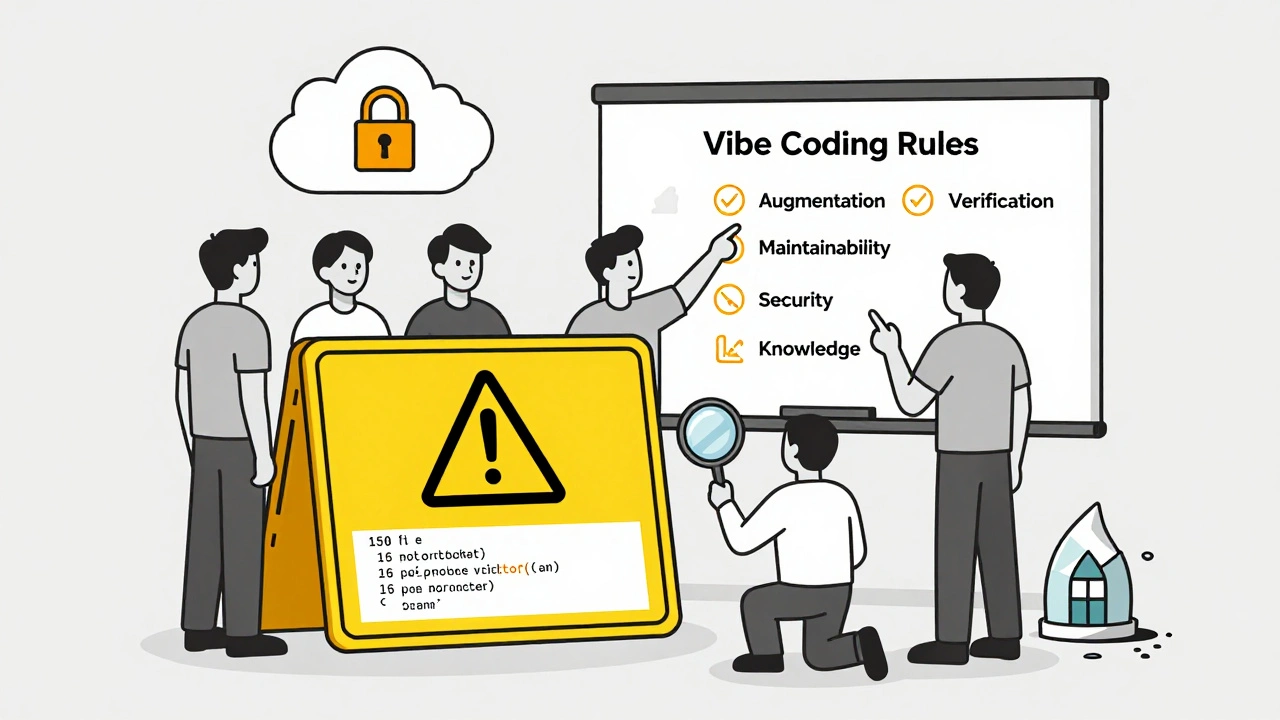

Vibe coding isn’t about letting AI write your whole app. It’s about using AI as a co-pilot: it drafts the first version, but you still hold the steering wheel. The Vibe Programming Framework is a structured approach to AI-assisted development that treats AI as an augmentation tool, not a replacement for human judgment. It’s built on five core principles: Augmentation, Not Replacement; Verification Before Trust; Maintainability First; Security by Design; and Knowledge Preservation.

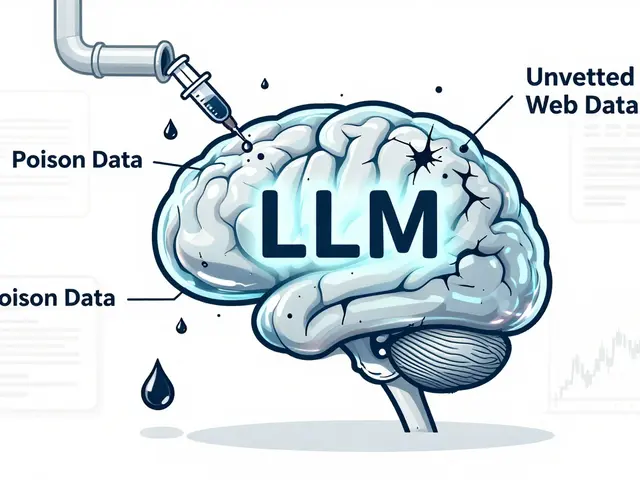

Without these guardrails, you’re in the Wild West. GitHub’s 2024 State of the Octoverse report found that 37% of AI-generated code had security flaws. That’s not a bug; it’s a feature of uncontrolled AI. If you don’t verify, sanitize, and review, you’re inviting SQL injection, XSS, and credential leaks into your production apps.

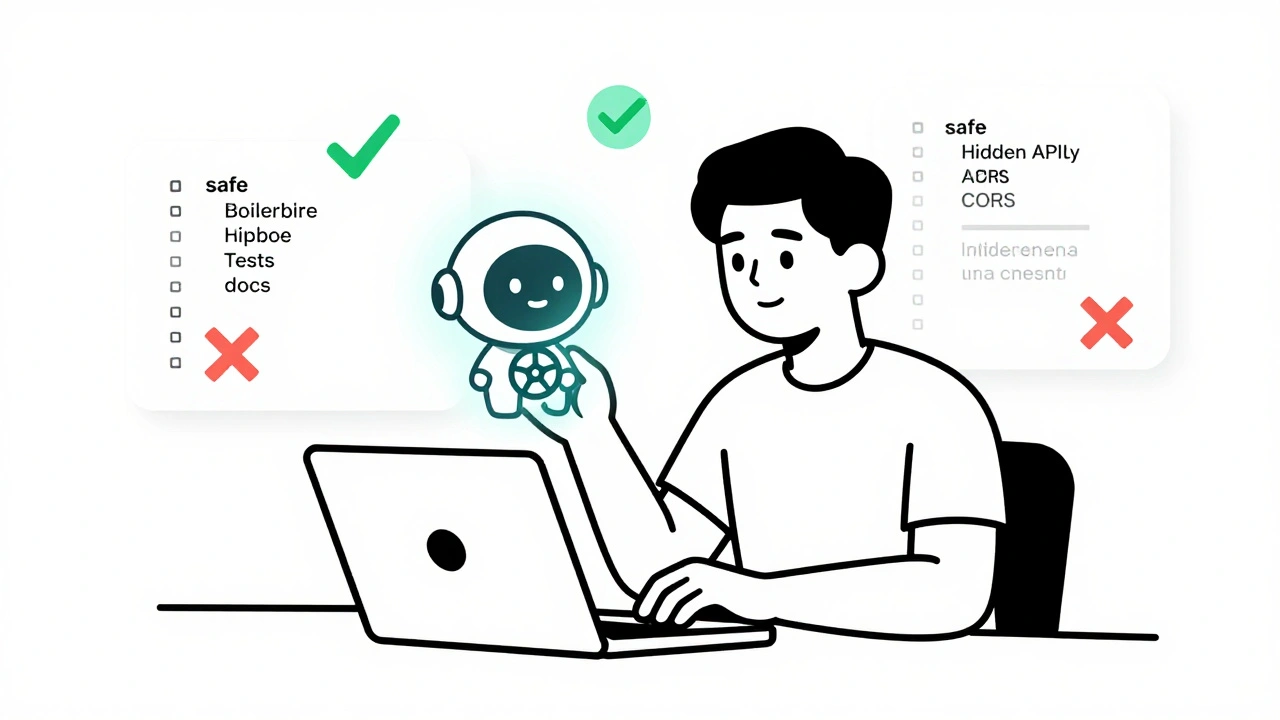

What to Allow: The Green Light Rules

Not all AI-generated code is dangerous. When used right, it boosts productivity. Here’s what you should actively encourage:

- AI-generated boilerplate: things like form handlers, API clients, or CRUD endpoints. These are low-risk, repetitive tasks that slow developers down. Let AI draft them.

- Unit test scaffolding: AI can generate test cases based on function signatures. Review them, but don’t write them manually.

- Documentation stubs: AI can draft comments, READMEs, and API docs. You polish them. The Vibe Programming Framework calls this "Knowledge Preservation": keeping context alive so new team members don’t get lost.

- Refactoring suggestions: AI can spot duplicated code or suggest cleaner patterns. Use it to improve readability, not to rewrite logic blindly.

One team at a SaaS company reduced onboarding time by 40% by letting AI generate initial code for common features. They still required every line to be reviewed, but the heavy lifting was done. Productivity jumped. Bugs dropped.

What to Limit: The Yellow Light Zones

Some AI outputs are risky but not forbidden. They need controls. Treat these like live wires: handle with care.

- Complex business logic: AI doesn’t understand your domain. Don’t let it write your payment validation rules, tax calculations, or compliance workflows unsupervised. These need human oversight.

- Database schemas: AI can suggest table structures, but never deploy them without a DBA review. Poorly designed schemas break scaling and security.

- Third-party integrations: AI might generate code that connects to Stripe, Auth0, or AWS. Verify every API key, scope, and callback URL. Never trust AI to handle auth flows on its own.

- File uploads: AI might generate code that accepts any file type. Limit uploads to specific extensions (PDF, JPG, PNG), scan for malware, and store files outside the web root.
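The file-upload rule can be sketched in a few lines of Python. This is a minimal illustration, not a complete defense: the `/srv/app-uploads` directory and the `safe_upload_path` helper are hypothetical names, and the sketch assumes malware scanning happens in a separate step.

```python
from pathlib import Path

# Allowlist taken from the rule above: PDF, JPG, PNG only.
ALLOWED_EXTENSIONS = {".pdf", ".jpg", ".png"}

# Hypothetical storage directory that the web server does not serve directly.
UPLOAD_ROOT = Path("/srv/app-uploads")


def safe_upload_path(filename: str, upload_root: Path = UPLOAD_ROOT) -> Path:
    """Return where an upload may be stored, or raise ValueError if it is rejected."""
    # Keep only the final path component so "../../x.png" cannot escape the root.
    name = Path(filename.replace("\\", "/")).name
    if not name or name.startswith("."):
        raise ValueError("missing or hidden file name")
    # Compare the extension case-insensitively against the allowlist.
    if Path(name).suffix.lower() not in ALLOWED_EXTENSIONS:
        raise ValueError(f"file type not allowed: {name!r}")
    return upload_root / name
```

An extension check only looks at the file name, so a renamed executable would still pass it. That is why the rule also calls for malware scanning and storage outside the web root.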

According to Darren Coxon’s "Golden Rules of Full Stack Vibe Coding," no AI-generated component should exceed 150 lines. Why? Because longer blocks become unreadable. Teams that enforced this rule saw a 52% drop in code review time and a 31% reduction in post-deploy bugs.
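A line limit is easy to check mechanically. The sketch below assumes that blank lines do not count toward the 150-line limit, which the rule itself does not specify; the function names are illustrative.

```python
MAX_COMPONENT_LINES = 150  # limit quoted above


def count_code_lines(source: str) -> int:
    """Count non-blank lines (assumption: blank lines are not counted)."""
    return sum(1 for line in source.splitlines() if line.strip())


def exceeds_limit(source: str, limit: int = MAX_COMPONENT_LINES) -> bool:
    """Return True when a component is longer than the allowed line count."""
    return count_code_lines(source) > limit
```

A reviewer or CI job could call `exceeds_limit` on each AI-generated file and ask for the component to be split when it returns `True`.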

What to Prohibit: The Red Light Rules

Some things are non-negotiable. If your team does these, you’re not just risky; you’re negligent.

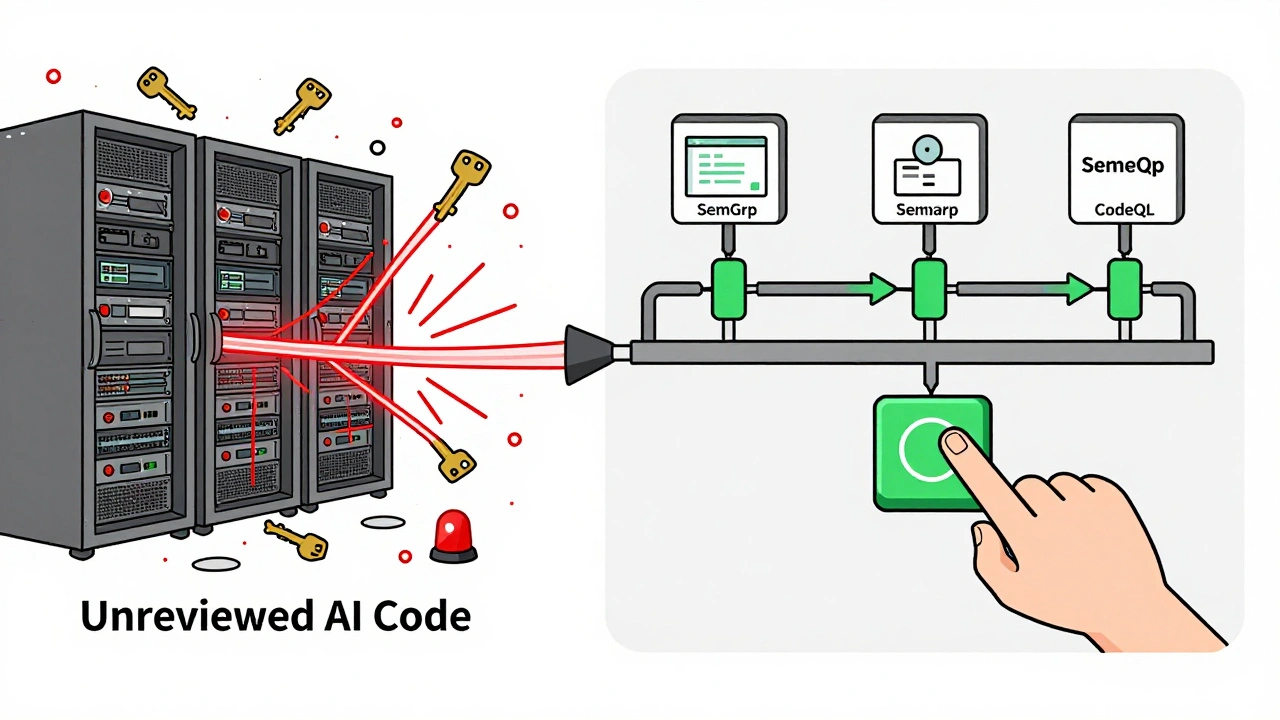

- Hardcoding secrets: API keys, database passwords, encryption keys. Never, ever let AI put them in code. Use environment variables. The Replit Security Checklist mandates this. One company lost $120,000 in cloud credits because AI-generated code left an AWS key in a public GitHub repo.

- Client-side storage of sensitive data: No API keys in localStorage, sessionStorage, or cookies. Even if you "obfuscate" them, they’re still readable by anyone with DevTools.

- Wildcard CORS settings: Never use `Access-Control-Allow-Origin: *`. Restrict it to your own domains. AI often suggests wildcards because they’re easy. They’re also dangerous.

- Unsanitized user input: AI-generated forms often skip input validation. Validate input on the server and prevent SQL injection with parameterized queries or an ORM. No exceptions.

- Skipping verification: If your team has a "just deploy it and fix later" culture, you’re not using vibe coding; you’re gambling. Every line of AI-generated code must be reviewed by a human before merge.
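Three of these rules translate directly into code. The Python sketch below shows one possible shape for each, using only the standard library. The origins in `ALLOWED_ORIGINS`, the `users` table, and all function names are assumptions made for illustration.

```python
import os
import sqlite3
from typing import Dict, Optional, Tuple

# Hypothetical list of origins you control; replace with your own domains.
ALLOWED_ORIGINS = {"https://app.example.com", "https://admin.example.com"}


def load_secret(name: str) -> str:
    """Read a secret from the environment instead of hardcoding it."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


def cors_headers(request_origin: Optional[str]) -> Dict[str, str]:
    """Echo the origin only when it is on the allowlist; never send '*'."""
    if request_origin in ALLOWED_ORIGINS:
        return {"Access-Control-Allow-Origin": request_origin, "Vary": "Origin"}
    return {}


def find_user(conn: sqlite3.Connection, email: str) -> Optional[Tuple[int, str]]:
    """Look up a user with a parameterized query; input is never spliced into SQL."""
    cursor = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cursor.fetchone()
```

Because the `?` placeholder passes `email` as data, an injection string such as `' OR '1'='1` is treated as a literal email address and matches nothing.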

The Cloud Security Alliance warns: "Ignorance isn’t a defense when regulators come knocking." If you’re handling EU, California, or Singapore user data, you’re legally required to document how you control AI-generated code. Under GDPR, fines for non-compliance can reach 4% of global annual revenue or €20 million, whichever is higher. CCPA penalties are assessed per violation instead of as a share of revenue.

How to Build a Vibe Coding Policy (Step by Step)

Start small. Don’t try to overhaul everything at once.

- Choose a pilot project: Pick a low-risk internal tool. Not your customer-facing app.

- Define your five rules: Start with the top prohibitions: no secrets in code, no client-side storage, no wildcard CORS, no unreviewed code, no components over 150 lines.

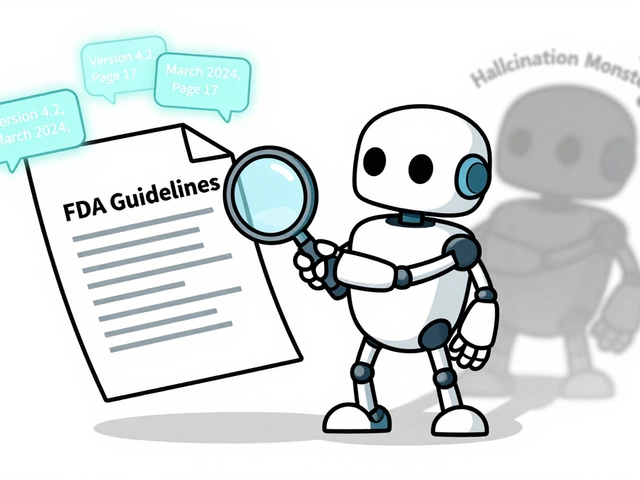

- Integrate with your CI/CD: Use tools like CodeQL, Semgrep, or Snyk to auto-scan AI-generated code for secrets and vulnerabilities before merge.

- Train your team: Run a 90-minute workshop. Show them real breach examples. Make them review a bad AI-generated snippet and fix it.

- Measure and adjust: Track how many AI-generated PRs get flagged. How long does review take? Adjust your 150-line rule or add new checks based on data.
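To make the CI step concrete, here is a toy secret scanner in Python. It is a sketch of the idea only and does not replace CodeQL, Semgrep, or Snyk. The three patterns are illustrative assumptions and will miss most real secret formats.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; dedicated scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "stripe_live_secret_key": re.compile(r"\bsk_live_[0-9a-zA-Z]{16,}\b"),
    "hardcoded_credential": re.compile(
        r"(?i)(?:api_?key|secret|password|token)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a pattern."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

A CI job could run `scan_text` over each changed file and fail the build when the returned list is not empty. Counting those findings per pull request also gives you the flag rate mentioned in the last step.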

Teams that followed this process saw a 63% drop in security incidents within six months, according to a case study by TechCorp. They didn’t slow down. They just stopped making dumb mistakes.

Enterprise vs. Indie: Different Rules, Same Goals

Big companies and solo devs need different policies but the same outcomes.

Enterprise teams need centralized governance. Superblocks’ Enterprise Vibe Coding Playbook recommends an AI Center of Excellence (CoE): a small team that sets standards, runs audits, and trains others. They use a "single pane of glass" to monitor all AI-generated code across departments. Compliance checks are automated. Every deployment requires sign-off.

Indie developers don’t have CoEs. But they can still follow the same principles. Use GitHub Actions to auto-scan for secrets. Install a browser extension that blocks local storage on dev sites. Write a one-page policy and pin it to your README. Your rulebook doesn’t need to be 50 pages. It just needs to be followed.

The goal isn’t control for control’s sake. It’s speed without sacrifice. Vibe coding lets you ship faster, but only if you control the chaos.

What’s Next: The Future of AI Code Governance

By 2026, Gartner predicts 70% of enterprises will have formal AI coding policies. The tools are catching up. The Vibe Programming Framework’s 2.1 update (June 2025) now includes built-in OWASP Top 10 prompt templates, so the AI automatically adds security checks when you ask for code. Superblocks launched automated compliance scanning in May 2025. Cloudflare’s sandboxing requirements are becoming standard.

But the biggest shift? Human oversight isn’t optional anymore. AI will get better. So will the attacks. The teams that win aren’t the ones using the most AI. They’re the ones who know exactly when to say no.

Can I use AI to write my entire app?

No. AI can draft components, but it doesn’t understand business logic, compliance needs, or long-term maintainability. Apps built entirely by AI are brittle, insecure, and impossible to debug. Always keep a human in the loop.

What’s the biggest mistake teams make with vibe coding?

Skipping verification. Many teams assume AI-generated code is "good enough" and skip reviews. That’s how security flaws slip into production. Even if the code looks clean, always ask: "Do I understand what this does?" If the answer is no, don’t merge it.

Do I need to hire a security expert to use vibe coding?

Not necessarily. But every developer on the team needs basic security training: how to spot secrets, validate inputs, configure CORS, and use environment variables. A 90-minute workshop is enough to start. Tools like Snyk and Semgrep automate the heavy lifting.

Is vibe coding legal for customer-facing apps?

Yes, if you have policies in place. Regulations like GDPR and CCPA require you to document how you handle data. If AI generates code that collects user data, you must prove you reviewed it, secured it, and can delete it on request. Documentation is your legal shield.

How long should code reviews take for AI-generated code?

Plan for 15-20 minutes per 100 lines of AI-generated code. That’s enough to check for secrets, validate inputs, confirm security headers, and ensure the logic makes sense. Anything less is risky. Anything more kills the productivity benefit.
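The estimate is simple arithmetic. This small Python helper applies the 15-20 minute rate to any line count; the function name and default parameters are illustrative.

```python
from typing import Tuple


def review_minutes(
    ai_lines: int, low_rate: float = 15.0, high_rate: float = 20.0
) -> Tuple[float, float]:
    """Return the (minimum, maximum) review time in minutes.

    Rates are minutes per 100 lines of AI-generated code, as quoted above.
    """
    return (ai_lines * low_rate / 100, ai_lines * high_rate / 100)


# A component at the 150-line limit needs roughly 22.5 to 30 minutes of review.
print(review_minutes(150))  # prints (22.5, 30.0)
```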

What tools should I use to enforce vibe coding policies?

Use GitHub Actions or GitLab CI with Semgrep or CodeQL to auto-scan for secrets and vulnerabilities. For environment variables, use Vault or AWS Secrets Manager. For documentation, enforce markdown templates with AI-generated comments. The Vibe Programming Framework offers free templates for all of these.

Artificial Intelligence


Fred Edwords

December 14, 2025 AT 01:00

Let me just say - this is the most balanced, well-structured take on AI-assisted coding I’ve read all year. The five-principle framework? Spot-on. I’ve seen teams go full chaos mode with Copilot, and it’s ugly. But when you treat AI like a junior dev who needs supervision - not a magic wand - it works. The 150-line limit is genius. Also, no one talks about how much time it saves on documentation stubs. We started doing this last quarter, and our onboarding docs are actually useful now.

Adrienne Temple

December 15, 2025 AT 21:17

This is so needed!! 😊 I’m a solo dev and I was scared to use AI at all - until I read this. Now I just have a sticky note on my monitor: NO SECRETS, NO WILDCARDS, NO SKIPPING REVIEW. 🙏 It’s not about stopping AI - it’s about making it work for YOU. I even made a one-pager for my personal repo. Took 10 minutes. Changed everything.

Sandy Dog

December 16, 2025 AT 12:09

Okay BUT - have you seen what happens when a junior dev uses AI to "just fix the login" and then pushes it to prod?? 😱 I swear to god, I once saw a guy type "make a secure auth flow" and the AI generated a full OAuth2 implementation… with the secret key in the frontend JS. Like… in plain text. In a public repo. We lost $120K. And the worst part? He didn’t even realize it was a problem. He thought AI was "smart". I cried. I actually cried. And now I have a framed print on my wall that says "AI IS A COPILOT, NOT A PILOT". I’m not joking. I bought it on Etsy. It’s glittery. I cry every time I look at it. 😭

Tom Mikota

December 16, 2025 AT 15:29

Wow. Someone finally wrote this without sounding like a corporate compliance bot. The 150-line rule? Perfect. The fact that you called out wildcard CORS? Even better. I’ve seen so many teams say "we’ll review it later" - and then it never happens. The real problem? Nobody wants to be the guy who says "no" to AI. But if you don’t, you’re just outsourcing your job to a statistically optimized parrot. Also - why is everyone still using localStorage for tokens? 😑

Jessica McGirt

December 17, 2025 AT 21:04

Thank you for writing this. I’ve been pushing for something like this in my team for months. The fact that you included both enterprise and indie approaches? Huge. We’re rolling this out as a 90-minute workshop next week. I’ll be sharing the Vibe Framework templates - free ones, no upsell. We’re not trying to be scary. We’re trying to be smart. And yes, we’re tracking PR flags. We’ve already cut review time by 30% because people know what to look for now. This isn’t about control. It’s about respect - for the code, for the users, and for each other.

Jawaharlal Thota

December 19, 2025 AT 01:08

Bro, I read this in India and I’m like, this is exactly what we need here. We have a startup with 12 devs, all under 25, and they think AI writes the whole app. I showed them the Stripe key leak example - $87K gone in one commit. They were silent for 10 minutes. Then one guy said, "Wait, so we can use AI for boilerplate?" Yes. Exactly. Now we have a rule: any AI-generated code over 100 lines must be reviewed by someone with 2+ years experience. And we use Semgrep in CI. It’s not perfect, but it’s better than last month. And we’re not scared anymore. We’re just careful. And that’s enough.

Lauren Saunders

December 19, 2025 AT 04:31

How quaint. You treat AI like a child needing rules. But let’s be honest - this is just fear dressed up as process. The real innovation isn’t in restricting AI - it’s in letting it run wild and then learning from the chaos. Why should a 150-line limit exist? Who decided that? Also, "knowledge preservation" sounds like corporate jargon for "make the junior devs take notes." And why are you assuming all teams have CI/CD? Most indie devs use VS Code and a GitHub repo. Your framework is beautiful, but it’s a luxury. The future belongs to the unregulated, the messy, the unpoliced. You’re not protecting code - you’re protecting your ego.

sonny dirgantara

December 20, 2025 AT 16:50

lol i just use copilot for everything and hope for the best 😅