Large language models like GPT-4, Llama 3, and Gemini 2.5 are getting bigger, smarter, and faster. You’d think that with trillions of parameters and massive datasets, the little details - like how text is broken down - wouldn’t matter anymore. But here’s the truth: tokenization isn’t just still relevant. It’s more critical than ever.

What Tokenization Actually Does

Tokenization is the first step in turning human language into something a machine can understand. It’s not fancy. It’s not glamorous. It’s just the process of chopping text into pieces - called tokens - that the model can process as numbers. Without it, LLMs wouldn’t work. Not even close.

Early models used word-based tokenization. That meant each word got its own token. But English has over half a million words. Building a vocabulary that big? It killed efficiency. Models needed too much memory, trained too slowly, and couldn’t handle words they’d never seen before.

Then came character-based tokenization. Split every word into letters. Now the model could handle any word - even made-up ones. But it had a new problem: longer sequences. An average English word is about 4.7 characters long. So if you tokenize by character, you’re feeding the model four or five tokens for a word that used to be one, and 12 for a longer word like "tokenization." That means longer processing times, higher costs, and slower responses.
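The sequence-length gap is easy to see on a small example. The sketch below is only an illustration: `word_tokens` and `char_tokens` are hypothetical helper names, and real tokenizers also handle punctuation, casing, and whitespace in ways this ignores.

```python
def word_tokens(text):
    """Word-level tokenization: one token per whitespace-separated word."""
    return text.split()


def char_tokens(text):
    """Character-level tokenization: one token per non-space character."""
    return [ch for ch in text if not ch.isspace()]


sentence = "tokenization still matters"
print(len(word_tokens(sentence)), "word-level tokens")
print(len(char_tokens(sentence)), "character-level tokens")
```

The same text produces several times more tokens at the character level, which is the cost the paragraph above describes.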

That’s where subword tokenization stepped in. Around 2016, researchers at the University of Edinburgh adapted Byte Pair Encoding (BPE), originally a data compression algorithm, for machine translation. It found patterns in how words are built - like how "tokenization" breaks into "to-", "ken-", and "-ization". This gave us the sweet spot: enough flexibility to handle rare words, without bloating the sequence length.
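To make the idea concrete, here is a minimal sketch of how BPE learns its merges: count adjacent symbol pairs, merge the most frequent one, repeat. The function names (`learn_bpe`, `merge_pair`), the toy word counts, and the tie-breaking rule are assumptions made for illustration; production tokenizers add byte-level handling, pre-tokenization, and special tokens.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: frequency} dictionary."""
    # Every word starts out as a sequence of single characters.
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pairs = Counter()
        for symbols, count in vocab.items():
            for left, right in zip(symbols, symbols[1:]):
                pairs[(left, right)] += count
        if not pairs:
            break
        # Assumption: ties are broken by the pair itself so results are deterministic.
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        vocab = {merge_pair(symbols, best): count for symbols, count in vocab.items()}
    return merges, vocab


merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=2)
print(merges)
print(list(vocab))
```

On this toy corpus the first merges build up the shared suffix "est", which is how frequent word endings become single tokens.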

Why Bigger Models Don’t Make Tokenization Obsolete

Some people argue that with models now having 100 billion+ parameters, they can learn to handle text at the character level anyway. Why bother with smart tokenization? It’s just preprocessing, right?

Wrong.

MIT’s September 2024 study found that even the largest models still struggle with meaning distortion when words are split poorly. For example, if "COVID-19" gets broken into "C", "O", "V", "I", "D", "-", "1", "9", the model has to reconstruct the concept from scratch. That’s 8 tokens for one concept. A good tokenizer treats it as one. That’s not just efficiency - it’s accuracy.

And it’s not just about understanding. It’s about cost. Token processing makes up 60-75% of total inference costs, according to Kelvin Legal’s September 2023 analysis. If your tokenizer splits "unbelievable" into five tokens instead of two, you’re paying for three extra tokens every single time that word appears. At scale - think millions of requests a day - that adds up fast.
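The arithmetic behind that claim is simple enough to sketch. Every number in the example call is a made-up placeholder (occurrences per request, request volume, and price per 1,000 tokens are assumptions, not figures from any provider), and `extra_token_cost` is a hypothetical helper name.

```python
def extra_token_cost(extra_tokens_per_occurrence, occurrences_per_request,
                     requests_per_day, price_per_1k_tokens):
    """Daily cost of the tokens a tokenizer spends unnecessarily on one term."""
    extra_tokens = (extra_tokens_per_occurrence
                    * occurrences_per_request
                    * requests_per_day)
    return extra_tokens / 1000 * price_per_1k_tokens


# Assumed scenario: 3 extra tokens, the word appears twice per request,
# one million requests a day, $0.002 per 1,000 tokens.
daily = extra_token_cost(3, 2, 1_000_000, 0.002)
print(f"${daily:.2f} per day for one poorly split word")
```

One word is small money. The point is that the same multiplication applies to every term the tokenizer fragments.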

One developer on Reddit, @ml_engineer_99, cut their legal document processing costs by 37% just by switching tokenizers and tuning the vocabulary. That’s not luck. That’s optimization.

Vocabulary Size Isn’t Arbitrary - It’s a Trade-Off

Every major model picks a vocabulary size carefully:

- BERT: 30,522 tokens

- GPT-3: 50,257 tokens

- Llama 3: 128,256 tokens

Why the jump? Because bigger isn’t always better - but sometimes it’s necessary.

Going from Llama 2’s 32K vocabulary to 128K tokens in Llama 3 reduced average sequence length by 22-35%. That means fewer tokens per document, faster processing, lower memory use during inference. But there’s a catch: larger vocabularies need more memory to store the embedding tables. Ali et al.’s 2024 benchmark showed an 18-27% increase in memory requirements.

So you’re trading memory for speed. And that’s a decision you make based on your use case.
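The memory side of that trade can be estimated with one multiplication: vocabulary size times embedding width times bytes per value. The sketch below assumes a hidden size of 4096 and 16-bit weights, and it counts only the input embedding table, so it is a rough illustration rather than a reproduction of the benchmark figures above. `embedding_table_bytes` is a hypothetical helper name.

```python
def embedding_table_bytes(vocab_size, hidden_size, bytes_per_value):
    """Size of one embedding table: one vector of `hidden_size` values per token."""
    return vocab_size * hidden_size * bytes_per_value


HIDDEN_SIZE = 4096      # assumption for illustration
BYTES_PER_VALUE = 2     # assumption: 16-bit weights

for vocab_size in (32_000, 128_256):
    size = embedding_table_bytes(vocab_size, HIDDEN_SIZE, BYTES_PER_VALUE)
    print(f"{vocab_size:>7} tokens -> {size / 2**20:.0f} MiB")
```

Under these assumptions the table grows from about 250 MiB to about 1,002 MiB. If the output layer does not share weights with the input embeddings, that layer adds a second table of the same size.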

If you’re running a chatbot on a phone? Smaller vocabulary. Less memory. Slower, but cheaper.

If you’re processing legal contracts or medical records? Bigger vocabulary. Fewer splits. Better accuracy. Higher cost - but worth it.

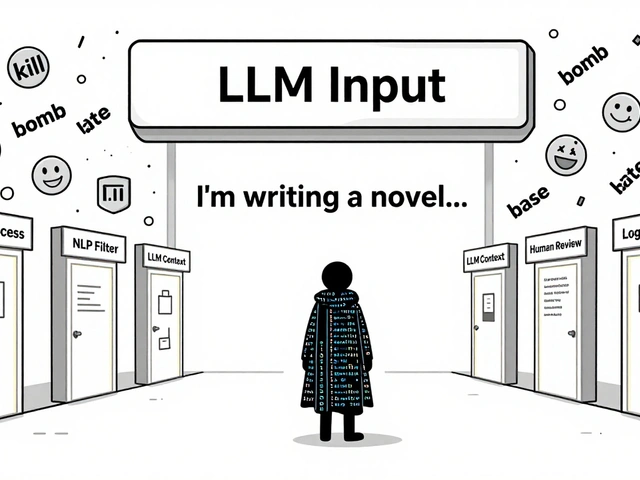

Domain-Specific Tokenization Is a Game-Changer

General-purpose tokenizers are fine for casual text. But if you’re working in healthcare, finance, or law? You’re dealing with terms like "myocardial infarction," "SEC Form 10-K," or "res judicata."

Standard tokenizers will break those into fragments. "myocardial" → "myo-", "card-", "ial". Now the model has to piece together what a heart attack is from three random pieces. That’s inefficient. And inaccurate.
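One common way to stop this is to protect domain terms before the general tokenizer ever sees them. The sketch below shows the idea only: `base_tokenize` is a deliberately crude, hypothetical stand-in for a general-purpose subword tokenizer (it just chops words into four-character chunks), and `tokenize_with_domain_terms` is an illustrative name, not a library function.

```python
import re


def base_tokenize(word, chunk=4):
    """Hypothetical stand-in for a general tokenizer: fixed-size chunks mimic fragmentation."""
    return [word[i:i + chunk] for i in range(0, len(word), chunk)]


def tokenize_with_domain_terms(text, domain_terms):
    """Keep each domain term as one token; send everything else to the base tokenizer."""
    if not domain_terms:
        parts = [text]
    else:
        # Longest terms first, so a longer term wins over a shorter one it contains.
        ordered = sorted(domain_terms, key=len, reverse=True)
        pattern = re.compile("(" + "|".join(re.escape(t) for t in ordered) + ")")
        # Simplification: terms match anywhere, even inside longer words.
        parts = pattern.split(text)
    tokens = []
    for part in parts:
        if part in domain_terms:
            tokens.append(part)
        else:
            for word in part.split():
                tokens.extend(base_tokenize(word))
    return tokens


text = "acute myocardial infarction"
print(tokenize_with_domain_terms(text, set()))
print(tokenize_with_domain_terms(text, {"myocardial", "infarction"}))
```

With the protected terms the sentence shrinks from eight tokens to four, and each clinical term arrives at the model intact. A model still has to learn embeddings for any newly added tokens, which this sketch does not cover.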

Dagan et al.’s 2024 research showed a 14.6% improvement in medical text understanding when using a tokenizer trained specifically on clinical notes. The same applies to finance: a tokenizer that knows "EPS", "EBITDA", and "P/E ratio" as single tokens cuts error rates by up to 22%.

One Hugging Face user, @nlp_newbie, reported a 22% error rate in financial entity recognition until they switched to a custom tokenizer. Fixed in two days. No model retraining. Just better tokenization.

Tokenization Affects What the Model Learns

This is the part most people miss. Tokenization doesn’t just prepare data - it shapes what the model learns.

Sean Trott’s January 2024 analysis showed that when tokenization introduces controlled variability - like splitting "run" as either "run" or "r-", "un" - the model learns better generalizations. It doesn’t just memorize "run" as one thing. It learns that "run," "running," and "runner" are related, even if they’re split differently.
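What "controlled variability" could look like in code: list every valid way to split a word with the current vocabulary, then pick one at random each time the word is seen in training. This is a simplified sketch, not the method from the analysis above. The uniform sampling, the toy vocabulary, and the function names are all assumptions; practical schemes usually weight the candidate splits rather than treating them as equally likely.

```python
import random


def segmentations(word, vocab):
    """Return every way to split `word` into pieces that all appear in `vocab`."""
    if not word:
        return [[]]
    results = []
    for end in range(1, len(word) + 1):
        piece = word[:end]
        if piece in vocab:
            for rest in segmentations(word[end:], vocab):
                results.append([piece] + rest)
    return results


def sample_segmentation(word, vocab, rng):
    """Pick one valid split at random (uniformly, as a simplifying assumption)."""
    options = segmentations(word, vocab)
    if not options:
        raise ValueError(f"no segmentation of {word!r} with this vocabulary")
    return rng.choice(options)


vocab = {"run", "r", "un", "u", "n"}
rng = random.Random(0)  # seeded so repeated runs draw the same sequence
print(segmentations("run", vocab))
print(sample_segmentation("run", vocab, rng))
```

Every sampled split spells the same word, so the model sees one meaning through several token sequences.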

Ali et al. found that tokenization choices affected model generalization by 9.3-15.7 percentage points across eight NLP tasks. That’s not noise. That’s a measurable impact on how well the model transfers knowledge.

Think of it like teaching someone to read. If you only ever show them full words, they’ll struggle with new ones. If you teach them letter patterns, they can decode anything. Tokenization is the alphabet your model learns to read with.

Real-World Costs and Practical Tips

Here’s what this looks like in practice:

- Cost difference: Tonic AI found optimized tokenization reduced costs from $0.0038 to $0.0023 per 1,000 tokens - a 39.5% savings.

- Learning curve: NVIDIA’s training program says mastering advanced tokenization takes 2-3 weeks.

- Customization effort: Tuning for domain terms takes 15-25 hours per project.

- ROI: Experts report 12-18% performance gains with only 5-7% extra development work.

Start with a pre-trained tokenizer from Hugging Face. Then fine-tune it on your own data. You need 500-1,000 labeled examples. Training takes 2-4 hours on a standard GPU. The Tokenization Cookbook on GitHub has 47 documented fixes for common issues - like why "C++" gets split as "C", "+", "+" instead of staying whole.
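Before retraining anything, it helps to measure where the current tokenizer fragments the terms you care about. The sketch below assumes you can wrap your tokenizer in a function that takes a string and returns a list of tokens. `toy_tokenize` is a hypothetical stand-in that splits on punctuation, and `fragmentation_report` is an illustrative helper, not part of any library.

```python
import re


def toy_tokenize(text):
    """Hypothetical tokenizer: alphanumeric runs stay whole, each punctuation mark is a token."""
    return re.findall(r"[A-Za-z0-9]+|[^A-Za-z0-9\s]", text)


def fragmentation_report(terms, tokenize, max_tokens=1):
    """List the terms that split into more than `max_tokens` pieces, worst first."""
    report = []
    for term in terms:
        pieces = tokenize(term)
        if len(pieces) > max_tokens:
            report.append((term, pieces))
    report.sort(key=lambda item: len(item[1]), reverse=True)
    return report


domain_terms = ["C++", "EBITDA", "P/E", "10-K"]
for term, pieces in fragmentation_report(domain_terms, toy_tokenize):
    print(f"{term!r} -> {pieces}")
```

Swap `toy_tokenize` for your real tokenizer and feed in a list of terms pulled from your own documents. The terms at the top of the report are the first candidates for vocabulary tuning.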

What’s Next? Adaptive and Hybrid Tokenization

The field isn’t standing still. NVIDIA’s Adaptive Tokenization Framework (ATF), released in November 2024, changes how it splits text based on content type. For code? More fine-grained. For poetry? Fewer splits. For medical reports? Specialized subwords.

Google’s Gemini 2.5 uses context-aware tokenization to reduce rare word errors by 19.3%. And researchers are experimenting with hybrid models - combining BPE with character-level fallbacks. One November 2024 paper showed a 27.8% improvement in handling out-of-vocabulary words, with no speed penalty.
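The fallback idea can be sketched in a few lines: use the longest known subword where one matches, and drop to single characters where nothing does, so no input is ever unrepresentable. This is a simplified illustration that uses greedy longest-match in place of real BPE merge rules. The vocabulary and the name `tokenize_with_char_fallback` are assumptions, and it is not the method from the paper mentioned above.

```python
def tokenize_with_char_fallback(text, vocab):
    """Greedy longest-match over `vocab`, falling back to one character when nothing matches."""
    max_len = max((len(token) for token in vocab), default=1)
    tokens = []
    i = 0
    while i < len(text):
        match = None
        # Try the longest candidate first, then shrink.
        for end in range(min(len(text), i + max_len), i, -1):
            if text[i:end] in vocab:
                match = text[i:end]
                break
        if match is None:
            match = text[i]  # fallback: emit the single character
        tokens.append(match)
        i += len(match)
    return tokens


vocab = {"token", "ization", "un"}
print(tokenize_with_char_fallback("tokenization", vocab))
print(tokenize_with_char_fallback("tokenizer", vocab))
```

Known words stay compact, and an unseen ending like "izer" degrades into characters instead of an unknown-token placeholder.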

Even more exciting: "tokenization-aware training." New experiments train models to expect and adapt to different splits. The result? 8.5-12.3% performance gains.

Bottom Line: Tokenization Is the Silent Engine

You don’t notice tokenization until it breaks. Then suddenly, your model doesn’t understand "e-mail," misreads "U.S.A." as three separate words, or costs 40% more than it should.

Large language models didn’t make tokenization obsolete. They made it more important. The bigger the model, the more it depends on clean, smart input. Poor tokenization doesn’t just slow things down - it corrupts the learning process.

For developers, researchers, and businesses using LLMs: don’t treat tokenization as a black box. Don’t just use the default. Test it. Tune it. Optimize it. It’s one of the highest ROI tasks in your entire NLP pipeline.

And if you’re still wondering whether it matters? Look at the numbers. The market for tokenization tools is growing 22% a year. 68% of enterprises customize it. Even Forrester says it’ll remain critical through 2027.

Tokenization isn’t going away. It’s evolving. And if you ignore it, your model will pay the price.

Is tokenization still needed if LLMs are so large?

Yes. Even trillion-parameter models rely on tokenization to convert text into manageable units. Larger models don’t eliminate the need for efficient input representation - they amplify the consequences of poor tokenization. MIT’s 2024 study showed meaning distortion in large models when tokens are fragmented, proving that scale doesn’t replace smart preprocessing.

What’s the difference between BPE, WordPiece, and SentencePiece?

BPE (Byte Pair Encoding) iteratively merges the most frequent pair of adjacent characters or subwords. WordPiece, used in BERT, picks merges by how much they improve the likelihood of the training data rather than by raw frequency. SentencePiece, developed by Google, is a toolkit that runs BPE or a unigram language model directly on raw text, treating whitespace as an ordinary symbol, so it doesn’t require pre-tokenized text - making it better for multilingual data. All three are subword methods, but SentencePiece is more flexible for languages that don’t separate words with whitespace.
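The methods differ mostly in how the vocabulary is trained. At inference time, WordPiece as used in BERT is usually described as greedy longest-match-first, with a "##" prefix marking pieces that continue a word. The sketch below follows that description with a tiny made-up vocabulary. It is a simplified illustration, not the reference implementation.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]", prefix="##"):
    """Split one word by repeatedly taking the longest vocabulary piece that matches."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate  # mark pieces that continue a word
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No piece matches at this position: the whole word becomes unknown.
            return [unk]
        tokens.append(piece)
        start = end
    return tokens


vocab = {"un", "##believ", "##able"}
print(wordpiece_tokenize("unbelievable", vocab))
print(wordpiece_tokenize("xyz", vocab))
```

Note the failure mode: one unmatched piece turns the whole word into an unknown token.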

How does tokenization affect cost?

Tokenization directly impacts inference cost because most LLM providers charge per token. Longer sequences = more tokens = higher cost. Optimized tokenization reduces sequence length by 22-35%, cutting costs by up to 40%. Tonic AI’s case study showed a 39.5% cost reduction by switching from default to tuned tokenization.

Can I use the same tokenizer for all my applications?

No. A tokenizer trained on general web text will struggle with technical, medical, or legal jargon. Finance models need to recognize "EPS" and "EBITDA" as single tokens. Medical systems need "hypertension" intact. Customizing tokenization for your domain improves accuracy by up to 14.6% and reduces errors by 22%.

How do I start optimizing tokenization?

Start with a pre-trained tokenizer from Hugging Face. Test it on your actual data. Look for frequent splits that break meaningful terms. Retrain it on your domain-specific text using 500-1,000 examples. Use tools like the Tokenization Cookbook on GitHub for common fixes. Even small tweaks can give you 12-18% performance gains with minimal effort.

Will tokenization become obsolete in the future?

Unlikely. While some researchers speculate that ultra-large models might learn character-level patterns without tokenization, current evidence shows consistent improvements from optimized tokenization - even in models with over 100 billion parameters. Forrester and industry benchmarks predict tokenization will remain a critical optimization lever through at least 2027. The trend is toward smarter, adaptive tokenization, not its removal.

Artificial Intelligence

Addison Smart

December 13, 2025 AT 21:57

Man, I used to think tokenization was just some boring preprocessing step until I started working on legal docs with GPT-4. I was getting wild hallucinations where "Section 12(b)(3)" got split into "Section", "12", "(", "b", ")", "(", "3", ")" - like, what even is that? Switched to a custom SentencePiece tokenizer trained on case law and suddenly my model stopped treating legal citations like random punctuation soup. It’s not sexy, but it’s the difference between your AI sounding like a confused intern and actually understanding what the contract says. And yeah, cost dropped 30% too. Who knew the secret sauce was in how you chop up words?

David Smith

December 15, 2025 AT 16:34

Wow. Another post pretending tokenization is some deep mystery. Newsflash: it’s just string splitting. Your model should be smart enough to handle "C++" without you babysitting it. You’re overengineering like it’s 2018. Just feed it raw text and let the trillion parameters do their job. Stop treating LLMs like fragile toddlers who need their words chopped just right.

Lissa Veldhuis

December 17, 2025 AT 09:46

David you absolute clown 🤡 I’ve seen your code. You think LLMs are magic? Nah they’re just fancy statistical parrots and if you feed them garbage tokens they’ll spit out garbage meaning. I had a client whose AI kept calling "FDA-approved" a "fda approved" and then hallucinating it was a person. I fixed it in 20 minutes by adding "FDA-approved" to the vocab. No retraining. Just one line. You’re not saving time by ignoring tokenization - you’re just delaying the inevitable meltdown when your chatbot starts arguing with patients about their prescriptions. Grow up.

Michael Jones

December 17, 2025 AT 14:50

Think about it like this - language isn’t just words it’s patterns and rhythm and meaning layered like sediment. Tokenization is the shovel that digs up the fossils. If you use a blunt shovel you’ll crush the bones. If you use a fine one you’ll see the texture of the ancient world. BPE isn’t just a tech trick it’s a philosophy. It’s saying hey maybe the meaning isn’t in the whole word but in the way the parts dance together. And yeah maybe it’s boring but so is breathing until you stop doing it. We’re not optimizing tokens we’re optimizing understanding. And that’s sacred.

allison berroteran

December 18, 2025 AT 18:31

I love how this post breaks down the real-world impact without getting lost in math. I’ve been using Llama 3 for clinical note summarization and honestly the tokenizer made the biggest difference - not the model size. We were getting "hypertension" split into "hyper" "tens" "ion" and the model kept thinking it was three separate conditions. Switched to a medical BPE vocab and suddenly the summaries made sense. No model changes. Just better input. It’s wild how much you can gain by paying attention to the first step. Also - huge props to @nlp_newbie for that 22% fix. That’s the kind of win that doesn’t need a paper to prove it.

Gabby Love

December 19, 2025 AT 12:34

Small note: in the post, you wrote "P/E ratio" with a forward slash - but most tokenizers will split that into "P", "/", "E", "ratio". If you’re training on financial data, consider replacing "/" with "-" or adding "P/E" as a single token. It’s a tiny change, but it matters. Also, the Tokenization Cookbook link is gold - saved me 10 hours last week.

Jen Kay

December 20, 2025 AT 02:40

Wow. Just… wow. I didn’t think anyone still cared about tokenization. And yet here we are - a whole post dedicated to something that sounds like a footnote in a 2015 NLP textbook. I mean, really? You’re telling me that after all this progress, the biggest bottleneck isn’t the model architecture or the compute - it’s how we split words? I’m not saying you’re wrong. I’m just saying… I’m disappointed. This feels like polishing the wheels on a horse carriage while Tesla’s flying.