Most people think prompt engineering is about writing better questions. But what if the real breakthrough isn’t in how you phrase your prompt - but in how the prompt learns to improve itself?

Reinforcement Learning from Prompts (RLfP) flips the script. Instead of humans tweaking prompts by trial and error, RLfP lets an algorithm do it automatically - and it’s already beating human-designed prompts on real-world tasks. This isn’t theory. It’s happening right now, in labs and enterprise systems, with measurable gains in accuracy, speed, and reliability.

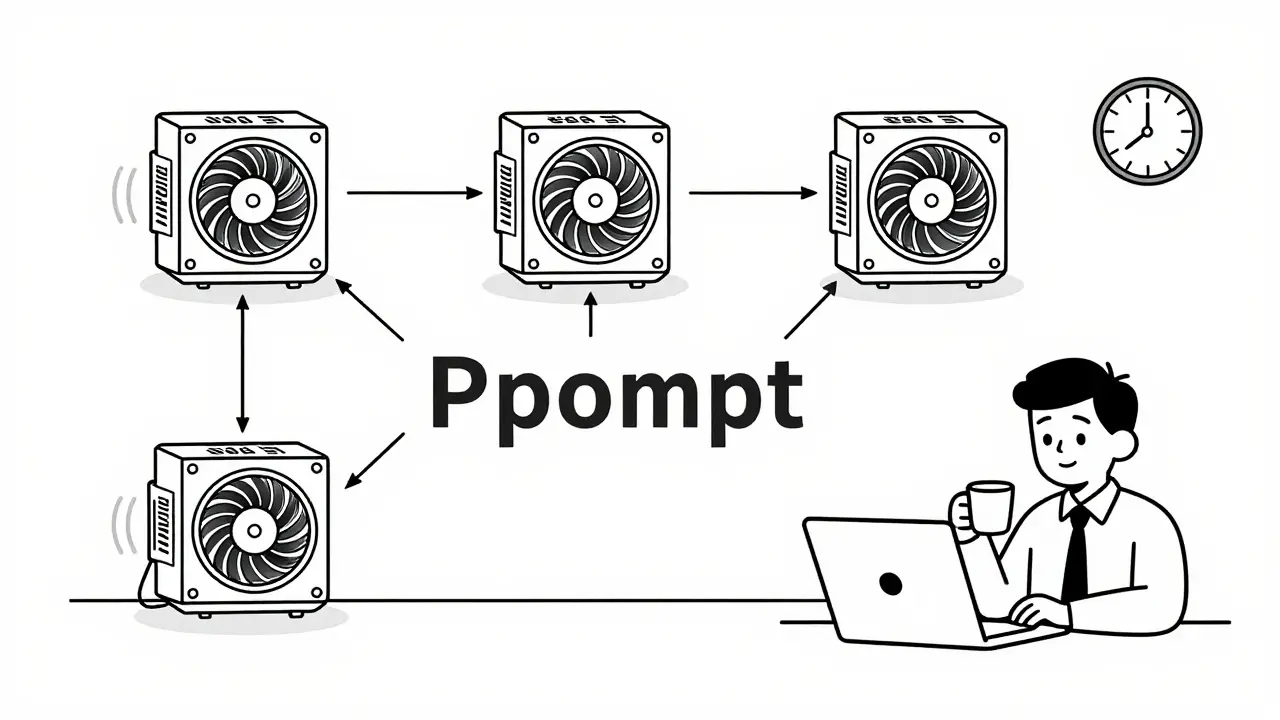

How RLfP Works: The Feedback Loop That Changes Prompts

Traditional prompt engineering is like giving a chef a recipe and hoping it turns out well. RLfP is like giving that chef a tasting panel that tells them, "This version tastes better," then letting them tweak the recipe again - over and over - until it’s perfect.

The process has three core parts:

- The policy function: This is the engine that suggests changes to the prompt. It doesn’t just swap words randomly. It learns which token additions, deletions, or rephrasings are likely to improve output quality.

- The reward mechanism: After a prompt generates an answer, the answer is scored. Google’s PRewrite uses five reward types: Exact Match (full credit only when the output matches the reference exactly), F1 score (the harmonic mean of precision and recall), Perplexity (how well the model predicts the target text; lower is better), a combination of Perplexity and F1, and Length Difference (how far the output length is from the expected length).

- The iterative loop: The system runs hundreds of prompt variants, measures their rewards, and uses Proximal Policy Optimization (PPO) to update the policy. Think of it as a self-driving car learning from every turn - except here, it’s learning how to rewrite prompts.
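To make the reward types above concrete, here is a minimal Python sketch of three of them. It is not PRewrite’s code: the function names, the lowercase/whitespace normalization, and the use of token counts for length are all assumptions made for illustration. Perplexity is left out because it needs access to a model’s token probabilities.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized strings are identical, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def length_difference(prediction: str, reference: str) -> int:
    """Negative absolute gap in token counts, so 0 is the best possible score."""
    return -abs(len(prediction.split()) - len(reference.split()))


print(exact_match("Paris ", "paris"))
print(token_f1("the cat sat", "the cat"))
print(length_difference("a b c", "a b"))
```

Each function returns a number where higher is better, which is the shape a reward signal needs before it can drive a policy update.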

It’s not magic. It’s math. And it works.
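The feedback loop described above can be sketched in a few lines of Python. This is a deliberately simplified stand-in, not PPO and not any framework’s real implementation: it uses a plain policy-gradient (REINFORCE-style) update over a handful of hypothetical edits, and the reward comes from a made-up table instead of a real LLM call. The names `EDITS`, `TOY_MEAN_REWARD`, and `optimize` are invented for this example.

```python
import math
import random

# Hypothetical edit actions: phrases the policy may append to a base prompt.
EDITS = ["", " Think step by step.", " Answer in one word.", " Be concise."]

# Made-up average reward per edit, standing in for "run the LLM and score it".
TOY_MEAN_REWARD = [0.50, 0.80, 0.60, 0.55]


def softmax(logits):
    """Turn preference scores into a probability distribution over edits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(action, rng):
    """Noisy reward centred on the edit's assumed average quality."""
    return TOY_MEAN_REWARD[action] + rng.gauss(0.0, 0.05)


def optimize(steps=3000, lr=0.2, seed=0):
    """Return the learned probability of choosing each edit."""
    rng = random.Random(seed)
    logits = [0.0] * len(EDITS)
    baseline = None  # running average reward, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(EDITS)), weights=probs)[0]
        reward = toy_reward(action, rng)
        if baseline is None:
            baseline = reward
        advantage = reward - baseline
        baseline += 0.1 * (reward - baseline)
        # Policy-gradient step: d log p(action) / d logit_i = 1[i == action] - p_i
        for i in range(len(logits)):
            indicator = 1.0 if i == action else 0.0
            logits[i] += lr * advantage * (indicator - probs[i])
    return softmax(logits)


probs = optimize()
best = max(range(len(EDITS)), key=lambda i: probs[i])
print("Base prompt." + EDITS[best], [round(p, 2) for p in probs])
```

The point of the sketch is the shape of the loop: sample an edit, score the result, and shift probability toward edits that score above the running average. PPO adds a clipped objective on top of this so that a single update cannot move the policy too far.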

Real Results: 10% Gains on Benchmarks

Let’s look at numbers that matter.

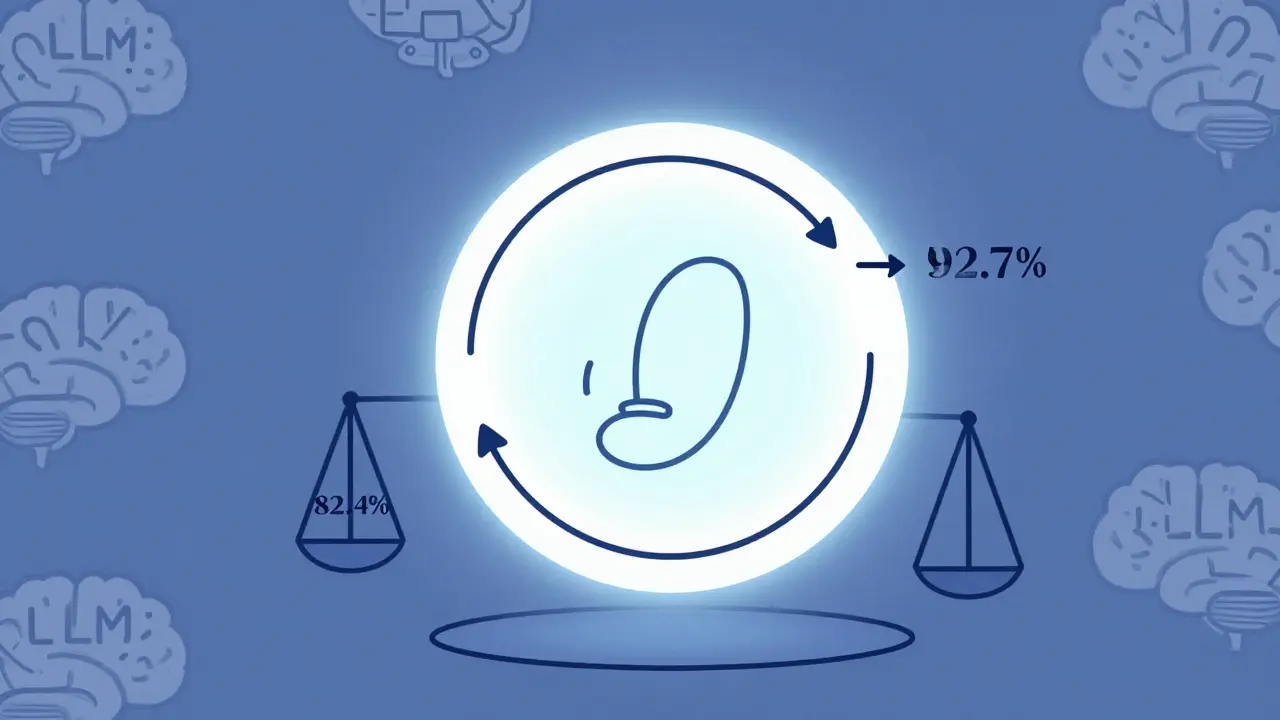

On the SST-2 text classification task - a standard benchmark for sentiment analysis - the original human-written prompt hit 82.4% accuracy. After RLfP optimization using PRewrite with a Perplexity+F1 reward, accuracy jumped to 92.7%. That’s a 10.3-point gain. In real-world terms, if every error were a missed complaint, that’s the difference between a customer service bot missing roughly 1 in 6 complaints and missing only about 1 in 14.
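For readers who want to check the "1 in N" framing, the conversion from accuracy to error frequency is simple arithmetic. The helper name `one_in_n` is made up for this example, and it assumes every misclassification counts as a missed complaint, which is a simplification.

```python
def one_in_n(accuracy_percent: float) -> float:
    """Convert an accuracy percentage into 'one error every N examples'."""
    error_rate = 1.0 - accuracy_percent / 100.0
    return 1.0 / error_rate


before = one_in_n(82.4)  # human-written prompt
after = one_in_n(92.7)   # optimized prompt
gain_points = 92.7 - 82.4

print(f"before: 1 in {before:.1f}, after: 1 in {after:.1f}, gain: {gain_points:.1f} points")
```

With the figures quoted above, this works out to about one error in 5.7 examples before optimization and about one in 13.7 after.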

Compare that to other methods:

- Manual prompt engineering: 84.2%

- AutoPrompt: 85.1%

- PromptSource: 83.9%

RLfP didn’t just beat them - it left them behind. And the gains aren’t just on sentiment tasks. On the GSM8K math reasoning benchmark, PRL (another RLfP framework) reached 68.4% accuracy, while the next-best method scored 59.2%. That’s a 9.2-point swing in a domain where even 1% is hard-won.

Dr. Sebastian Raschka put it simply: "Humans can’t see the subtle semantic cues that make or break an LLM’s response. RLfP can." One PRewrite tweak - changing just three words - boosted accuracy by 9.8% on a medical QA task. It is not a change a human would be likely to guess.

Why RLfP Beats Static Methods

AutoPrompt and Prefix Tuning are great tools. But they’re static. Once you run them, the prompt is done. RLfP keeps evolving.

PRewrite’s key innovation? It doesn’t use a fixed evaluator. Most systems use one LLM to judge prompts. PRewrite uses a learned evaluator - one that improves as it sees more data. That means the feedback loop gets smarter over time. It’s not just optimizing the prompt. It’s optimizing the way it judges the prompt.

This leads to more nuanced improvements. For example, RLfP might add a phrase like "Think step by step" - not because it’s a known trick, but because the algorithm detected that prompts with this phrase had 14% higher F1 scores on complex reasoning tasks. That insight wouldn’t show up in a blog post. It only emerges from data.

The Hidden Costs: Resources, Time, and Complexity

RLfP isn’t free. It’s expensive.

Google’s PRewrite required 4× NVIDIA A100 GPUs running for 72 hours on the GLUE dataset. That’s roughly $1,800 in cloud costs for a single optimization run. For comparison, AutoPrompt takes 2 hours. PRL’s setup time is nearly 37× longer than simpler methods.
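The cost figures above are easier to compare once they are broken down. The short calculation below uses only the numbers quoted in this section; the per-GPU-hour rate is derived from them rather than taken from any price list, and the comparison with AutoPrompt is wall-clock time only, since the GPU count for that run isn’t stated.

```python
GPUS = 4
HOURS = 72
TOTAL_COST_USD = 1800
AUTOPROMPT_HOURS = 2

gpu_hours = GPUS * HOURS                      # total GPU time consumed
implied_rate = TOTAL_COST_USD / gpu_hours     # dollars per GPU-hour implied by the total
wall_clock_ratio = HOURS / AUTOPROMPT_HOURS   # how much longer the run takes end to end

print(f"{gpu_hours} GPU-hours at ${implied_rate:.2f}/GPU-hour, "
      f"{wall_clock_ratio:.0f}x the wall-clock time of AutoPrompt")
```

That comes to 288 GPU-hours at an implied $6.25 per GPU-hour, and a run that takes 36 times as long as the 2-hour AutoPrompt figure.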

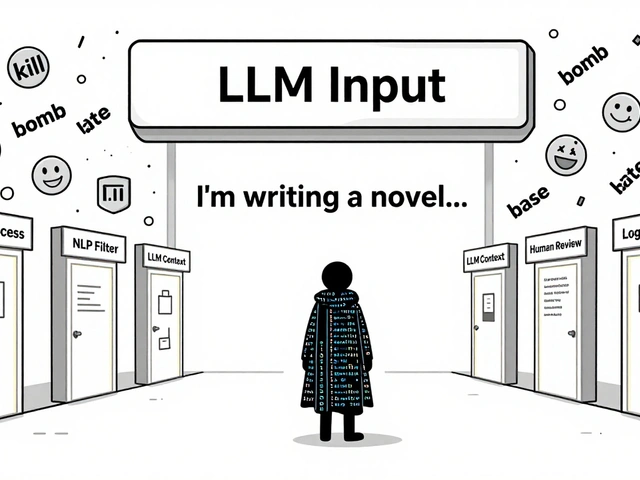

And it’s not just about money. The skill gap is wide. To implement RLfP, you need:

- Deep understanding of reinforcement learning (PPO, policy gradients)

- Experience with LLM architectures and tokenization

- Access to high-end GPU infrastructure

- Patience - debugging reward function instability takes days

Users on GitHub and Reddit report 27-hour debugging sessions just to get CUDA working with PyTorch 2.5. Documentation is sparse. PRewrite’s docs scored 3.1/5. PRL’s? 2.8/5. Community support? Only 14 active contributors across both frameworks as of January 2026.

For most teams, the ROI isn’t there - unless you’re in healthcare, finance, or legal tech, where a 5% accuracy gain means millions in risk reduction.

Where RLfP Falls Short

It’s not a silver bullet.

RLfP struggles where human prompts are already near-optimal. On the AG News dataset - a simple news classification task - RLfP improved accuracy by just 0.7%. That’s barely worth the compute cost.

Worse, there’s "prompt architecture lock-in." PRewrite-optimized prompts for Llama-3 dropped 12.3% in accuracy when tested on Mistral-7B. The algorithm overfit to one model’s internal behavior. If you switch models, you might need to re-optimize from scratch.

Stanford’s HAI Institute found RLfP results vary by ±4.7% across different LLM backends. That’s unacceptable for production systems that need consistency.

And then there’s the regulatory wall. The EU AI Office now requires human review for RL-optimized prompts in high-risk applications. That means even if you automate the optimization, you still need a human to sign off. That adds cost, delay, and complexity.

Who’s Using It - and Why

Enterprise adoption is climbing fast. Among Fortune 500 companies with AI initiatives, 23% now use RLfP - up from 4% in late 2025.

Why? Two sectors lead:

- Financial services (37% adoption): A bank’s fraud detection model improved from 89.1% to 95.4% accuracy after RLfP optimization. That’s thousands of false positives eliminated per month.

- Healthcare (29% adoption): Maria Rodriguez, a data scientist at a Boston hospital, used PRewrite to boost her medical QA system from 76.4% to 85.1% accuracy. "It went from unusable to clinically viable," she said.

These aren’t labs. These are production systems where accuracy isn’t optional - it’s life-or-death.

Meanwhile, individual developers? Only 21% of RLfP users are solo. The rest are teams with budgets, cloud credits, and ML engineers on staff.

What’s Next: Lighter, Smarter, Self-Validating

RLfP is still early. But it’s evolving fast.

Google’s PRewrite v1.3, released in January 2026, introduced multi-objective reward balancing. It now optimizes for accuracy, speed, and safety at the same time - cutting inference time by 22% without losing performance.
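One common way to balance several objectives is to fold them into a single weighted score. The sketch below shows that idea only; it is not how PRewrite v1.3 is implemented, and the `Metrics` class, the weights, and the 2-second latency budget are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    accuracy: float   # fraction in [0, 1], higher is better
    latency_s: float  # seconds per request, lower is better
    safety: float     # fraction of outputs passing a safety check


def combined_reward(m: Metrics, w_acc: float = 0.6, w_speed: float = 0.2,
                    w_safety: float = 0.2, latency_budget_s: float = 2.0) -> float:
    """Weighted sum of accuracy, a speed score, and safety (weights are assumptions)."""
    # Map latency to [0, 1]: 1.0 is instant, 0.0 is at or beyond the budget.
    speed = max(0.0, 1.0 - m.latency_s / latency_budget_s)
    return w_acc * m.accuracy + w_speed * speed + w_safety * m.safety


slow = Metrics(accuracy=0.9, latency_s=1.0, safety=1.0)
fast = Metrics(accuracy=0.9, latency_s=0.78, safety=1.0)  # 22% lower latency, same accuracy

print(combined_reward(slow), combined_reward(fast))
```

With equal accuracy and safety, the faster candidate scores higher, so an optimizer using this reward would prefer prompts that cut inference time without giving up performance. The weights decide how much accuracy you are willing to trade for speed, which is a product decision more than a technical one.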

The PRL team is integrating with Hugging Face, meaning you’ll soon be able to optimize prompts for over 12,000 community models - no custom code needed.

And the most exciting development? DeepMind’s January 2026 preprint introduced a "lightweight RLfP" method that uses just 1/8th the GPU power. That could bring RLfP within reach of mid-sized companies.

Long-term, researchers are chasing "verifiable reward" systems - where the LLM itself validates its own outputs without needing human-labeled ground truth. If that works, RLfP could become autonomous.

Should You Use It?

If you’re building a chatbot for a blog? No. Stick with manual prompts.

If you’re optimizing a legal document analyzer, a clinical decision support tool, or a fraud detection engine? Then yes - if you can afford it.

RLfP isn’t for everyone. But for those who need precision, it’s becoming the gold standard. The question isn’t whether it works - it’s whether your use case justifies the cost.

Gartner predicts that by 2028 RLfP will be standard in enterprise LLM pipelines. Until then, it’s a tool for the few who can wield it - and a glimpse into where prompt engineering is headed.

Artificial Intelligence

Robert Byrne

February 3, 2026 AT 12:39

This is the most BS I've read all week. You act like RLfP is some magical elixir, but let's be real - it's just overfitting dressed up in fancy math. I've seen teams burn months on this only to get a 2% gain on a task that didn't need it in the first place. The real problem? You're trading human intuition for a black box that can't explain why it changed 'analyze this' to 'break it down step by step, but not too much, and only if the context is financial.' Who the hell wants to trust that?

King Medoo

February 4, 2026 AT 00:31

Let me just say - I'm not one to get emotional about prompt engineering, but this? This is the future. 🤖✨ I've spent years watching people tweak prompts like they're fixing a toaster with duct tape, and now we have a system that *learns*? That's not innovation - that's evolution. I mean, think about it: if a model can optimize its own instructions based on reward signals, isn't that basically the AI equivalent of learning to read its own mind? 🤯 I'm not saying it's perfect - the cost is insane, and yes, the docs are garbage - but this is the first time I've seen LLMs actually grow up. The fact that PRewrite found a 9.8% boost by changing three words? That's not luck. That's intelligence. And if you're not paying attention, you're going to be left behind while the real players are building systems that don't need you to babysit them anymore. 🏁📈