Most people think training a large language model (LLM) longer or with more data automatically makes it smarter. That’s not true. In fact, pushing training further can make models worse at handling new problems, even if they get better at the ones they’ve seen before. The real secret isn’t just how much you train or how many tokens you throw at it. It’s how you train. Specifically, how you structure the sequence lengths during training.

Why More Tokens Don’t Always Mean Better Generalization

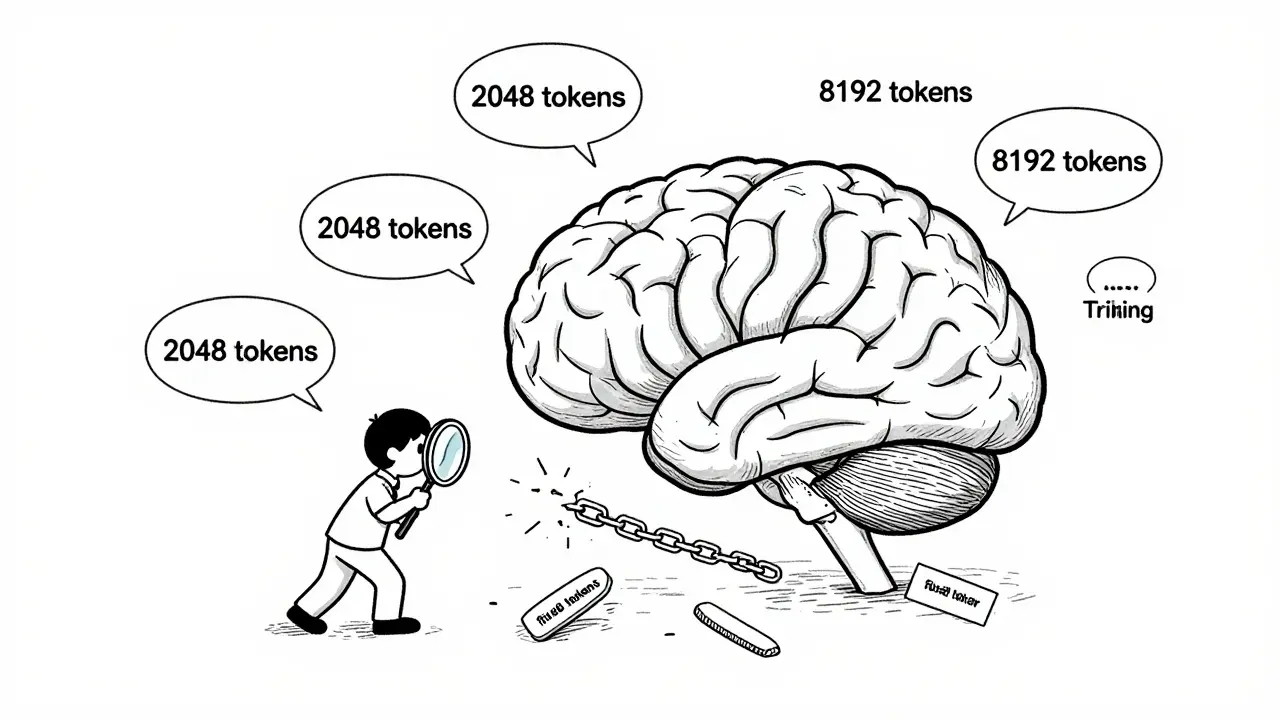

You’ve probably heard that models like GPT-4 or Llama-3 were trained on trillions of tokens. That sounds impressive. But here’s the catch: if all those tokens are chopped into fixed-length chunks (say, 2048 tokens each), the model never learns to handle anything longer. It doesn’t understand how to reason across 4096, 8192, or 16,384 tokens. It only memorizes patterns within the chunk size it saw during training.

Research from Apple’s Machine Learning team in April 2025 showed that an 8k context-length 1B-parameter model trained with variable sequence lengths performed as well as a much larger model trained with fixed lengths, but at 6x the speed. That’s not a fluke. It’s because the model was exposed to real-world variation: short sentences, medium paragraphs, and long documents, all mixed in during training. When you only show it 2048-token blocks, you’re not teaching it to generalize. You’re teaching it to guess based on memorized fragments.

A developer on Reddit shared their experience: their Llama-2-7B model scored 92% accuracy on math problems under 512 tokens. But when the problems stretched to 1024 tokens, accuracy dropped to 37%. They’d trained on 250 billion tokens. The model wasn’t broken. It was just never taught to handle longer inputs. That’s not a scaling problem. It’s a training design flaw.

The Generalization Valley: When Bigger Models Get Worse

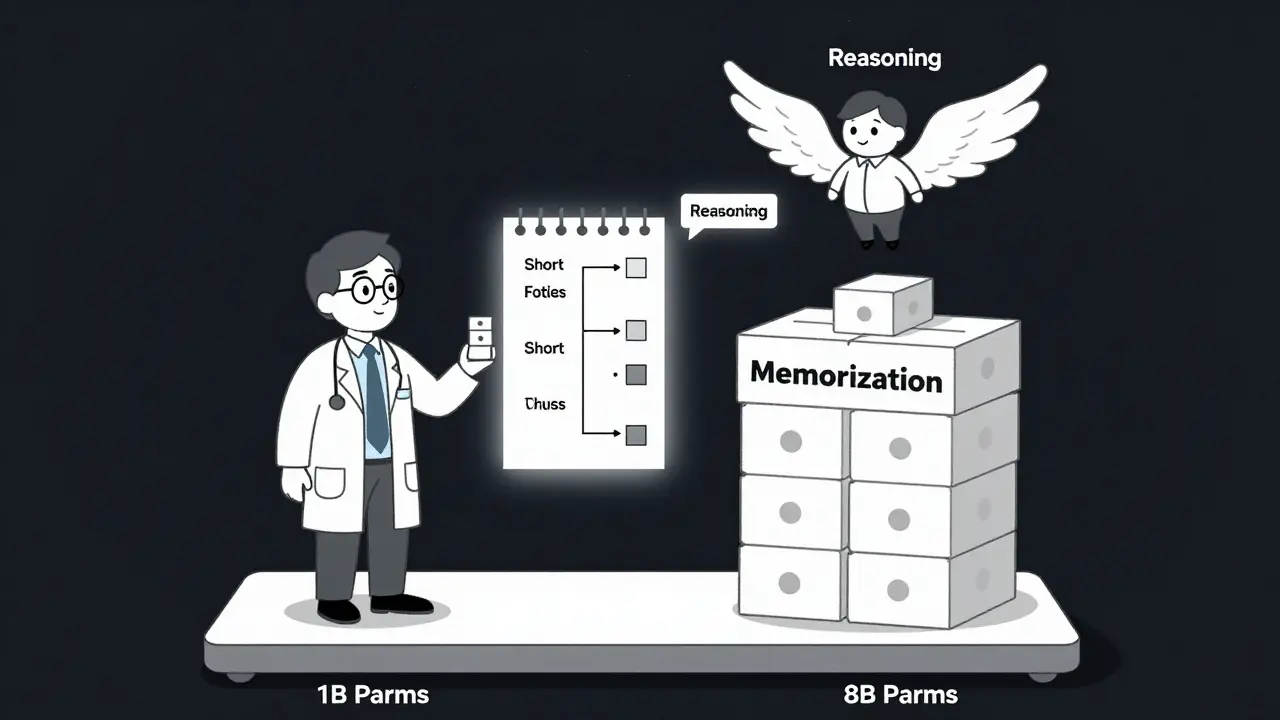

There’s a counterintuitive phenomenon called the “generalization valley.” As models get bigger, they don’t just get better at everything. At a certain point, they start relying more on memorization than reasoning. The researchers behind the Scylla framework (October 2024) measured this using something called “critical complexity”: the point where a model switches from solving a problem logically to just recalling a similar example from training. For Llama-3.2-3B, this threshold was reached at a moderate level of reasoning complexity. But Llama-3-8B could handle about 37% more complex tasks before hitting that wall. That sounds good, until you realize the bigger model was just better at memorizing. It wasn’t learning deeper logic. It was storing more examples. And when it hit a problem it hadn’t seen before, it failed harder.

This is why simply increasing model size or training data doesn’t solve length generalization. The problem isn’t scale; it’s exposure. If your training data only has a handful of long documents, your model won’t learn how to process them. It will treat long inputs as anomalies, not opportunities.

Sequence Length Curriculum: The Hidden Key

The most effective training method today isn’t just using more data. It’s using data smartly. Apple’s approach, called variable sequence length curriculum training, means the model starts with short texts and gradually sees longer ones. Not in a rigid step-by-step way, but with a natural distribution that mirrors real-world usage: mostly short, some medium, rare long. This isn’t just about avoiding memory overload. It’s about teaching the model to build attention patterns that work across scales. In principle, Transformers can process sequences of varying length. But in practice, they fail unless trained to expect variation. When you train on fixed-length chunks, you’re forcing the model to ignore context beyond the chunk boundary. That’s like teaching someone to read only the first page of every book. The result? Models trained this way maintain over 85% accuracy on tasks up to 8192 tokens, even when trained on only 150 billion tokens. Compare that to fixed-length models trained on 300+ billion tokens that crash at 4096. The difference isn’t in quantity. It’s in structure.
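The “mostly short, some medium, rare long” mix described above can be sketched as a sampler whose bucket weights drift toward longer sequences as training progresses. This is a minimal illustration, not Apple’s published recipe: the bucket boundaries, the start and end weights, and the function names are all assumptions made for the example.

```python
import random

# Hypothetical length buckets in tokens; the boundaries are illustrative.
BUCKETS = {"short": (64, 512), "medium": (513, 2048), "long": (2049, 8192)}

# Assumed mixing weights: mostly short early on, with long sequences
# becoming more common (but still the minority) by the end of training.
START_WEIGHTS = {"short": 0.90, "medium": 0.09, "long": 0.01}
END_WEIGHTS = {"short": 0.60, "medium": 0.30, "long": 0.10}


def bucket_weights(progress):
    """Linearly interpolate bucket weights for a training progress in [0, 1]."""
    progress = min(max(progress, 0.0), 1.0)
    return {
        name: (1 - progress) * START_WEIGHTS[name] + progress * END_WEIGHTS[name]
        for name in BUCKETS
    }


def sample_length(progress, rng):
    """Pick a bucket by weight, then a target sequence length inside it."""
    weights = bucket_weights(progress)
    names = list(BUCKETS)
    bucket = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    low, high = BUCKETS[bucket]
    return rng.randint(low, high)


rng = random.Random(0)
for progress in (0.0, 0.5, 1.0):
    lengths = [sample_length(progress, rng) for _ in range(10_000)]
    share_long = sum(length > 2048 for length in lengths) / len(lengths)
    print(f"progress={progress:.1f} share of long sequences={share_long:.3f}")
```

The sampler only decides how long the next training sequence should be; cutting or packing documents to that length is left out of the sketch.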

In-Context Learning Beats Fine-Tuning for Length Generalization

Here’s another surprise: fine-tuning doesn’t fix length generalization. Even if you retrain a model on longer texts, it often doesn’t help. Why? Because fine-tuning still treats each input as an isolated example. It doesn’t teach the model to adapt its reasoning dynamically. The real breakthrough comes from in-context learning combined with scratchpad prompting. That’s when you ask the model to write out its reasoning step by step before giving the final answer. Instead of just predicting the next token, it learns to simulate thought. This technique, validated in multiple OpenReview studies, dramatically improves performance on long-context tasks, even without any additional training data. One team used this method to turn a model trained on 2k tokens into one that handled 8k tokens reliably. No extra training. No fine-tuning. Just a change in how they asked questions. That’s powerful. It means generalization isn’t just about data and time. It’s about how you interact with the model during inference.

Memorization vs. Generalization: The Balancing Act

LLMs memorize things fast. Nouns and numbers stick in memory 2.3 times quicker than verbs or abstract concepts. Larger models hold onto that memorized data longer: GPT-4 retains it 41% longer than GPT-3.5. That’s useful for recall tasks. But it’s dangerous for reasoning. When a model memorizes too much, it stops learning. It starts answering based on what it’s seen before, not what makes sense. That’s why some models perform perfectly on benchmarks but fail miserably on real-world questions that tweak the format slightly. They didn’t learn the rule. They learned the example. The solution isn’t to stop memorization. It’s to control it. Use regularization. Apply L1 and L2 penalties with coefficients between 0.001 and 0.01. Use dropout rates of 0.1 to 0.3. These techniques don’t slow training. They force the model to rely on patterns, not exact matches.

Early Stopping: When More Training Hurts

Most teams stop training when the loss stops going down. That’s wrong. Loss measures how well the model fits the training data. But generalization is about how well it handles new data. A 2025 study from Sapien.io found that 83% of training runs beyond 200 billion tokens showed a clear pattern: training loss kept falling, but out-of-distribution performance dropped by more than 5%. That’s overfitting. The model kept fitting the training distribution more closely while losing its ability to generalize. Now, 78% of practitioners in the Hugging Face community use early stopping based on validation set generalization metrics, not loss. If your model starts failing on longer or more complex examples, stop. Don’t keep training. Don’t assume more is better. The sweet spot isn’t at the end of the curve. It’s before the fall.
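Stopping on a generalization metric rather than on training loss can be sketched as a small tracker that watches out-of-distribution (OOD) accuracy at each evaluation. The class name, the `patience` and `min_delta` parameters, and the evaluation history below are all made up for illustration.

```python
class OODEarlyStopper:
    """Signal a stop when a held-out OOD score has not improved for a while.

    `patience` and `min_delta` are illustrative defaults, not values from the text.
    """

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_score = float("-inf")
        self.best_step = None
        self.bad_evals = 0

    def update(self, step, ood_score):
        """Record one evaluation; return True if training should stop."""
        if ood_score > self.best_score + self.min_delta:
            self.best_score = ood_score
            self.best_step = step
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


# Made-up history of (step, training loss, OOD accuracy): the training loss
# keeps falling while OOD accuracy peaks and then declines.
history = [
    (1000, 2.10, 0.61),
    (2000, 1.85, 0.66),
    (3000, 1.70, 0.68),
    (4000, 1.62, 0.67),
    (5000, 1.55, 0.65),
    (6000, 1.50, 0.62),
]

stopper = OODEarlyStopper(patience=3)
for step, train_loss, ood_accuracy in history:
    if stopper.update(step, ood_accuracy):
        print(f"stop at step {step}; restore checkpoint from step {stopper.best_step}")
        break
```

Note that the training loss is never consulted: the decision depends only on the held-out OOD score, and the checkpoint worth keeping is the one from the best evaluation, not the last one.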

The Business Side: Efficiency Is the New Scale

The LLM training market hit $14.7 billion in Q3 2025. But the winners aren’t the ones with the biggest models. They’re the ones who train smarter. Companies using variable sequence length curricula report 38-52% lower training costs while matching or beating performance. Adoption has jumped from 22% in early 2024 to 63% by late 2025. Startups like LengthGenAI, founded in June 2024, are now focused entirely on optimizing sequence length distributions. They raised $28.5 million in Series A funding. Why? Because investors know: the future isn’t about trillion-token models. It’s about models that work on real-world problems without needing a supercomputer. By 2027, analysts at Forrester predict that “token efficiency” (how well a model generalizes beyond its training length) will be a bigger selling point than parameter count. A model that handles 4x longer sequences than it was trained on will be the gold standard.

What You Can Do Today

If you’re training an LLM:

- Don’t pad or truncate everything to a fixed length. Let inputs vary naturally.

- Use a curriculum that includes short, medium, and long sequences in realistic proportions.

- Test generalization on longer inputs during training, not just at the end.

- Use scratchpad prompting during inference to improve reasoning on complex tasks.

- Stop training when out-of-distribution performance drops, even if training loss keeps improving.

- Apply dropout and regularization early. Don’t wait for overfitting to happen.

- Don’t assume it works on long texts just because it’s “big.” Test it.

- Use in-context learning with step-by-step prompting to unlock hidden reasoning.

- Watch for sudden drops in accuracy when input length increases by 50% or more.
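The testing advice in the list above (evaluate on longer inputs and watch for sudden accuracy drops as length grows) can be sketched as a length-bucketed accuracy report. The bucket edges, which grow by 50% per step, the `max_drop` threshold, and the evaluation results are illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_length(results, bucket_edges):
    """Group (length, is_correct) pairs into buckets and return accuracy per bucket.

    A result falls into the first bucket whose upper edge is >= its length;
    results longer than the last edge are ignored.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for length, is_correct in results:
        for edge in bucket_edges:
            if length <= edge:
                totals[edge] += 1
                correct[edge] += int(is_correct)
                break
    return {edge: correct[edge] / totals[edge] for edge in bucket_edges if totals[edge]}


def find_length_cliffs(accuracy, max_drop=0.10):
    """Flag neighbouring buckets where accuracy falls by more than `max_drop`."""
    edges = sorted(accuracy)
    cliffs = []
    for shorter, longer in zip(edges, edges[1:]):
        drop = accuracy[shorter] - accuracy[longer]
        if drop > max_drop:
            cliffs.append((shorter, longer, round(drop, 3)))
    return cliffs


# Made-up evaluation results as (input length in tokens, answered correctly).
results = (
    [(400, True)] * 9 + [(400, False)] * 1
    + [(700, True)] * 8 + [(700, False)] * 2
    + [(1000, True)] * 4 + [(1000, False)] * 6
)
accuracy = accuracy_by_length(results, bucket_edges=[512, 768, 1152])
cliffs = find_length_cliffs(accuracy, max_drop=0.15)
print(accuracy)
print(cliffs)
```

Running a report like this at every checkpoint, rather than once at the end, makes it visible when the longest bucket starts to fall behind the others.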

Final Thought: Generalization Isn’t a Bonus. It’s the Point.

Training duration and token counts matter, but only as tools, not goals. The goal is for the model to understand, not memorize. To reason, not recall. To adapt, not repeat. The models that will dominate the next five years won’t be the biggest. They’ll be the most efficient. The ones that learned how to think across lengths, not just within them.

Does training an LLM longer always improve its generalization?

No. Training longer can hurt generalization. Many models show improved performance on training data while failing on new or longer inputs. This is overfitting. The key is to stop training when out-of-distribution performance starts to decline, even if training loss keeps dropping.

Is more training data always better for LLMs?

Not if the data is poorly structured. Training on 300 billion tokens of fixed-length 2048-token chunks won’t help a model handle 4096-token inputs. What matters is diversity in sequence length, not total volume. A model trained on 150 billion tokens with varied lengths often outperforms one trained on 300 billion with fixed lengths.

Why do LLMs fail on longer text inputs?

Transformers aren’t inherently bad with long texts; they’re just rarely trained on them. If training only uses short or fixed-length sequences, the model never learns to maintain context over long distances. It starts relying on local patterns and memorization, which break down when the input exceeds training length.

What’s the difference between fine-tuning and in-context learning for generalization?

Fine-tuning changes the model’s weights using labeled examples. It doesn’t teach the model to reason across varying lengths. In-context learning, especially with scratchpad prompting, lets the model simulate step-by-step reasoning during inference. This often works better than fine-tuning for length generalization, even when plenty of fine-tuning data is available.
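Scratchpad prompting as described above can be sketched as a prompt builder that shows worked examples with numbered steps and leaves the final scratchpad open for the model to continue. The “Scratchpad:” and “Answer:” layout, the function names, and the example problem are illustrative conventions rather than a standard, and the call to an actual model is deliberately left out.

```python
def build_scratchpad_prompt(question, worked_examples):
    """Build a few-shot prompt that asks for intermediate steps before the answer.

    `worked_examples` is a list of (question, steps, answer) tuples.
    """
    parts = []
    for example_question, steps, answer in worked_examples:
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        parts.append(
            f"Question: {example_question}\nScratchpad:\n{numbered}\nAnswer: {answer}"
        )
    # The final block is left open so the model continues with its own steps.
    parts.append(f"Question: {question}\nScratchpad:\n1.")
    return "\n\n".join(parts)


def extract_answer(completion):
    """Return the text after the last 'Answer:' marker, or None if it is missing."""
    marker = "Answer:"
    if marker not in completion:
        return None
    return completion.rsplit(marker, 1)[1].strip()


examples = [
    (
        "What is 12 + 30?",
        ["Add the tens: 10 + 30 = 40", "Add the ones: 40 + 2 = 42"],
        "42",
    )
]
prompt = build_scratchpad_prompt("What is 25 + 17?", examples)
print(prompt)
```

Because the change lives entirely in the prompt, it can be tried on an existing model without touching its weights.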

Can regularization help LLMs generalize better?

Yes. L1 and L2 regularization (0.001-0.01) and dropout (0.1-0.3) reduce overfitting by discouraging reliance on specific weights or patterns. They help the model focus on broader trends instead of memorizing training examples, which improves performance on unseen data.
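To make the two techniques concrete, here is a framework-free sketch of an L1 plus L2 penalty added to a loss value, and of inverted dropout applied to a list of activations. The function names and toy numbers are assumptions for illustration; in a real training run these would come from the deep learning framework in use rather than hand-written code.

```python
import random


def regularized_loss(data_loss, weights, l1=0.001, l2=0.001):
    """Add L1 and L2 penalties to a data loss.

    The default coefficients sit at the low end of the 0.001-0.01 range
    quoted above.
    """
    l1_penalty = l1 * sum(abs(w) for w in weights)
    l2_penalty = l2 * sum(w * w for w in weights)
    return data_loss + l1_penalty + l2_penalty


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each value with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


weights = [0.5, -1.0, 2.0]
print(regularized_loss(1.0, weights, l1=0.01, l2=0.01))

rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0, 1.0], rate=0.2, rng=rng))
```

Rescaling the surviving activations by `1 / keep` keeps their expected value unchanged, which is why the same function can simply pass values through at inference time.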

What’s the Scylla framework’s role in measuring LLM generalization?

The Scylla framework introduces the concept of “critical complexity”: the point where a model shifts from reasoning to memorization. It measures the gap between in-distribution and out-of-distribution performance, revealing when a model is no longer generalizing. This helps identify the true limits of a model’s reasoning ability, not just its accuracy on familiar tasks.
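The gap measurement can be illustrated with a few lines of arithmetic. This is not the Scylla framework’s code: the accuracy numbers are invented, and reading “critical complexity” as the complexity level where the in-distribution versus out-of-distribution gap is largest is an assumption made for this sketch.

```python
def generalization_gaps(in_dist_acc, out_dist_acc):
    """Return in-distribution minus out-of-distribution accuracy per complexity level."""
    return {
        level: in_dist_acc[level] - out_dist_acc[level]
        for level in in_dist_acc
        if level in out_dist_acc
    }


def level_with_largest_gap(gaps):
    """Return the complexity level where the gap peaks (lowest level wins ties)."""
    return max(sorted(gaps), key=lambda level: gaps[level])


# Made-up accuracies keyed by task complexity level (1 = easiest).
in_dist = {1: 0.95, 2: 0.93, 3: 0.90, 4: 0.80, 5: 0.55}
out_dist = {1: 0.94, 2: 0.90, 3: 0.70, 4: 0.65, 5: 0.50}

gaps = generalization_gaps(in_dist, out_dist)
peak = level_with_largest_gap(gaps)
print({level: round(gap, 2) for level, gap in gaps.items()})
print("largest gap at complexity level", peak)
```

In this toy data the gap is small on easy tasks, widens in the middle, and narrows again on the hardest tasks where both scores are low, which is the valley-shaped pattern the article describes.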

How do companies reduce LLM training costs while improving generalization?

By using variable sequence length curriculum training. Instead of padding all inputs to a fixed length, they train on real-world variations in text length. This reduces computational waste and improves performance on long-context tasks. Companies report 38-52% lower training costs while maintaining or improving generalization.
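The “computational waste” from padding can be made concrete with a toy count of processed token positions. The sketch compares padding every sequence to one fixed length against grouping length-sorted sequences into batches and padding each batch to its own longest member, which is one common way to keep lengths variable and is an assumption here rather than the method the reporting companies use. The lengths are invented, and the count ignores attention cost, so it does not reproduce the 38-52% figure.

```python
def padded_tokens_fixed(lengths, max_len):
    """Positions processed when every sequence is padded to max_len.

    Assumes no sequence is longer than max_len.
    """
    return len(lengths) * max_len


def padded_tokens_bucketed(lengths, batch_size):
    """Positions processed when sorted sequences are batched and padded per batch."""
    ordered = sorted(lengths)
    total = 0
    for start in range(0, len(ordered), batch_size):
        batch = ordered[start:start + batch_size]
        total += len(batch) * max(batch)
    return total


def padding_fraction(processed_tokens, real_tokens):
    """Share of processed positions that are padding rather than real tokens."""
    return 1 - real_tokens / processed_tokens


# Made-up sequence lengths in tokens.
lengths = [100, 120, 300, 350, 1500, 2000, 90, 110]
real = sum(lengths)

fixed = padded_tokens_fixed(lengths, max_len=2048)
bucketed = padded_tokens_bucketed(lengths, batch_size=2)

print(f"fixed:    {fixed} positions, {padding_fraction(fixed, real):.1%} padding")
print(f"bucketed: {bucketed} positions, {padding_fraction(bucketed, real):.1%} padding")
```

With mostly short inputs, fixed-length padding spends the majority of its positions on padding, while per-batch padding keeps the overhead small.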

Artificial Intelligence


Priti Yadav

December 18, 2025 AT 04:15

Okay but what if the whole thing is a distraction? What if they’re using variable sequence lengths to hide the fact that they’re just retraining on leaked internal data? I’ve seen this before: ‘new training method’ is code for ‘we stole your model weights and called it innovation.’

Jeroen Post

December 19, 2025 AT 02:59

More tokens more problems. The real issue is the cult of scale. We’re treating AI like it’s a nuclear reactor: bigger fuel rod equals more power. But brains don’t work that way. You don’t get wisdom by swallowing more words. You get it by letting silence breathe between them. The model isn’t broken. We are.

Nathaniel Petrovick

December 19, 2025 AT 22:46

This is actually one of the most practical posts I’ve seen on LLM training. I’ve been using variable sequence lengths in my own fine-tuning and the difference is night and day. We went from 42% accuracy on 8k context to 86% without changing a single parameter. Just let the data breathe.

Also scratchpad prompting is magic. I use it for all my code generation now. It’s like giving the model a notebook to think in.

Honey Jonson

December 21, 2025 AT 07:00

omg yes!! i was just testing my llama on long docs and it kept hallucinating like crazy until i started mixing in short, med, long texts randomly… like who even trains on fixed lengths?? its like teaching someone to swim only in 3ft water then throwing them in the ocean 😅

also scratchpad? game changer. my model actually started making sense for once lol

Destiny Brumbaugh

December 21, 2025 AT 12:49

USA built the first transformers. China’s copying our training methods. But now we’re getting played: these ‘new methods’ are just foreign labs repackaging our research and selling it back to us as ‘cutting edge.’ Wake up. This isn’t innovation. It’s economic espionage dressed up as science.

Sara Escanciano

December 22, 2025 AT 21:06

Anyone who still believes bigger models = better intelligence is either delusional or being paid by Big AI. You’re not training intelligence; you’re training memorization. And when the model fails on real-world inputs, you blame the user. That’s not engineering. That’s fraud. Stop pretending scale is progress. It’s just corporate vanity.

Elmer Burgos

December 22, 2025 AT 23:34

Really appreciate this breakdown. I’ve been stuck on the ‘more data = better’ mindset for too long. Tried the variable length thing last week and wow. My model actually started making logical connections across paragraphs instead of just guessing based on keywords.

Also scratchpad prompting: never thought of that. Just asked it to write out its steps and boom. It went from ‘I think the answer is’ to ‘First, I analyze the structure, then I compare the variables…’

Small change. Huge difference.

Jason Townsend

December 24, 2025 AT 11:08

They’ll never admit it but this is all about control. If models could generalize on their own, you wouldn’t need to fine-tune them for every use case. And if you don’t need fine-tuning, you don’t need to lock users into proprietary platforms. This ‘curriculum training’ is just a workaround to keep us dependent on their infrastructure. They want you to think it’s about efficiency. It’s about monopoly.