For years, the race in generative AI was simple: bigger models, more parameters, longer training times. But by 2025, that approach hit a wall. Scaling up didn’t mean smarter AI; it meant more expensive, slower, and often more fragile systems. The real breakthrough wasn’t in making models larger. It was in rethinking how they’re built.

From Monoliths to Modular Systems

Early generative AI models were like single-engine planes: powerful, but everything had to run together. If one part failed, the whole system slowed down or crashed. That’s the problem with monolithic architectures. They’re rigid. They’re costly. And they don’t scale well beyond 500 billion parameters.

Today’s systems are more like a city’s public transit network: different trains, buses, and subways working together, each optimized for a specific job. This shift from monoliths to system-level intelligence is what’s powering the real explosion in AI adoption. Companies aren’t just using AI anymore. They’re building entire workflows around it.

Take Mixture-of-Experts (MoE) architectures. Instead of running every parameter for every token, an MoE router activates only a few expert subnetworks, about 3-5% of the model’s parameters per token. That means a trillion-parameter model needs roughly the per-token compute of a 50-billion-parameter dense model, although all of its weights still have to be held in memory. The result? A 72% drop in inference costs. AWS, Google, and Meta all use variations of this today. It’s not magic. It’s smart engineering.
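To make the routing idea concrete, here is a minimal sketch of top-k expert selection in plain Python. Everything in it is an illustrative assumption rather than any vendor's implementation: the 64 toy experts, the random router scores, and the names `route_token` and `moe_forward` are hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, router_scores, top_k=2):
    """Weighted sum of the outputs of the selected experts only."""
    weights = route_token(router_scores, top_k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical toy setup: 64 experts, each a simple scalar function.
NUM_EXPERTS = 64
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

# Random scores stand in for what a learned router would produce.
rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

weights = route_token(scores, top_k=2)
active_fraction = len(weights) / NUM_EXPERTS
output = moe_forward(1.5, experts, scores, top_k=2)
print(f"experts used: {sorted(weights)}, active fraction: {active_fraction:.1%}")
```

With 2 of 64 experts active, only about 3% of the expert parameters do work for this token, which is the kind of ratio described above. The other 62 experts are skipped for this token but still exist, so they still take up memory.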

Why Attention Isn’t Enough Anymore

Transformers dominated AI for years because of their attention mechanisms. But even those hit limits. Processing long sequences, like a 10,000-word document or a 30-minute video, used to mean quadratic compute growth: O(n²). That’s unsustainable.

Now, efficient attention variants change that picture. IO-aware kernels like FlashAttention-3 keep the exact O(n²) computation but cut memory traffic, so long sequences run much faster in practice, while sparse and streaming attention variants bring the cost down to O(n log n) or better. Some systems even use State Space Models like Mamba, which process sequences in linear time. Mamba is 2.4x faster on long texts, but it’s not perfect. It loses about 12% accuracy on complex reasoning tasks. That’s why most production systems still use Transformer variants: 87% as of Q3 2025.
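To see why the growth rate matters, here is a small sketch comparing how cost scales with sequence length under quadratic, n log n, and linear growth. It only compares asymptotic growth relative to a 1,000-token baseline. It ignores constant factors and hardware effects, so it says nothing about the measured speed of any particular model. The function name `relative_cost` is hypothetical.

```python
import math


def relative_cost(n, base_n=1_000):
    """Cost at length n relative to base_n under three growth rates."""
    return {
        "quadratic": (n * n) / (base_n * base_n),
        "n_log_n": (n * math.log2(n)) / (base_n * math.log2(base_n)),
        "linear": n / base_n,
    }


for n in (1_000, 10_000, 100_000):
    costs = relative_cost(n)
    print(n, {name: round(value, 1) for name, value in costs.items()})
```

Going from 1,000 to 10,000 tokens multiplies the quadratic cost by 100, but the linear cost by only 10.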

But the real innovation isn’t just faster attention. It’s how attention is used. Modern systems don’t just predict the next word. They break tasks into steps, verify each one, and backtrack if something goes wrong. That’s verifiable reasoning.

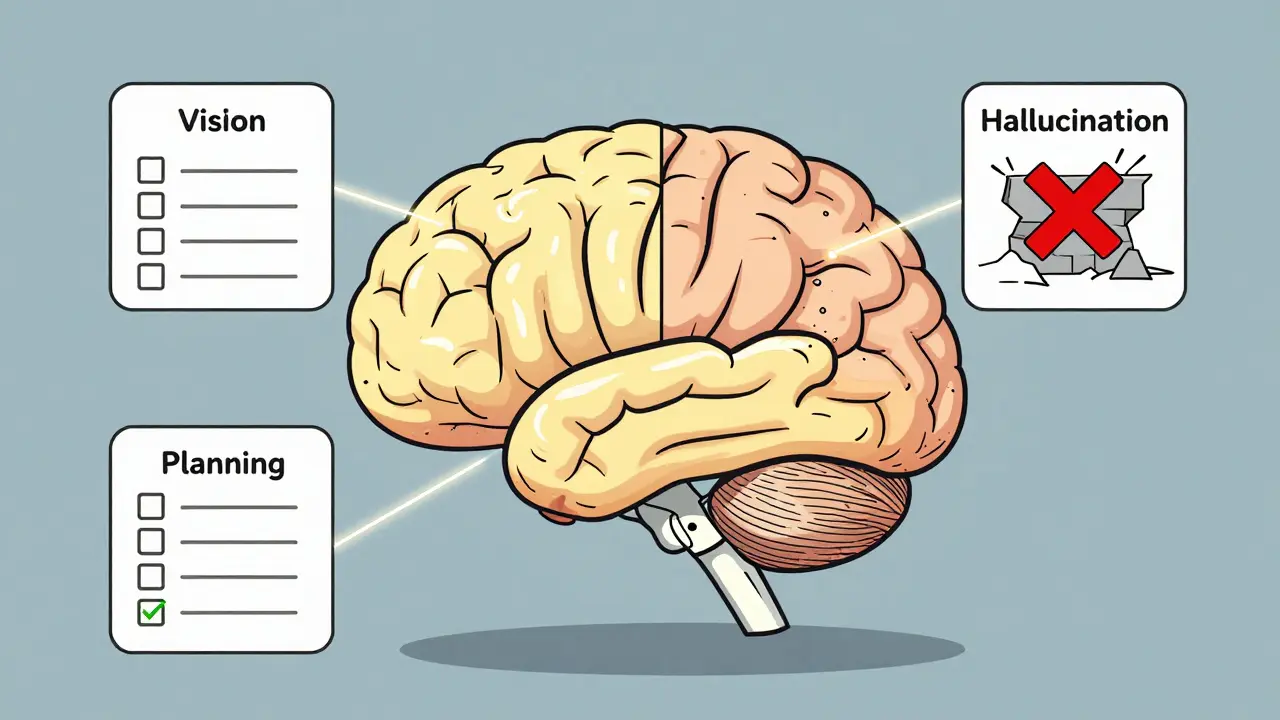

Verifiable Reasoning: AI That Knows When It’s Wrong

Early AI hallucinated. It made things up. And because it was a black box, you had no way to know why. Chain-of-Thought prompting helped a little, but it was still guesswork.

Now, systems use explicit reasoning frameworks. Think of it like a lawyer writing out each step of their argument before presenting it. These architectures add process supervision: every logical step is tracked, scored, and validated. The result? 60-80% fewer logical errors on complex tasks like legal analysis or medical diagnosis.

Companies like Anthropic and Google are building models that can say, “I don’t know” with confidence, not because they’re programmed to, but because the architecture forces them to prove their reasoning before answering. That’s huge for enterprise use cases where accuracy isn’t optional.
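As a rough illustration of process supervision, the sketch below scores each reasoning step and abstains as soon as one step falls below a threshold. It is a toy under stated assumptions, not how any named vendor does it: the lookup table `TOY_SCORES` stands in for a learned step verifier, and `supervised_answer`, `ScoredStep`, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class ScoredStep:
    claim: str
    score: float  # verifier's confidence that the step is valid, 0.0 to 1.0


def supervised_answer(
    steps: List[str],
    verifier: Callable[[str], float],
    threshold: float = 0.8,
) -> Tuple[Optional[str], List[ScoredStep]]:
    """Score every step in order; abstain (answer None) at the first weak step."""
    trace: List[ScoredStep] = []
    for claim in steps:
        scored = ScoredStep(claim, verifier(claim))
        trace.append(scored)
        if scored.score < threshold:
            return None, trace  # "I don't know", plus the trace showing why
    answer = trace[-1].claim if trace else None
    return answer, trace


# Hypothetical scores standing in for a learned step verifier.
TOY_SCORES = {
    "Clause 4 requires 30 days' notice.": 0.95,
    "Notice was given 45 days before termination.": 0.90,
    "The notice requirement was met.": 0.92,
    "So the penalty clause must apply.": 0.40,
}


def toy_verifier(claim: str) -> float:
    """Unknown claims get a score of 0.0, so they always fail the check."""
    return TOY_SCORES.get(claim, 0.0)


claims = list(TOY_SCORES)
sound_chain = claims[:3]
weak_chain = claims[:2] + claims[3:]

sound_answer, sound_trace = supervised_answer(sound_chain, toy_verifier)
weak_answer, weak_trace = supervised_answer(weak_chain, toy_verifier)
print("sound chain ->", sound_answer)
print("weak chain  ->", weak_answer, "| failed step:", weak_trace[-1].claim)
```

The returned trace is what makes the result auditable: a reviewer can see which step was rejected and what score it received, and a fuller system could use it to backtrack and retry from that step.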

The Rise of Hybrid Architectures

AI isn’t just about language anymore. It’s about vision, memory, planning, and physical interaction. That’s why hybrid architectures are taking over.

Chinese manufacturers are leading here. Their AI systems combine perception models (to see parts on a factory floor), symbolic reasoning (to understand assembly rules), and memory systems (to recall past failures). The outcome? 22% higher operational efficiency.
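Here is a minimal sketch of how perception, symbolic rules, and memory might be wired together in a system like this. The source does not describe any real implementation, so every name here is hypothetical: `perceive` is a stand-in for a vision model, `ASSEMBLY_RULES` for a rule base, and `FailureMemory` for a persistent store.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FailureMemory:
    """Remembers how often each part has violated an assembly rule."""
    failures: Dict[str, int] = field(default_factory=dict)

    def record(self, part: str) -> None:
        self.failures[part] = self.failures.get(part, 0) + 1

    def count(self, part: str) -> int:
        return self.failures.get(part, 0)


def perceive(frame: List[str]) -> List[str]:
    """Stand-in for a vision model: the 'frame' is already a list of part names."""
    return list(frame)


# Symbolic rules: each part maps to the parts that must already be present.
ASSEMBLY_RULES = {"bolt": {"plate"}, "cover": {"plate", "bolt"}}


def check_rules(parts: List[str]) -> List[str]:
    """Return the parts whose prerequisites are missing."""
    present = set(parts)
    return [p for p in parts if not ASSEMBLY_RULES.get(p, set()) <= present]


def inspect(frame: List[str], memory: FailureMemory, max_failures: int = 2) -> dict:
    """Perceive, apply the rules, update memory, and flag repeat offenders."""
    parts = perceive(frame)
    violations = check_rules(parts)
    for part in violations:
        memory.record(part)
    escalate = [p for p in violations if memory.count(p) >= max_failures]
    return {"parts": parts, "violations": violations, "escalate": escalate}


memory = FailureMemory()
first = inspect(["cover", "plate"], memory)   # the bolt is missing
second = inspect(["cover", "plate"], memory)  # same fault again
third = inspect(["plate", "bolt", "cover"], memory)
print(first["violations"], second["escalate"], third["violations"])
```

Each stage can be swapped independently, for example by replacing `perceive` with a real detector, without touching the rules or the memory. That separation is the practical appeal of the hybrid design.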

In the West, enterprises are adopting hybrid systems too, but differently. AWS launched three specialized Well-Architected Lenses at re:Invent 2025: Responsible AI, Machine Learning, and Generative AI. The Generative AI Lens alone outlines eight real-world scenarios, from autonomous call centers to AI co-pilots for engineers.

These aren’t theoretical. Netflix used one of these patterns to cut scaling prediction errors by 31%. Amazon improved database sharding efficiency by 27%. But both took months of customization. Why? Because hybrid systems are powerful, but they’re complex. You can’t just plug them in.

Real-World Impact: Architecture Firms and Beyond

Generative AI isn’t just changing software. It’s changing how buildings are designed.

Architectural firms like Zaha Hadid Architects and BIG are using AI to generate hundreds of design variations in hours. One firm cut conceptual design time from three days to four hours using Archicad AI Visualizer. That’s not a gimmick. It’s a productivity revolution.

But there’s a catch. Only 32% of firms have seamless integration with BIM (Building Information Modeling) tools. Many AI-generated designs look great on screen but fail structural checks. One Reddit user wrote: “It gives me beautiful facades, but ignores load-bearing walls.”

Still, 68% of firms report better sustainability analysis. AI can simulate sunlight, wind flow, and material efficiency faster than any human. And 78% say it speeds up design exploration. The tool isn’t replacing architects. It’s giving them superpowers.

What You Need to Get Started

If you’re looking to adopt modern AI architecture, here’s the reality: you don’t need to build a trillion-parameter model from scratch. You need to pick the right components and connect them well.

- Start with AWS’s Generative AI Lens. It gives you eight proven patterns, so you aren’t guessing.

- Use MoE if cost and speed matter more than absolute peak accuracy.

- Choose verifiable reasoning if your use case involves compliance, safety, or legal risk.

- Hybrid systems? Only if you’re dealing with multiple data types: text, images, sensor data, or physical systems.

Training takes 8-12 weeks for most engineers. You need three skills: traditional software architecture, basic AI model understanding, and system integration know-how. LinkedIn’s 2025 job data shows these aren’t optional. They’re required in 100%, 87%, and 76% of AI architecture roles, respectively.

Challenges You Can’t Ignore

These systems are powerful, but they’re not easy.

- Integration with legacy systems is the #1 blocker. 63% of enterprises struggle here.

- Complexity is rising. 52% say managing multi-component systems is harder than expected.

- Security is slipping. Startups using AI to generate app architectures report 62% of outputs ignore basic security practices.

- False positives are common. Amazon’s AI recommendations had an 18% error rate at first.

MIT’s Aleksander Madry warns: “Architectural complexity creates hidden flaws you can’t see with traditional testing.” And Anthropic’s Dario Amodei cautions against over-specialization: “AI that’s brilliant in one context can fail catastrophically in another.”

That’s why monitoring matters. You need logging, validation layers, and human-in-the-loop checks. No system is fully autonomous, at least not yet.
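A minimal sketch of what such a layer could look like, using Python's standard `logging` module: every output runs through named checks, failures are logged, and anything that fails is held in a queue for a human reviewer. The two checks, the `handle` function, and the in-memory `review_queue` are hypothetical placeholders. A real system would use domain-specific validators and a persistent queue.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai_pipeline")

# Hypothetical checks; real ones would be specific to the use case.
VALIDATORS = {
    "non_empty": lambda text: bool(text.strip()),
    "no_plaintext_secret": lambda text: "password=" not in text.lower(),
}


def validate_output(output, validators):
    """Run each named check and return the names of the checks that failed."""
    return [name for name, check in validators.items() if not check(output)]


def handle(output, validators, review_queue):
    """Release the output if every check passes; otherwise hold it for a human."""
    failed = validate_output(output, validators)
    if failed:
        log.warning("output held for review; failed checks: %s", failed)
        review_queue.append({"output": output, "failed": failed})
        return None
    log.info("output passed %d checks", len(validators))
    return output


review_queue = []
released = handle("SELECT 1", VALIDATORS, review_queue)
held = handle("connect(user='app', PASSWORD=hunter2)", VALIDATORS, review_queue)
print("released:", released, "| awaiting review:", len(review_queue))
```

The design choice worth noting is that a failed check does not silently drop the output. It is logged and routed to a person, which is what keeps errors visible.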

Where This Is Headed

By 2027, Gartner predicts 65% of enterprise AI systems will use hybrid architectures. That’s up from 28% in 2025. Meta just open-sourced its Modular Reasoning Framework. Google’s Pathways update is coming in Q2 2026, and it promises to cut architectural complexity by 40%.

The biggest shift? The focus is no longer on “What’s the next model?” It’s “How do we make AI work reliably?”

Future systems won’t just answer questions. They’ll plan, reflect, and adapt. Imagine an AI that designs a building, simulates its energy use over 20 years, adjusts for weather patterns, and then reconfigures itself when a client changes the brief. That’s not science fiction. It’s what Berkeley’s researchers call “a configurable resource that scales with compute budget.”

The market is exploding: AI architecture tools reached $18.7 billion in Q3 2025, and 78% of Fortune 500 companies are using some form of them. AWS leads with 34% market share. But the real winners will be those who master the architecture, not just the models.

Frequently Asked Questions

What’s the biggest difference between old and new AI architectures?

Old AI systems were monolithic: everything ran in one big model. New systems are modular, combining specialized components like reasoning engines, memory units, and perception modules. This lets them be faster, cheaper, and more reliable than trying to scale a single model.

Do I need a trillion-parameter model to use modern AI architecture?

No. Most businesses don’t need massive models. Mixture-of-Experts architectures let you use large models efficiently by activating only a small portion per task. You can get strong results with models under 100 billion parameters if your architecture is well-designed.

Why are companies like AWS releasing AI architecture lenses?

Because most companies struggle to turn AI models into working systems. The lenses provide proven templates, such as how to build an AI co-pilot or an autonomous call center, so teams don’t waste months guessing what works. They’re like blueprints for AI.

Can generative AI replace architects or engineers?

Not yet, and probably not ever. AI generates options, simulates outcomes, and speeds up repetition. But humans still make final decisions, handle exceptions, and ensure ethical, legal, and structural integrity. AI is a tool, not a replacement.

What’s the biggest risk in adopting these new architectures?

Complexity. More components mean more things that can break silently. A flaw in one module might not show up until it interacts with another. That’s why monitoring, validation, and human oversight are critical, not optional.

How long does it take to implement a modern AI architecture?

Most enterprise systems take 3-6 months. Netflix took 4.5 months to integrate their AI-assisted architecture tool. Startups can build MVPs in a day, but scaling and securing them takes longer. The key is starting small: pick one use case, prove it works, then expand.

Next Steps

If you’re an engineer: Start with AWS’s Generative AI Lens. Pick one scenario, maybe a knowledge worker co-pilot, and build it. Use MoE if you’re on a budget. Add verifiable reasoning if accuracy matters.

If you’re in architecture, design, or manufacturing: Try AI tools that integrate with your existing BIM or CAD software. Don’t aim for full automation. Aim for 50% faster iteration. That’s enough to change your workflow.

If you’re a leader: Stop asking for bigger models. Start asking: “What’s our integration plan?” “How are we monitoring errors?” “Do we have the right skills on the team?” The architecture is the product now, not just the model.

Artificial Intelligence

Glenn Celaya

January 26, 2026 AT 13:33

MoE is just hype wrapped in jargon. I've seen teams waste months on it only to get 5% better latency and triple the debugging hell. If your model can't run on a single GPU you're already doing it wrong.

Jen Becker

January 26, 2026 AT 22:57

LOL at people acting like this is new. We had modular AI in 2019. This is just rebranded. Also who cares if it's 72% cheaper if it hallucinates more?

Wilda Mcgee

January 27, 2026 AT 02:07

Really appreciate how you broke this down. I've been working with hybrid systems at my firm and the difference between 'just throwing a model at it' and actually designing the pipeline is night and day. One team used Mamba for long-form customer feedback analysis and cut processing time from 4 hours to 45 minutes. The catch? They had to build their own validation layer because the out-of-the-box stuff kept missing sarcasm. Took weeks but now it's reliable. It's not magic. It's patience and good docs.

Ryan Toporowski

January 28, 2026 AT 11:06

Yes yes YES to verifiable reasoning!! 🙌 I used to work in healthcare AI and we lost a contract because the model kept giving 'confident' wrong diagnoses. Now we use a step-by-step trace with confidence scores for every claim. It's slower but clients sleep better at night. Also 👋 to anyone trying this: start small. Don't try to replace your whole workflow on day one. Pick one report. Automate that. Celebrate it. Then move on. 💪

Samuel Bennett

January 28, 2026 AT 11:58

Where's the source for that 62% security flaw stat? I've read every paper on this and no one says that. Also 'hybrid architectures' is just a buzzword for 'glue code with three APIs and a prayer'. And why is everyone ignoring the fact that Mamba's linear attention breaks on non-English languages? You're all just chasing trends while the real problems get ignored

Madeline VanHorn

January 30, 2026 AT 05:37

Anyone who thinks AI is changing architecture is delusional. I've seen 3 AI-generated designs that looked 'beautiful' but had no structural integrity. One had a 300-foot cantilever with no supports. The architect had to manually fix it. AI is a fancy sketchpad. It doesn't understand gravity. Or zoning laws. Or human behavior. Stop pretending it's a genius.

Chris Atkins

January 30, 2026 AT 14:40

Love this post. Been using AWS's Generative AI Lens for our internal tools and it saved us 6 weeks of guesswork. Started with the co-pilot for code reviews, and now we're rolling it out to docs and QA. The key? Don't try to automate everything. Let AI handle the boring parts. Humans handle the weird edge cases. Also if you're in architecture like the post says, try Archicad AI. It won't design your building but it'll give you 20 variations in 10 minutes. Game changer for client meetings 😎