Why RAG Pipelines Need More Than Just a Quick Test

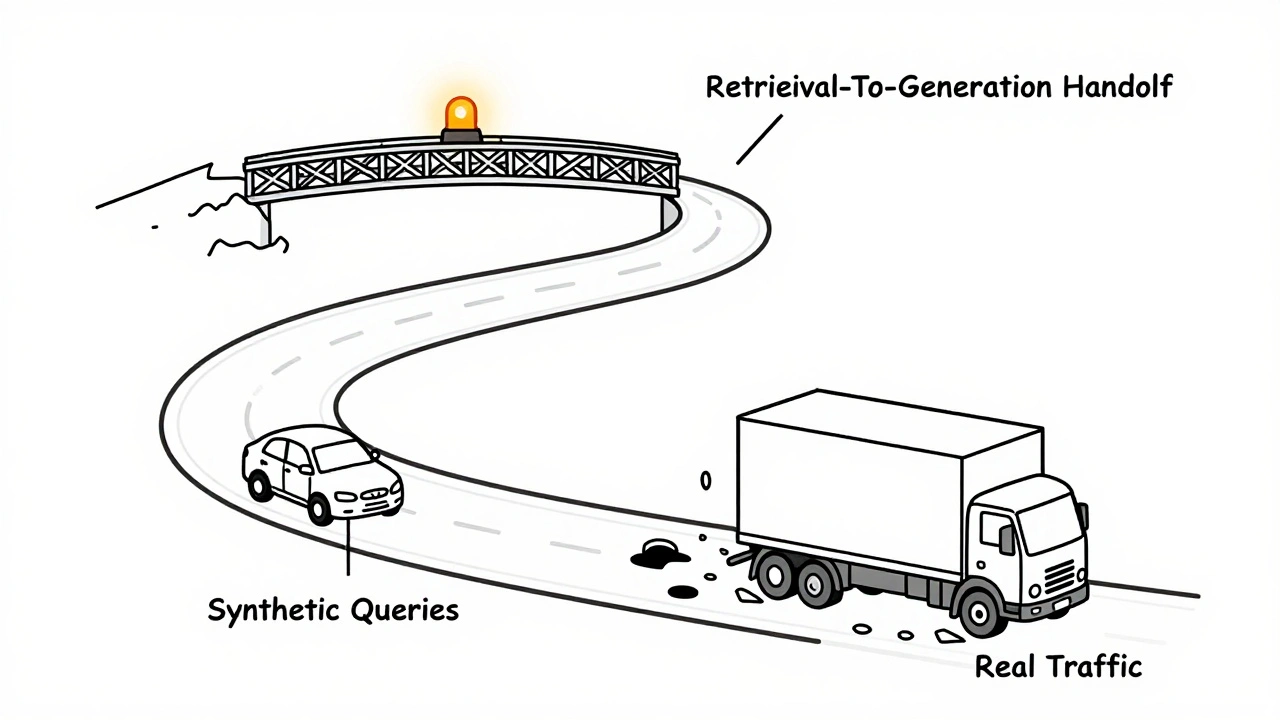

Building a RAG pipeline isn’t like deploying a simple chatbot. You’re stitching together a vector database, a retrieval system, and a language model-each with its own failure points. A query might look perfect on paper, but in production, it could trigger a hallucination, pull the wrong document, or cost you $50 in API fees because the context window exploded. That’s why testing with synthetic queries alone is like checking your car’s tires before a road trip but never turning on the engine.

Real-world RAG systems fail in ways you can’t predict. A healthcare startup in Seattle passed every synthetic test but gave patients wrong dosage advice in 3.7% of live queries. Why? The training data didn’t include rare drug interactions. Synthetic tests missed it. Real traffic didn’t.

What Synthetic Queries Actually Do

Synthetic queries are your controlled lab environment. You create them manually or with LLMs to simulate edge cases: ambiguous questions, multi-step reasoning, or adversarial prompts designed to trigger hallucinations. Tools like Ragas and Promptfoo help generate these at scale.

Common datasets used include MS MARCO (800,000 real user questions) and FiQA (6,000 financial queries). But don’t just copy-paste these. Tailor them to your domain. If your RAG answers legal questions, use real case law prompts. If it’s for customer support, mimic how users actually phrase complaints.

Key metrics for synthetic testing:

- Recall@5: Are the top 5 retrieved documents relevant? Target 0.75 or higher.

- Context Relevancy: Do the retrieved docs actually answer the question? Score above 0.7.

- Faithfulness: Does the answer stick to what’s in the retrieved context? Below 0.6 means hallucinations.

- Answer Relevancy: Is the generated response even useful? Many teams overlook this.

Langfuse’s 2024 benchmarks show enterprise RAG systems scoring between 0.6-0.9 on these metrics. Anything below 0.6 in production? You’ve got a problem.

The Hidden Cost of Synthetic Testing

Creating good synthetic datasets takes time. Braintrust.dev found teams spend 40-60% of their RAG development time just building test cases. And even then, they miss real-world complexity.

Dr. James Zhang from MIT found synthetic queries underrepresent multi-turn conversations by 45-60%. Real users don’t ask one clean question. They say: “Wait, earlier you said X, but now Y. What’s going on?” Synthetic tests rarely capture that.

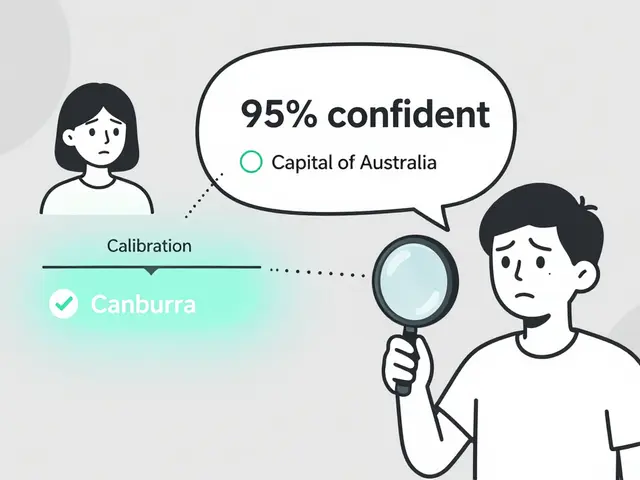

And here’s the kicker: Ragas has a 22% false positive rate for hallucination detection in live systems. That means nearly one in five flagged issues aren’t real. You’ll waste hours chasing ghosts.

Synthetic tests are necessary-but not enough. They’re your smoke alarm. You still need to monitor the house while it’s burning.

Real Traffic Monitoring: Where the Real Problems Hide

Real traffic monitoring is about watching what happens when real people use your system. No simulations. No curated data. Just raw, messy, unpredictable usage.

Platforms like Maxim AI and Langfuse capture 100% of production queries with under 50ms overhead. They trace each request from query → retrieval → generation → response. You can see exactly where things break.

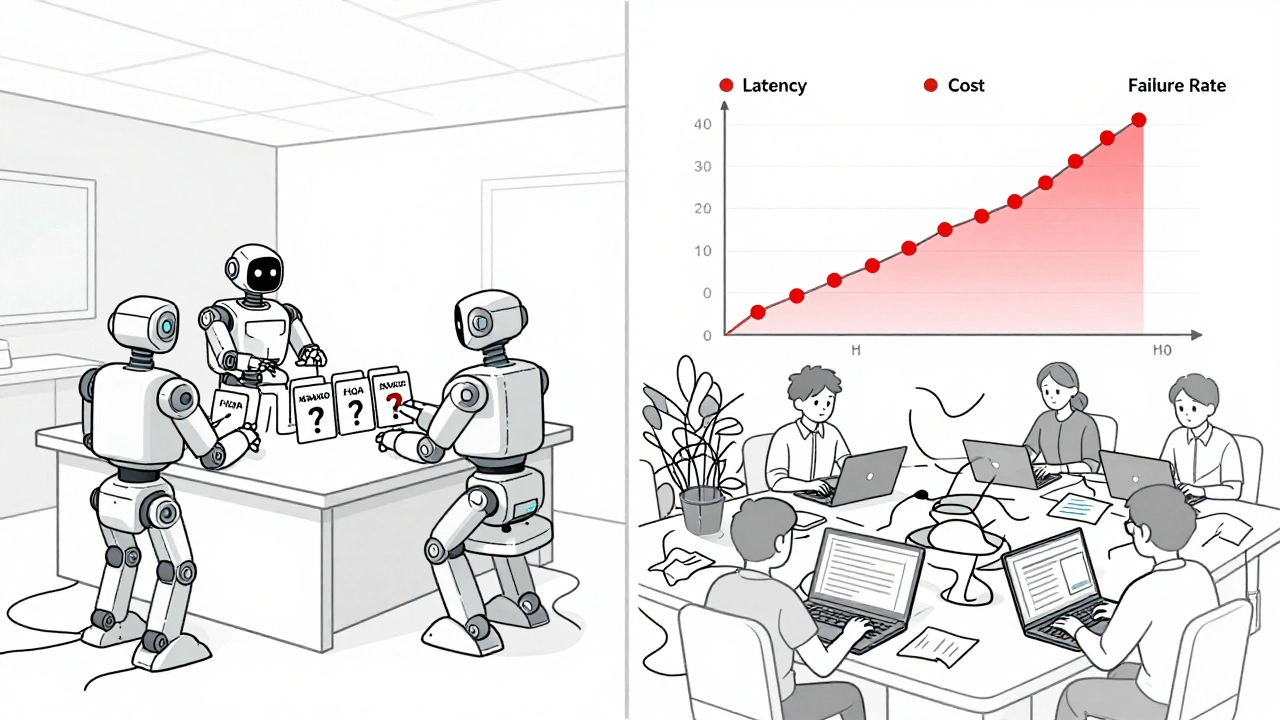

What do you track in production?

- Latency: Over 5 seconds? Users bounce. Target 1-3 seconds.

- Cost per query: $0.0002-$0.002 depending on context length. A single long query can cost 10x more than a short one.

- Failure rate: Are 12% of finance queries failing? That’s not noise-that’s a pattern.

- Query refinement rate: Are users rephrasing their questions 2-3 times? That’s a sign your retrieval is off.

- Session duration: If users leave after one question, your answers aren’t helping.

One startup CTO on HackerNews caught a retrieval failure affecting 12% of finance queries-something synthetic tests missed because their dataset was biased toward public company data. Real traffic exposed the blind spot.

Why 63% of RAG Failures Happen Between Retrieval and Generation

Evidently AI’s 2024 whitepaper found that most failures don’t happen in the LLM or the vector store-they happen in the handoff between them.

Example: The retrieval system finds three relevant documents. The LLM gets them, but ignores two because they’re “too long.” The answer is based on just one. Result? Incomplete, misleading response.

Without distributed tracing, you won’t see this. You’ll just see “answer is bad.” You won’t know if it’s a retrieval issue, a context truncation issue, or a prompt design flaw.

That’s why tools like Langfuse and Vellum show you the exact context passed to the LLM. You can see: “Here’s what the model saw. Here’s what it generated. Here’s the mismatch.”

Costs Add Up Fast-Here’s How to Control Them

Evaluating every single query with Ragas costs $15 per 1,000 queries. That’s $15,000 a month for 1 million queries. Most teams can’t afford that.

Here’s what works:

- Batch scoring: Randomly sample 10% of queries. Costs $1.50 per 1,000. Good for trends, bad for edge cases.

- Threshold alerts: Only evaluate queries that trigger high latency, high cost, or low confidence scores.

- Automated conversion: Vellum’s new feature turns high-latency real queries into synthetic tests automatically. No manual work.

One Reddit user saved $18,000/month by using Promptfoo to catch a context window overflow before it hit production. The system was pulling 10,000-character contexts when 2,000 was enough. Simple fix. Big savings.

Tools Compared: Open Source vs Enterprise

| Tool | Type | Cost | Setup Time | Best For | Weakness |

|---|---|---|---|---|---|

| Ragas | Open Source | $0 | 2-4 weeks | Team with ML engineers, budget constraints | High false positives, no tracing |

| Langfuse | Hybrid | $500-$3,000/month | 1-2 weeks | Teams needing full tracing + scoring | Complex UI, steep learning curve |

| Vellum | Enterprise | $1,500-$5,000/month | 3-5 days | Fast deployment, automated test generation | Less flexible for custom metrics |

| Maxim AI | Enterprise | $2,000-$5,000/month | 1 week | 100% production tracing, feedback loop automation | Expensive, less transparent |

| TruLens | Open Source | $0 | 3-6 weeks | Custom pipelines, deep control | Manual instrumentation for 8-12 components |

Enterprise tools like Vellum and Maxim AI offer “one-click test suite creation” and automated feedback loops. Open-source tools like Ragas give you control-but you pay in engineering hours. Confident AI estimates 20-40 hours/month of maintenance for open-source setups.

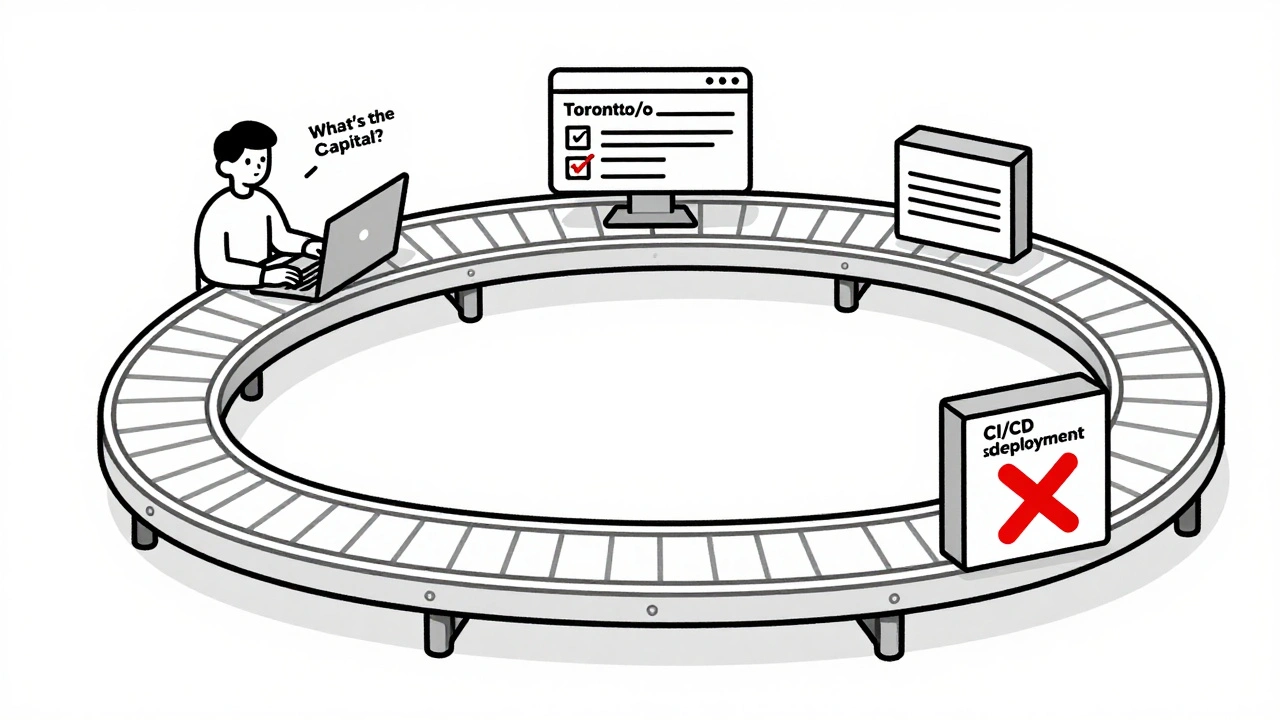

Building a Feedback Loop: Turn Failures Into Tests

The best RAG teams don’t just monitor-they learn.

Maxim AI’s CTO, Alex Chen, says: “The most effective systems convert production failures into synthetic test cases within 24 hours.”

How? When a real query fails-say, a user asks “What’s the capital of Canada?” and the system answers “Toronto”-you automatically:

- Log the query and context

- Generate a synthetic test case: “What’s the capital of Canada?”

- Add it to your test suite

- Run it in CI/CD

- Block future deployments if it fails again

Braintrust.dev found this approach prevents 83% of regressions. Manual testing? Only 22%.

What’s Coming Next in RAG Monitoring

By 2026, Gartner predicts 90% of enterprise RAG systems will have automated evaluation pipelines-up from 35% today.

New developments:

- Context Sufficiency: Ragas 0.1.3 now measures if retrieved documents contain enough info to answer the question-not just if they’re relevant.

- Automated Thresholds: Tools will adjust pass/fail thresholds based on historical performance, not static numbers.

- Adversarial Query Generation: Maxim AI’s new feature uses LLMs to create fake queries designed to break your system. Think of it as red-teaming for AI.

- Standardized Benchmarks: The RAG Evaluation Consortium, launched in January 2025, is building universal test datasets. No more comparing apples to oranges.

And cloud providers? AWS, Azure, and GCP are all building RAG monitoring into their AI platforms. This isn’t a niche tool anymore-it’s infrastructure.

Where to Start Today

If you’re just starting:

- Use Ragas for synthetic testing. Set up Recall@5, Faithfulness, and Answer Relevancy.

- Deploy Langfuse for tracing. Even the free tier gives you visibility.

- Sample 10% of real traffic and score it weekly. Look for patterns, not single errors.

- Turn one production failure into a synthetic test this week. That’s your feedback loop.

You don’t need a $5,000/month tool to start. You need consistency. Monitor. Learn. Repeat.

Common Mistakes to Avoid

- Only testing on clean, simple queries. Real users are messy.

- Ignoring cost per query. A 10% increase in context length can double your bill.

- Using the same synthetic dataset for 6 months. Your data drifts. Your tests should too.

- Not checking for prompt injection. Patronus.ai found 68% of RAG systems are vulnerable.

- Believing high metric scores = good user experience. LangChain’s data shows context relevancy correlates poorly with user satisfaction (r=0.37).

Focus on outcomes, not scores. If users keep rephrasing, your system isn’t working. If they stay on the page, it is.

Artificial Intelligence

Artificial Intelligence