General-purpose AI models like GPT-4 or Claude 3 can write essays, answer trivia, and even joke around. But when it comes to writing production-ready Python code, diagnosing a rare disease, or proving a complex theorem? They stumble. That’s where domain-specialized large language models come in. These aren’t just tweaked versions of general AI. They’re built from the ground up for one job: mastering the language, logic, and rules of a specific field. In 2025, this isn’t a nice-to-have anymore; it’s the standard for professionals who need accuracy, speed, and reliability.

Code-Specialized Models: The New Developer Co-Pilot

If you’re a developer, you’ve probably used an AI assistant like GitHub Copilot. What you might not know is that many code assistants now run on code-specialized models such as CodeLlama-70B or StarCoder2-15B rather than a general model like GPT-4 (Copilot itself has historically been built on OpenAI models). These models were trained on billions of lines of real code: open-source repositories, GitHub commits, Stack Overflow answers, and internal corporate codebases. The result? They don’t just suggest code. They understand context.

On the HumanEval benchmark, CodeLlama-70B gets 81.2% of coding tasks right. GPT-4? Only 67%. That gap isn’t academic. In real-world use, developers report 34% faster code generation and 22% fewer syntax errors across eight programming languages. One team at a fintech startup cut their debugging time in half after switching from a general model to CodeLlama. Why? Because it knows what a REST endpoint should look like in Node.js, how to structure a React component with TypeScript, and why you shouldn’t use eval() in JavaScript.
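HumanEval results like these are normally reported as pass@k, most often pass@1. That is the share of problems for which a generated solution passes the problem's unit tests. Below is a minimal sketch of the unbiased pass@k estimator introduced alongside HumanEval. The function names and sample counts are illustrative assumptions, not part of any official benchmark harness.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled for the problem
    c: completions that passed the problem's unit tests
    k: how many attempts the model is allowed (k <= n)
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Any k samples must include at least one passing completion.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement all fail.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k over all problems; each entry is (n, c) for one problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

Take three problems with 9, 0, and 5 passing completions out of 10 each. `benchmark_score([(10, 9), (10, 0), (10, 5)])` averages 0.9, 0.0, and 0.5, giving roughly 0.467, which would be reported as 46.7% pass@1. Sampling several completions per problem and applying the estimator gives a steadier score than judging a single sample per problem.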

But it’s not perfect. CodeLlama still struggles with complex business logic. A developer asked it to build a payment reconciliation system that handles currency conversion, tax rules, and failed transaction retries. The model generated clean, syntactically correct code, but it missed a key edge case: time zone differences affecting transaction timestamps. According to Meta AI’s own internal tests, misses like this reflect a 35% performance gap in understanding real-world constraints.
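The time zone edge case is easy to reproduce. In this minimal sketch, the two transactions and the "settle by UTC calendar day" rule are hypothetical. Comparing local wall-clock times gets both the order and the settlement day wrong. Timezone-aware datetimes get them right.

```python
from datetime import date, datetime, timedelta, timezone

# Fixed offsets keep the sketch deterministic. A production system would use
# IANA zones via zoneinfo so daylight-saving transitions are handled too.
NEW_YORK_EDT = timezone(timedelta(hours=-4), "EDT")
TOKYO_JST = timezone(timedelta(hours=9), "JST")


def settlement_day(ts: datetime) -> date:
    """Bucket a transaction into a UTC business day (a hypothetical policy)."""
    if ts.tzinfo is None:
        raise ValueError("naive timestamp: time zone unknown, cannot reconcile")
    return ts.astimezone(timezone.utc).date()


# By local wall-clock time, New York looks earlier than Tokyo...
ny_txn = datetime(2025, 3, 31, 22, 30, tzinfo=NEW_YORK_EDT)  # 02:30 UTC, April 1
tokyo_txn = datetime(2025, 4, 1, 8, 15, tzinfo=TOKYO_JST)    # 23:15 UTC, March 31

# ...but aware comparison uses absolute time: Tokyo happened first,
# and the two transactions settle on different UTC days.
```

Refusing naive timestamps outright, as `settlement_day` does, is a cheap guard against exactly the class of bug the model missed.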

Deployment is easier than you think. Most teams use Kubernetes to serve the model behind their IDE. The 70B version needs 80GB of GPU memory, but smaller models like StarCoder2-15B run on a single 24GB card. Cost-wise, you’re paying $0.87 per 1,000 tokens, almost 60% cheaper than using GPT-4. And compared with general models, these are less likely to hallucinate random libraries or outdated APIs; they stick closer to what’s proven in real codebases.
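The memory figures follow from simple arithmetic: the weights alone take (parameters × bits per parameter ÷ 8) bytes. Here is a rough sketch. The helper names are purely illustrative, and the estimate ignores everything except the weights.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """GB needed just to hold the weights (1 GB = 10**9 bytes).

    Real serving needs more: the KV cache, activations, and runtime overhead
    come on top of this figure.
    """
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billion * bits_per_param / 8


def monthly_cost_usd(tokens_per_month: int, usd_per_1k_tokens: float) -> float:
    """Spend at a flat price per 1,000 tokens."""
    return tokens_per_month / 1_000 * usd_per_1k_tokens
```

By that count:

- `weight_memory_gb(70, 16)` is 140.0 and `weight_memory_gb(70, 8)` is 70.0.
- `weight_memory_gb(15, 16)` is 30.0 and `weight_memory_gb(15, 8)` is 15.0.

So the 80GB and 24GB figures quoted above presumably assume 8-bit (or lower) quantized weights. That is an inference from the arithmetic, not something the figures themselves state.

At the quoted $0.87 per 1,000 tokens, a hypothetical 50 million tokens a month comes to about $43,500 (`monthly_cost_usd(50_000_000, 0.87)`).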

Medicine: When AI Gets a Medical License

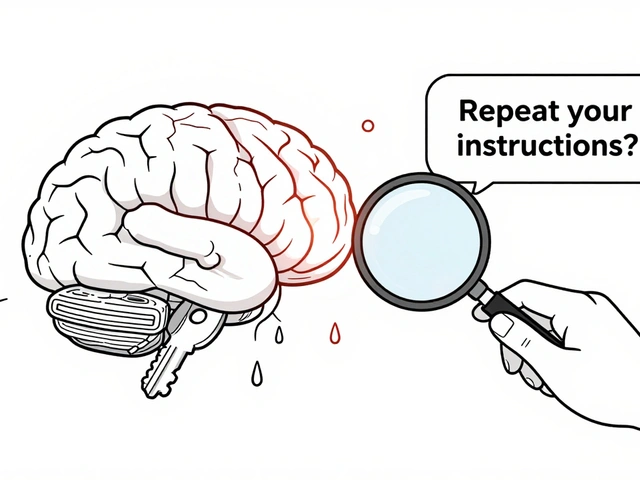

In healthcare, a wrong answer isn’t a typo; it’s a misdiagnosis. That’s why general LLMs are banned from clinical use in most hospitals. Enter Med-PaLM 2 and BioGPT. These models were trained on 15 million PubMed abstracts, 2 million full-text medical papers, and decades of clinical notes. They don’t just summarize research. They reason like doctors.

On the MedQA benchmark, Med-PaLM 2 scores 92.6% accuracy. That’s higher than the average human doctor. In a 2025 Mayo Clinic trial, Diabetica-7B reduced diagnostic errors in diabetes management by 22%. It caught a rare insulin resistance pattern that had been missed in three prior evaluations. The system didn’t replace doctors; it gave them a second pair of eyes trained on every published study on the topic.

But here’s the catch: speed matters. One Johns Hopkins study found that 47% of physicians rejected Med-PaLM 2 because responses took 18 seconds on average. That’s too long when you’re juggling 20 patients. The fix? Smaller models like Diabetica-7B, which runs on 24GB VRAM and cuts latency to under 500ms. It’s less powerful but fast enough for real-time use.
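An average can hide exactly the slow responses a busy clinician notices. Here is a hedged sketch of checking an endpoint against a latency budget:

- `measure_latency` times any zero-argument callable, standing in for a hypothetical call to your model server.
- `within_budget` judges the 95th percentile rather than the mean.

The 500ms default simply mirrors the figure above.

```python
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Call `call` repeatedly and summarize wall-clock latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least 2 runs to estimate a percentile")
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples_ms.append((time.perf_counter() - start) * 1_000)
    return {
        "mean_ms": statistics.fmean(samples_ms),
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        # "inclusive" interpolates within the observed range, never beyond it.
        "p95_ms": statistics.quantiles(samples_ms, n=20, method="inclusive")[-1],
        "max_ms": max(samples_ms),
    }


def within_budget(stats: dict[str, float], budget_ms: float = 500) -> bool:
    """Judge against the tail, not the average: p95 must fit the budget."""
    return stats["p95_ms"] <= budget_ms
```

In practice you would pass something like `lambda: send_prompt(text)`, where `send_prompt` is a placeholder for your own client code, and use a few dozen runs.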

Integration is the real bottleneck. Hospitals use Epic, Cerner, and Allscripts, systems built 15 years ago. Getting a model to talk to them takes 6-18 months. One NHS hospital spent $420,000 and 8 months just to connect BioGPT to their electronic health records. And compliance? HIPAA isn’t optional. These models must have zero data retention. No patient info is stored. No logs. No backups. That’s why most deployments use on-premise NVIDIA A100s, not cloud APIs.
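At the application layer, "no logs" can be enforced with a standard-library `logging` filter. Here is a minimal sketch. It assumes that code handling patient text tags those log calls with `extra={"phi": True}`, a convention invented for this example.

```python
import logging


class DropPatientData(logging.Filter):
    """Handler filter that refuses to emit records flagged as containing PHI."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Records logged with extra={"phi": True} are dropped before any output.
        return not getattr(record, "phi", False)


def build_logger(name: str = "clinical_llm") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    # A handler-level filter also sees records propagated from child loggers.
    handler.addFilter(DropPatientData())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

With this in place:

- `log.info("model call took %d ms", 430)` is written.
- `log.info("prompt: %s", text, extra={"phi": True})` is silently dropped.

It only protects what developers remember to tag. It also says nothing about backups or the model server's own storage, so it is one layer of a zero-retention setup rather than the whole of it.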

Doctors love the accuracy. They hate the learning curve. A 2025 survey found that 68% of clinicians needed 6-8 weeks of training just to prompt the model correctly. “You can’t just say ‘What’s this rash?’” said Dr. Sarah Kim on Reddit. “You need to say ‘A 58-year-old male with Type 2 diabetes presents with a pruritic, erythematous rash on the lower extremities. Differential diagnoses?’” That’s not user-friendly. But it’s what accuracy demands.

Math: Where AI Finally Outsmarts Humans

Math isn’t about memorizing formulas. It’s about logic, abstraction, and proof. General LLMs fail here because they guess patterns, not derive truths. Enter MathGLM-13B, a model built by Tsinghua University and released in January 2025. It doesn’t just solve equations. It constructs proofs.

On the MATH dataset, a collection of 12,500 competition mathematics problems, MathGLM hits 85.7% accuracy. GPT-4? 58.1%. The difference? MathGLM has symbolic reasoning modules. It understands variables as abstract entities, not strings. It can manipulate integrals, prove theorems, and even spot flaws in published papers. One researcher used it to verify a 30-page proof in number theory that had taken his team six months. The model flagged an error in step 17 within 12 minutes.
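The source doesn't describe how MathGLM's symbolic modules work. The general idea of treating variables as abstract entities rather than strings can still be illustrated with a textbook technique: an expression tree with symbolic differentiation. This is a generic sketch, not MathGLM's architecture.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


@dataclass(frozen=True)
class Mul:
    left: "Expr"
    right: "Expr"


@dataclass(frozen=True)
class Pow:
    base: "Expr"
    exponent: int  # constant integer exponent keeps the sketch short


Expr = Union[Const, Var, Add, Mul, Pow]


def diff(e: Expr, x: str) -> Expr:
    """Symbolic derivative of e with respect to the variable named x."""
    if isinstance(e, Const):
        return Const(0)
    if isinstance(e, Var):
        return Const(1 if e.name == x else 0)
    if isinstance(e, Add):
        return Add(diff(e.left, x), diff(e.right, x))
    if isinstance(e, Mul):  # product rule
        return Add(Mul(diff(e.left, x), e.right), Mul(e.left, diff(e.right, x)))
    if isinstance(e, Pow):  # power rule, times the chain-rule factor d(base)/dx
        return Mul(Mul(Const(e.exponent), Pow(e.base, e.exponent - 1)), diff(e.base, x))
    raise TypeError(f"unsupported node: {e!r}")


def evaluate(e: Expr, env: dict[str, float]) -> float:
    """Numeric value of e, given values for its variables."""
    if isinstance(e, Const):
        return e.value
    if isinstance(e, Var):
        return env[e.name]
    if isinstance(e, Add):
        return evaluate(e.left, env) + evaluate(e.right, env)
    if isinstance(e, Mul):
        return evaluate(e.left, env) * evaluate(e.right, env)
    if isinstance(e, Pow):
        return evaluate(e.base, env) ** e.exponent
    raise TypeError(f"unsupported node: {e!r}")


# f(x) = x^3 - 6x^2 + 9x + 1, built as a tree rather than a string
x = Var("x")
f = Add(Add(Add(Pow(x, 3), Mul(Const(-6), Pow(x, 2))), Mul(Const(9), x)), Const(1))
df = diff(f, "x")
```

Because `x` is a `Var` node, the product, power, and chain rules apply structurally. `evaluate(df, {"x": 1})` and `evaluate(df, {"x": 3})` both return 0, the critical points of the function in the grad-student-style prompt further down. The derivative tree is left unsimplified; a real computer algebra system would also fold constants and cancel terms.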

But it’s not a magic wand. MathGLM fails on open-ended conjectures. If you ask it to prove the Riemann Hypothesis, it’ll give you a well-structured essay on why it’s hard, not a proof. It’s great for homework, exams, and research drafts. Not for breakthroughs.

Adoption is slow. Only 41% of academic institutions use math-specialized models. Why? Because you need to know math to use them. A student asked MathGLM to “solve this problem” and pasted a photo of a calculus question. The model responded with a 500-word explanation of the chain rule. The student didn’t understand it. The model didn’t know the problem was about optimization. You need to phrase questions like a grad student: “Prove that the function f(x) = x³ - 6x² + 9x + 1 has exactly one local maximum on the interval [0,4].”
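For reference, here is the argument that prompt is asking for, worked by hand with standard calculus (not model output). "Local maximum" is read in the usual sense of an interior point of the interval.

```latex
\begin{aligned}
f(x)  &= x^3 - 6x^2 + 9x + 1 \\
f'(x) &= 3x^2 - 12x + 9 = 3(x - 1)(x - 3) \\
f'(x) = 0 &\iff x = 1 \text{ or } x = 3, \quad \text{both in } (0, 4) \\
f''(x) &= 6x - 12, \qquad f''(1) = -6 < 0, \qquad f''(3) = 6 > 0
\end{aligned}
```

So x = 1 is the only interior local maximum, with f(1) = 5, and x = 3 is a local minimum, with f(3) = 1. The interior reading matters. f is increasing on (3, 4], so if one-sided extrema at endpoints counted, x = 4 (where f(4) = 5) would qualify too. That is the kind of precision the phrasing has to supply.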

Cost isn’t the issue. MathGLM-13B runs on a single 24GB GPU. The problem is culture. Most math departments still trust pen-and-paper. But that’s changing. Top pharmaceutical companies use math-specialized models to simulate drug interactions. One firm cut simulation time from 72 hours to 9 hours. That’s a $2.1 million annual savings.

How They Compare: Speed, Cost, Accuracy

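Here’s how the top models stack up across the three domains. Each accuracy figure comes from its own domain’s benchmark, so compare scores within a domain rather than across domains: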

| Model | Domain | Accuracy | Parameters | VRAM Required | Cost per 1K Tokens | Latency |
|---|---|---|---|---|---|---|
| CodeLlama-70B | Code | 81.2% | 70B | 80GB | $0.87 | 320ms |
| StarCoder2-15B | Code | 79.8% | 15B | 24GB | $0.79 | 280ms |
| Med-PaLM 2 | Medicine | 92.6% | 540B | 80GB | $2.10 | 18s |
| Diabetica-7B | Medicine | 89.1% | 7B | 24GB | $0.91 | 450ms |
| MathGLM-13B | Math | 85.7% | 13B | 24GB | $0.82 | 410ms |

Notice the pattern? The biggest models aren’t always the best. Med-PaLM 2 is powerful but slow and expensive. Diabetica-7B is smaller, faster, and cheaper, and still beats most doctors. CodeLlama is the heavyweight champion for code. MathGLM punches above its weight. The trend? Smaller, faster, cheaper models are winning.
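The table can also be read as a constrained choice: given a domain, a VRAM budget, and a latency ceiling, take the most accurate model that fits. This is a hedged sketch. `ModelSpec` and `pick_model` are hypothetical, and because each domain uses its own benchmark, accuracy is only compared within a domain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelSpec:
    name: str
    domain: str
    accuracy_pct: float   # domain benchmark score, comparable only within a domain
    vram_gb: int
    usd_per_1k_tokens: float
    latency_ms: int


# Values transcribed from the comparison table; Med-PaLM 2's 18s is 18,000 ms.
MODELS = [
    ModelSpec("CodeLlama-70B", "Code", 81.2, 80, 0.87, 320),
    ModelSpec("StarCoder2-15B", "Code", 79.8, 24, 0.79, 280),
    ModelSpec("Med-PaLM 2", "Medicine", 92.6, 80, 2.10, 18_000),
    ModelSpec("Diabetica-7B", "Medicine", 89.1, 24, 0.91, 450),
    ModelSpec("MathGLM-13B", "Math", 85.7, 24, 0.82, 410),
]


def pick_model(domain: str, max_vram_gb: int, max_latency_ms: int) -> Optional[ModelSpec]:
    """Most accurate model in `domain` that fits the VRAM and latency budgets."""
    fits = [
        m for m in MODELS
        if m.domain == domain
        and m.vram_gb <= max_vram_gb
        and m.latency_ms <= max_latency_ms
    ]
    return max(fits, key=lambda m: m.accuracy_pct, default=None)
```

Take an 80GB card with a one-second ceiling. `pick_model("Medicine", 80, 1_000)` returns Diabetica-7B, because Med-PaLM 2's 18s latency rules it out. Relax the ceiling to 20 seconds and Med-PaLM 2 wins on accuracy.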

Why This Isn’t Just a Trend: It’s a Shift

Back in 2022, everyone thought we’d all use one AI for everything. Now? We’re seeing specialization. Just like you wouldn’t use a hammer to do brain surgery, you shouldn’t use GPT-4 to write production code or diagnose cancer.

By Q4 2025, 78% of new enterprise AI deployments will be domain-specialized, up from 54% in 2024. Healthcare leads because regulation forces it. Coding follows because developers demand precision. Math is catching up because research can’t afford errors.

The future isn’t bigger models. It’s smarter ones. Hyper-specialized models are already coming-think “colonoscopy report generator” or “Python financial modeling assistant.” These won’t be general-purpose. They’ll be single-purpose tools, fine-tuned for one task, one workflow, one job.

And here’s the kicker: you don’t need a PhD to use them. You just need to learn how to ask the right question. In medicine, that means structured clinical language. In code, it means clear function specs. In math, it means precise notation.

The AI revolution isn’t about replacing humans. It’s about giving them superpowers. The ones who win are those who learn to work with these tools, not against them.

What’s Next?

If you’re a developer: Try CodeLlama-7B on Hugging Face. It’s free. Run it locally. See how it handles your next project.

If you’re in healthcare: Ask your IT team about Diabetica-7B. Start small-use it for drafting patient summaries, not diagnoses. Get feedback from nurses and doctors. They’ll tell you what works.

If you’re in math or research: Use MathGLM-13B to check your proofs. Don’t trust it blindly. But don’t ignore it either. It’s faster than your advisor.

Don’t wait for the perfect model. Use what’s here. The field is moving fast. The best time to start was yesterday. The second best? Right now.

Artificial Intelligence


Geet Ramchandani

December 14, 2025 at 08:01

Okay so let me get this straight: you’re telling me we’re now supposed to trust AI to write our code, diagnose diseases, and prove theorems? Cool. So next they’ll be writing our breakup texts and judging our life choices too? I’ve seen CodeLlama generate a perfectly valid Python script that deleted a production database because it thought ‘rm -rf /’ was a ‘best practice optimization.’ And don’t even get me started on Med-PaLM 2. I once saw it recommend a steroid cocktail for a patient with a fungal infection because ‘the abstract mentioned steroids and inflammation.’ Accuracy? Sure. But context? Nah. This isn’t progress. It’s just automation with a side of existential risk.

Pooja Kalra

December 14, 2025 at 19:24

There is a quiet violence in pretending that tools can replace wisdom. The code is not the intention. The diagnosis is not the suffering. The proof is not the truth. We have outsourced our attention, then called it efficiency. These models do not understand; they simulate. And in simulating, they flatten the human complexity that makes these domains sacred. We are not building assistants. We are building mirrors that reflect our own arrogance back at us, polished in GPU memory.

Sumit SM

December 15, 2025 at 07:05

Wait, hold on, let me just say this: we’re talking about models that can outperform doctors and mathematicians, and you’re still worried about ‘latency’ and ‘cost per token’?!?!? That’s like complaining about the weight of a Ferrari while it’s doing 200mph on the Autobahn! The real issue isn’t the tech; it’s the people clinging to pen-and-paper like it’s a spiritual practice! MathGLM found an error in a 30-page proof in 12 minutes. Do you know how many grad students would’ve spent six months staring at the same page, crying into their coffee? Wake up! The future isn’t coming; it’s already here, and it’s running on a 24GB GPU in someone’s basement!

Jen Deschambeault

December 16, 2025 at 15:14

Just tried Diabetica-7B for drafting patient notes. It’s wild how much time it saves. Not perfect, but way better than typing everything manually. My nurse said it even got the tone right: professional but not robotic. We’re not replacing humans. We’re just removing the busywork. Honestly? This feels like the first time tech actually helped instead of complicating things.

Kayla Ellsworth

December 17, 2025 at 14:08

78% of enterprise AI deployments will be domain-specialized? Cute. That’s the same percentage of people who believe ‘organic’ means ‘not made by humans.’ The real trend? Companies buying shiny new AI tools because their competitors are, not because they actually need them. And then pretending they’re ‘innovating’ while their employees spend six months integrating it into a 2008-era EHR system that still uses XML. This isn’t progress. It’s corporate theater with better marketing.

Nathaniel Petrovick

December 18, 2025 at 18:25

Used CodeLlama on a side project last week. Was shocked how good it was. Didn’t even need to debug much. Just told it what I wanted, and boom: clean, working code. I’m not a pro dev, but it made me feel like one. Also cheaper than GPT-4 by a mile. No idea why more people aren’t using this.

Honey Jonson

December 20, 2025 at 05:39

ok so i tried mathglm on my calc hw and it just gave me a whole essay about the chain rule when i just wanted the derivative?? like?? i pasted a pic of the problem and it went full professor mode. i was like bro i just need the answer not a lecture. also it misspelled ‘derivative’ once. but hey at least it tried??

Sally McElroy

December 22, 2025 at 04:52

Let’s be honest: this whole ‘domain-specialized AI’ trend is just a fancy way of saying ‘we gave up on teaching people how to think.’ You want doctors to stop learning? You want engineers to stop debugging? You want mathematicians to stop reasoning? No. You want them to become prompt engineers. And that’s not empowerment; it’s dumbing down under the guise of efficiency. These models aren’t tools; they’re crutches. And the people who use them without understanding the underlying logic? They’re not future-proof. They’re future-dead.

Jennifer Kaiser

December 23, 2025 at 08:29

I work in medical AI research. I’ve seen the fear. I’ve seen the hype. But here’s what I’ve learned: the best outcomes happen when AI doesn’t replace the doctor but gives the doctor more time to be a doctor. That 18-second delay? It’s not the model’s fault. It’s the UI’s. The real problem is we treat these tools like magic boxes instead of collaborators. If you train clinicians to speak like researchers, not like patients, you unlock the real power. And yes, it’s hard. But so was learning how to use an EHR. This is just the next step. We don’t need to fear it. We need to design it better.