PCables AI Interconnects

AI-generated content must meet WCAG accessibility standards just like human-created content. Learn how to apply assistive technology requirements, avoid legal risks, and build truly inclusive AI systems.

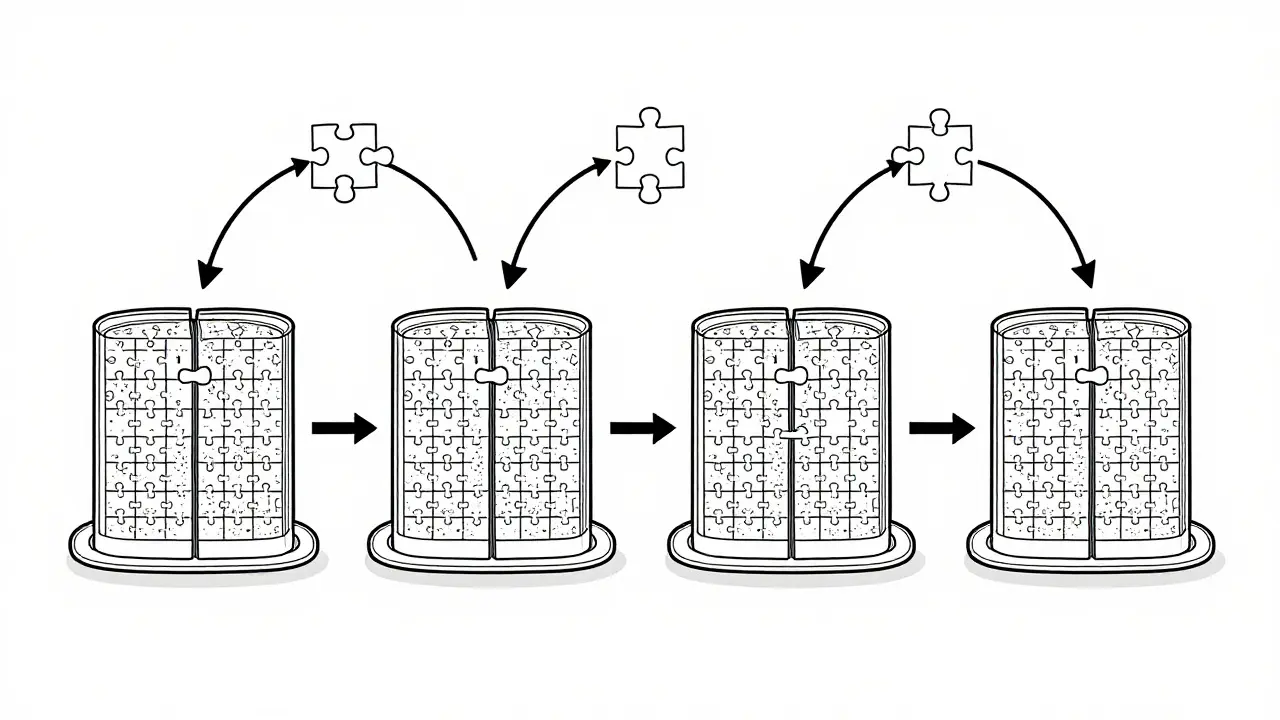

Tensor parallelism lets you run massive LLMs across multiple GPUs by splitting each layer's weight matrices across devices. Learn how it works, why NVLink matters, which frameworks support it, and how to avoid common pitfalls in deployment.

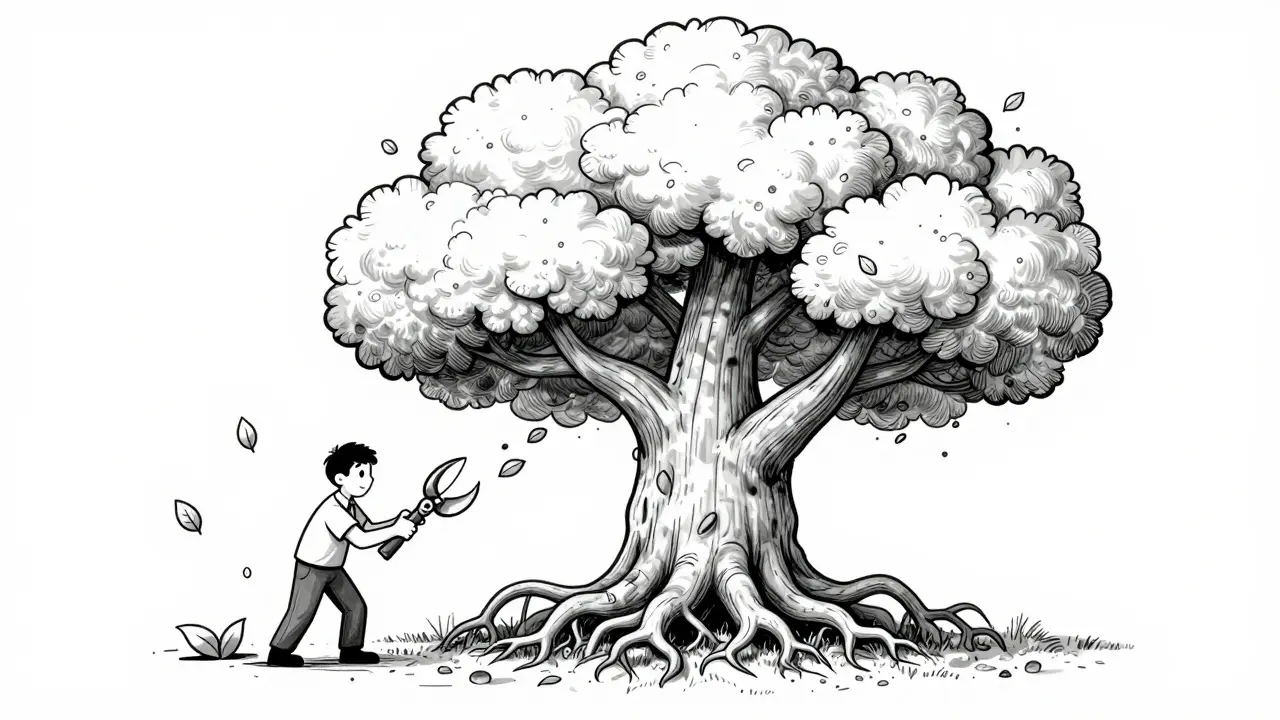

Combining pruning and quantization speeds up LLM inference by up to 6x while preserving accuracy. Learn how HWPQ's unified approach with FP8 and 2:4 sparsity delivers real-world speedups without hardware changes.

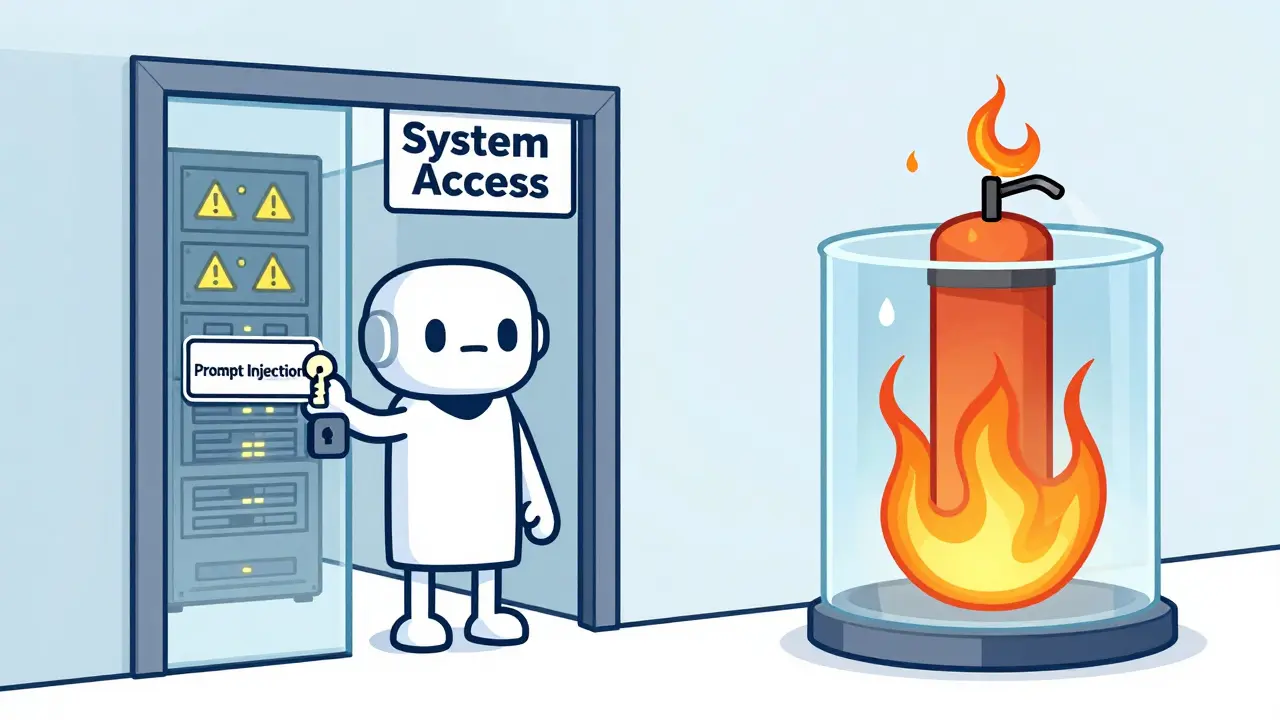

Sandboxing external actions in LLM agents prevents dangerous tool access by isolating processes. Firecracker, gVisor, and Nix offer different trade-offs between security and performance. Learn which method fits your use case.

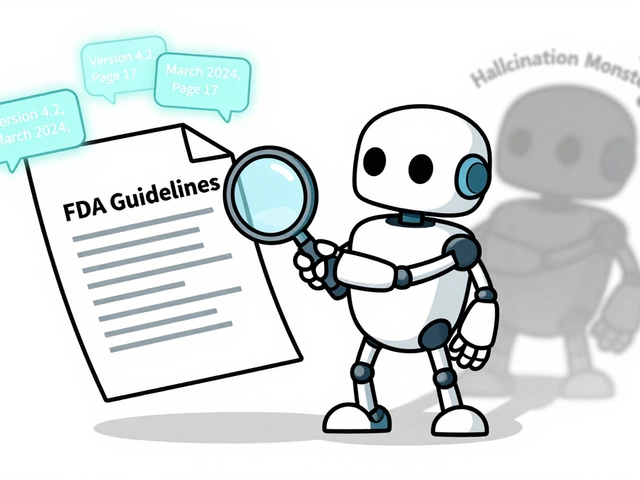

Structured prompts using role, rules, and context are the key to reliable enterprise LLM use. Learn how role-based prompting, chain-of-thought reasoning, and iterative testing improve accuracy, reduce hallucinations, and align outputs with business needs.

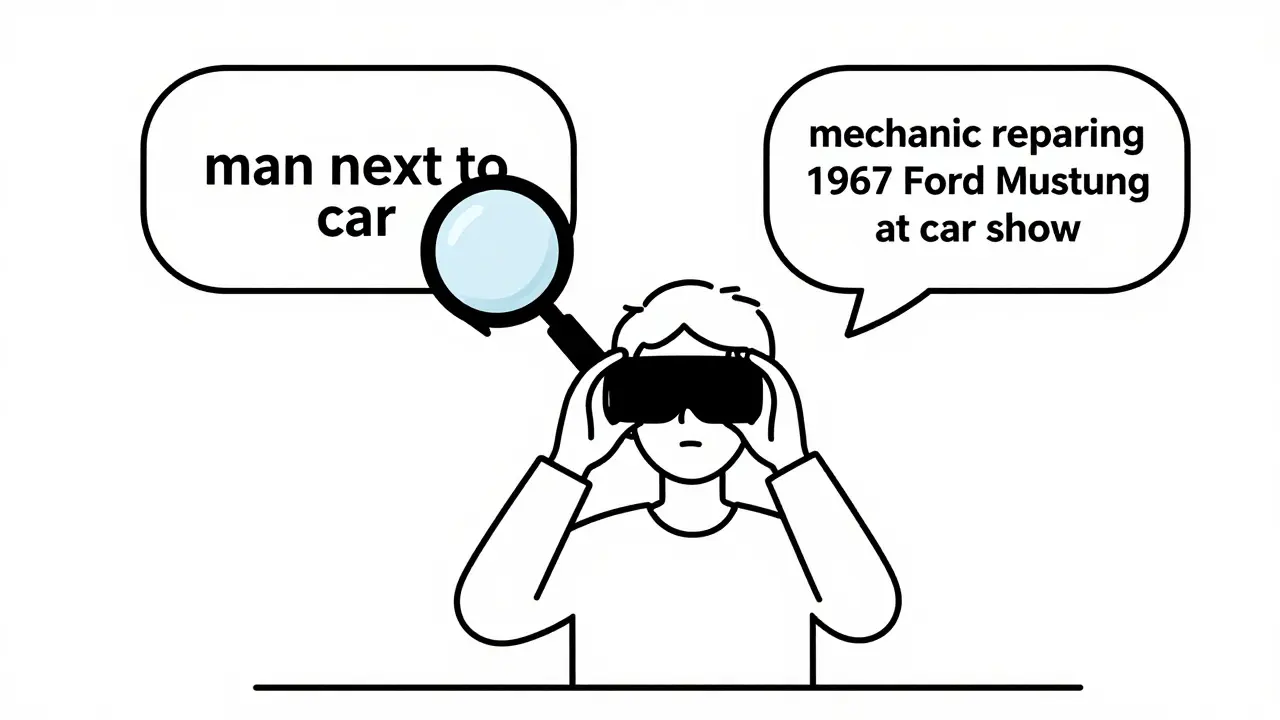

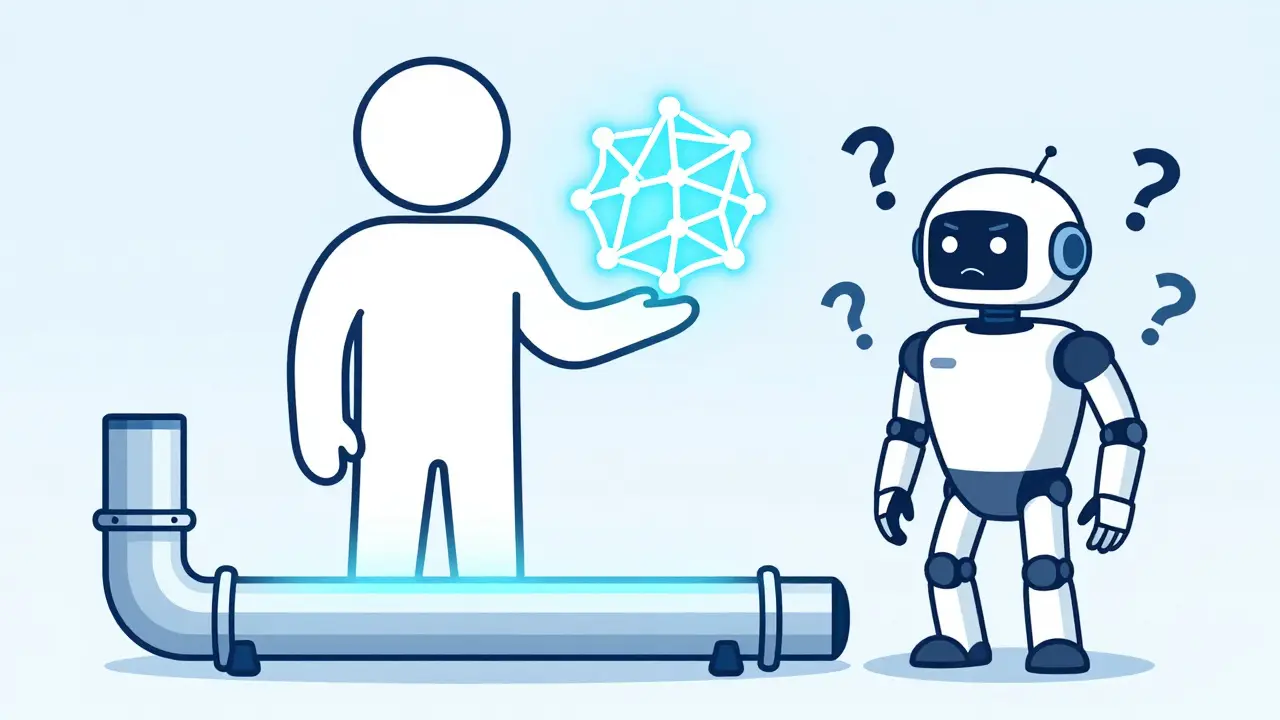

Choosing the right embedding model for your enterprise RAG pipeline isn't about benchmarks - it's about speed, security, and domain-specific accuracy. Learn what actually works in production and how to avoid costly mistakes.

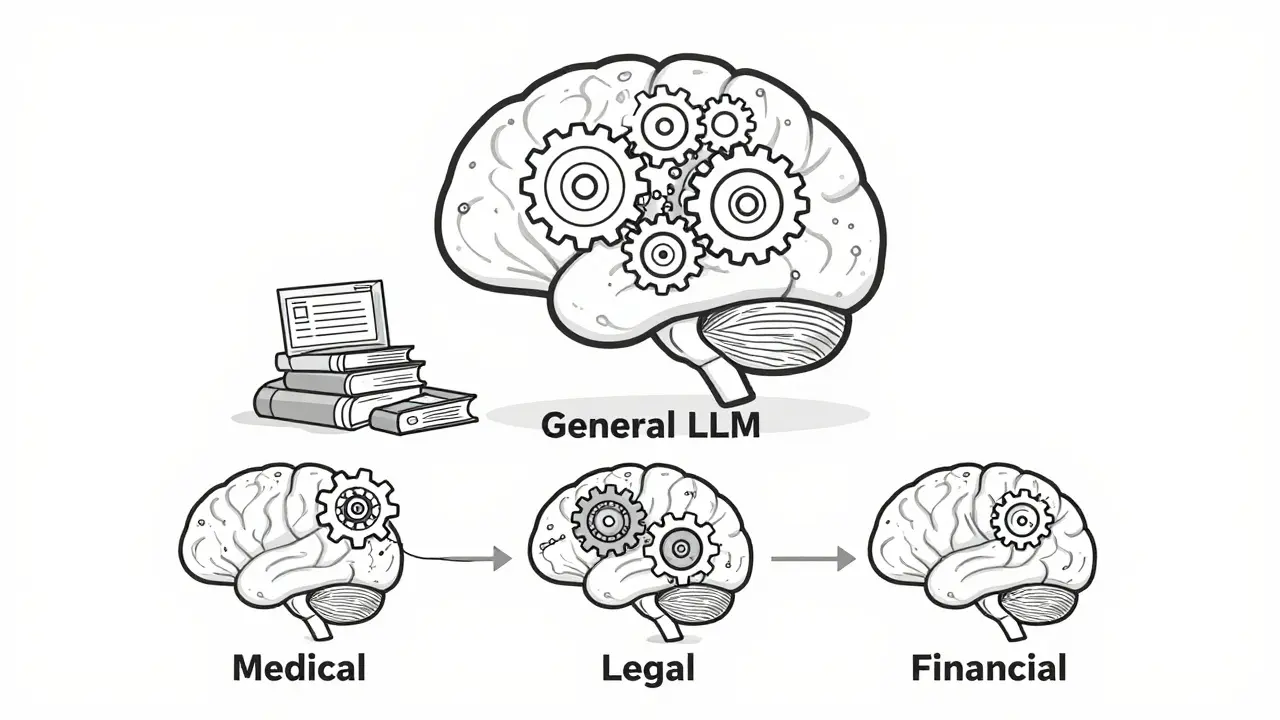

Domain adaptation in NLP lets you fine-tune large language models to understand specialized fields like medicine, law, or finance. Learn how it works, what methods deliver the best results, and why it's essential for real-world AI applications.

Vibe coding lets developers build full-stack apps using AI prompts instead of writing every line of code. Learn what to expect, how it works, where it shines, and where it fails - with real data from 2026.

Template repositories with pre-approved dependencies for vibe coding cut development time by up to 67% and reduce AI errors. Learn the top 4 templates, real risks, and who should use them in 2026.

Domain experts are now turning spreadsheets into full web and mobile apps using vibe coding, a method that uses AI to generate code from plain-language prompts. No coding skills required.

Generative AI is reshaping leadership - not by replacing humans, but by freeing them to focus on what matters most: people, strategy, and trust. Learn how top leaders are using it to drive real change.

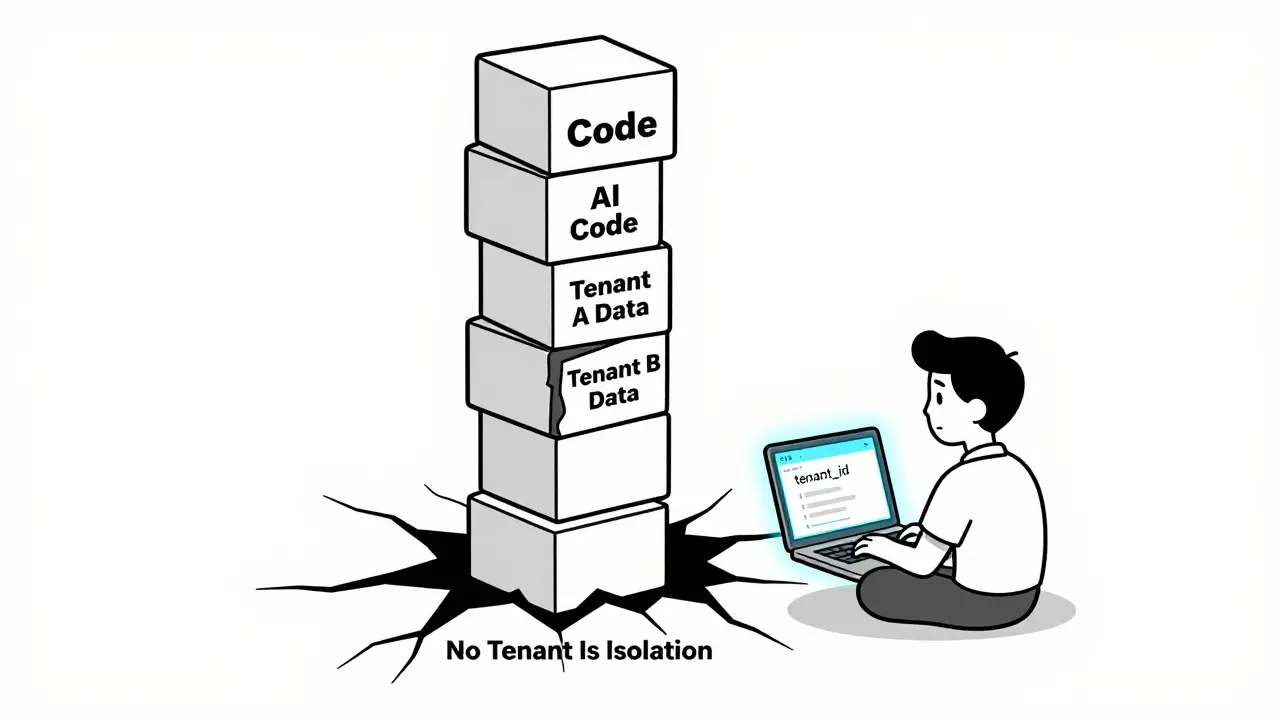

Multi-tenancy in vibe-coded SaaS requires careful isolation, authentication, and cost controls from day one. AI can speed up development, but only if you know how to prompt it well. Learn how to avoid costly mistakes and build secure, scalable SaaS apps.

Artificial Intelligence
