Healthcare administrators spend hours every day on paperwork that doesn’t directly help patients. One of the biggest time-sinks? Writing prior authorization letters and clinical summaries. These documents are required by insurance companies before approving treatments, tests, or prescriptions. But they’re tedious, repetitive, and often require digging through messy electronic health records. On average, a clinician spends 15.3 minutes per prior auth request. Multiply that by hundreds of cases a week, and you’re looking at days lost to bureaucracy - not care.

Enter generative AI. It’s not science fiction anymore. In 2025, AI tools are already cutting that 15-minute task down to under 5 minutes. And it’s not just about speed. It’s about reducing burnout, cutting denials, and letting doctors focus on patients again.
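To put the per-request numbers in perspective, here is a rough calculation in Python. The 15.3-minute and 5-minute figures come from the paragraphs above. The weekly volume of 300 requests is a hypothetical example, and using exactly 5 minutes for the AI-assisted time is an assumption: the text says "under 5 minutes", so real savings would be at least this large.

```python
# Back-of-the-envelope time cost of prior auth paperwork.
# Per-request times are the article's figures; the weekly volume
# used below is a hypothetical example, not a measured number.

MANUAL_MINUTES = 15.3       # average manual time per request
AI_ASSISTED_MINUTES = 5.0   # "under 5 minutes" with AI; 5 is the upper bound


def weekly_hours(requests_per_week: int, minutes_per_request: float) -> float:
    """Total hours per week spent on prior auth requests."""
    return requests_per_week * minutes_per_request / 60


def weekly_hours_saved(requests_per_week: int) -> float:
    """Hours per week freed by moving from manual to AI-assisted drafting."""
    manual = weekly_hours(requests_per_week, MANUAL_MINUTES)
    assisted = weekly_hours(requests_per_week, AI_ASSISTED_MINUTES)
    return manual - assisted


if __name__ == "__main__":
    volume = 300  # hypothetical: 300 requests per week
    print(f"Manual:      {weekly_hours(volume, MANUAL_MINUTES):.1f} h/week")
    print(f"AI-assisted: {weekly_hours(volume, AI_ASSISTED_MINUTES):.1f} h/week")
    print(f"Saved:       {weekly_hours_saved(volume):.1f} h/week")
```

For 300 requests a week, this prints 76.5 hours manual versus 25.0 hours AI-assisted, or about 51.5 hours saved: more than one full-time work week.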

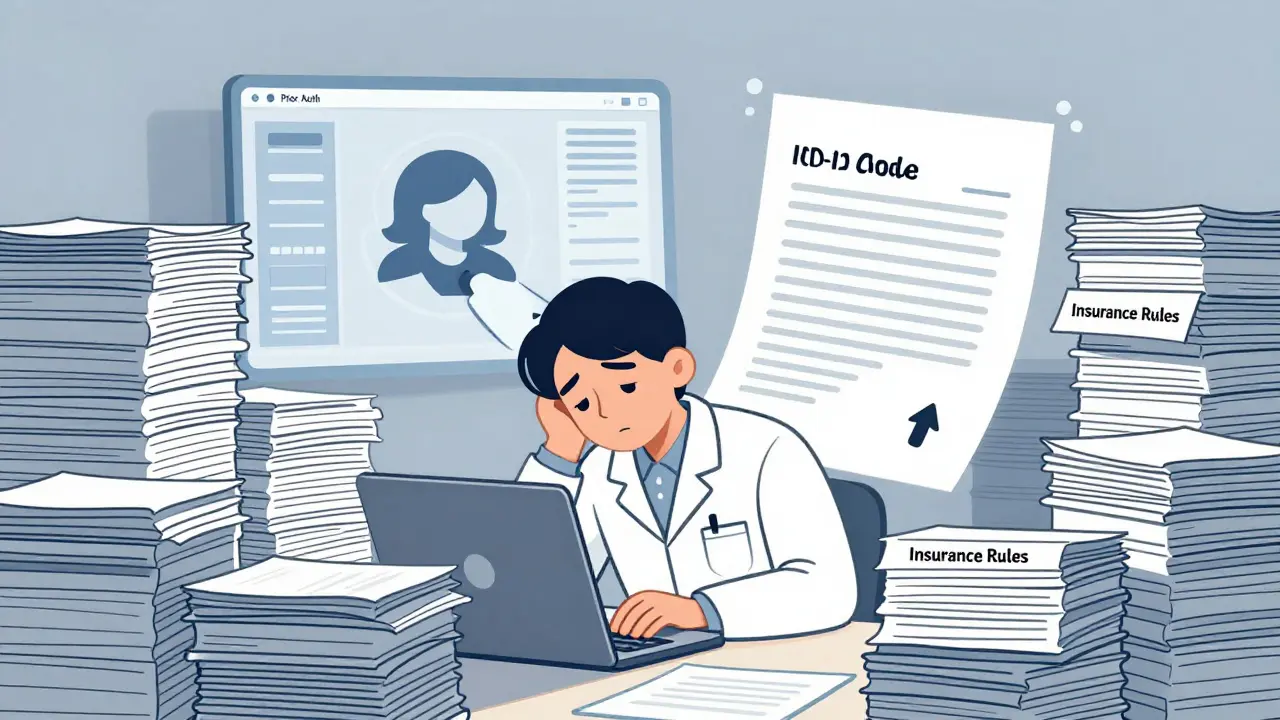

Why Prior Authorization Is a Nightmare

Prior authorization isn’t just paperwork. It’s a maze. Each insurance plan has its own rules. One insurer wants a 300-word letter. Another needs a specific ICD-10 code in bold. Some require lab results attached. Others demand prior treatment history. And if you miss one detail? The claim gets denied. Then you start over.

Physicians aren’t trained to be insurance clerks. Yet they’re the ones stuck doing it. A 2024 study found that clinicians spend 15-30% of their workday on administrative tasks. Over a 40-hour week, that’s roughly 6-12 hours spent on forms instead of patients. And it’s getting worse. As new drugs and treatments emerge, insurers are demanding more documentation, not less.

The cost? Around $23-31 billion a year in the U.S. alone. That’s billions spent on phone calls, faxes, and letters - not on patient care.

How Generative AI Fixes This

Generative AI doesn’t just rewrite text. It understands context. It pulls data from electronic health records (EHRs) like Epic or Cerner, reads clinical notes, identifies key diagnoses, matches them to insurance requirements, and auto-generates a complete prior auth letter in seconds.

Here’s how it works in practice:

- The clinician orders a test or treatment in the EHR.

- The AI system scans the patient’s history - lab results, medications, previous approvals, notes from specialists.

- It identifies which insurer is involved and pulls their specific prior auth rules.

- It writes a clear, compliant letter using medical terminology and proper coding.

- The admin or clinician reviews it in under a minute and hits send.
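The five steps above can be sketched as a small Python pipeline. Everything here is hypothetical: `PatientRecord`, `draft_letter`, `approve`, and the `INSURER_RULES` table are illustrative names, not any vendor's API, and a plain template stands in for the generative model a real tool would call. The point is the shape of the flow: chart data and insurer rules go in, a draft comes out, and nothing counts as approved until a named person signs off.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PatientRecord:
    """Hypothetical, minimal slice of an EHR chart (step 2 input)."""
    name: str
    insurer: str
    diagnoses: list[str]                  # ICD-10 codes
    labs: dict[str, str]                  # test name -> result
    medications: list[str]
    prior_treatments: list[str] = field(default_factory=list)


@dataclass
class Draft:
    text: str
    approved_by: Optional[str] = None     # set only by a human reviewer


# Hypothetical per-insurer rules (step 3). Real payer rules are far richer.
INSURER_RULES = {
    "PlanA": {"include_prior_treatments": True},
    "PlanB": {"include_prior_treatments": False},
}


def draft_letter(service: str, reason: str, patient: PatientRecord) -> Draft:
    """Steps 2-4: gather chart data, apply insurer rules, assemble a draft.

    A production system would call a generative model here; this sketch
    fills a plain template so the data flow stays visible.
    """
    rules = INSURER_RULES[patient.insurer]
    lines = [
        f"Re: Prior authorization request for {service}",
        f"Patient: {patient.name}",
        f"Diagnoses: {', '.join(patient.diagnoses)}",
        f"Clinical rationale: {reason}",
        "Relevant labs: " + "; ".join(f"{k} {v}" for k, v in patient.labs.items()),
        f"Current medications: {', '.join(patient.medications)}",
    ]
    if rules["include_prior_treatments"] and patient.prior_treatments:
        lines.append(f"Prior treatments: {', '.join(patient.prior_treatments)}")
    return Draft(text="\n".join(lines))


def approve(draft: Draft, reviewer: str) -> Draft:
    """Step 5: nothing is sent until a named human reviewer signs off."""
    if not reviewer.strip():
        raise ValueError("every draft needs a named human reviewer")
    draft.approved_by = reviewer
    return draft


if __name__ == "__main__":
    patient = PatientRecord(
        name="Jane Doe",                       # hypothetical patient
        insurer="PlanA",
        diagnoses=["M54.50"],                  # low back pain, unspecified
        labs={"eGFR": "52 mL/min/1.73m2"},
        medications=["naproxen 500 mg"],
        prior_treatments=["6 weeks of physical therapy"],
    )
    draft = draft_letter("Lumbar spine MRI",
                         "Low back pain persisting after conservative care", patient)
    print(draft.text)
    approve(draft, reviewer="Dr. Smith")
```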

Tools like Microsoft’s Nuance DAX Copilot and Epic’s Samantha feature do this in real time. They’re not just typing - they’re reasoning. They know that if a patient has Stage 3 kidney disease and needs an MRI, the request has to justify medical necessity beyond just the diagnosis code. They know which insurers require prior imaging approvals and which ones don’t.
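In its simplest form, the insurer-specific reasoning described above comes down to comparing what a payer requires against what is already attached. This Python sketch assumes a hypothetical requirement table (`MRI_REQUIREMENTS`) and a hypothetical policy that any CKD stage 3 diagnosis (ICD-10-CM codes starting with N18.3) triggers a written medical-necessity note. Real payer policies are far more detailed and change often.

```python
# Hypothetical requirement table: items each insurer expects with an MRI request.
MRI_REQUIREMENTS = {
    "PlanA": {"icd10_code", "prior_imaging_report"},
    "PlanB": {"icd10_code", "lab_results"},
}

# Hypothetical policy: for these ICD-10-CM code prefixes the code alone is not
# enough, so a written medical-necessity note is always required.
# N18.3 covers chronic kidney disease stage 3 (N18.30, N18.31, N18.32).
NARRATIVE_PREFIXES = ("N18.3",)


def missing_items(insurer: str, diagnoses: list[str], provided: set[str]) -> list[str]:
    """Return the required items not yet attached to the request, sorted."""
    if insurer not in MRI_REQUIREMENTS:
        # Unknown payer: don't guess, hand it to a person.
        return ["insurer rules not on file"]
    required = set(MRI_REQUIREMENTS[insurer])
    # str.startswith accepts a tuple of prefixes.
    if any(code.startswith(NARRATIVE_PREFIXES) for code in diagnoses):
        required.add("medical_necessity_note")
    return sorted(required - provided)
```

For a PlanB MRI request for a patient coded N18.31 with only the ICD-10 code attached, `missing_items("PlanB", ["N18.31"], {"icd10_code"})` returns `['lab_results', 'medical_necessity_note']`. Returning a placeholder for an unknown insurer, rather than guessing, keeps the uncertain case in front of a person.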

One hospital system in Pittsburgh cut their prior auth processing time by 52% after implementing AI. They saved $4.7 million in a year. That’s not a fluke. It’s repeatable.

Clinical Summaries: The Other Half of the Problem

Prior auth isn’t the only paperwork headache. Clinical summaries - those short overviews sent to specialists, ERs, or follow-up clinics - are just as time-consuming. Doctors used to write them by hand. Now, AI can generate them from a single voice note or EHR entry.

Tools like Abridge and Augmedix record doctor-patient conversations and turn them into structured summaries. They extract key points: symptoms, diagnosis, plan, medications, allergies. Then they format them for the next provider. No more typing after a 12-hour shift.
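The structured output these tools produce can be pictured as a record with the five fields listed above. The sketch below assumes the extraction from the conversation has already happened (that is the vendor's part, and this does not reproduce Abridge's or Augmedix's formats); it only shows formatting for the next provider. One deliberate design choice: an empty field renders as "Not documented (verify)" rather than "None", so a missing allergy list is never mistaken for "no known allergies".

```python
from dataclasses import dataclass, field

NOT_DOCUMENTED = "Not documented (verify)"


@dataclass
class ClinicalSummary:
    """Fields an ambient-documentation tool might extract from a visit (hypothetical)."""
    symptoms: list[str]
    diagnosis: str
    plan: str
    medications: list[str] = field(default_factory=list)
    allergies: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the summary for the receiving provider, one field per line."""
        def fmt(items: list[str]) -> str:
            # Empty means "not documented", never "none".
            return ", ".join(items) if items else NOT_DOCUMENTED

        return "\n".join([
            f"Symptoms: {fmt(self.symptoms)}",
            f"Diagnosis: {self.diagnosis or NOT_DOCUMENTED}",
            f"Plan: {self.plan or NOT_DOCUMENTED}",
            f"Medications: {fmt(self.medications)}",
            f"Allergies: {fmt(self.allergies)}",
        ])


if __name__ == "__main__":
    summary = ClinicalSummary(
        symptoms=["headache", "photophobia"],
        diagnosis="Migraine without aura",
        plan="Neurology referral",
        allergies=["penicillin"],
    )
    print(summary.render())
```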

Accuracy? Around 87% for unstructured notes. That’s not perfect - but it’s better than a tired doctor rushing through a summary at 7 p.m. And with human review, the final product is reliable.

One primary care group in Ohio reduced their summary-writing time by 70%. Their patients started getting faster referrals. Specialists reported clearer, more complete info. No more “What was the reason for the visit again?”

AI vs. Human: Where It Works - and Where It Doesn’t

Generative AI is great for routine cases. If a patient needs a standard MRI for lower back pain and has a documented diagnosis, the AI nails it. Success rate? 94%.

But it struggles with complexity. What if the patient has three rare conditions? Or if the treatment is experimental? Or if the insurance policy has an obscure exception? That’s where AI falls short.

Studies show AI accuracy drops to 72% for rare diseases. Handwritten notes? Only 65% are read correctly. And if the system misreads a medication dosage? That’s dangerous.
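These accuracy figures also show why review effort concentrates on the hard cases. The Python arithmetic below uses the 94% (routine) and 72% (rare disease) rates from the text; the caseload of 180 routine and 20 rare-disease requests is hypothetical.

```python
def expected_corrections(caseload: list[tuple[int, float]]) -> float:
    """Expected number of drafts needing correction.

    caseload: (number_of_drafts, accuracy) pairs, accuracy as a fraction.
    """
    return sum(n * (1 - accuracy) for n, accuracy in caseload)


if __name__ == "__main__":
    routine = (180, 0.94)   # hypothetical: 180 routine requests at 94% accuracy
    rare = (20, 0.72)       # hypothetical: 20 rare-disease requests at 72% accuracy
    total = expected_corrections([routine, rare])
    share_rare = expected_corrections([rare]) / total
    print(f"Expected drafts needing correction: {total:.1f}")
    print(f"Share coming from rare-disease cases: {share_rare:.0%}")
```

With that mix, about 16.4 drafts need correction, and roughly 34% of them come from the rare-disease cases even though those are only 10% of the volume.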

That’s why every major medical group - the American Medical Association, Mayo Clinic, Stanford - insists on a human-in-the-loop. AI drafts. Clinician approves. No exceptions.

Some systems even flag high-risk cases automatically. If the AI detects a potential error - like a drug interaction or missing lab result - it stops and asks for human review. That’s smart design.
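Automatic flagging like this can be as simple as a set of checks that decide which review queue a draft lands in. In this Python sketch, the interaction table, the lab requirements, the 0.85 confidence floor, and the idea that the drafting tool reports a confidence score are all assumptions for illustration; a real deployment would query a maintained drug-interaction database. No path skips human review: clean drafts go to standard review, and flagged ones are held for a clinician with the reasons attached.

```python
# Illustrative interaction pairs only; a real system would query a
# maintained drug-interaction database rather than a hard-coded set.
KNOWN_INTERACTIONS = {frozenset({"warfarin", "ibuprofen"})}


def find_interactions(medications: list[str]) -> list[tuple[str, str]]:
    """Return every listed medication pair found in KNOWN_INTERACTIONS."""
    meds = [m.strip().lower() for m in medications]
    hits = []
    for i, a in enumerate(meds):
        for b in meds[i + 1:]:
            if frozenset({a, b}) in KNOWN_INTERACTIONS:
                hits.append((a, b))
    return hits


def triage(
    medications: list[str],
    required_labs: list[str],
    available_labs: list[str],
    confidence: float,          # hypothetical score reported by the drafting tool
    floor: float = 0.85,        # hypothetical threshold
) -> tuple[str, list[str]]:
    """Return (queue, reasons); any reason holds the draft for a clinician."""
    reasons = [f"possible interaction: {a} + {b}" for a, b in find_interactions(medications)]
    missing = sorted(set(required_labs) - set(available_labs))
    if missing:
        reasons.append("missing labs: " + ", ".join(missing))
    if confidence < floor:
        reasons.append(f"low drafting confidence ({confidence:.2f})")
    queue = "hold for clinician" if reasons else "standard review"
    return queue, reasons
```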

Big Players and Real-World Tools

Not all AI tools are the same. Here’s how the top players stack up:

| Tool | Accuracy (Prior Auth) | Insurance Coverage | Integration | Cost per 100 Providers |
|---|---|---|---|---|
| Nuance DAX (Microsoft) | 91.3% | 92% | Epic, Cerner, Allscripts | $185,000 |
| Epic Samantha | 89.1% | 88% | Epic only | $160,000 |
| Google Duet AI | 85.4% | 78% | Google Cloud | $155,000 |
| Amazon Bedrock | 78.2% | 75% | AWS | $140,000 |
| Abridge | 82% | 65% | Multiple EHRs | $130,000 |

Nuance leads in accuracy and insurance coverage. Epic is best if you’re already using their EHR. Google and Amazon are cheaper but score lower on both accuracy and coverage, which makes them less reliable for complex cases. Abridge shines in clinical documentation but doesn’t cover all prior auth workflows.

Costs are high upfront - around $185,000 for a 100-provider system. But annual savings? Often over $1 million. And staff turnover drops. One administrator wrote on Reddit: “We used to lose 3 people a year from burnout. Now we’re hiring.”
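The cost and savings figures above imply a short payback period. The Python arithmetic below uses the $185,000 figure and treats "often over $1 million" as a $1,000,000 lower bound. The table does not say whether its per-100-provider cost is one-time or annual, so treating it as upfront is an assumption.

```python
def payback_months(upfront_cost: float, annual_savings: float) -> float:
    """Months until cumulative savings cover the upfront cost."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return upfront_cost / annual_savings * 12


def cost_per_provider(total_cost: float, providers: int) -> float:
    """Spread a system-wide cost evenly across providers."""
    return total_cost / providers


if __name__ == "__main__":
    # Article figures: ~$185,000 for 100 providers; savings "often over $1 million".
    print(f"Payback: {payback_months(185_000, 1_000_000):.1f} months")
    print(f"Cost per provider: ${cost_per_provider(185_000, 100):,.0f}")
```

This prints a payback of about 2.2 months and a cost of $1,850 per provider. Even if the cost recurs every year, savings at that level still exceed it roughly fivefold.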

Implementation Challenges

It’s not all smooth sailing. About 63% of hospitals report major headaches during rollout. Common issues:

- EHR data is messy or siloed - AI can’t read what it can’t access.

- Insurance rules change monthly. AI needs constant updates.

- Some clinicians distrust the tool. “It doesn’t understand my patient,” they say.

- Staff resist change. “Why should I learn this?”
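The "rules change monthly" problem in the list above is partly a data-modeling one: a request should be checked against the rules in force on its date, and old versions should be kept for audits and appeals. Here is a minimal Python sketch using effective-dated rule versions; the `RULE_HISTORY` contents and the `rules_in_effect` helper are hypothetical.

```python
from bisect import bisect_right
from datetime import date

# Hypothetical rule history for one insurer, sorted by effective date.
# A published change is appended as a new entry; old entries are kept.
RULE_HISTORY = {
    "PlanA": [
        (date(2025, 1, 1), {"max_words": 300}),
        (date(2025, 6, 1), {"max_words": 300, "requires_prior_imaging": True}),
    ],
}


def rules_in_effect(insurer: str, on: date) -> dict:
    """Return the rule set that applied to `insurer` on date `on`."""
    history = RULE_HISTORY[insurer]
    dates = [effective for effective, _ in history]
    # Index of the latest version whose effective date is on or before `on`.
    idx = bisect_right(dates, on) - 1
    if idx < 0:
        raise LookupError(f"no {insurer} rules effective on {on}")
    return history[idx][1]
```

A request dated March 15, 2025 gets the January rules; one dated June 1, 2025 or later must also satisfy `requires_prior_imaging`. Publishing a change means appending an entry, not editing the old one.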

Solutions? Start small. Pilot with one insurance plan. Train admins first. Then bring in clinicians. Have a dedicated AI oversight team that reviews errors weekly. Don’t just flip a switch and expect miracles.

Training time? Admins learn in 3-4 weeks. Doctors need 6-8 weeks to trust and use the output effectively.

Regulations, Bias, and Ethics

AI isn’t neutral. A 2024 JAMA study found some systems denied Medicaid patients 12.7% more often than private insurance patients - not because of medical need, but because the training data reflected historical biases in coverage.
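An oversight team can check for this kind of skew directly by comparing denial rates across payer types. The Python sketch below reports both the absolute gap in percentage points and the relative gap, since a phrase like "12.7% more often" can be read either way. The audit counts are hypothetical.

```python
def denial_rate(denied: int, total: int) -> float:
    """Fraction of requests denied in one group."""
    if total <= 0:
        raise ValueError("total must be positive")
    return denied / total


def disparity(group_a: tuple[int, int], group_b: tuple[int, int]) -> dict[str, float]:
    """Compare denial rates of two groups, each given as (denied, total).

    Reports both the absolute gap in percentage points and the relative gap.
    """
    rate_a = denial_rate(*group_a)
    rate_b = denial_rate(*group_b)
    if rate_b == 0:
        relative = 0.0 if rate_a == 0 else float("inf")
    else:
        relative = (rate_a - rate_b) / rate_b
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "absolute_gap_points": (rate_a - rate_b) * 100,
        "relative_gap": relative,
    }


if __name__ == "__main__":
    # Hypothetical one-month audit: Medicaid (group A) vs private (group B).
    result = disparity(group_a=(60, 400), group_b=(50, 400))
    print(f"Absolute gap: {result['absolute_gap_points']:.1f} percentage points")
    print(f"Relative gap: {result['relative_gap']:.0%}")
```

With 60 of 400 Medicaid requests denied against 50 of 400 private ones, the absolute gap is 2.5 percentage points, which is a 20% relative gap. The same data can sound very different depending on which number gets quoted.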

California’s 2024 AI in Healthcare Act now requires transparency. If an AI helped make a denial, the patient must be told. Other states are following.

HIPAA compliance is non-negotiable. Tools must encrypt data, remove identifiers, and log every action. Leading vendors meet these standards - but not all do. Always verify.
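For the "log every action" requirement, one common pattern is a tamper-evident audit trail in which each entry stores a hash of the previous one, so a later edit breaks the chain. This Python sketch illustrates that hash-chaining technique; it is not a statement of what HIPAA mandates or what any vendor does. It covers logging only (no encryption or de-identification), and the user and resource identifiers are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained audit trail (illustrative sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user: str, action: str, resource_id: str) -> dict:
        """Append one action, chained to the hash of the previous entry."""
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource_id,   # store IDs, not patient details
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Logging record IDs rather than patient details keeps PHI out of the log itself. A real system would also write entries to durable, access-controlled storage.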

And yes, there are hallucinations. AI can make up lab results and invent non-existent diagnoses. That’s why every draft must be reviewed. No automation without human oversight.
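Part of that review can be automated. One simple guard against invented lab results is to cross-check every lab value a draft cites against the chart. This Python sketch assumes a hypothetical hard-coded list of lab names and numeric chart values; it cannot catch invented diagnoses or subtler errors, so it supplements human review rather than replacing it.

```python
import re

# Hypothetical list of lab names to check; a real system would use coded
# lab results (e.g. LOINC) rather than matching names in free text.
KNOWN_LABS = ("eGFR", "HbA1c", "INR", "creatinine")

# A known lab name, up to 10 non-digit characters (": ", " of ", " was "),
# then a number such as 52 or 7.2.
LAB_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, KNOWN_LABS)) + r")\b\D{0,10}?(\d+(?:\.\d+)?)",
    re.IGNORECASE,
)


def unsupported_lab_claims(
    draft_text: str, chart_labs: dict[str, float]
) -> list[tuple[str, str]]:
    """Return (lab, value) pairs cited in the draft that the chart doesn't support."""
    chart = {name.lower(): float(value) for name, value in chart_labs.items()}
    problems = []
    for name, value in LAB_PATTERN.findall(draft_text):
        charted = chart.get(name.lower())
        # Flag labs absent from the chart or cited with a different value.
        if charted is None or abs(charted - float(value)) > 1e-9:
            problems.append((name, value))
    return problems
```

A draft stating `HbA1c: 9.1` when the chart records 7.2 is flagged as `('HbA1c', '9.1')`, and a lab cited in the draft but missing from the chart is flagged too.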

What’s Next?

The future is coming fast. By 2026, AI may be able to predict when a patient will need prior auth, before the doctor even orders the test. Imagine: the system sees a patient with chronic pain, knows they’re due for a new MRI, and auto-submits the request before the appointment.

CMS is testing standardized templates across Medicare Advantage plans. That could cut confusion by half.

And by 2027, blockchain might be used to verify prior auth approvals - making fraud and double-submissions nearly impossible.

But the biggest shift? Integration. No more standalone AI tools. In 68% of health systems, AI will be baked into their EHRs - like a spellchecker for paperwork.

Final Thoughts

Generative AI won’t replace healthcare administrators. It will free them. It won’t replace doctors. It will unburden them.

This isn’t about cutting costs. It’s about restoring dignity to the job. About letting nurses, coders, and physicians do what they trained for - care for people.

The tools are here. The data is there. The question isn’t whether to use AI. It’s how fast you can implement it - and how well you’ll train your team to use it right.

Artificial Intelligence

Kate Tran

December 16, 2025 AT 14:03

finally someone gets it. i used to spend 2 hours a day just filling out prior auth forms. now the ai does it in 5 mins and i actually have time to talk to patients. no more crying in the supply closet. thank you.

amber hopman

December 17, 2025 AT 21:49

i’ve been using Nuance DAX at my hospital and it’s been a game changer. but i’m still nervous about the bias stuff. we had a case where it kept denying a medicaid patient for a simple MRI even though the docs said it was clearly necessary. had to override it twice. i don’t trust it fully yet, but i’m willing to give it time if we keep auditing it.

Jim Sonntag

December 18, 2025 AT 03:34

so let me get this straight. we’re spending 23 billion a year on paperwork so doctors can be glorified copy editors, and now we’re gonna pay 185k to let a robot do the boring stuff? genius. next they’ll invent ai to sign our name on the damn forms too. i miss the days when we just faxed a note and hoped for the best.

Deepak Sungra

December 18, 2025 AT 10:25

bro this is why i hate healthcare. you spend 10 years in med school just to become a glorified data entry clerk. ai is the only thing keeping me from quitting. but honestly? the system still sucks. they change insurance rules every month and the ai keeps screwing up. i just copy paste the last one and hope for the best. at least now i can scroll tiktok while waiting for it to finish.

Samar Omar

December 19, 2025 AT 07:53

let me be perfectly clear: generative ai in healthcare is not merely a tool - it is a philosophical rupture in the very fabric of clinical epistemology. we are witnessing the commodification of medical intuition, the erosion of embodied knowledge, and the silent colonization of clinical judgment by algorithmic hegemony. the 87% accuracy rate? it’s not a number - it’s a moral crisis. when a machine decides whether a diabetic patient gets their insulin approved, we are no longer practicing medicine - we are performing bureaucratic theater for corporate shareholders. and don’t even get me started on the hallucinations. one time, it invented a non-existent lab result for a patient with stage 4 cancer. i nearly fainted. this isn’t progress. it’s a dystopian fever dream dressed in EHR templates.

chioma okwara

December 19, 2025 AT 22:17

you guys keep saying 'ai drafts, human approves' but you're all just clicking 'approve' without reading anything. i checked 3 drafts last week - one said 'patient has no kidneys' when they clearly had a transplant. another said 'treatment: magic' for a migraine. if you're not proofreading, you're not saving time - you're just being lazy and risking lives. also, 'prior auth' is two words, not one. fix your spelling.

John Fox

December 20, 2025 AT 04:10

ai is helping. no drama. just less paperwork. doctors can finally breathe. that's all that matters.