Why Citations Matter More Than Ever in RAG Systems

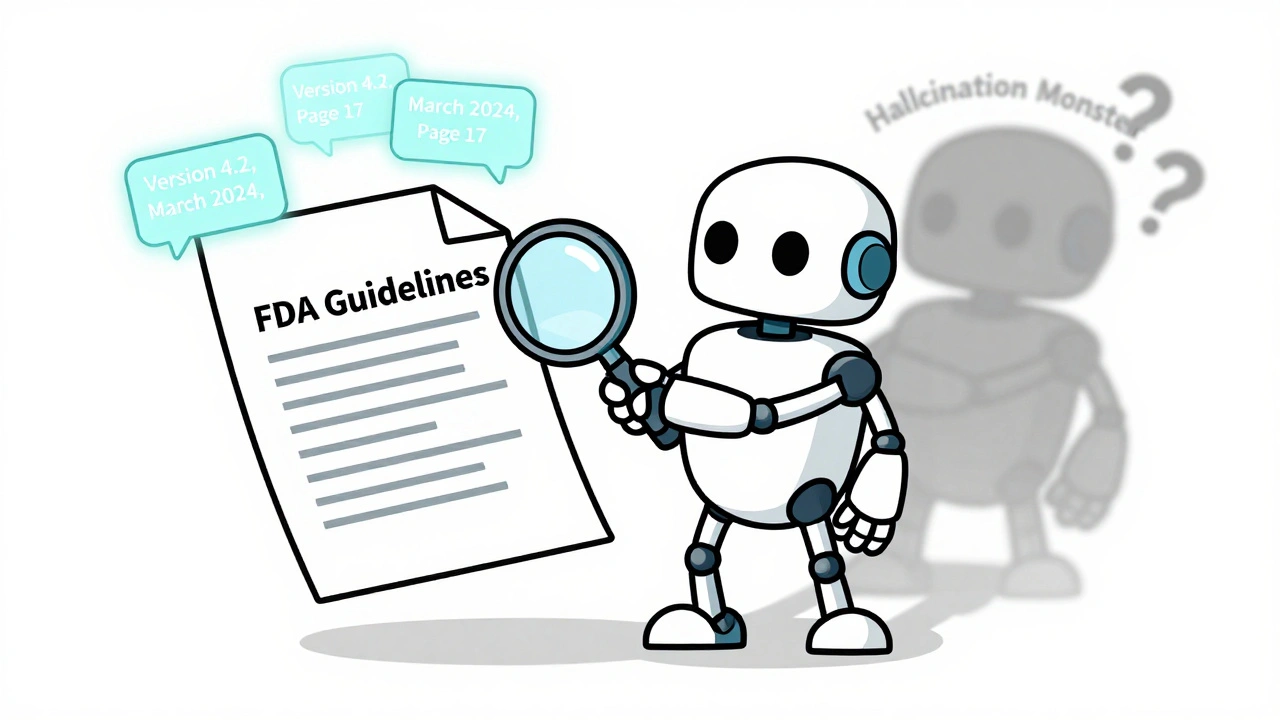

Large Language Models (LLMs) are great at sounding confident. But confidence doesn’t mean correctness. Without citations, a RAG system is just a fancy hallucination engine with a polished interface. You ask it for the latest FDA guidelines on insulin dosing, and it gives you a plausible-sounding answer - but there’s no way to check if it’s true. That’s dangerous in healthcare, legal, or financial settings. Citations fix that. They turn guesswork into claims a reader can verify against the source.

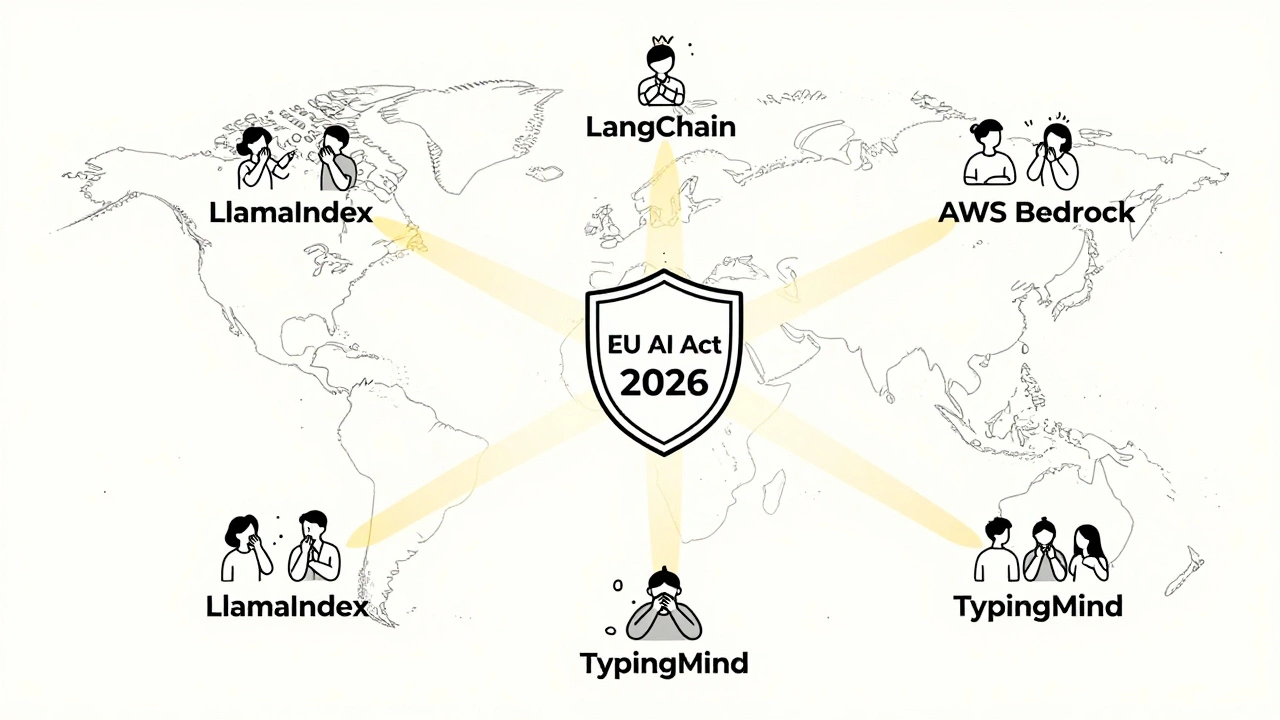

As of 2025, 83% of enterprise RAG deployments include citation functionality, up from just 47% in late 2024. Why? Because users and regulators won’t accept answers without proof. The EU AI Act, whose obligations phase in between 2025 and 2027, puts transparency and traceability requirements on AI systems, and source attribution is the most direct way to meet them for factual claims. Companies that delay implementation risk fines, lawsuits, and loss of trust. Citations aren’t a nice-to-have anymore. They’re the baseline for responsible AI.

How RAG Citations Actually Work

RAG stands for Retrieval-Augmented Generation. First, the system searches your knowledge base for relevant documents. Then, it uses those documents to generate a response. The citation step is where it links each claim back to its source. Simple in theory, messy in practice.

Here’s what happens behind the scenes:

- Your document is split into small chunks - often about 512 characters or tokens. (LlamaIndex’s citation query engine defaults to a chunk size of 512, measured in tokens.)

- Each chunk gets metadata: title, author, date, source ID, and sometimes section headings.

- When a user asks a question, the system finds the most relevant chunks using vector search.

- The LLM generates a response using only those chunks.

- The system extracts source metadata and attaches it to each claim.
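
The steps above can be sketched in a few lines of Python. This is a toy illustration, not how any particular framework does it: the `Chunk` fields, the function names, and the bag-of-words similarity (a stand-in for real embeddings and a vector database) are all assumptions made for the example, and the LLM generation step is left out entirely.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source_id: str  # hypothetical metadata fields, chosen for this sketch
    title: str
    date: str


def chunk_document(text, source_id, title, date, size=512):
    """Split text into fixed-size character chunks, each carrying source metadata."""
    return [
        Chunk(text[i:i + size], source_id, title, date)
        for i in range(0, len(text), size)
    ]


def _vector(text):
    """Bag-of-words term counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = _vector(query)
    return sorted(chunks, key=lambda c: _cosine(q, _vector(c.text)), reverse=True)[:k]


def cite(chunk):
    """Render a chunk's metadata as a human-readable citation."""
    return f"{chunk.title}, {chunk.date} [{chunk.source_id}]"


# Made-up corpus for illustration only.
corpus = chunk_document(
    "Insulin doses must be adjusted to measured blood glucose levels.",
    "doc-1", "Insulin Dosing Guide", "2024-03",
) + chunk_document(
    "Employees accrue vacation days every month.",
    "doc-2", "HR Handbook", "2025-01",
)
top = retrieve("How should insulin doses be adjusted?", corpus, k=1)
print(cite(top[0]))
```

Because every chunk keeps its metadata from the moment it is created, the citation is a lookup rather than something the model has to reconstruct after the fact.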

But this process breaks easily. If a document is poorly formatted - say, a PDF with garbled headings - the system can’t tell which section a quote came from. If chunks are too large, multiple unrelated ideas get lumped together. If chunks are too small, context disappears. That’s why chunk size matters. Zilliz’s tests showed 98.7% traceability with 512-character chunks using Milvus 2.3.3.
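
One way to soften the too-large versus too-small trade-off is to pack whole sentences into each chunk instead of cutting at a fixed character count. The sketch below is a minimal version of that idea, with a deliberately naive sentence pattern (it will split on decimals like "4.2" and on abbreviations). The function name and the returned `(offset, text)` shape are assumptions for illustration.

```python
import re

# A sentence is a run of non-terminator characters, its closing punctuation,
# and any whitespace that follows. Naive on purpose.
_SENTENCE = re.compile(r"[^.!?]+[.!?]*\s*")


def chunk_by_sentence(text, max_chars=512):
    """Greedily pack whole sentences into chunks of at most max_chars characters.

    Returns (start_offset, chunk_text) pairs so a citation can point back to
    the exact position in the source. A single sentence longer than max_chars
    becomes its own oversized chunk rather than being cut mid-sentence.
    """
    chunks = []
    start = end = None
    for m in _SENTENCE.finditer(text):
        if start is None:
            start, end = m.start(), m.end()
        elif m.end() - start <= max_chars:
            end = m.end()  # sentence still fits, extend the current chunk
        else:
            chunks.append((start, text[start:end].rstrip()))
            start, end = m.start(), m.end()
    if start is not None:
        chunks.append((start, text[start:end].rstrip()))
    return chunks


print(chunk_by_sentence("One. Two. Three.", max_chars=10))
```

Keeping the start offset alongside each chunk is what makes a later "where exactly did this come from" check possible.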

What Makes a Good Citation

Not all citations are created equal. A citation that says “Source: Document 12” is useless. A citation that says “Source: FDA Guidelines on Insulin Administration, Version 4.2, March 2024, Page 17” is actionable.

TypingMind’s internal testing found that these four elements boost citation trust by 37%:

- Clear source titles - Avoid vague names like “Company Policy.” Use “2025 HR Benefits Handbook - Section 3.1.”

- Consistent formatting - Always use the same structure: Title, Date, Source. No exceptions.

- Exclusion of irrelevant content - Remove footnotes, ads, and boilerplate. They confuse the system.

- Weekly updates - Outdated documents cause 68% of citation drift. Update your corpus every week.

Metadata is the unsung hero here. Adding author names, publication dates, and version numbers lets users judge credibility. A citation from a 2018 blog post shouldn’t carry the same weight as one from a peer-reviewed journal.
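
To make "consistent formatting" concrete, here is a minimal sketch of a metadata record with a validator and a single formatting function. The `SourceMeta` fields, the `VAGUE_TITLES` list, and the exact output order are assumptions chosen for the example, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative list of titles too generic to be useful in a citation.
VAGUE_TITLES = {"company policy", "document", "section 1", "untitled"}


@dataclass(frozen=True)
class SourceMeta:
    title: str
    date: str                      # e.g. "March 2024"
    source: str                    # e.g. publisher or repository name
    version: Optional[str] = None
    page: Optional[int] = None


def validate(meta):
    """Return a list of problems that would make the citation hard to act on."""
    problems = []
    title = meta.title.strip()
    if not title or title.lower() in VAGUE_TITLES:
        problems.append("vague or missing title")
    if not meta.date.strip():
        problems.append("missing date")
    if not meta.source.strip():
        problems.append("missing source")
    return problems


def format_citation(meta):
    """Render every citation in the same order: title, version, date, source, page."""
    parts = [meta.title]
    if meta.version:
        parts.append(f"Version {meta.version}")
    parts.extend([meta.date, meta.source])
    if meta.page is not None:
        parts.append(f"Page {meta.page}")
    return ", ".join(parts)


good = SourceMeta("FDA Guidelines on Insulin Administration", "March 2024", "FDA",
                  version="4.2", page=17)
print(format_citation(good))
print(validate(SourceMeta("Company Policy", "", "intranet")))
```

Running `validate` at ingestion time, before anything reaches the index, catches the "Company Policy, no date" problem while it is still cheap to fix.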

Why Your Citations Are Probably Wrong (And How to Fix Them)

Even top RAG systems make citation errors. A 2025 study found baseline RAG implementations have error rates as high as 38.7%. That means nearly 4 in 10 citations are misleading, missing, or fake.

Here are the top three reasons:

- Citation drift - The LLM changes the wording so much that the original source no longer matches. This happens in 49% of multi-turn conversations.

- Truncated citations - When a quote spans two chunks, the system picks only one. Users see “Source: Document A” - but the real source is “Document A and Document B.”

- Unclear metadata - If your PDF has “Section 1” as the title and no date, the system has nothing to work with.
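
The truncated-citation case is the easiest of the three to check mechanically. Assuming fixed-size, non-overlapping character chunks and a quote that appears verbatim in the source (both simplifications), a few lines are enough to find every chunk a quote touches. The function name is made up for this sketch.

```python
def chunks_covering(quote, document, chunk_size=512):
    """Return the indices of every fixed-size chunk that a verbatim quote touches."""
    if not quote:
        return []
    start = document.find(quote)
    if start == -1:
        return []  # quote is not verbatim in the document
    end = start + len(quote) - 1  # index of the quote's last character
    return list(range(start // chunk_size, end // chunk_size + 1))


# A quote that starts 12 characters before the first chunk boundary.
doc = "a" * 500 + "QUOTE SPANS BOUNDARY" + "b" * 500
print(chunks_covering("QUOTE SPANS BOUNDARY", doc))
```

If the result has more than one index, the citation should name every chunk in the list, not just the first.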

The solution isn’t just better prompts. It’s better correction. The CiteFix framework (April 2025) doesn’t just detect errors - it fixes them. It uses six lightweight methods, from simple keyword matching to fine-tuned BERTScore models. For Llama-3-70B, hybrid lexical-semantic matching improved citation accuracy by 27.8%. For Claude-3-Opus, BERTScore gave a 22.3% boost.

Key takeaway: Don’t rely on default RAG setups. Use a post-processing layer like CiteFix to clean up citations after generation.
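
To show what a post-processing layer can look like, here is a minimal sketch of the simplest idea in that family: keyword overlap between a claim and the retrieved chunks. This is not CiteFix's implementation. The function names, the stopword list, and the 0.5 threshold are assumptions for illustration, and a real system would likely add semantic matching on top.

```python
import re

# Small illustrative stopword list; a real one would be longer.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "is", "are", "for", "on", "be", "with"}


def _keywords(text):
    """Lowercased word set with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def overlap(claim, chunk_text):
    """Fraction of the claim's keywords that appear in the chunk."""
    claim_kw = _keywords(claim)
    if not claim_kw:
        return 0.0
    return len(claim_kw & _keywords(chunk_text)) / len(claim_kw)


def correct_citation(claim, cited_id, chunks, min_score=0.5):
    """Keep the cited chunk if it supports the claim best; otherwise reassign.

    chunks maps chunk id -> chunk text (the retrieved set for this answer).
    Returns the chosen chunk id, or None when no chunk reaches min_score,
    so the caller can flag the claim as unsupported.
    """
    if not chunks:
        return None
    scores = {cid: overlap(claim, text) for cid, text in chunks.items()}
    best_id = max(scores, key=scores.get)
    if scores[best_id] < min_score:
        return None
    # Prefer the model's original citation when it ties with the best score.
    if cited_id in scores and scores[cited_id] == scores[best_id]:
        return cited_id
    return best_id


retrieved = {
    "A": "Vacation days accrue monthly for all employees.",
    "B": "Insulin doses are adjusted to blood glucose readings.",
}
print(correct_citation("Insulin doses are adjusted based on blood glucose.", "A", retrieved))
```

Returning `None` instead of forcing a match matters: an unsupported claim should be flagged or dropped, not given the least-bad citation.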

Framework Showdown: LlamaIndex vs. LangChain vs. Proprietary Tools

Not all RAG frameworks handle citations the same way.

| Framework | Out-of-the-box Accuracy | Customization Needed | Best For |
|---|---|---|---|
| LlamaIndex | 82.4% | High - requires chunk size tuning, metadata mapping | Teams with technical expertise and structured data |
| LangChain | 78.1% | Medium - flexible but needs manual prompt engineering | Developers building custom pipelines |
| TypingMind | 89.7% | Low - pre-configured for enterprise use | Business users needing plug-and-play reliability |
| AWS Bedrock (with citation verification) | 85.9% | Low - automatic disambiguation built-in | Cloud-native enterprises using AWS |

TypingMind has the highest out-of-the-box accuracy in this comparison, thanks to strict prompt rules such as “Always cite source titles.” That single directive improved consistency by 63%. AWS Bedrock’s new disambiguation feature cuts citation errors by nearly 40% in technical docs. LlamaIndex is powerful but demands more work. If you’re a startup with limited engineers, go with TypingMind or AWS. If you’re a tech team with time to tweak, LlamaIndex gives you control.

Real-World Successes - And Why Some Failed

One Fortune 500 financial firm reduced compliance review time by 76% after switching to a Milvus-based RAG system with proper citations. Their auditors could now verify every claim in minutes instead of days.

Another company, a legal tech startup, saw 92% citation failure - until they fixed their source data. Their PDFs had hidden Markdown artifacts from conversion. The system kept citing “# Section 2” instead of “Section 2: Contract Terms.” After preprocessing the documents to clean formatting, accuracy jumped to 98%.
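
A preprocessing pass for that kind of artifact can be very small. The sketch below strips leftover Markdown heading markers line by line. The function names are made up for this example, and it assumes the documents contain no code snippets where a leading `# ` is meaningful, such as shell or Python comments.

```python
import re

# Up to three leading spaces, one to six '#', required whitespace, the title,
# then an optional closing run of '#' that is preceded by whitespace.
_HEADING = re.compile(r"^\s{0,3}#{1,6}\s+(.*?)(?:\s+#+)?\s*$")


def clean_heading(line):
    """Strip Markdown heading markers from a line; leave other lines unchanged."""
    m = _HEADING.match(line)
    return m.group(1) if m else line


def clean_document(text):
    """Apply clean_heading to every line of a converted document."""
    return "\n".join(clean_heading(line) for line in text.splitlines())


print(clean_heading("# Section 2: Contract Terms"))
```

Run this before chunking, so the cleaned heading is what ends up in each chunk's metadata.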

On the flip side, companies that treated citations as an afterthought failed. One health tech firm used a basic RAG setup with unstructured Wikipedia-style articles. Citations pointed to “Wikipedia: Diabetes” - no date, no author, no version. Users lost trust. The system was shut down.

The lesson? Garbage in, garbage out. No amount of AI magic fixes bad data.

What’s Next for RAG Citations

The field is moving fast. LlamaIndex’s new “Citation Pro” feature, announced in April 2025, uses adaptive chunk sizing - automatically adjusting from 256 to 1024 characters based on content complexity. Early tests show 18.3% better accuracy.

The RAG Citation Consortium, formed in January 2025 with 47 members including Microsoft, Google, and IBM, is working on a universal format for machine-readable citations. Think of it like DOI for AI-generated answers.

But the biggest driver isn’t tech - it’s regulation. The EU AI Act isn’t the only one. The U.S. is drafting similar rules. By 2026, companies without traceable citations won’t be able to sell AI tools in major markets.

Meanwhile, developers are responding. The number of GitHub repositories that pair RAG code with “citation” grew 227% in 2024. The tools are getting better. The standards are forming. The clock is ticking.

How to Start Getting Citations Right Today

You don’t need to build a custom system from scratch. Here’s your 3-step plan:

- Fix your data - Clean up your documents. Give every source a clear title, date, and version. Remove clutter. Update weekly.

- Use a proven framework - Pick TypingMind for ease, LlamaIndex for control, or AWS Bedrock if you’re on AWS. Don’t try to roll your own unless you have a research team.

- Add CiteFix-style correction - Even the best systems make mistakes. Run outputs through a lightweight post-processor to fix missing or wrong citations.

And never forget this: A citation is only as good as the source behind it. If your knowledge base is outdated, incomplete, or messy, no AI can save you. Start with clean data. The rest follows.


Noel Dhiraj

December 14, 2025 at 05:34

Start with your PDFs. Fix the titles. Remove the junk. Update weekly. That's 80% of the battle.
