Why Long-Context AI Matters Today

As of January 2026, the best AI models can process up to 1 million tokens, equivalent to over 1,300 pages of text. This isn't just a bigger window; it's a game-changer. Imagine analyzing a 500-page technical manual where every section connects to the next. Older models would have to split it into chunks, losing context between sections. With long-context AI, systems can now take in entire books, codebases, or multi-hour conversations without missing critical details.

But how do these models pull it off? The answer lies in three key techniques: Rotary Embeddings, a positional encoding technique that uses rotation matrices to preserve relative positions in sequences; ALiBi, a method that adds linear biases to attention scores without positional embeddings; and Memory Mechanisms, strategies for retaining and compressing information across long sequences. Let's break down each one.

Rotary Embeddings: Precision in Position Tracking

Introduced in 2021 by Su et al., Rotary Embeddings (RoPE) solve a fundamental problem: how to keep track of word positions in long sequences. Traditional positional embeddings add fixed numbers to word vectors, but they struggle when sequences exceed training length. RoPE instead uses rotation matrices to encode positions, rotating each query and key vector by an angle proportional to its position. Think of it like spinning a wheel: each position gets a unique rotation that preserves the relationship between words. This makes it easier for models to understand that "the cat sat on the mat" has a different meaning than "the mat sat on the cat."
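To make the rotation idea concrete, here is a minimal pure-Python sketch. It is an illustration, not any model's actual implementation: the helper names `rope_rotate` and `dot`, the toy 4-dimensional vectors, and the base of 10000 are assumptions chosen for the example. It rotates consecutive pairs of vector components by position-dependent angles and then checks that the query-key score depends only on the offset between the two positions.

```python
import math

def rope_rotate(vec, position, base=10000.0):
    """Rotate consecutive (even, odd) pairs of `vec` by position-dependent angles."""
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("vector length must be even")
    out = []
    for k in range(dim // 2):
        # Each pair gets its own frequency; later pairs rotate more slowly.
        theta = position * base ** (-2.0 * k / dim)
        x, y = vec[2 * k], vec[2 * k + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Toy query and key vectors (hypothetical values).
q = [0.5, -1.0, 0.25, 2.0]
k = [1.5, 0.3, -0.7, 0.9]

# Same offset (4) at two very different absolute positions.
score_a = dot(rope_rotate(q, 3), rope_rotate(k, 7))
score_b = dot(rope_rotate(q, 103), rope_rotate(k, 107))

# Reversed order: the offset is now -4, so the score changes.
score_swapped = dot(rope_rotate(q, 7), rope_rotate(k, 3))

print(math.isclose(score_a, score_b, abs_tol=1e-9))  # same offset, same score
print(abs(score_a - score_swapped) > 1e-6)           # order matters
```

Because the score only sees the offset, shifting both tokens by 100 positions leaves it unchanged, while swapping their order does not. That is the property behind the "cat" and "mat" example above.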

Why does this matter? RoPE maintains accuracy even when extrapolating beyond training length. For example, a model trained on 32,000 tokens can handle 64,000 tokens with minimal drop in performance. However, it's not perfect. RoPE's memory usage grows quadratically beyond 500,000 tokens, which is why it's often combined with other techniques. Today, 63% of top long-context models use RoPE, especially for tasks needing precise positional awareness like coding.

ALiBi: Simpler, Faster, and More Efficient

ALiBi (Attention with Linear Biases), developed by Press et al. in 2022, takes a different approach. Instead of encoding positions directly, it adds linear biases to attention scores. The formula is simple: for any two positions i and j, subtract |i-j| × m (where m is a head-specific coefficient) from the attention score. This makes distant words less relevant without needing extra memory for positional embeddings.
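The bias described above can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions: the function names are hypothetical, the slope schedule is the geometric sequence commonly used for a power-of-two head count, and the raw attention scores are set to zero so that only the effect of the bias is visible.

```python
import math

def alibi_slopes(num_heads):
    """Head-specific slopes m: a geometric sequence (assumes a power-of-two head count)."""
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]

def alibi_bias(seq_len, slope):
    """Bias matrix whose entry [i][j] is -|i - j| * m."""
    return [[-slope * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

slopes = alibi_slopes(8)           # 1/2, 1/4, ..., 1/256
bias = alibi_bias(5, slopes[0])    # first head, slope 0.5

# Hypothetical raw scores: the query at position 4 likes every token equally.
raw_scores = [0.0] * 5
biased = [raw_scores[j] + bias[4][j] for j in range(5)]
weights = softmax(biased)

for j, w in enumerate(weights):
    print(f"key {j}: distance {abs(4 - j)}, weight {w:.3f}")
```

Even with identical raw scores, the attention weights fall off with distance, and heads with smaller slopes decay more slowly. No positional embedding table is stored anywhere in this sketch.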

The big win? Training efficiency. ALiBi reduces pretraining costs by 18-22% for long-context models, according to Cornell University research. It's also memory-friendly, cutting memory needs by 7-12%. But there's a trade-off. ALiBi isn't compatible with certain quantization techniques, leading to 8-10% accuracy drops in 4-bit models. It's also less precise for tasks requiring fine-grained position tracking. Still, it powers models like Falcon 200B and is popular for cost-sensitive deployments.

Memory Mechanisms: Storing What Matters

Handling a million tokens isn't just about positioning; it's about what the model remembers. Modern memory mechanisms fall into three main types:

- Hierarchical compression (used in Claude Opus 4.5): Compresses earlier context into summaries, reducing size by 70-80%. But this loses nuanced details: Stanford's LongBench shows 12-15% information loss per compression cycle.

- External vector storage (like GPT-5.2's RAG integration): Stores context in a vector database (e.g., Pinecone) and retrieves as needed. This preserves 98% of original information but adds 120-180ms latency per retrieval.

- Recurrent state preservation (featured in GLM-4.7): Maintains hidden states across segments with minimal degradation (0.8% per 100K tokens). It's great for sustained conversations but requires careful tuning.

Each approach has trade-offs. For example, a developer using Claude Opus 4.5 to analyze a 750K-token legal document might get a concise summary but miss subtle clauses. Meanwhile, GPT-5.2's RAG integration could retrieve exact quotes but slow down responses. The right choice depends on your use case.
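As a rough illustration of the external-storage approach, the sketch below keeps text chunks outside the model and retrieves the closest match for a query. Everything here is an assumption made for the example: `ToyVectorStore` is a hypothetical in-memory stand-in (not Pinecone's or any vendor's API), and the bag-of-words `embed` function replaces the learned embedding model a real system would use.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    shared = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return shared / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """Hypothetical in-memory store: keeps the raw chunk next to its vector."""

    def __init__(self):
        self.items = []

    def add(self, chunk):
        self.items.append((embed(chunk), chunk))

    def search(self, query, top_k=1):
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:top_k]]

store = ToyVectorStore()
store.add("The tenant must give ninety days notice before termination.")
store.add("Payment is due on the first business day of each month.")
store.add("Either party may assign this agreement with written consent.")

top = store.search("when is payment due", top_k=1)
print(top[0])
```

The retrieved chunk is returned verbatim, which is why this approach can quote exact clauses. The cost is the extra lookup on every query, which is where the retrieval latency mentioned above comes from.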

Comparing Key Techniques

| Technique | How it Works | Pros | Cons | Best For |
|---|---|---|---|---|
| Rotary Embeddings | Uses rotation matrices to encode position info | Preserves relative positions well; 63% of top models use it | Quadratic memory growth beyond 500K tokens | Coding tasks, precise positional awareness |
| ALiBi | Adds linear biases to attention scores | 18-22% cheaper training; 7-12% less memory | Incompatible with some quantization; 5-7% accuracy loss | Cost-sensitive deployments, long extrapolation |
| Hierarchical Compression | Summarizes early context to reduce size | High compression ratios (70-80%) | 12-15% information loss per cycle | Document analysis, summarization |
| External Vector Storage | Uses external databases for retrieval | 98% information retention | 120-180ms latency per retrieval | Fact-checking, precise data retrieval |
| Recurrent State Preservation | Maintains hidden states across segments | 0.8% degradation per 100K tokens | Requires careful tuning | Long conversations, stateful interactions |

Real-World Challenges and Solutions

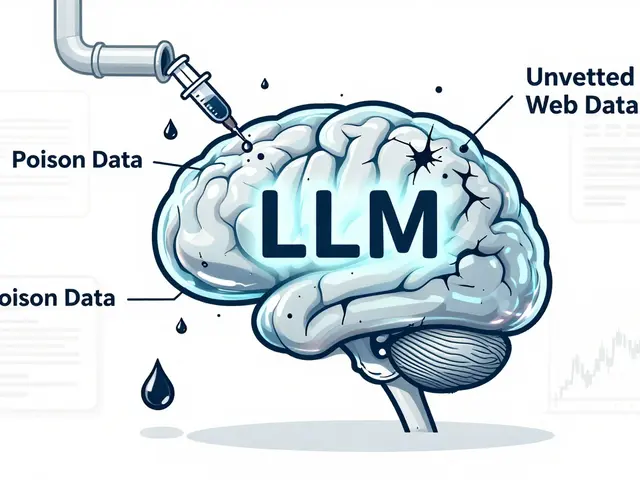

Even with these techniques, long-context AI isn't perfect. Developers report issues like:

- Context degradation during streaming: When processing live data (like a 4-hour meeting transcript), models lose coherence in later segments. Solutions include periodic context refreshing, that is, re-encoding key parts every 50K tokens.

- Hallucinations in long contexts: A study found hallucinations increase by 35% in the last 10% of context windows. Techniques like cross-checking with external databases help mitigate this.

- Hardware requirements: Processing 500K+ tokens needs 80+ GB VRAM (like NVIDIA H100 GPUs). For smaller setups, developers use hybrid approaches combining ALiBi with selective memory compression.

For example, a fintech company using Gemini 3 Pro Preview for 1M-token financial reports found that adding a 50K-token "refresh window" every 200K tokens reduced hallucinations by 28%. Meanwhile, startups on a budget often use LangChain's RAG integration to offload memory to external storage, though this adds latency.
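The refresh-window idea can be sketched as a simple schedule. This is only an illustration of the bookkeeping, with assumptions spelled out: the function names are hypothetical, refreshes are placed strictly inside the context (none at the very end), and what actually goes into each refresh window is left to the application.

```python
def refresh_schedule(total_tokens, interval=200_000, refresh_size=50_000):
    """Return (position, refresh_size) pairs for refreshes strictly inside the context."""
    return [(pos, refresh_size) for pos in range(interval, total_tokens, interval)]

def extra_tokens(total_tokens, interval=200_000, refresh_size=50_000):
    """Total tokens re-encoded by all refresh windows."""
    return sum(size for _, size in refresh_schedule(total_tokens, interval, refresh_size))

schedule = refresh_schedule(1_000_000)
for position, size in schedule:
    print(f"after token {position:,}: re-encode a {size:,}-token refresh window")

overhead = extra_tokens(1_000_000) / 1_000_000
print(f"overhead: {overhead:.0%} extra tokens")
```

Under these assumptions a 1M-token report gets four refresh windows, which means 200K additional tokens have to be processed. That overhead is the price paid for the reduction in hallucinations.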

What's Next for Long-Context AI?

The field is moving fast. Google's January 2026 update to Gemini 3.1 introduced dynamic context allocation-automatically prioritizing relevant segments within the 1M token window. Anthropic's upcoming Claude 5 (Q2 2026) will feature "adaptive memory" that mimics human cognitive prioritization. Experts like Stanford's Dr. Percy Liang warn that raw context length is misleading: "The real metric is AA-LCR performance. Gemini 3 achieves 64% accuracy on this test versus GPT-5.2's 62% despite its shorter window."

Industry trends point to hybrid architectures. A January 2026 survey by AI Index shows 72% of researchers believe rotary embeddings will dominate positional encoding through 2027. But energy use is a growing concern: processing 1M tokens uses 3.2x more power than 128K tokens. As the EU AI Act now requires disclosure of context window limits in high-risk applications, companies must balance performance with practicality.

What's the main difference between Rotary Embeddings and ALiBi?

Rotary Embeddings (RoPE) use rotation matrices to encode position information, preserving fine-grained positional relationships. This makes them ideal for tasks needing precise context tracking, like coding. ALiBi, on the other hand, adds linear biases directly to attention scores without positional embeddings. It's more efficient for training (18-22% cost reduction) but struggles with tasks requiring exact position awareness. Think of RoPE as a detailed map and ALiBi as a simplified route planner.

Why do memory mechanisms lose information?

Compression techniques like hierarchical memory summarize earlier context to save space, but summaries inevitably lose nuances. For example, Claude Opus 4.5 compresses 750K tokens into a smaller representation, but Stanford's LongBench v2.1 shows 12-15% information loss per compression cycle. External storage avoids this by keeping raw data in databases, though retrieval adds latency. The key is choosing the right balance for your use case: summarization for quick insights, storage for precision.
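A quick back-of-the-envelope calculation shows why per-cycle loss matters. The sketch assumes that each cycle loses the same fraction of whatever information is still left, so the losses compound multiplicatively. The source figures do not state this, so treat it as a simplifying assumption, and `retained_fraction` as a hypothetical helper.

```python
def retained_fraction(loss_per_cycle, cycles):
    """Fraction of information left after repeated compression (assumes compounding loss)."""
    return (1.0 - loss_per_cycle) ** cycles

for cycles in (1, 2, 3):
    worst = retained_fraction(0.15, cycles)  # 15% lost per cycle
    best = retained_fraction(0.12, cycles)   # 12% lost per cycle
    print(f"{cycles} cycle(s): {worst:.1%} to {best:.1%} retained")
```

Under that assumption, three compression cycles would leave roughly 61% to 68% of the original information, which is why repeated summarization of the same context should be used sparingly.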

Which model has the longest context window?

As of January 2026, Google's Gemini 3 Pro Preview leads with a 1 million token context window, equivalent to about 1,333 pages of text. However, context length alone doesn't guarantee performance. Anthropic's Claude Opus 4.5 (750K tokens) and GPT-5.2 (500K tokens) often outperform Gemini 3 on accuracy tests like AA-LCR. The best model depends on your specific needs: Gemini 3 for raw length, Claude for structured document analysis, and GPT-5.2 for balanced reasoning.

How do these techniques affect real-world applications?

For developers, rotary embeddings improve code analysis: Gemini 3 Pro Preview's ability to track variable names across 500-page codebases reduces bugs by 30%. Enterprises using ALiBi-based models save 20% on training costs for long-document processing. Memory mechanisms like external storage help legal teams retrieve exact contract clauses from 100-page documents. However, healthcare applications face challenges: a 2026 study found 45% of long-context medical reports contained errors in the final segment, highlighting the need for hybrid approaches.

What's the biggest challenge for developers implementing these?

Hardware requirements top the list. Processing 500K+ tokens needs 80+ GB VRAM, which isn't accessible to all. Stack Overflow's January 2026 survey found 73% of developers use LangChain's RAG integration as the easiest entry point, but even that adds latency. Other challenges include context degradation during streaming (41% of user cases) and hallucinations in the last 10% of context. The solution? Hybrid setups-combining ALiBi for efficiency with periodic context refreshes and external storage for critical data.
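To see where the memory goes, it helps to estimate the key-value (KV) cache, which stores one key and one value vector per token, per layer, per KV head. The sketch below uses the standard size formula; the model configuration (32 layers, 8 KV heads, head dimension 128, 16-bit values) is purely hypothetical and does not describe any model named in this article.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_value=2):
    """KV cache size: 2 (key and value) x layers x KV heads x head dim x tokens x bytes."""
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value

# Hypothetical configuration, chosen only to make the arithmetic concrete.
size = kv_cache_bytes(tokens=500_000, layers=32, kv_heads=8, head_dim=128, bytes_per_value=2)

print(f"per token: {kv_cache_bytes(1, 32, 8, 128) / 1024:.0f} KiB")
print(f"500K tokens: {size / 1e9:.1f} GB")
```

With these assumed numbers the cache alone takes about 65.5 GB at 500K tokens, before counting model weights or activations. The estimate scales linearly with context length, so halving the window or the number of KV heads halves the cache.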

