By 2026, if you’re still choosing between NLP pipelines and end-to-end LLMs as if they’re competitors, you’re already behind. They’re not rivals. They’re teammates. The real question isn’t which one to use; it’s when to compose and when to prompt.

What You’re Really Trying to Solve

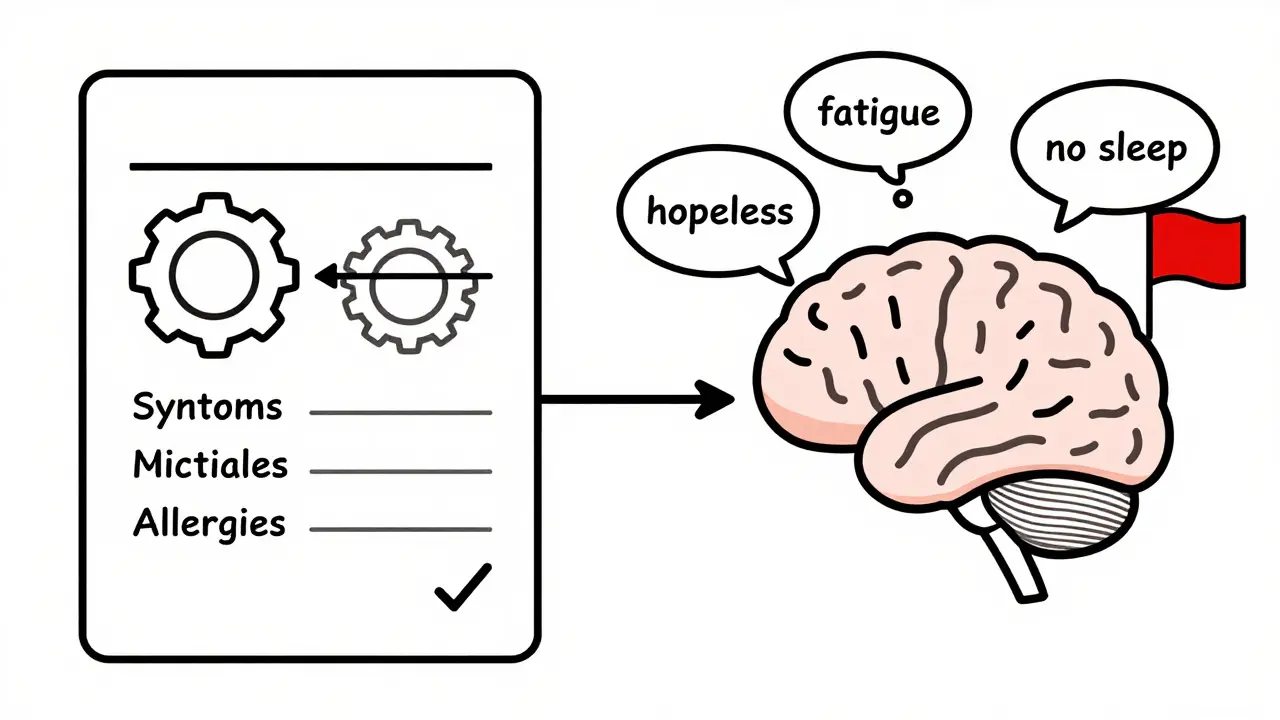

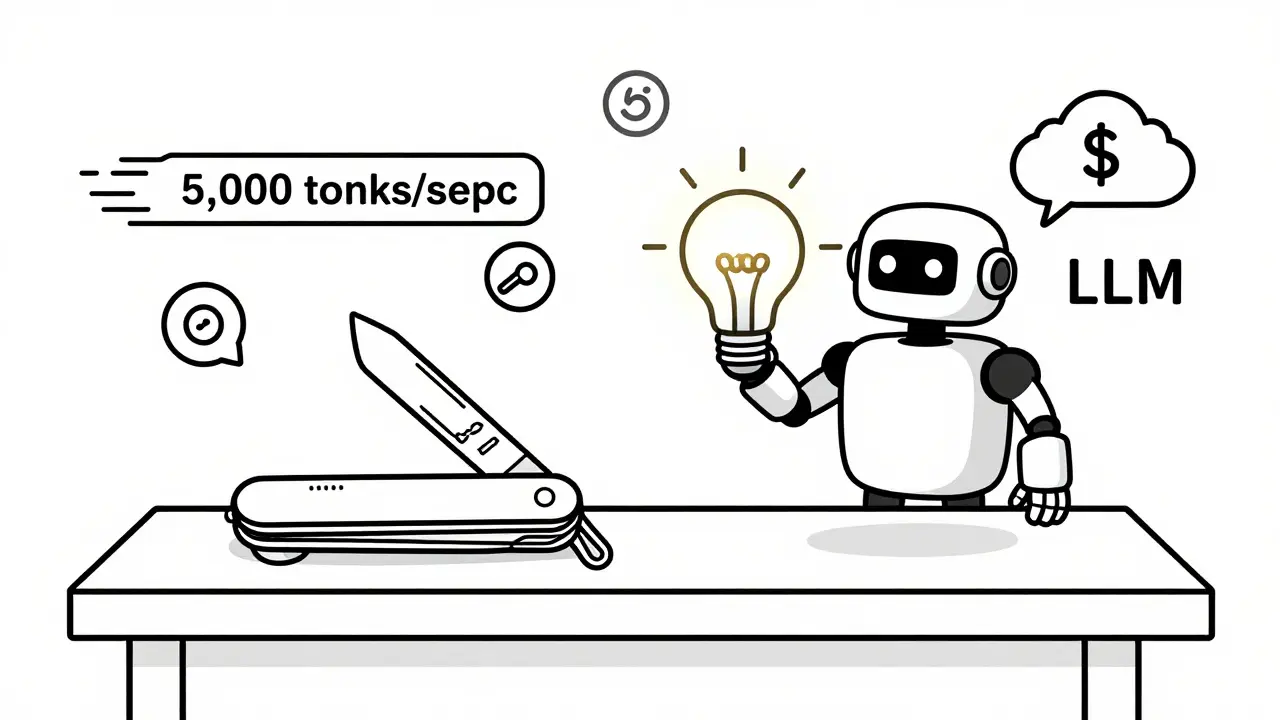

Most teams start with a simple goal: automate text processing. Maybe it’s classifying customer support tickets. Or pulling product features from reviews. Or flagging unsafe content in real time. But then they hit a wall.

If your system needs to respond in under 50 milliseconds, handle 10,000 requests per minute, and never guess, NLP pipelines are your only sane choice. If you need to summarize a 50-page research paper, rewrite a legal clause in plain English, or generate product descriptions that sound human, LLMs win. But most real problems? They need both.

The mistake? Thinking you have to pick one. You don’t. You just need to know where each one shines.

NLP Pipelines: The Precision Tools

Think of NLP pipelines like a Swiss Army knife with 10 specialized blades. Each blade does one thing, and does it perfectly. A typical pipeline might look like this:

- Tokenize the text (split into words and punctuation)

- Tag parts of speech (noun, verb, adjective)

- Extract named entities (people, companies, locations)

- Run sentiment analysis

- Apply business rules (e.g., if ‘refund’ + ‘angry’ → escalate)
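To make the step-by-step idea concrete, here is a minimal sketch using only the Python standard library. It covers tokenization, a toy lexicon-based sentiment score, and the refund rule from the list above; part-of-speech tagging and entity extraction are left out because they need a trained model (spaCy would be the usual choice). The word lists and the `Ticket` and `run_pipeline` names are assumptions made for illustration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical word lists; a real pipeline would use a trained model
# or a curated lexicon instead.
NEGATIVE_WORDS = {"angry", "furious", "terrible", "broken", "worst"}
POSITIVE_WORDS = {"great", "love", "thanks", "perfect", "happy"}


@dataclass
class Ticket:
    text: str
    tokens: list[str] = field(default_factory=list)
    sentiment: int = 0
    escalate: bool = False


def tokenize(ticket: Ticket) -> Ticket:
    # Split into lowercase word tokens and standalone punctuation marks.
    ticket.tokens = re.findall(r"\w+|[^\w\s]", ticket.text.lower())
    return ticket


def score_sentiment(ticket: Ticket) -> Ticket:
    # Lexicon score: +1 per positive word, -1 per negative word.
    ticket.sentiment = sum(
        (tok in POSITIVE_WORDS) - (tok in NEGATIVE_WORDS) for tok in ticket.tokens
    )
    return ticket


def apply_rules(ticket: Ticket) -> Ticket:
    # Business rule from the list above: 'refund' + 'angry' -> escalate.
    ticket.escalate = "refund" in ticket.tokens and "angry" in ticket.tokens
    return ticket


# Each step does one thing; the order is fixed and easy to inspect.
PIPELINE = [tokenize, score_sentiment, apply_rules]


def run_pipeline(text: str) -> Ticket:
    ticket = Ticket(text)
    for step in PIPELINE:
        ticket = step(ticket)
    return ticket
```

Calling `run_pipeline("I am angry. I want a refund!")` sets `escalate` to `True`, and the same input gives the same result on every run.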

End-to-End LLMs: The Generalists

Now imagine handing a PhD in linguistics, psychology, and business strategy the same task. That’s what an LLM is. You don’t build steps. You write a prompt:

"Based on this customer review, summarize the main complaint, identify the product feature mentioned, and suggest a response tone (apologetic, enthusiastic, neutral)."

The model processes everything at once. It doesn’t need separate modules for entity extraction or sentiment. It infers them from context. That’s powerful. A GPT-4 model can extract relationships from scientific papers, rewrite legal jargon into plain language, or draft a marketing email that feels personal, all from one prompt.

But it’s expensive. GPT-4 can cost $0.03 per 1,000 tokens. That’s 100x more than a pipeline. And self-hosting needs a GPU. A100s cost $15,000 each. Cloud APIs? You’re paying on every call. Latency? 100ms to 2 seconds. That’s too slow for live chat moderation. Too slow for real-time fraud detection.

And then there’s the unpredictability. Ask the same prompt twice? You might get two different answers. Hallucinations? Up to 25% in complex tasks. One financial firm found an LLM invented fake regulatory citations, just because the prompt sounded plausible. LLMs are brilliant. But they’re not reliable. Not yet.
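The cost point is easier to judge with numbers. The sketch below does the arithmetic for the $0.03 per 1,000 tokens figure quoted above. It assumes a single flat price for all tokens (real price lists usually charge input and output tokens differently), and the function names are made up for this example.

```python
GPT4_PRICE_PER_1K_TOKENS = 0.03  # USD, the figure quoted above


def request_cost(tokens: int, price_per_1k: float = GPT4_PRICE_PER_1K_TOKENS) -> float:
    """Cost in USD of one request that consumes `tokens` tokens."""
    return tokens / 1000 * price_per_1k


def daily_cost(requests_per_minute: int, tokens_per_request: int) -> float:
    """Cost in USD of sustaining a request rate for 24 hours."""
    requests_per_day = requests_per_minute * 60 * 24
    return requests_per_day * request_cost(tokens_per_request)
```

At the 10,000 requests per minute mentioned earlier and an assumed 500 tokens per request, `daily_cost(10_000, 500)` comes to about $216,000 per day. That is why volume, not model quality, usually decides where an LLM can sit in the stack.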

When to Use Each

Here’s the decision tree most teams miss:

- Use NLP pipelines if: You need speed, cost control, or deterministic output. Examples: real-time content moderation, product tagging, compliance filtering, high-volume data extraction.

- Use LLMs if: You need creativity, context understanding, or multi-step reasoning. Examples: summarizing long documents, generating customer responses, translating nuanced tone, analyzing unstructured feedback.

- Use both if: Your task is important, complex, and high-volume. This is where 90% of enterprise value is created.
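One way to keep this decision honest is to write it down as code. The sketch below is one possible reading of the three rules above, not a standard algorithm. The `Task` fields and both thresholds are assumptions; the 100 ms floor is the low end of the LLM latency range quoted earlier.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    PIPELINE = "pipeline"
    LLM = "llm"
    HYBRID = "hybrid"


@dataclass
class Task:
    needs_reasoning: bool     # creativity, context understanding, multi-step reasoning
    needs_determinism: bool   # same input must always give the same output
    max_latency_ms: int       # latency budget per request
    requests_per_minute: int  # expected volume


# Hypothetical thresholds; tune them against your own measurements.
LLM_MIN_LATENCY_MS = 100
HIGH_VOLUME_RPM = 1_000


def choose_route(task: Task) -> Route:
    if not task.needs_reasoning:
        # Predictable work: speed and cost control win.
        return Route.PIPELINE
    if task.max_latency_ms < LLM_MIN_LATENCY_MS:
        # No LLM call fits inside the budget, so stay with the pipeline.
        return Route.PIPELINE
    if task.needs_determinism or task.requests_per_minute >= HIGH_VOLUME_RPM:
        # Complex and either regulated or high-volume: use both.
        return Route.HYBRID
    return Route.LLM
```

Real-time moderation (`Task(False, True, 50, 10_000)`) routes to the pipeline, while low-volume document summarization (`Task(True, False, 5_000, 10)`) routes to the LLM.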

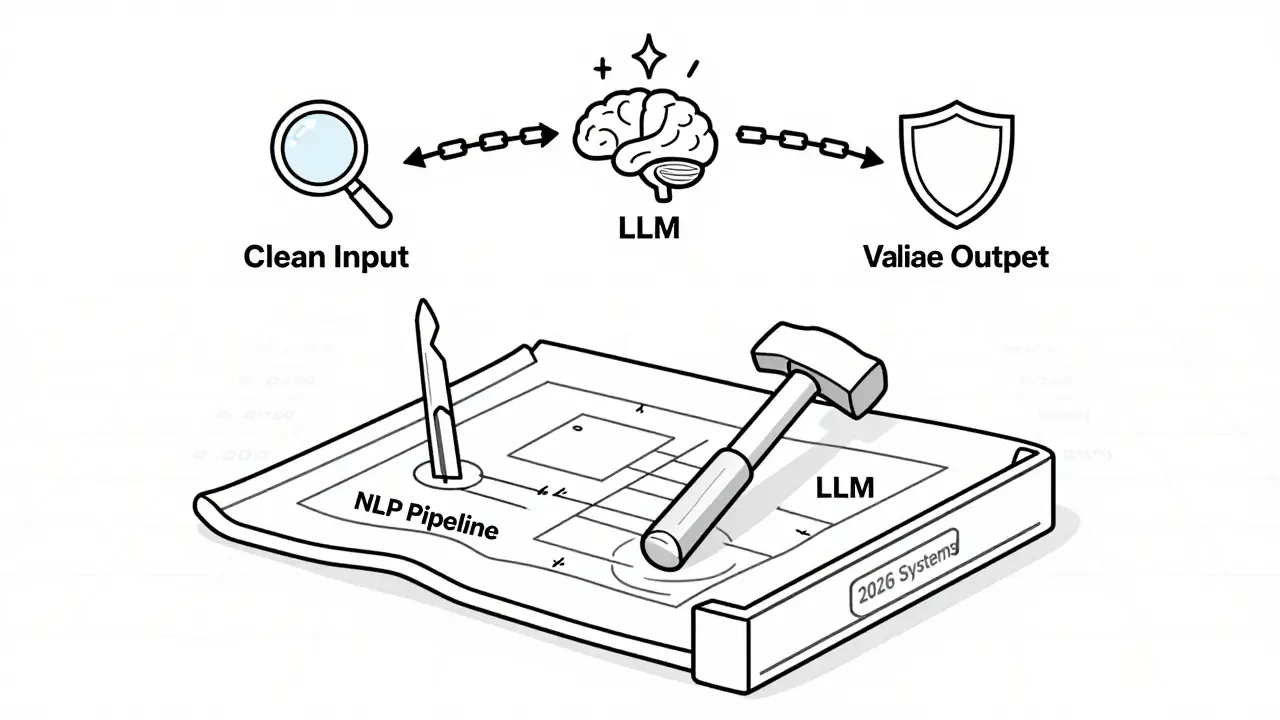

The Hybrid Approach: What the Pros Do

The smartest teams don’t replace pipelines with LLMs. They use pipelines to make LLMs better. Here’s how GetStream and Elastic do it:

- Preprocess with NLP: Clean the input. Extract entities. Remove noise. This cuts LLM token usage by 60%.

- Feed clean data to LLM: Now the model doesn’t waste time on garbage. It focuses on meaning.

- Validate with NLP: After the LLM generates a response, run it through a rule-based checker. Is the sentiment score consistent? Are the entities still valid? If not, flag it.
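The three steps above can be sketched in a few lines. This is an illustration under stated assumptions, not how GetStream or Elastic implement it: the order-ID pattern is hypothetical, and `call_llm` stands in for whatever client you use to reach a model (it takes a prompt string and returns the reply text).

```python
import re
from typing import Callable

# Hypothetical entity pattern: order IDs such as "ORD-12345".
ORDER_ID = re.compile(r"\bORD-\d+\b")


def preprocess(raw: str) -> tuple[str, set[str]]:
    """Step 1: strip HTML tags and extra whitespace, then extract entities."""
    text = re.sub(r"<[^>]+>", " ", raw)
    text = re.sub(r"\s+", " ", text).strip()
    return text, set(ORDER_ID.findall(text))


def validate(reply: str, known_entities: set[str]) -> bool:
    """Step 3: reject replies that mention an order ID the customer never gave."""
    return set(ORDER_ID.findall(reply)) <= known_entities


def hybrid_reply(raw: str, call_llm: Callable[[str], str]) -> dict:
    text, entities = preprocess(raw)
    # Step 2: the model only ever sees the cleaned text.
    reply = call_llm(f"Draft a short reply to this customer message:\n{text}")
    return {"reply": reply, "flagged": not validate(reply, entities)}
```

Passing the model in as a function keeps the rule-based parts testable without network access: a stub such as `lambda prompt: "ORD-99999 ships today."` is enough to confirm that an invented order ID gets flagged.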

Where LLMs Still Fail (And Why Pipelines Still Win)

LLMs struggle in regulated industries. Why?

- Not auditable: You can’t trace how it reached a decision. A pipeline? Every step is logged.

- Not repeatable: Same input, different output. Bad for compliance.

- Not controllable: You can’t guarantee it won’t hallucinate.

The EU AI Act, whose first obligations began applying in February 2025, sets logging, traceability, and human-oversight requirements for high-risk systems (finance, healthcare, hiring). Pure LLMs? A hard fit. Hybrid systems? Much easier to defend. Even OpenAI and Anthropic now offer "deterministic modes", but they’re slower and cost more. So why not use NLP for the parts that need certainty?
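The auditability argument ("every step is logged") is simple to demonstrate. The sketch below wraps any list of deterministic steps and records a fingerprint of what went into and came out of each one. The function names and the record layout are assumptions for illustration; a production audit log would also carry timestamps, versions, and durable storage.

```python
import hashlib
import json
from typing import Callable


def fingerprint(value: object) -> str:
    """Short, stable hash of a JSON-serializable value."""
    payload = json.dumps(value, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def run_audited(text: str, steps: list[Callable]) -> tuple[object, list[dict]]:
    """Run each step in order and record what went in and what came out."""
    trail = []
    value: object = text
    for step in steps:
        result = step(value)
        trail.append({
            "step": step.__name__,
            "input": fingerprint(value),
            "output": fingerprint(result),
        })
        value = result
    return value, trail


# Two toy steps standing in for real pipeline stages.
def lowercase(text: str) -> str:
    return text.lower()


def split_words(text: str) -> list[str]:
    return text.split()
```

Running `run_audited("Refund My Order", [lowercase, split_words])` twice yields identical trails, which is the repeatability property an auditor can check. A sampled LLM call placed in the same chain would generally not pass that check.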

What You Should Build in 2026

Stop thinking in binaries. Start thinking in workflows. Here’s your blueprint:

- Start with NLP pipelines for the 80% of tasks that are predictable.

- Use LLMs only for the 20% that need understanding, not just extraction.

- Use NLP to clean input and validate output. This reduces LLM cost by 50-70%.

- Monitor for "prompt drift": version your prompts and test them weekly so you catch LLM responses that change over time.

- Track cost per task. If an LLM costs more than $0.01 per request and doesn’t improve accuracy by 10%, you’re overpaying.
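The last rule in the blueprint is mechanical enough to automate. The sketch below encodes it directly. One assumption to flag: "improve accuracy by 10%" is read here as ten percentage points over the pipeline baseline, and the function name is invented for this example.

```python
MAX_COST_PER_REQUEST = 0.01  # USD, threshold from the blueprint
MIN_ACCURACY_GAIN = 0.10     # assumed to mean 10 percentage points


def llm_is_overpriced(cost_per_request: float,
                      llm_accuracy: float,
                      pipeline_accuracy: float) -> bool:
    """True when the LLM costs too much for the accuracy it adds."""
    gain = llm_accuracy - pipeline_accuracy
    return cost_per_request > MAX_COST_PER_REQUEST and gain < MIN_ACCURACY_GAIN
```

For example, an LLM at $0.015 per request that lifts accuracy from 0.82 to 0.85 is flagged, while the same price with a lift from 0.80 to 0.95 is not.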

Tools to Get Started

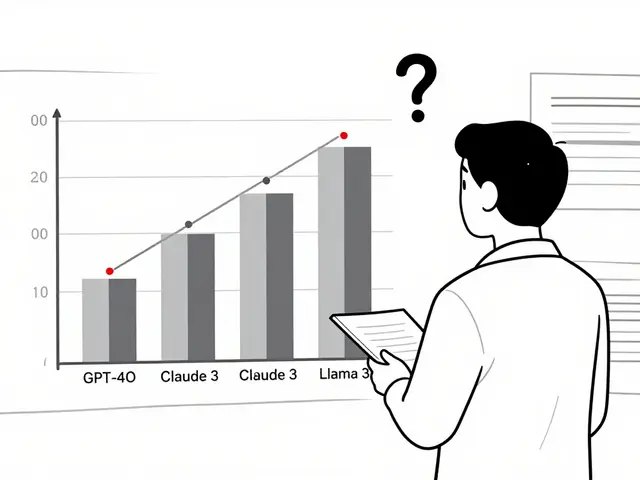

You don’t need to build from scratch.

- NLP pipelines: spaCy (fast, reliable), NLTK (flexible), Stanford CoreNLP (academic-grade)

- LLMs: GPT-4-turbo (best for general use), Claude 3.5 (best for deterministic output), Llama 3 (open-source, cost-effective)

- Hybrid frameworks: Elastic’s ESRE (combines BM25 + vector search + LLM), LangChain (for chaining steps), LlamaIndex (for retrieval-augmented generation)

Final Thought

NLP pipelines aren’t outdated. LLMs aren’t magic. The future belongs to teams who treat them like tools in a toolbox, not replacements. The best language systems in 2026 won’t be the ones with the biggest models. They’ll be the ones that know when to use a scalpel, and when to use a sledgehammer.

Are NLP pipelines obsolete because of LLMs?

No. NLP pipelines are more relevant than ever. They’re faster, cheaper, and more reliable for high-volume, rule-based tasks. LLMs handle complexity, but pipelines handle scale. Most enterprise systems use both.

Can I use LLMs for real-time applications like chatbots?

Only if you can tolerate 1-2 second delays. For live customer chat, LLMs alone cause 30-40% user drop-off. Use NLP pipelines for simple replies (e.g., "Your order shipped") and LLMs only for complex questions. Hybrid systems reduce latency by 70%.

How do I reduce LLM costs without losing accuracy?

Preprocess with NLP. Clean inputs, extract entities, and remove noise before sending data to the LLM. This cuts token usage by 50-70%. Also, use smaller models like Llama 3 for simple tasks. Only use GPT-4 or Claude 3.5 for complex reasoning.

Why do LLMs hallucinate, and can I stop it?

LLMs predict text based on patterns, not facts. They don’t know what’s true; they know what’s likely. You can’t fully stop hallucinations, but you can reduce them: use NLP validation, restrict output format, and apply post-processing rules. Hybrid systems cut hallucinations by 60-80%.
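"Restrict output format" and "apply post-processing rules" can be as simple as asking the model for JSON and refusing anything that does not fit. The sketch below assumes the model was asked to return three keys (`complaint`, `feature`, `tone`), with the tone labels taken from the example prompt earlier in the article. The key names and the grounding rule are illustrative assumptions, not a fixed schema.

```python
import json
from typing import Optional

# Tone labels taken from the example prompt earlier in the article.
ALLOWED_TONES = {"apologetic", "enthusiastic", "neutral"}
REQUIRED_KEYS = {"complaint", "feature", "tone"}


def parse_llm_output(raw: str, source_text: str) -> Optional[dict]:
    """Return the parsed output, or None if it breaks any rule."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # Not JSON at all, e.g. free-form chat text.
    if not isinstance(data, dict) or set(data) != REQUIRED_KEYS:
        return None
    tone, feature = data["tone"], data["feature"]
    if not isinstance(tone, str) or tone not in ALLOWED_TONES:
        return None
    # Grounding rule: the feature must literally appear in the review,
    # which catches features the model made up.
    if not isinstance(feature, str) or not feature:
        return None
    if feature.lower() not in source_text.lower():
        return None
    return data
```

A `None` result is the signal to retry, fall back to a rule-based answer, or route the item to a human. A check like this cannot prove an answer is true; it only narrows the ways it can be wrong.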

Is hybrid AI the future of NLP?

Yes. Gartner predicts 90% of enterprise language systems will be hybrid by 2027. NLP handles cost-sensitive, deterministic tasks. LLMs handle creative, contextual ones. Together, they’re faster, cheaper, and more accurate than either alone.

Artificial Intelligence

Angelina Jefary

January 21, 2026 AT 14:25

Okay but have you seen how many NLP pipelines are secretly collecting user data and selling it to shadowy data brokers? I'm not saying the government's behind it... but why does every sentiment analyzer suddenly know when I'm mad at my ex? 🤔

Jennifer Kaiser

January 23, 2026 AT 03:36

It’s funny how we keep framing this as a tool choice when really it’s about power. Pipelines are control. LLMs are chaos. And the hybrid? That’s just capitalism trying to have both. We’re not optimizing for accuracy; we’re optimizing for profit. Who gets to decide what’s ‘important’ enough for an LLM? Who gets left out when the cost-per-request matters more than the human behind the text?

It’s not about which tool is better. It’s about who gets to speak, and who gets filtered out before the machine even listens.

TIARA SUKMA UTAMA

January 24, 2026 AT 13:45

Use spaCy. It’s free. LLMs cost money. Done.

Jasmine Oey

January 26, 2026 AT 02:42

OMG I’m literally crying tears of joy because this post finally gets it!! I’ve been screaming into the void for years that LLMs are NOT magic fairy dust!! And pipelines? They’re the unsung heroes of enterprise NLP!! I mean, come on!! Have you SEEN what happens when you let a GPT loose on customer service without a safety net?? It turns ‘I need a refund’ into a 500-word poetic ode to capitalism!! 😭😭😭

And don’t even get me started on hallucinations. I once saw an LLM invent a fake law that didn’t exist and convince a whole legal team it was real. I’m not even joking. It cited ‘Section 7.3.1 of the Digital Empathy Act’ which doesn’t exist. Like… what even is our future??

Hybrid systems are the only way. Pipelines are the bouncer. LLMs are the drunk guy at the party. You need the bouncer to keep the party from burning down.

Marissa Martin

January 27, 2026 AT 05:33

I don’t know why people keep defending pipelines like they’re the good guys. They’re just brittle. They don’t learn. They don’t adapt. They just… repeat. And LLMs? They’re messy, yes. But they’re alive in a way pipelines will never be. We’re scared of what we don’t understand. That’s all.

James Winter

January 28, 2026 AT 14:28

USA built the internet. Canada? Still using Excel sheets for NLP. Pipelines? Fine. But if you’re not using GPT-4, you’re falling behind. Stop being lazy.

Aimee Quenneville

January 29, 2026 AT 06:47

so like… pipelines are the boring dad who remembers your birthday, and llms are the wild aunt who shows up with a llama and a questionable life advice? 🤷‍♀️

also why does every tech post sound like a TED talk written by a startup founder on 5 energy drinks??

Cynthia Lamont

January 29, 2026 AT 09:07

Let’s be real: this whole ‘hybrid’ thing is just corporate speak for ‘we can’t afford to run GPT-4 on everything, so we’re gonna duct tape a rule engine to it and call it AI innovation.’

And don’t get me started on ‘NLP-guided prompting.’ That’s just a fancy way of saying ‘we fed the LLM cleaned data so it wouldn’t hallucinate as much.’

But hey, if you want to pay $500/day instead of $12,000/day, fine. But don’t pretend you’re building the future. You’re just doing cost-cutting with buzzwords.

Also, spaCy + GPT-4-turbo? That’s the bare minimum. If you’re not fine-tuning your own model on proprietary data, you’re not even playing the game. You’re just watching.

And don’t even mention ‘deterministic modes.’ That’s GPT-4 pretending to be a pipeline. It’s slower. It’s pricier. It’s just a pipeline with a fancy coat.

Real engineers don’t ‘choose between tools.’ They build systems that break the rules. And right now? The rules are written by vendors who want you to keep buying API credits.

Stop optimizing for cost. Start optimizing for control. And stop calling this ‘engineering.’ It’s just tech theater.

Kirk Doherty

January 30, 2026 AT 16:58

Works. Just works.