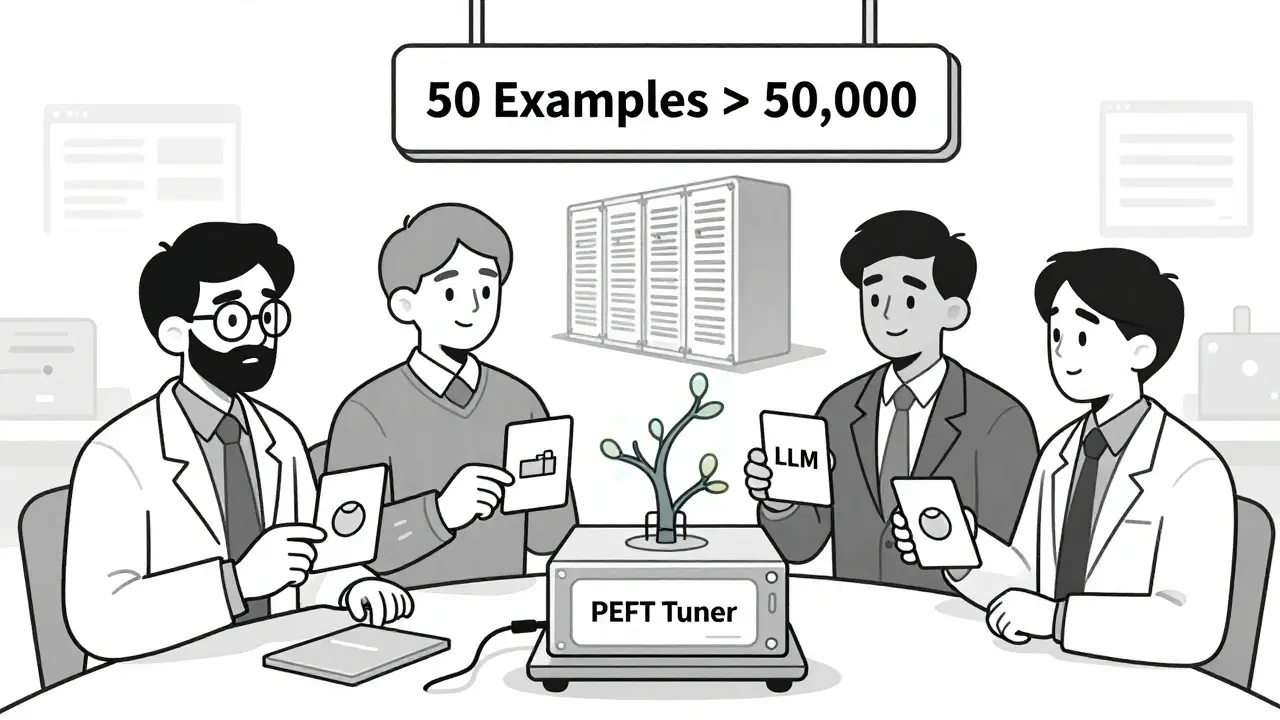

What if you could train a massive language model to do something specific, like summarizing medical records or flagging fraudulent transactions, with just 50 examples? Not 50,000. Not even 5,000. Just 50. That’s the promise of few-shot fine-tuning, and it’s changing how companies use AI when data is hard to come by.

Traditional fine-tuning used to mean feeding a model thousands, sometimes millions, of labeled examples. You needed a clean, well-annotated dataset. But in real life? That’s often impossible. Medical records are protected by HIPAA. Legal documents are confidential. Customer support logs are messy and scattered. In these cases, collecting enough data for full fine-tuning isn’t just hard; it can be illegal or impractical. That’s where few-shot fine-tuning steps in.

How Few-Shot Fine-Tuning Works (Without the Jargon)

Think of a large language model like a highly trained lawyer who’s read every law book ever written. Now, you want them to specialize in patent law. You don’t have time to make them read another 10,000 patent cases. Instead, you give them 50 carefully chosen examples: here’s a patent claim, here’s how it was interpreted, here’s the outcome. You don’t retrain the whole lawyer. You just adjust a tiny part of their brain: the part that handles legal reasoning.

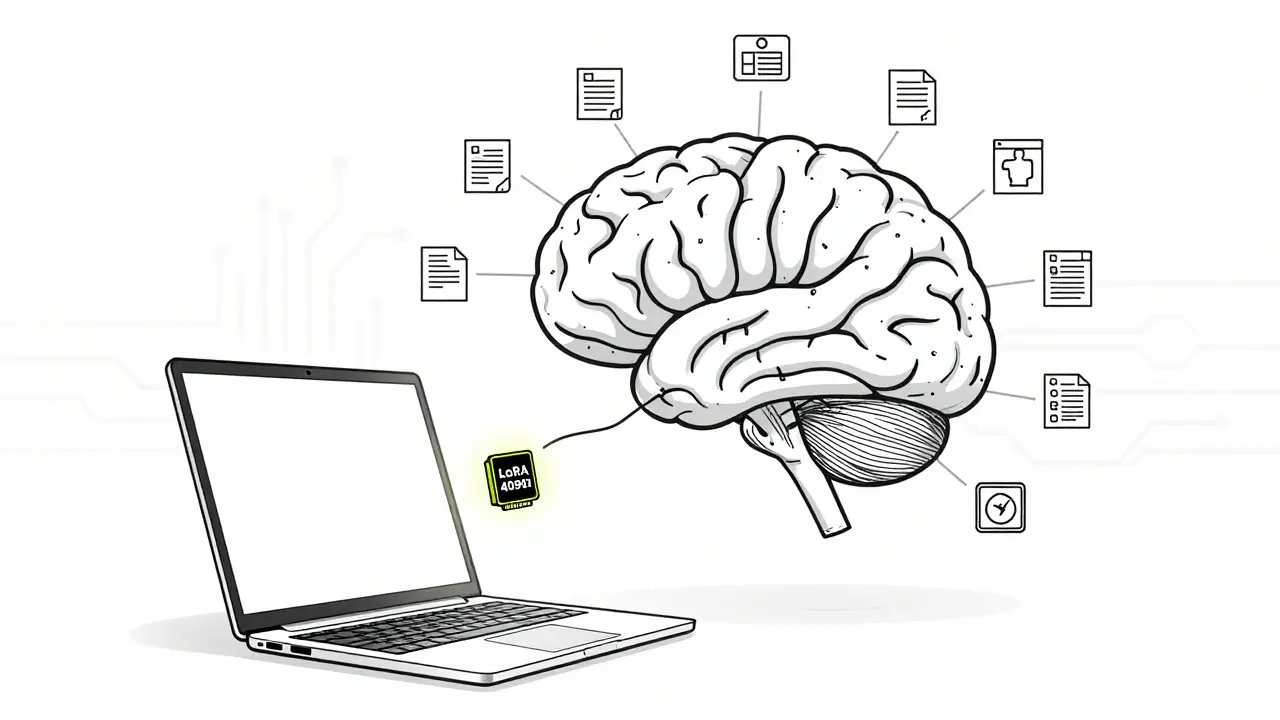

That’s what few-shot fine-tuning does. Instead of updating all the billions of parameters in models like LLaMA or GPT, it tweaks only a tiny fraction, sometimes less than 0.1%. This is done using techniques called Parameter-Efficient Fine-Tuning (PEFT). The most popular method is LoRA (Low-Rank Adaptation). It works by adding pairs of small, trainable low-rank matrices alongside the model’s existing weight matrices. These matrices act like plugins that learn the new task without touching the original weights.
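To make the "plugin" idea concrete, here is a minimal pure-Python sketch of one LoRA-adapted layer. The toy dimensions, the `alpha` scaling value, and the helper names are assumptions chosen for illustration; real implementations work on tensors inside a deep-learning framework.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x). W is frozen; only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

def lora_param_fraction(d_in, d_out, rank):
    """Trainable LoRA parameters as a fraction of one frozen d_out x d_in matrix."""
    return rank * (d_in + d_out) / (d_in * d_out)

random.seed(0)
d_in, d_out, rank, alpha = 6, 4, 2, 4  # toy sizes, not from any real model
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]     # frozen weights
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # trainable, rank x d_in
B = [[0.0] * rank for _ in range(d_out)]                                  # trainable, starts at zero
x = [1.0] * d_in

# Because B starts at zero, the adapted layer initially matches the frozen layer.
assert lora_forward(W, A, B, x, alpha, rank) == matvec(W, x)

# For a 4096 x 4096 matrix at rank 8, the adapter is 1/256 the size of the matrix.
print(f"{lora_param_fraction(4096, 4096, 8):.2%}")  # 0.39%
```

That 0.39% is for a single adapted matrix. The share of the whole model is lower still, because adapters are usually attached to only some of the weight matrices.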

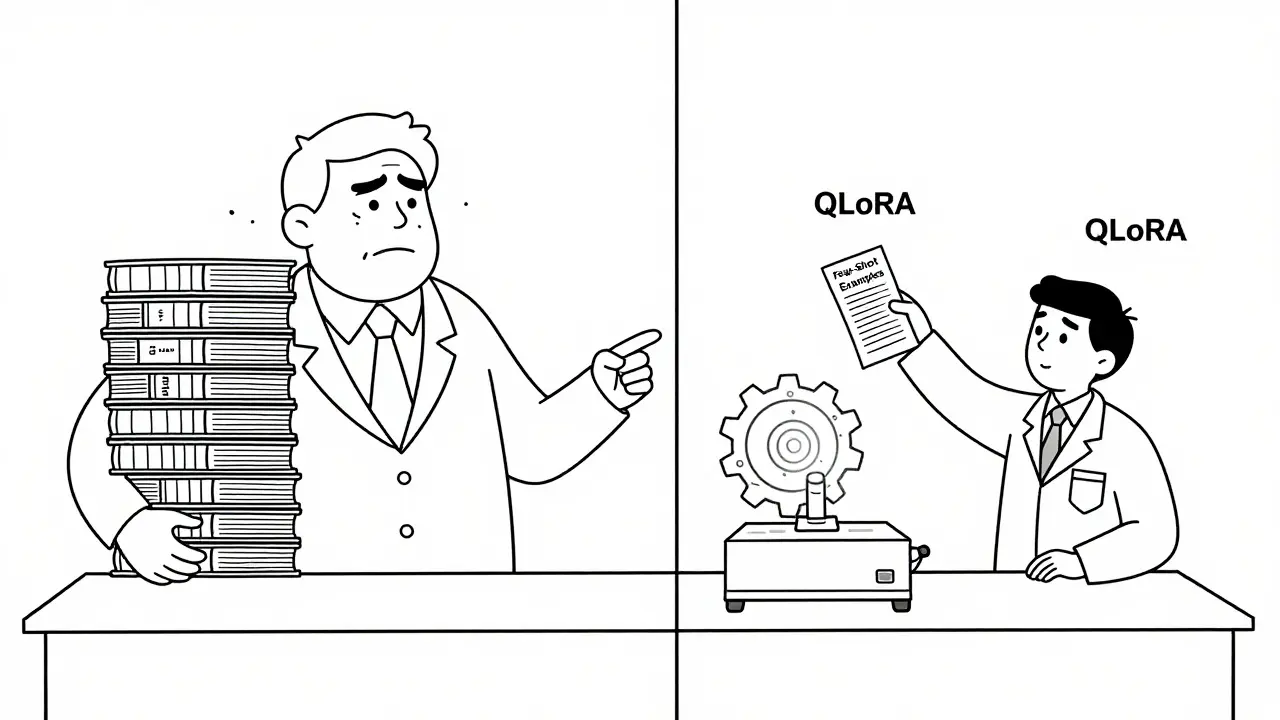

Then came QLoRA (Quantized Low-Rank Adaptation). This took LoRA and made it even more efficient. By storing the frozen base model in 4-bit precision, QLoRA shrinks the memory needed to fine-tune it. Where full fine-tuning of a 65-billion-parameter model once required more than 780GB of GPU memory, QLoRA does it in under 48GB. That means a 65B model fits on a single 48GB GPU, and models up to roughly 33B fit on a 24GB consumer card like the NVIDIA RTX 4090. No need for a cluster of expensive servers.
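The memory figures are easier to trust once you see where they can come from. The sketch below is back-of-the-envelope arithmetic, not a measurement: the 12-bytes-per-parameter breakdown is an assumption (one common accounting that happens to reproduce the 780GB figure), and it ignores activations entirely.

```python
def gigabytes(num_params, bytes_per_param):
    """Memory in GB (10^9 bytes) for a given parameter count."""
    return num_params * bytes_per_param / 1e9

PARAMS_65B = 65e9

# Assumed full fine-tuning cost per parameter:
# 2 bytes for 16-bit weights + 2 bytes for 16-bit gradients + 8 bytes of Adam state.
FULL_FT_BYTES = 2 + 2 + 8

# 4-bit storage is half a byte per parameter.
FOUR_BIT_BYTES = 0.5

full_ft_gb = gigabytes(PARAMS_65B, FULL_FT_BYTES)
frozen_4bit_gb = gigabytes(PARAMS_65B, FOUR_BIT_BYTES)

print(full_ft_gb)      # 780.0
print(frozen_4bit_gb)  # 32.5
```

The 4-bit weights alone come to about 32.5GB. The rest of the 48GB budget goes to the adapters, their optimizer state, quantization constants, and activations.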

Performance: How Good Is It Really?

Let’s cut through the hype. Few-shot fine-tuning doesn’t beat full fine-tuning. But it gets remarkably close.

On math reasoning tasks like GSM8K, QLoRA achieves 99.4% of the accuracy of full fine-tuning. In medical note summarization, Partners HealthCare saw a 22.7% boost in accuracy using just 75 labeled examples. A fintech startup cut their adaptation costs from $18,500 to $460 per task while keeping 94.3% of the performance. That’s not just savings; it’s a game-changer for small teams.

But here’s the catch: it doesn’t work well for everything. If you’re trying to adapt a model to a completely new language, few-shot fine-tuning only hits 63.2% accuracy, while full fine-tuning gets 81.4%. It also tends to hallucinate more (18.3% more than fully fine-tuned models) unless you tune the hyperparameters carefully.

Compare that to in-context learning (just prompting the model with examples). On medical coding tasks, fine-tuned models outperform zero-shot or few-shot prompting by 12-18%. Why? Because prompting relies on the model’s existing knowledge. Fine-tuning teaches it something new.

When Does It Fail?

Not every small dataset works. And not every 10 examples will do the trick.

Google Research’s 2025 guidelines say you need at least 50 carefully chosen examples per class for classification tasks. Below that, performance becomes unstable. One data scientist on Reddit spent 37 hours just tuning the learning rate on a model trained with 75 medical examples. Why? Because with so little data, the model latches onto patterns that aren’t real.

Overfitting is the silent killer. If your 20 examples all use the same phrasing, the model won’t generalize. It’ll memorize, not learn. That’s why curation matters more than quantity. A team at Mayo Clinic found that 75 high-quality examples outperformed 200 poorly labeled ones.
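One cheap curation check is to look for examples that are nearly identical before you train. The sketch below uses plain token overlap (Jaccard similarity); the 0.8 threshold and the sample sentences are made up for illustration, and a real pipeline might prefer embeddings.

```python
import re
from itertools import combinations

def tokens(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Token-set overlap between two strings, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)

def near_duplicates(examples, threshold=0.8):
    """Return index pairs whose token overlap meets the threshold."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(examples), 2)
        if jaccard(a, b) >= threshold
    ]

# Hypothetical training examples.
examples = [
    "Patient presents with chest pain radiating to the left arm.",
    "Patient presents with chest pain radiating to the left arm",
    "Fell from a ladder; swelling and bruising on the right ankle.",
]
print(near_duplicates(examples))  # [(0, 1)]
```

Every pair it flags is a candidate for swapping in a differently phrased example.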

Another pitfall? Learning rate. Too high, and the model overwrites what it learned in pretraining. Too low, and it barely moves. Most failures happen when the learning rate is above 2e-4. Experts recommend searching between 1e-5 and 5e-4 but starting at the low end, with batch sizes of 4-16 and 3-10 epochs. Early stopping is critical.
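Early stopping is simple enough to sketch in a few lines. This is a generic patience-based rule, not a specific library’s implementation; the `patience` value and the loss numbers are invented for the example.

```python
def early_stop(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 0-based.

    Training stops once validation loss has failed to improve by more than
    min_delta for `patience` epochs in a row.
    """
    best = float("inf")
    best_epoch = None
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving, then creeping back up.
losses = [1.20, 0.95, 0.90, 0.93, 0.97, 1.05]
print(early_stop(losses))  # (4, 2)
```

Here training halts at epoch 4, and you would keep the checkpoint from epoch 2, where validation loss was lowest.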

Who’s Using This, and Why?

It’s not just research labs. Real companies are using few-shot fine-tuning right now.

- Healthcare: 68% of AI initiatives in hospitals use PEFT methods because patient data can’t be shared. One system now summarizes ER notes with 92% accuracy using under 100 examples.

- Legal Tech: Law firms use it to extract clauses from contracts. With just 40 annotated documents, models learned to spot non-compete clauses with 89% precision.

- Finance: Compliance teams use it to flag suspicious transactions. A startup reduced their false positives by 31% after fine-tuning a model on 60 labeled fraud cases.

The market is responding. The global LLM customization market hit $3.8 billion in 2025, with PEFT methods making up 54% of it. Google Cloud, Microsoft Azure, and NVIDIA (after acquiring OctoML) now bake PEFT tools into their platforms. You don’t need to build this from scratch anymore.

Getting Started: What You Actually Need

Here’s how to begin. No PhD required.

- Pick your task. What exactly do you want the model to do? Summarize? Classify? Extract? Be specific.

- Collect 50-100 high-quality examples. This is the hardest part. You need domain experts (doctors, lawyers, analysts) to label them. Expect to spend 8-40 hours per task.

- Use Hugging Face’s PEFT library. It works with Transformers and bitsandbytes, which together support 4-bit model loading for QLoRA, so most of the setup is already done for you. Just load your model in 4-bit, attach a LoRA adapter, and start training.

- Set hyperparameters. Start with rank=8, learning_rate=3e-5, batch_size=8, epochs=5. Monitor loss. If it spikes, lower the learning rate.

- Evaluate on unseen examples. Don’t just test on your training data. Use 10-20 held-out examples. If accuracy drops more than 10%, you’re overfitting.
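The steps above can be sketched roughly as follows. Treat this as a starting point under stated assumptions, not a definitive recipe: `lora_alpha`, `lora_dropout`, and the `q_proj`/`v_proj` target modules are illustrative choices that depend on your model, and `build_qlora_model` needs `torch`, `transformers`, `peft`, `bitsandbytes`, and a CUDA GPU, which is why its imports sit inside the function.

```python
# Starting values quoted in the steps above.
HYPERPARAMS = {"rank": 8, "learning_rate": 3e-5, "batch_size": 8, "epochs": 5}

def build_qlora_model(model_id, target_modules=("q_proj", "v_proj")):
    """Load a causal LM in 4-bit and attach a LoRA adapter (requires a GPU)."""
    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training

    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_use_double_quant=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_id, quantization_config=bnb_config, device_map="auto"
    )
    model = prepare_model_for_kbit_training(model)
    lora_config = LoraConfig(
        r=HYPERPARAMS["rank"],
        lora_alpha=16,       # assumption: a common choice, not from the article
        lora_dropout=0.05,   # assumption: a common choice, not from the article
        target_modules=list(target_modules),  # assumption: LLaMA-style layer names
        task_type="CAUSAL_LM",
    )
    model = get_peft_model(model, lora_config)
    model.print_trainable_parameters()  # reports trainable vs. total parameters
    return model

def is_overfitting(train_accuracy, heldout_accuracy, max_gap=0.10):
    """Flag a train-to-held-out accuracy drop of more than 10 points.

    Reading "drops more than 10%" as percentage points is an assumption.
    """
    return (train_accuracy - heldout_accuracy) > max_gap

print(is_overfitting(0.95, 0.80))  # True
print(is_overfitting(0.92, 0.88))  # False
```

From here you would pass the returned model to whatever training loop you prefer, using the learning rate, batch size, and epoch count from `HYPERPARAMS`.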

Most people succeed if they focus on data quality, not quantity. One user on Hugging Face’s forum said: “I had 17 examples. It failed. I swapped 3 bad ones for 3 better ones. It worked.”

The Future: What’s Coming Next

The next leap isn’t just about using less data; it’s about finding the right data automatically.

Meta AI’s Dynamic Rank Adjustment (a new technique announced in January 2026) automatically adjusts the LoRA rank during training. It’s like the model deciding for itself how much to change. It improved performance by 4.7% across 15 tasks.

Stanford’s 2026 roadmap predicts “automated example selection systems” within 18 months. These tools will scan your unlabeled documents and pick the 10 most informative ones to label. Imagine uploading 10,000 legal contracts and getting back a list of the 10 that will teach your model the most.
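Nobody outside those labs knows how such a system will work, but the core idea can be illustrated with a simple heuristic. The sketch below is an assumption, not the predicted tool: it uses diversity as a stand-in for "informative" and greedily picks documents that overlap least with what has already been chosen.

```python
import re

def tokens(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def distance(a, b):
    """1 minus Jaccard similarity of the two token sets."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    if not union:
        return 0.0
    return 1.0 - len(ta & tb) / len(union)

def select_diverse(documents, k):
    """Greedy farthest-first selection; returns the indices of the chosen documents."""
    if not documents or k <= 0:
        return []
    chosen = [0]  # start from the first document
    while len(chosen) < min(k, len(documents)):
        remaining = [i for i in range(len(documents)) if i not in chosen]
        # Pick the document whose nearest chosen neighbour is farthest away.
        best = max(
            remaining,
            key=lambda i: min(distance(documents[i], documents[j]) for j in chosen),
        )
        chosen.append(best)
    return chosen

# Hypothetical contract snippets.
docs = [
    "non-compete clause for sales staff",
    "non-compete clause for sales managers",
    "indemnification for data breach losses",
]
print(select_diverse(docs, 2))  # [0, 2]
```

The second non-compete snippet is skipped because it adds almost nothing the first one didn’t already cover.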

But there’s a warning: as cloud providers integrate these tools into their platforms, standalone PEFT tools may shrink. Forrester predicts a 35% drop in the market for specialized tuning tools by 2027. The real value is shifting from software to expertise: knowing how to curate data, tune models, and validate results.

Final Take: Is It Worth It?

If you’re working in a field with tight data rules (healthcare, law, finance, government), then yes. Few-shot fine-tuning isn’t magic. It won’t turn a 10-example dataset into a perfect model. But if you have even 50 clean examples, it gives you superpowers. You can adapt massive models to your exact needs without breaking budgets or violating privacy laws.

The barrier to entry has never been lower. You can do this on a single workstation GPU. You don’t need a team of engineers. You just need a few good examples and the patience to tune the model right.

And that’s the real breakthrough: in a world drowning in data, the rarest resource isn’t compute; it’s quality examples. Few-shot fine-tuning teaches us to value them.

Can few-shot fine-tuning work with fewer than 10 examples?

Technically, yes, but results are unreliable. Research from Google and Stanford shows performance drops sharply below 20 examples. Models start memorizing noise instead of learning patterns. Experts recommend at least 50 examples per class for classification tasks. If you have fewer than 10, consider in-context learning (prompting) instead.

Do I need a powerful GPU to run QLoRA?

No. QLoRA was designed to run on modest hardware. You can fine-tune a 65B-parameter model on a single 48GB GPU, and a model of around 33B parameters on a single NVIDIA RTX 4090 with 24GB VRAM. This is a major shift from earlier methods that required multiple 80GB A100s. A 7B model can typically be tuned on a 12GB GPU like the RTX 3060.

Is QLoRA better than LoRA?

QLoRA is an upgrade to LoRA. It uses 4-bit quantization to reduce memory usage by 70% while preserving 99% of performance. If you have limited GPU memory, QLoRA is the clear choice. If you’re working with smaller models (7B-13B) and have plenty of memory, LoRA is simpler and just as effective.

Can I use few-shot fine-tuning for languages I’ve never trained on?

Not well. Few-shot fine-tuning works best when the model already has some knowledge of the language. For example, fine-tuning a model on Swedish medical terms works because the model already understands Swedish. But if you try to adapt a model trained on English to a completely new language like Basque with only 50 examples, accuracy drops to 63%. Full fine-tuning or multilingual pretraining is better for new languages.

What’s the biggest mistake people make when trying few-shot fine-tuning?

They focus on quantity over quality. Many teams collect 200 examples, but 70% are duplicates, poorly labeled, or off-topic. The real win comes from 50 expert-curated examples. Also, most skip hyperparameter tuning. A learning rate of 5e-4 instead of 3e-5 can cause training to collapse. Always start low and monitor loss carefully.

Is few-shot fine-tuning the same as prompt engineering?

No. Prompt engineering uses examples within the input to guide the model’s response without changing the model itself. Few-shot fine-tuning actually updates the model’s weights using those examples. The result? The model becomes better at the task permanently. Prompting is fast but inconsistent. Fine-tuning is slower but reliable.
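The difference shows up in what you do with the same labeled pairs. In this sketch the example texts, labels, and the record format are all hypothetical; the point is only that prompting packs the examples into every request, while fine-tuning turns them into training data once.

```python
def build_few_shot_prompt(examples, query, instruction="Classify the transaction."):
    """In-context learning: the examples travel inside every request."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Label: {label}", ""]
    lines += [f"Input: {query}", "Label:"]
    return "\n".join(lines)

def to_training_records(examples):
    """Fine-tuning: the same pairs become training records that update the weights."""
    return [
        {"prompt": f"Input: {text}\nLabel:", "completion": f" {label}"}
        for text, label in examples
    ]

# Hypothetical labeled examples.
examples = [
    ("Wire of $9,900 split across three accounts", "suspicious"),
    ("Monthly rent payment", "normal"),
]

prompt = build_few_shot_prompt(examples, "Gift card purchase of $2,000 at 3am")
records = to_training_records(examples)

print(prompt)
print(records[0])
```

After fine-tuning, the request can shrink to just the query, because the examples no longer need to ride along.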

Are there open-source tools I can use today?

Yes. Hugging Face’s PEFT library, used with Transformers and bitsandbytes for QLoRA, is the easiest way to start. Libraries like Unsloth and Axolotl are also available. All are free, open-source, and work with models from Hugging Face Hub.

How long does it take to fine-tune a model with few-shot data?

On a single RTX 4090, fine-tuning a 7B model with 100 examples takes about 20-40 minutes. For a 13B model, it’s 1-2 hours. With datasets this small, training time is driven mostly by model size rather than dataset size. Since you’re only updating a tiny fraction of parameters, even large models train quickly.

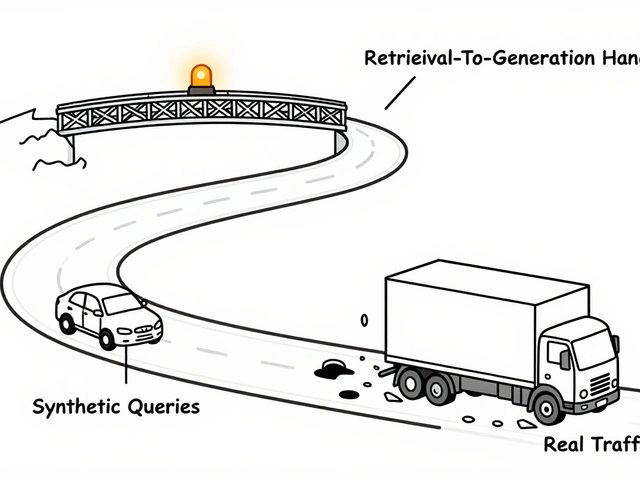

Can I combine few-shot fine-tuning with RAG (Retrieval-Augmented Generation)?

Absolutely. Many teams use fine-tuning to make the model better at understanding and generating responses, then use RAG to pull in up-to-date documents. For example, a legal firm might fine-tune a model on past case summaries, then use RAG to fetch recent rulings during inference. This combo is becoming standard in enterprise AI.
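Here is a toy sketch of the retrieval half of that combo. The keyword-overlap retriever and the sample documents are assumptions for illustration (production systems typically use embedding search), and the final prompt would be sent to your fine-tuned model, which is not shown.

```python
import re

def tokens(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Rank documents by how many query tokens they share and return the top k."""
    q = tokens(query)
    ranked = sorted(documents, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(query, documents, k=2):
    """Put the retrieved documents in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical document store.
docs = [
    "2024 ruling on non-compete clauses in employment contracts",
    "Office parking policy update",
    "Ruling on patent claim interpretation",
]
query = "recent ruling on non-compete clauses"

print(retrieve(query, docs, k=1))
print(build_rag_prompt(query, docs))
```

Fine-tuning shapes how the model reads and answers; retrieval decides what it gets to read.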

Will few-shot fine-tuning replace full fine-tuning?

Not entirely, but it will displace it in many practical cases. Full fine-tuning still wins on raw accuracy, especially with large datasets. But when data is scarce, expensive, or restricted, few-shot methods are the only viable option. Gartner predicts 78% of enterprise LLM deployments will use PEFT by 2026. That’s not replacement; it’s adaptation to reality.

Artificial Intelligence
