When you shrink a large language model from 16-bit to 4-bit precision, you’re not just making it smaller; you’re throwing away information. And if you don’t handle it right, the model starts giving weird, unreliable answers. This isn’t theoretical. A 4-bit quantized LLM without proper calibration can lose 50% of its accuracy on basic language tasks. That’s the difference between a model that understands context and one that confidently hallucinates facts.

Why Quantization Breaks Models

Large language models like Llama-3 or Mistral use 16-bit or 32-bit floating-point numbers to represent weights and activations. These numbers are precise but memory-heavy. A 7B-parameter model in FP16 takes up about 14GB. In 4-bit, it drops to under 4GB. That’s the dream: run big models on consumer GPUs, phones, or edge devices. But here’s the catch: LLMs don’t distribute their values evenly. Most weights cluster around zero, but 1-3% are extreme outliers: values that are 5x, 10x, even 20x larger than the rest. These outliers dominate the model’s behavior. When you force all values into a tight 4-bit range (just 16 levels), you have two bad options. Clip the outliers, and you destroy the information they carry. Keep them, and they stretch the quantization range so far that the ordinary values collapse onto a handful of levels near zero. Either way, accuracy plummets.

Calibration: Finding the Right Scale

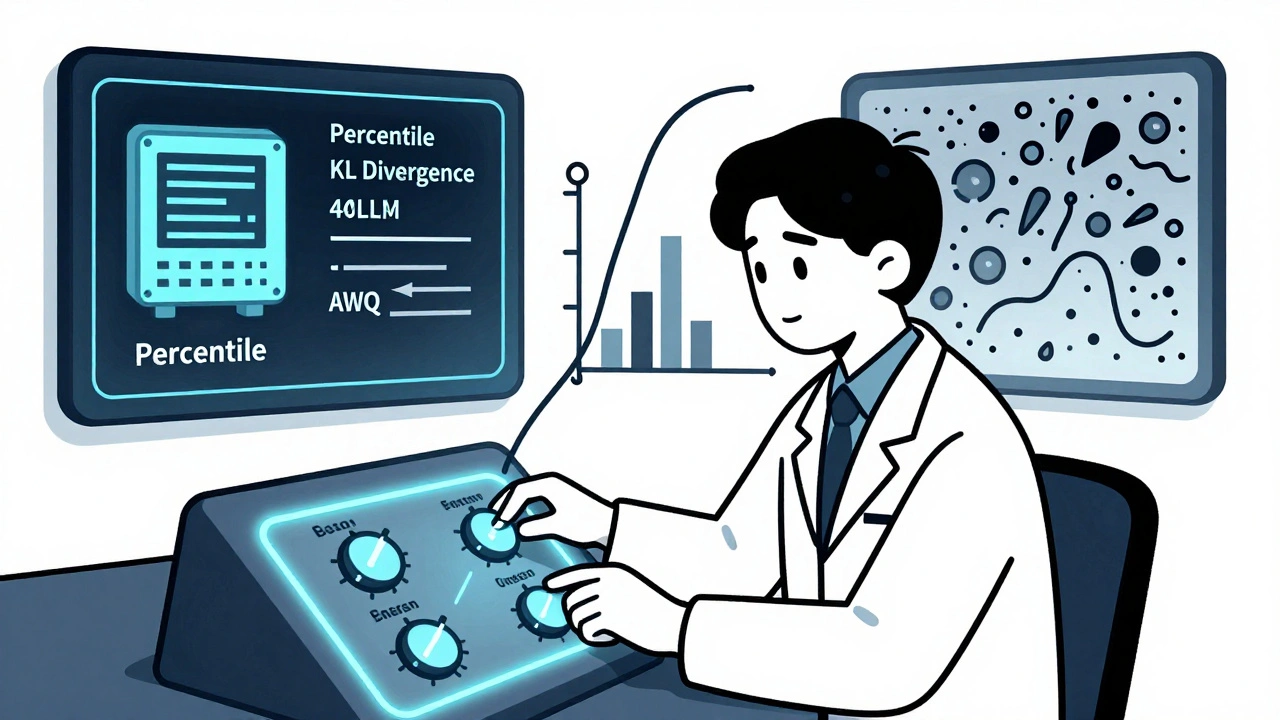

Calibration is the process of figuring out how to map the full range of real numbers into a tiny quantized space without losing too much meaning. Think of it like resizing a photo: if you just shrink it uniformly, the details get lost. Calibration finds the best way to crop or stretch the image so the important parts stay sharp.

The simplest method is min-max calibration. You run a small set of sample inputs (usually 128-512) through the model and record the highest and lowest activation values. Then you scale everything so that this observed range maps onto the full range of your 4-bit format. Easy. But it’s also dangerous. If one outlier activation hits 1000 while everything else is under 10, the entire range gets stretched to fit that one value. The result? 99% of your data gets squeezed into about 1% of the quantized range, and with only 16 levels available, nearly all of it lands on the same one or two levels. Accuracy drops fast.

Better approaches exist:

- Percentile calibration: Ignore the top 0.1%-1% of values. This cuts outlier influence without throwing out too much data. NVIDIA found this reduces calibration error by 15-25% over min-max for 8-bit quantization.

- KL divergence calibration: This method compares the original activation distribution to the quantized one and adjusts scaling to minimize the difference. It’s more accurate (5-10% better than min-max) but takes 2-3x longer and needs more samples (512-1024).

- MSE calibration: Minimizes the mean squared error between original and quantized outputs. Gives you a 3-7% accuracy bump over min-max with only 1.5-2x more time. A solid middle ground.

- Per-channel calibration: Instead of using one scale for all weights in a layer, use a different scale for each output channel. This gives finer control. It’s consistently 8-12% more accurate than per-tensor scaling, but adds 5-10% to model size because you have to store extra scaling factors.
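To make these differences concrete, here is a minimal pure-Python sketch of min-max, percentile, and MSE calibration for symmetric 4-bit fake quantization, followed by a per-tensor versus per-channel comparison. It is not how any particular toolkit implements its calibrators: the symmetric -7..7 level grid, the 99.9th-percentile default, the 100-point MSE search grid, and the synthetic data (Gaussian activations plus two outliers) are all assumptions chosen for illustration. KL divergence calibration is left out to keep the sketch short.

```python
import math
import random

QMAX = 7  # assumption: symmetric signed 4-bit levels -7..7 (the unused -8 level is ignored)


def quantize(values, scale):
    """Fake-quantize: map to the nearest integer level, clamp to 4 bits, map back to floats."""
    return [max(-QMAX, min(QMAX, round(v / scale))) * scale for v in values]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def minmax_scale(values):
    # Min-max calibration: the single largest magnitude sets the range.
    return max(abs(v) for v in values) / QMAX


def percentile_scale(values, pct=99.9):
    # Percentile calibration: clip at the pct-th percentile of |v|, ignoring the rarest extremes.
    mags = sorted(abs(v) for v in values)
    return mags[int(pct / 100 * (len(mags) - 1))] / QMAX


def mse_scale(values, steps=100):
    # MSE calibration: grid-search the clipping threshold that minimizes reconstruction error.
    amax = max(abs(v) for v in values)
    scales = [amax * i / (steps * QMAX) for i in range(1, steps + 1)]
    return min(scales, key=lambda s: mse(values, quantize(values, s)))


def per_tensor_error(rows):
    # One scale shared by every output channel (row) of the layer.
    s = minmax_scale([v for row in rows for v in row])
    return sum(mse(row, quantize(row, s)) for row in rows)


def per_channel_error(rows):
    # A separate scale per output channel; each extra scale has to be stored with the model.
    return sum(mse(row, quantize(row, minmax_scale(row))) for row in rows)


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical activations: 1,000 ordinary values plus two outliers 15-20x larger.
    acts = [random.gauss(0.0, 1.0) for _ in range(1000)] + [20.0, -15.0]
    ordinary = acts[:1000]
    for name, calib in [("min-max", minmax_scale), ("percentile", percentile_scale), ("mse", mse_scale)]:
        s = calib(acts)
        zeroed = sum(1 for v in ordinary if round(v / s) == 0) / len(ordinary)
        print(f"{name:>10}: scale={s:.3f}  mse={mse(acts, quantize(acts, s)):.4f}  "
              f"ordinary values rounded to 0: {zeroed:.0%}")

    # Hypothetical layer where one output channel has much larger weights than the other.
    w = [[0.1, -0.2, 0.3], [5.0, -4.0, 3.0]]
    print("per-tensor error: ", round(per_tensor_error(w), 4))
    print("per-channel error:", round(per_channel_error(w), 4))
```

On data like this, min-max rounds most ordinary values to zero, percentile keeps their resolution but clips the two outliers hard, and the MSE search settles wherever the total squared error is lowest. In the per-channel comparison, the small-weight channel gets its own fine-grained scale instead of being rounded away.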

Outlier Handling: The Secret Weapon

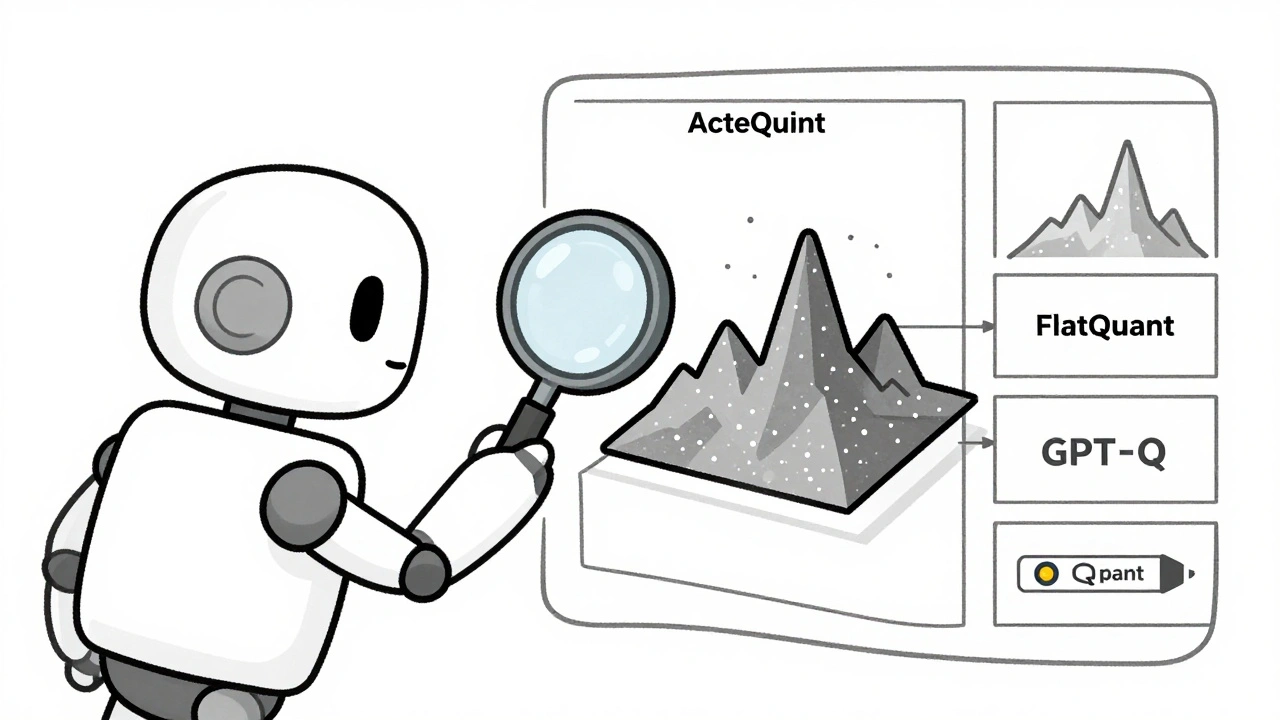

Calibration helps, but it’s not enough. Outliers are the real enemy. That’s where outlier handling techniques come in.

SmoothQuant, developed at MIT in 2022, shifts the problem from activations to weights. It divides each activation channel by a per-channel smoothing factor, controlled by a migration strength α (usually 0.5), and multiplies the matching weights by the same factor. The layer’s output is mathematically unchanged, but the extreme activation values shrink. This reduces outlier impact by 35-45%. It’s simple to implement and works well with existing quantization pipelines.

AWQ (Activation-aware Weight Quantization), from MIT’s Han Lab (the group behind SmoothQuant), goes further. Instead of just clipping outliers, it uses calibration activations to find the weight channels that matter most (those multiplied by large activations) and scales them up before quantization so they lose less precision, searching for the per-channel scales that minimize the layer’s output error. On the MMLU benchmark, AWQ at 4-bit hits 58.7% accuracy. Standard post-training quantization? Only 52.1%. That’s a 6.6-point jump, huge in LLM terms.

GPTQ (2022) takes a different route. It quantizes the weights one column at a time and, after each column, updates the not-yet-quantized weights to compensate for the rounding error, using second-order (Hessian) information computed from calibration inputs. For the OPT-175B model, this cut perplexity degradation from 45% down to 15-20% at 4-bit. GPTQ is implemented in tools like AutoGPTQ and is widely used in community models.

FlatQuant (2023) flips the script. Instead of fighting outliers, it flattens the activation distribution by learning optimal clipping thresholds. The result? It shrinks the accuracy gap between full-precision and 4-bit models from 15-20% down to just 5-8% on GLUE tasks.

ZeroQAT (2024) is a game-changer for people who can’t afford training. Quantization-Aware Training (QAT) usually requires retraining the model with simulated quantization, which takes days and needs the full dataset. ZeroQAT skips backpropagation entirely. It uses zeroth-order optimization, which estimates gradients from forward passes alone, to tweak parameters directly. It keeps 95-98% of QAT’s accuracy while cutting memory use by 60%.
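The SmoothQuant paper defines the per-channel smoothing factor as s_j = max|X_j|^α / max|W_j|^(1-α): activation channel j is divided by s_j and the matching weight row is multiplied by s_j. The pure-Python sketch below applies that formula to a tiny hypothetical layer (only the rescaling, no actual quantization) to show the two properties that matter: the layer output is unchanged, and with α = 0.5 each channel’s activation and weight maxima end up equal, so the outlier channel no longer dominates.

```python
def smooth(x, w, alpha=0.5):
    """SmoothQuant-style smoothing for y = x @ w.

    x: activations, tokens x in_features; w: weights, in_features x out_features.
    Returns (x / s, s * w, s); the product of the two results equals x @ w.
    """
    n_in = len(w)
    act_max = [max(abs(row[j]) for row in x) for j in range(n_in)]
    w_max = [max(abs(v) for v in w[j]) for j in range(n_in)]
    s = [act_max[j] ** alpha / w_max[j] ** (1 - alpha) for j in range(n_in)]
    x_s = [[row[j] / s[j] for j in range(n_in)] for row in x]  # shrink the activation outliers
    w_s = [[v * s[j] for v in w[j]] for j in range(n_in)]       # and absorb the factor into the weights
    return x_s, w_s, s


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


if __name__ == "__main__":
    # Hypothetical layer: input channel 1 carries activations ~50x larger than channel 0.
    x = [[1.0, 100.0], [-2.0, 80.0]]
    w = [[0.5, -1.0], [0.01, 0.02]]
    x_s, w_s, s = smooth(x, w)
    print("smoothing factors:", [round(v, 3) for v in s])
    print("max |activation| before:", max(abs(v) for r in x for v in r))
    print("max |activation| after: ", round(max(abs(v) for r in x_s for v in r), 3))
    print("output before:", matmul(x, w))
    print("output after: ", [[round(v, 6) for v in r] for r in matmul(x_s, w_s)])
```

Raising α pushes more of the difficulty onto the weights; lowering it leaves more in the activations.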
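A simplified AWQ-style search can be sketched as follows. Following the idea in the AWQ paper, each input channel’s scale comes from its average activation magnitude, s_j = mean|x_j|^α, and α is grid-searched over [0, 1] to minimize the layer’s output error on calibration data. This is a toy under loud assumptions: it quantizes each output row with a single absmax scale instead of AWQ’s small weight groups, skips the extra refinements of the reference implementation (such as weight clipping), and runs on random hypothetical data.

```python
import random

QMAX = 7  # assumption: symmetric signed 4-bit levels -7..7


def quantize_rows(w):
    """Weight-only 4-bit round-to-nearest with one absmax scale per output row."""
    out = []
    for row in w:
        scale = max(abs(v) for v in row) / QMAX or 1.0
        out.append([max(-QMAX, min(QMAX, round(v / scale))) * scale for v in row])
    return out


def output_error(w, x, s):
    """Squared output error when W * diag(s) is quantized and X / s is fed in (w: out x in)."""
    w_q = quantize_rows([[v * s[j] for j, v in enumerate(row)] for row in w])
    err = 0.0
    for xt in x:
        for row, row_q in zip(w, w_q):
            ref = sum(a * b for a, b in zip(xt, row))
            approx = sum(xt[j] / s[j] * row_q[j] for j in range(len(row)))
            err += (ref - approx) ** 2
    return err


def awq_style_search(w, x, grid=20):
    """Grid-search alpha in s_j = mean|x_j| ** alpha; alpha = 0 is plain round-to-nearest."""
    n_in = len(w[0])
    act_mag = [max(sum(abs(xt[j]) for xt in x) / len(x), 1e-8) for j in range(n_in)]
    best = None
    for i in range(grid + 1):
        alpha = i / grid
        s = [m ** alpha for m in act_mag]
        e = output_error(w, x, s)
        if best is None or e < best[0]:
            best = (e, alpha, s)
    return best  # (error, alpha, scales)


if __name__ == "__main__":
    rng = random.Random(0)
    n_in, n_out = 8, 4
    # Hypothetical calibration activations: channel 0 is "salient" (10x larger than the rest).
    x = [[rng.gauss(0, 10.0 if j == 0 else 1.0) for j in range(n_in)] for _ in range(64)]
    w = [[rng.gauss(0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    rtn = output_error(w, x, [1.0] * n_in)
    err, alpha, _ = awq_style_search(w, x)
    print(f"round-to-nearest error: {rtn:.3f}")
    print(f"AWQ-style error:        {err:.3f} (alpha={alpha:.2f})")
```

Because α = 0 reproduces plain round-to-nearest, the search can never do worse than it on the calibration set; how much better it does depends on how skewed the activations are.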
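The core GPTQ loop can be sketched in pure Python. It builds the Hessian proxy H = 2XᵀX from calibration inputs, adds a small damping term, and then quantizes one column at a time, pushing each column’s rounding error onto the remaining columns through the inverse Hessian. This sketch uses the simpler Optimal Brain Quantization-style update rather than the blocked Cholesky formulation the GPTQ paper uses for speed, keeps one absmax scale per row, and runs on hypothetical correlated random inputs.

```python
import random

QMAX = 7  # assumption: symmetric signed 4-bit levels -7..7


def invert(m):
    """Gauss-Jordan inversion with partial pivoting (adequate for this small example)."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        piv = a[c][c]
        a[c] = [v / piv for v in a[c]]
        for r in range(n):
            if r != c and a[r][c] != 0.0:
                f = a[r][c]
                a[r] = [v - f * pc for v, pc in zip(a[r], a[c])]
    return [row[n:] for row in a]


def row_scales(w):
    return [max(abs(v) for v in row) / QMAX or 1.0 for row in w]


def q(v, scale):
    return max(-QMAX, min(QMAX, round(v / scale))) * scale


def gptq_quantize(w, x, damp=0.01):
    """w: out x in weights; x: calibration inputs (tokens x in). Returns quantized weights."""
    n = len(w[0])
    h = [[2.0 * sum(t[i] * t[j] for t in x) for j in range(n)] for i in range(n)]  # H = 2 X^T X
    lam = damp * sum(h[i][i] for i in range(n)) / n
    for i in range(n):
        h[i][i] += lam  # dampening keeps H well conditioned
    hinv = invert(h)
    scales = row_scales(w)  # the quantization grid is fixed up front
    w = [list(row) for row in w]  # working copy that absorbs the compensation updates
    out = [[0.0] * n for _ in w]
    for i in range(n):  # quantize one input column at a time
        d = hinv[i][i]
        for r, row in enumerate(w):
            out[r][i] = q(row[i], scales[r])
            e = (row[i] - out[r][i]) / d
            for j in range(i + 1, n):
                row[j] -= e * hinv[i][j]  # spread the error onto columns not yet quantized
        # remove column i from the inverse Hessian before moving on
        hinv = [[hinv[a][b] - hinv[a][i] * hinv[i][b] / d for b in range(n)] for a in range(n)]
    return out


def output_error(w, w_q, x):
    return sum((sum(a * b for a, b in zip(t, row)) - sum(a * b for a, b in zip(t, rq))) ** 2
               for t in x for row, rq in zip(w, w_q))


if __name__ == "__main__":
    rng = random.Random(0)
    n_in, n_out = 16, 4
    # Hypothetical correlated calibration inputs: a shared latent plus per-feature noise.
    x = []
    for _ in range(128):
        z = rng.gauss(0, 1)
        x.append([0.8 * z + 0.6 * rng.gauss(0, 1) for _ in range(n_in)])
    w = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_out)]
    rtn = [[q(v, s) for v in row] for row, s in zip(w, row_scales(w))]
    print(f"round-to-nearest output error: {output_error(w, rtn, x):.3f}")
    print(f"GPTQ-style output error:       {output_error(w, gptq_quantize(w, x), x):.3f}")
```

Correlated inputs are where the error compensation pays off most. If the inputs were independent, H would be diagonal, the off-diagonal updates would vanish, and the loop would reduce to plain round-to-nearest.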
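ZeroQAT’s exact update rule isn’t spelled out here, so the sketch below shows only the generic idea behind zeroth-order optimization: a two-point (SPSA-style) gradient estimate that perturbs every parameter along a random ±1 direction and needs just two forward evaluations of the loss, with no backpropagation. The toy least-squares loss and all hyperparameters (eps, lr, steps) are hypothetical; applying this to a quantized LLM, as ZeroQAT does, is well beyond this example.

```python
import random


def zo_gradient(loss, theta, eps=1e-3, rng=random):
    """Two-point (SPSA-style) gradient estimate from forward evaluations of `loss` only."""
    u = [rng.choice((-1.0, 1.0)) for _ in theta]  # random +/-1 perturbation direction
    plus = loss([t + eps * ui for t, ui in zip(theta, u)])
    minus = loss([t - eps * ui for t, ui in zip(theta, u)])
    slope = (plus - minus) / (2 * eps)  # finite-difference directional derivative along u
    # Averaged over random u this approximates the gradient (exactly, for a quadratic loss).
    return [slope * ui for ui in u]


def zo_sgd(loss, theta, lr=0.05, steps=500, seed=0):
    """Plain SGD driven by zeroth-order estimates: no backpropagation anywhere."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        g = zo_gradient(loss, theta, rng=rng)
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta


if __name__ == "__main__":
    data_rng = random.Random(1)
    # Hypothetical toy problem: recover the weights of a 3-input linear model.
    target = [2.0, -1.0, 0.5]
    xs = [[data_rng.gauss(0, 1) for _ in range(3)] for _ in range(50)]
    ys = [sum(t * xi for t, xi in zip(target, x)) for x in xs]

    def mse_loss(theta):
        return sum((sum(t * xi for t, xi in zip(theta, x)) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

    theta = zo_sgd(mse_loss, [0.0, 0.0, 0.0])
    print("learned:", [round(t, 4) for t in theta], "loss:", mse_loss(theta))
```

The memory saving comes from never storing activations or gradients for a backward pass; the price is noisier updates, which is why zeroth-order methods typically need more steps.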

What Works Best? A Quick Comparison

| Technique | Reported Gain vs Baseline (metric varies) | Calibration Time | Memory Overhead | Best For |
|---|---|---|---|---|
| Min-Max Calibration | Baseline | Low | None | Quick prototyping |
| Percentile Calibration | +15-25% | Low | None | General use |
| KL Divergence | +5-10% | High (2-3x) | None | High accuracy needed |
| MSE Calibration | +3-7% | Medium (1.5-2x) | None | Balanced speed/accuracy |
| Per-Channel Calibration | +8-12% | Medium | +5-10% | Server deployments |
| SmoothQuant | +10-15% | Low | None | Easy integration |
| AWQ | +6-12% | Medium | None | MMLU, reasoning tasks |
| GPTQ | +25-30% | High | None | Large models (70B+) |
| FlatQuant | +10-15% | Medium | None | General tasks, GLUE |
| ZeroQAT | 95-98% of QAT accuracy | Medium | 60% less memory than QAT | No training data available |

Real-World Trade-Offs

You can’t just pick the “best” technique. It depends on your constraints.

If you’re an individual developer trying to run Llama-3-8B on an RTX 3090, you care about speed and memory. GPTQ or AWQ with percentile calibration gives you a model of roughly 4GB with 90%+ of full-precision accuracy. Calibration is a one-time cost (the GPTQ authors report quantizing a 175B-parameter model in about four GPU hours, so an 8B model takes far less), and once it’s done, inference is fast.

If you’re deploying in production and need reliability, per-channel AWQ with KL divergence calibration is the gold standard. But you’ll pay in memory and time. The model is bigger. Calibration takes longer. You need more GPU RAM.

QAT gives the best accuracy (3-5% better than PTQ), but it needs the original training data and days of compute. For a 70B model, that’s over $1 million in cloud costs. ZeroQAT changes that. It gets you 97% of QAT’s accuracy without backpropagation. That’s huge for startups and researchers without deep pockets.
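The memory figures in this article come from simple arithmetic: parameters × bits per weight ÷ 8. The sketch below adds one detail that 4-bit formats usually carry, a stored scale for each group of weights. The FP16 scale and the group size of 128 are assumptions (a common GPTQ/AWQ setting), and the estimate ignores activations, the KV cache, and any layers kept in higher precision.

```python
def weight_memory_gb(n_params, bits, group_size=None, scale_bits=16):
    """Storage for the weights alone, in GB (1e9 bytes).

    Optional per-group scales add scale_bits / group_size extra bits per weight.
    """
    bits_per_weight = bits + (scale_bits / group_size if group_size else 0.0)
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    print(f"7B,  FP16:  {weight_memory_gb(7e9, 16):.2f} GB")
    print(f"7B,  4-bit: {weight_memory_gb(7e9, 4):.2f} GB")
    print(f"8B,  4-bit, one FP16 scale per 128 weights: {weight_memory_gb(8e9, 4, group_size=128):.2f} GB")
    print(f"70B, 4-bit, one FP16 scale per 128 weights: {weight_memory_gb(70e9, 4, group_size=128):.2f} GB")
```

Smaller groups track outliers more closely but store more scales, which is the same accuracy-versus-size trade-off as per-channel calibration.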

What Experts Say

Maarten Grootendorst calls calibration “the most critical step in PTQ,” saying it determines 70-80% of final accuracy. NVIDIA says AWQ closes the gap between quantized and full-precision models by 6-8 points. But the reality is messier. A 2025 ACL paper from Stanford and MIT found that even the best quantized models have 15-25% higher calibration error than full-precision ones. Here “calibration” means something different: how well a model’s confidence matches its actual accuracy. Higher calibration error means quantized models are less confident when they’re right and more confident when they’re wrong. That’s dangerous in medical, legal, or financial apps. Dr. Younes Belkada, who helped integrate bitsandbytes into Hugging Face Transformers, says outlier handling contributes 40-50% of accuracy preservation in 4-bit models. That’s not a side note; it’s the core. Without it, quantization fails.

And users aren’t lying. On Reddit and Hugging Face, people report that changing a single calibration sample can drop accuracy by 20 points. Calibration feels like black magic because the math is opaque and the results are unstable.

How to Get Started

Here’s a practical path:

- Start with a 7B-13B model like Llama-2-7B or Mistral-7B. Larger models are harder to debug.

- Use the bitsandbytes library through Hugging Face Transformers for a quick 4-bit baseline. It’s simple and well-documented, and it quantizes at load time without any calibration data.

- For calibration-based methods (percentile, AWQ, GPTQ), run calibration with 256-512 samples from your target domain. Don’t use random data.

- Try percentile calibration first (ignore top 0.5%). If accuracy is still low, switch to AWQ.

- Test on a few real prompts. Don’t just rely on benchmarks.

- If you have time and GPU memory, try GPTQ for maximum accuracy. If you don’t have training data, try ZeroQAT.
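For calibration-based methods, the calibration set is usually a few hundred fixed-length token windows cut from in-domain text. Here is a minimal sketch of that step. The function name, the defaults (256 samples of 512 tokens), and the whitespace tokenizer are hypothetical stand-ins, not any library’s API.

```python
import random


def build_calibration_set(documents, n_samples=256, seq_len=512, tokenize=str.split, seed=0):
    """Sample fixed-length token windows from in-domain documents (hypothetical helper).

    tokenize: any callable mapping a string to a list of tokens; str.split is only a stand-in.
    """
    rng = random.Random(seed)
    token_lists = [tokenize(doc) for doc in documents]
    long_enough = [toks for toks in token_lists if len(toks) >= seq_len]
    if not long_enough:
        raise ValueError("no document has at least seq_len tokens")
    samples = []
    for _ in range(n_samples):
        toks = rng.choice(long_enough)               # pick an in-domain document
        start = rng.randint(0, len(toks) - seq_len)  # pick a random window inside it
        samples.append(toks[start:start + seq_len])
    return samples


if __name__ == "__main__":
    docs = ["the patient presented with acute chest pain and shortness of breath",
            "dosage was adjusted after the second round of lab results came back"]
    for s in build_calibration_set(docs, n_samples=3, seq_len=5):
        print(s)
```

With a Hugging Face tokenizer, passing something like `tokenize=lambda text: tokenizer(text)["input_ids"]` should produce token-ID windows instead of words.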
