Government agencies are no longer asking if they should use AI to write code. They’re figuring out how to buy it right. In 2025, AI Coding as a Service (AI CaaS) isn’t a buzzword anymore. It’s a core part of federal software development. But buying AI that writes code is nothing like buying software licenses. You’re not just paying for a tool. You’re paying for reliability, security, and accountability in code that could run critical systems, from tax processing to defense logistics. And that changes everything about how contracts are written, how performance is measured, and who’s liable when things go wrong.

What Exactly Is AI Coding as a Service?

AI CaaS delivers automated code generation, debugging, and documentation through cloud-based APIs. Think of it like having a senior developer sitting at your desk, typing out functions in Python, Java, or C# based on your prompts. But this developer doesn’t sleep, doesn’t get tired, and doesn’t ask questions. It just generates code. The difference between commercial tools like GitHub Copilot and what government agencies now require? It’s not just about speed or cost. It’s about control.

Commercial AI coding tools work great for startups and private companies. They’re cheap, fast to deploy, and update every few weeks. But in government, you can’t just plug in a tool and hope it doesn’t generate a security flaw in a payroll system. That’s why agencies now demand AI CaaS solutions that meet strict technical and compliance standards, standards that don’t exist in the commercial market.

Why Government Contracts Are Different

Most commercial AI coding services charge per user or per token. GitHub Copilot costs $10 per user per month. Amazon CodeWhisperer is $8.40. Simple. Predictable. But federal contracts? They don’t work that way. The GSA added AI CaaS to its Multiple Award Schedule in August 2025 under SIN 54151-9, meaning agencies now buy these services through fixed-price or time-and-materials contracts, not subscriptions.

Why? Because you can’t have a vendor walk away when its generated code introduces a bug in a VA benefits system. You need accountability. You need SLAs that back up performance with real consequences. And you need to know exactly who owns the code the AI writes.

Commercial tools train on public code. Government systems use proprietary standards, legacy codebases, and classified workflows. That’s why agencies now require vendors to prove their AI models can generate code that follows Federal Source Code Policy, FISMA, and even NASA-STD-8739.8. If the AI doesn’t know how to write code that complies with your agency’s rules, it’s useless, even if it’s 95% accurate on GitHub repos.

Key SLA Requirements for AI CaaS Contracts

Government SLAs for AI CaaS aren’t vague promises. They’re measurable, enforceable, and backed by penalties. Here’s what’s required as of December 2025:

- Code Accuracy: Minimum 92% accuracy rate, verified by third-party testing across 10 government-relevant languages (Python, Java, C#, SQL, etc.). This isn’t a suggestion. It’s a contract term.

- Latency: 95% of code generation requests must respond in under 2.5 seconds. If the vendor misses that target, the agency pays less.

- Uptime: 99.85% availability. Miss that by 0.1%, and the vendor pays 0.5% of your monthly contract value as a penalty.

- Security: End-to-end encryption of all code snippets, air-gapped environments for sensitive projects, and quarterly penetration testing by FedRAMP-accredited firms.

- Scalability: Must support up to 50,000 concurrent users across agencies with no more than 15% performance drop at peak load.

- Integration: Must work with GitHub Enterprise, GitLab, and Code.gov. No exceptions.

These aren’t nice-to-haves. They’re non-negotiable. The Department of Defense’s CDAO reported that agencies using AI CaaS with these SLAs saw a 67% reduction in contract drafting time. But those without them? They ended up spending more time reviewing bad code than they saved.
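The measurable thresholds in the SLA list above lend themselves to an automated monthly check. What follows is a minimal sketch, not any agency's actual tooling: `MonthlyReport`, `evaluate`, and the field names are hypothetical, and the penalty rule is an assumption, since the text does not say whether the 0.5% is a flat charge or scales with the size of the shortfall. Here it is treated as a flat charge once availability falls 0.1 percentage points or more below target.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from the SLA list in this article.
MIN_ACCURACY = 0.92      # minimum code accuracy rate
LATENCY_LIMIT_S = 2.5    # per-request latency ceiling, in seconds
LATENCY_SHARE = 0.95     # share of requests that must beat the ceiling
MIN_UPTIME = 0.9985      # required availability
MAX_PEAK_DROP = 0.15     # allowed performance drop at peak load

# Assumption: flat penalty of 0.5% of monthly contract value once uptime
# is 0.1 percentage points or more below the target.
UPTIME_SHORTFALL_TRIGGER = 0.001
UPTIME_PENALTY_RATE = 0.005


@dataclass
class MonthlyReport:
    """Hypothetical monthly measurements from a third-party tester."""
    accuracy: float           # fraction of generated code judged correct
    latencies_s: List[float]  # response time of each request, in seconds
    uptime: float             # fraction of the month the service was up
    peak_drop: float          # fractional performance drop at peak load


def evaluate(report: MonthlyReport, monthly_value: float) -> dict:
    """Compare one month of measurements against the SLA thresholds."""
    if not report.latencies_s:
        raise ValueError("at least one latency sample is required")

    # Share of requests that answered in under the latency ceiling.
    fast = sum(1 for t in report.latencies_s if t < LATENCY_LIMIT_S)
    fast_share = fast / len(report.latencies_s)

    # Round to avoid float noise right at the trigger boundary.
    shortfall = round(MIN_UPTIME - report.uptime, 6)
    penalty = 0.0
    if shortfall >= UPTIME_SHORTFALL_TRIGGER:
        penalty = monthly_value * UPTIME_PENALTY_RATE

    return {
        "accuracy_ok": report.accuracy >= MIN_ACCURACY,
        "latency_ok": fast_share >= LATENCY_SHARE,
        "uptime_ok": report.uptime >= MIN_UPTIME,
        "scalability_ok": report.peak_drop <= MAX_PEAK_DROP,
        "uptime_penalty": penalty,
    }


if __name__ == "__main__":
    sample = MonthlyReport(
        accuracy=0.93,
        latencies_s=[1.0] * 96 + [3.0] * 4,
        uptime=0.9970,
        peak_drop=0.10,
    )
    print(evaluate(sample, monthly_value=100_000))
```

In this sample month the vendor passes on accuracy, latency, and scalability but misses availability, so the assumed penalty rule applies. A real contract would also need to define how each input is measured and by whom.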

Compliance Is the Real Differentiator

Here’s the truth: commercial AI coding tools are faster and cheaper. But they’re also risky in government settings. Only 63% of commercial AI tools are FedRAMP Moderate compliant. Government contracts? 100% are. That’s not a coincidence. It’s a requirement.

Agencies can’t afford to let an AI generate code that violates FAR 52.227-14 (Rights in Data-General) or 52.227-17 (Rights in Data-Special Works). If the AI writes a function that accidentally copies proprietary code from a vendor’s system, who’s liable? The agency? The vendor? The AI? The contract must answer that.

That’s why the OMB’s M-25-22 guidance, issued in April 2025 and applicable to solicitations issued on or after October 1, 2025, has become the de facto standard for 87% of federal AI contracts. It forces vendors to document not just how their AI works, but how it’s governed. They need to prove they can detect bias in code generation, track data lineage, and prevent training on government code without written consent.

One agency, the IRS, found that commercial AI tools only caught 65% of missing contract clauses. Their AI CaaS solution, fine-tuned to federal standards, caught 90%. That’s the gap. Not in speed. Not in price. In precision.

What Vendors Get Right, and What They Keep Getting Wrong

Successful AI CaaS vendors in government don’t just sell technology. They sell outcomes. The top performers, like Anthropic and Trenchant Analytics, don’t lead with their model size. They lead with use cases.

They show you how their AI automates FISMA documentation. How it generates secure API endpoints that comply with NIST standards. How it flags outdated FAR clauses in draft contracts. That’s what wins bids.

But many vendors still fail. The Government Accountability Office found that 43% of initial AI CaaS deployments hit integration roadblocks because the AI didn’t understand legacy government systems. One NASA contracting officer reported that 38% of initial AI-generated code failed to meet NASA-STD-8739.8 software assurance rules. That’s not a bug. That’s a procurement failure.

Another common mistake? Assuming AI can learn from internal code without consent. The GSA explicitly prohibits training models on government code unless the agency gives written permission. Some vendors tried to sneak in “learning” clauses. Those contracts got rejected.

Implementation Challenges Agencies Face

Getting AI CaaS working isn’t just about signing a contract. It’s about changing how people work.

Contracting officers need to learn new skills. The GSA’s training program shows it takes an average of 8.2 weeks for a procurement specialist to understand how to evaluate AI code accuracy, interpret bias reports, and assess intellectual property risks. That’s not a week-long webinar. That’s hands-on training with real code samples.

Integration is another hurdle. The IRS solved this by building their own Contract Clause Review Tool that cuts review time from six hours to six minutes. But not every agency has that kind of budget. Smaller agencies struggle to connect AI CaaS to old contract management systems. The result? AI generates perfect code. But no one can find it in their system.

Then there’s the human factor. A VA contracting officer reported that AI helped reduce proposal drafting from 40 hours to 6, but 15% of the outputs misapplied FAR clauses. That’s not a failure of the AI. It’s a failure of oversight. Every output needs a human reviewer who understands federal procurement rules.

Who’s Winning in AI CaaS Procurement?

The market is split. The Department of Defense leads with 68% of its software contracts using AI CaaS. HHS and IRS follow at 52% and 47%. But the real story is in the vendors.

Booz Allen Hamilton holds 22% of the market, not because they built the best AI, but because they’ve spent decades winning federal IT contracts. They bundle AI CaaS into larger service packages. Anthropic, with 15% share, wins by focusing on safety and transparency. Small businesses hold 31% of contracts through teaming arrangements, proving that innovation doesn’t always come from the giants.

Open-source tools like CodeLlama are gaining traction, but they’re not yet viable for mission-critical systems. Why? No SLAs. No support. No compliance guarantees. In government, that’s a dealbreaker.

What’s Next? The Roadmap Through 2026

The GSA is already planning ahead. By Q2 2026, they’ll release standardized SLA templates for AI CaaS. That means agencies won’t have to reinvent the wheel every time they buy. By Q4 2026, bias testing for code generation will be mandatory. Vendors will have to prove their AI doesn’t favor certain coding styles, languages, or developers based on race, gender, or background.

The bigger shift? Centralization. The GSA’s “OneGov” strategy aims to have 78% of federal agencies buy AI CaaS through GSA channels by 2027. That’s a massive consolidation. It means better pricing, better compliance, and fewer fragmented contracts.

And the numbers? The market is growing fast. $3.2 billion in FY2025. Projected to hit $5.8 billion by FY2027. But the real value isn’t in the dollar amount. It’s in the time saved, the errors prevented, and the contracts delivered faster.
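To put the growth figures in perspective, the two market numbers above imply an annual growth rate. This is a rough sketch using the standard compound annual growth rate formula. The function name is illustrative, and it assumes steady compounding over the two fiscal years between FY2025 and FY2027, which the article does not claim.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Market size in billions of dollars, as quoted in the article.
rate = implied_annual_growth(start=3.2, end=5.8, years=2)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption, the market would need to grow by roughly a third each year to reach the FY2027 projection.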

Final Thoughts: It’s Not About the AI. It’s About the Contract.

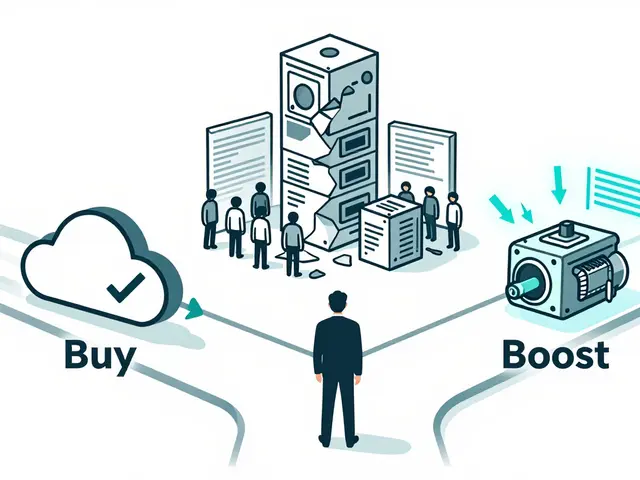

AI CaaS isn’t magic. It’s a tool. And like any tool, its value depends on how you use it. In government, the tool doesn’t matter if the contract doesn’t protect you.

Don’t buy AI because it’s trendy. Buy it because your SLAs force accuracy, security, and accountability. Don’t choose a vendor because they’re cheap. Choose one who can prove they’ve done this before, with agencies like yours.

The future of government software isn’t just automated code. It’s automated compliant code. And that’s a contract you can’t afford to get wrong.

Artificial Intelligence


Addison Smart

December 13, 2025 AT 06:42

Let me tell you something - this isn’t just about contracts anymore, it’s about redefining how government even thinks about technology. We’ve been stuck in this mindset that software is something you buy off a shelf, like copier paper or office chairs. But AI CaaS? It’s alive. It learns. It adapts. And if you don’t lock down the SLAs with surgical precision, you’re not just risking a bug - you’re risking a system failure that could delay benefits for veterans, miscalculate taxes for millions, or worse. The fact that agencies are finally demanding FedRAMP compliance, air-gapped environments, and third-party verification? That’s the bare minimum. What’s missing is a cultural shift - procurement officers need to become code-literate, not just contract-literate. We can’t outsource accountability to an algorithm and then act shocked when it generates a function that violates FAR 52.227-14. The real win here isn’t the 67% reduction in drafting time - it’s that we’re finally treating software as infrastructure, not a vendor’s feature list.

David Smith

December 15, 2025 AT 01:59

Ugh. Another ‘govtech guru’ article pretending AI is some revolutionary savior. Newsflash - it’s just a fancy autocomplete with a PR team. I’ve seen AI-generated code in my agency that wrote a loop that never ended. Took three days to catch it. And now we’re paying $500k/year for this? The real story is how many contractors are cashing in on fear and buzzwords. SLAs? Please. The only thing these contracts guarantee is more paperwork and slower decisions. If you want fast, reliable code, hire a real dev. Not a bot that thinks ‘if x == null’ is a security risk because it saw it once on Stack Overflow.

Lissa Veldhuis

December 15, 2025 AT 12:26

David you absolute dumpster fire of a human. You think hiring a real dev solves everything? LOL. You’re the same guy who thought Excel macros were ‘AI’ back in 2018. The AI didn’t crash because it was dumb - it crashed because YOUR team didn’t train it on your legacy COBOL mess. And now you’re mad because the system actually WORKED 92% of the time? The IRS caught 90% of missing clauses - you’d be out of a job if they didn’t. Stop being a Luddite with a keyboard and learn how to use the damn tool. Or better yet - go back to filing paper forms. Maybe then you’ll stop complaining.

Michael Jones

December 15, 2025 AT 14:54

There’s something beautiful about this whole shift - we’re finally moving from ownership to stewardship. For decades we treated software like property - buy it, lock it up, ignore updates, hope it doesn’t break. But AI CaaS? It’s a living process. It demands collaboration. It forces us to ask - who owns the output? Who’s responsible for the bias? Who’s accountable when the machine writes something that breaks a law? These aren’t legal questions anymore - they’re philosophical ones. And honestly? That’s good. We’ve been avoiding the hard questions about technology and power for too long. The SLAs aren’t just clauses - they’re ethics made concrete. If we can get this right, we’re not just fixing procurement - we’re redefining the relationship between humans and machines in public service.

allison berroteran

December 17, 2025 AT 09:09

I’ve been working in federal IT for over 15 years and I’ve seen every ‘revolution’ come and go - cloud, blockchain, blockchain-in-the-cloud, you name it. But this one? This feels different. Not because the tech is magic - it’s not - but because the people who wrote these SLAs actually listened to the folks on the ground. The requirement for air-gapped environments? That came from cybersecurity analysts who’ve seen what happens when AI models get trained on leaked internal docs. The 92% accuracy standard? That was pushed by testers who spent months manually reviewing AI output. And the integration with Code.gov? That’s the quiet win - it means we’re finally building something that connects, instead of siloing. I’m not saying it’s perfect - the training time for contracting officers is still brutal - but for the first time, I feel like we’re building something that won’t collapse under its own weight in five years.

Gabby Love

December 18, 2025 AT 02:41

Small correction: the GSA’s SIN 54151-9 was added in August 2024, not 2025. The article says 2025, but the actual FAR update was published in August 2024. Also, NASA-STD-8739.8 was updated in March 2025 - the version referenced here is correct, but the timeline in the article is slightly off. Other than that, this is one of the clearest overviews I’ve seen on AI CaaS procurement. Good job.

Jen Kay

December 19, 2025 AT 07:06

Wow. So let me get this straight - we’re spending billions to automate code generation, but we still need humans to review every line because the AI can’t understand that ‘FAR 52.227-14’ isn’t just a string in a document, it’s a legal obligation with teeth? That’s not innovation. That’s just outsourcing the grunt work to a robot that still needs a babysitter. And yet somehow this is the future? The real tragedy isn’t the cost - it’s that we’re so desperate to look ‘modern’ that we’re pretending a glorified autocomplete is a solution. If the AI can’t even parse a contract clause without a 15% error rate, maybe we should’ve just hired more lawyers. Or better yet - stopped pretending tech can fix bureaucratic dysfunction.