Enterprises aren’t just experimenting with AI-generated code anymore; they’re deploying it. But here’s the catch: vibe coding doesn’t work in production unless you lock it down. It’s not about writing faster. It’s about writing safely, legally, and reliably when the stakes are high. Companies like ServiceNow, Superblocks, and Betty Blocks are pushing tools that turn natural language into full applications. But without governance, those same tools can become liability factories.

What Vibe Coding Really Means in the Enterprise

Vibe coding isn’t GitHub Copilot on steroids. It’s not just autocomplete for developers. It’s an AI system that builds entire workflows, APIs, and UIs from plain English prompts while staying inside your company’s security, compliance, and architecture rules. Think of it as having a junior developer who knows every policy, every data schema, and every legacy system you’ve got, but only if you train it right.

Platforms like ServiceNow’s Build Agent use Retrieval Augmented Generation (RAG) to pull from internal documentation, codebases, and API specs before generating anything. That means the AI doesn’t guess; it references. It knows your CRM is Salesforce, your database is PostgreSQL, and your audit logs must be retained for seven years. That context is everything.

Companies using vibe coding report 30-50% faster development cycles for internal tools. One retail chain modernized its inventory system in six weeks instead of six months. But those wins only happen when governance is baked in from day one, not bolted on after a breach.

Why Governance Is the Make-or-Break Factor

Forrester found that enterprises with strict governance frameworks saw 3.5x higher success rates with vibe coding than those that didn’t. Why? Because AI doesn’t care about compliance. It doesn’t know HIPAA, GDPR, or SOX. It just generates code.

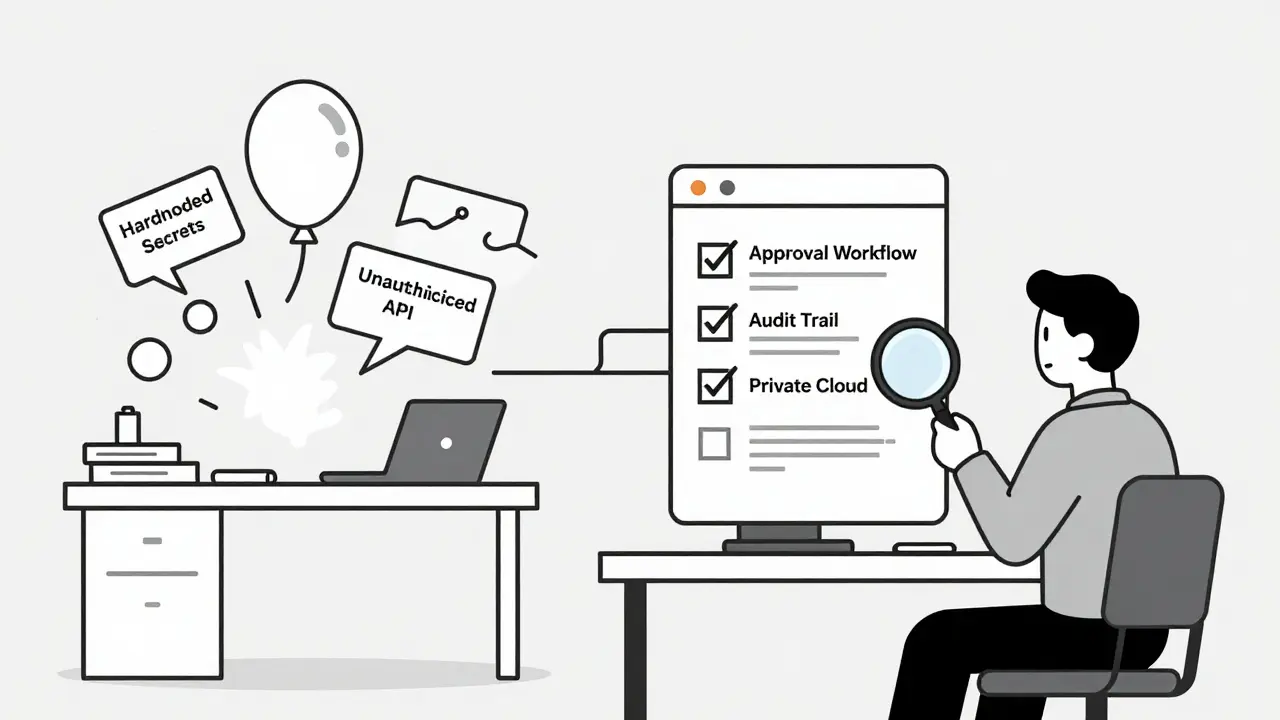

Without rules, you get:

- AI-generated SQL queries that expose customer data

- API endpoints without authentication

- Hardcoded secrets in config files

- Code that passes unit tests but violates internal audit policies

Successful organizations treat vibe coding like a new programming language, and they enforce standards for it just as they would for Java or Python. That means:

- Role-based access: Only approved devs can trigger production builds

- Approval workflows: Every AI-generated module needs a human sign-off

- Audit trails: Every prompt, every change, every deployment is logged

- Code lineage: You can trace any line of AI-generated code back to its source prompt and reviewer
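The four controls above can be pictured as a single record that travels with each generated module. The following is a minimal sketch under stated assumptions: `LineageRecord`, `can_deploy`, and all field names are hypothetical and do not come from any vendor platform. It only illustrates how a prompt, a code hash, a reviewer, and a deploy permission might be tied together.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
import hashlib
from typing import Optional


@dataclass
class LineageRecord:
    """Hypothetical audit entry linking generated code to its prompt and reviewer."""
    prompt: str
    generated_code: str
    author: str
    reviewer: Optional[str] = None
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def code_hash(self) -> str:
        # A content hash lets an auditor confirm the deployed code is the reviewed code.
        return hashlib.sha256(self.generated_code.encode("utf-8")).hexdigest()

    def approve(self, reviewer: str) -> None:
        # Human sign-off: the person who prompted cannot approve their own output.
        if reviewer == self.author:
            raise ValueError("author cannot approve their own generated code")
        self.reviewer = reviewer


def can_deploy(record: LineageRecord, deployer: str, approved_deployers: set) -> bool:
    # Role-based access plus approval workflow: both conditions must hold.
    return record.reviewer is not None and deployer in approved_deployers


record = LineageRecord(
    prompt="Build a time-off request form",
    generated_code="def submit_request(): ...",
    author="dev_a",
)
record.approve("dev_b")
print(can_deploy(record, "release_bot", {"release_bot"}))
```

Storing the hash rather than trusting a file path is a deliberate choice here: it makes the audit trail independent of where the code ends up living.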

ServiceNow’s platform includes built-in governance modules that auto-flag code violating data handling rules. Superblocks lets teams define guardrails like “no direct access to PII databases” or “all endpoints must use OAuth 2.0.” These guardrails aren’t optional extras; enterprise teams treat them as mandatory.
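To make the two quoted guardrails concrete, here is a rough sketch of how such rules could be checked against a description of a generated endpoint. It is not how Superblocks or ServiceNow implement guardrails; `EndpointSpec`, `check_guardrails`, and the datasource names are all assumptions made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical names of datasources that hold PII in this example.
PII_DATASOURCES = {"customers_pii", "patient_records"}


@dataclass(frozen=True)
class EndpointSpec:
    """Hypothetical summary of one AI-generated API endpoint."""
    path: str
    auth: str                # e.g. "oauth2", "api_key", "none"
    datasources: tuple       # names of datasources the endpoint reads directly


def check_guardrails(spec: EndpointSpec) -> list:
    """Return human-readable violations; an empty list means the spec passes."""
    violations = []
    # Guardrail: all endpoints must use OAuth 2.0.
    if spec.auth != "oauth2":
        violations.append(f"{spec.path}: endpoint must use OAuth 2.0 (found '{spec.auth}')")
    # Guardrail: no direct access to PII databases.
    for name in sorted(set(spec.datasources) & PII_DATASOURCES):
        violations.append(f"{spec.path}: direct access to PII datasource '{name}'")
    return violations


bad = EndpointSpec(path="/search", auth="none", datasources=("patient_records",))
for problem in check_guardrails(bad):
    print(problem)
```

A check like this would typically run before human review, so reviewers spend their time on logic rather than on policy violations a machine can catch.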

Security Risks You Can’t Ignore

AI-generated code is stealthy. It doesn’t look malicious. It looks like normal code. But that’s the danger.

Security experts at SANS Institute found that 42% of organizations using AI coding tools experienced incidents tied to subtle logic flaws: vulnerabilities that traditional scanners missed because the code was syntactically correct but functionally dangerous. One example: an AI generated a “user login” function that accidentally accepted any password if the username contained a space. It passed all tests. No one caught it until a penetration test three months later.
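The login flaw described above is easy to miss because happy-path tests never exercise it. The sketch below is a reconstruction for illustration only, not the actual code from that incident: `login_flawed`, `login_fixed`, the in-memory `USERS` store, and the fixed salt are all assumptions. It shows how a single negative test exposes the bug.

```python
import hashlib
import hmac

_SALT = b"demo-salt"  # fixed salt for illustration only; use a per-user random salt in practice


def _hash(password: str) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), _SALT, 100_000)


# Hypothetical in-memory user store.
USERS = {"alice": _hash("correct horse")}


def login_flawed(username: str, password: str) -> bool:
    # Bug: a "display name" branch trims the username and never checks the password.
    if " " in username:
        return username.split(" ")[0] in USERS
    stored = USERS.get(username)
    return stored is not None and hmac.compare_digest(stored, _hash(password))


def login_fixed(username: str, password: str) -> bool:
    # Exact-match lookup: "alice x" is simply not a known user.
    stored = USERS.get(username)
    if stored is None:
        return False
    return hmac.compare_digest(stored, _hash(password))


# Happy-path tests pass for both versions...
assert login_flawed("alice", "correct horse")
assert not login_flawed("alice", "wrong")
# ...but a negative test with a space in the username separates them.
assert login_flawed("alice x", "wrong")      # the flaw: any password is accepted
assert not login_fixed("alice x", "wrong")   # the fix rejects it
```

Both versions are syntactically clean, which is why a scanner alone would not tell them apart. The security-focused review has to ask which inputs should fail and then test that they do.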

Here’s how top enterprises mitigate this:

- Automated scanning: Semgrep, CodeQL, and SonarQube run on every AI-generated commit before merge

- Secrets detection: Tools like TruffleHog scan for hardcoded keys, tokens, and passwords

- Private cloud deployment: Running the LLM on-premises or in a private VPC, rather than sending prompts to a shared public endpoint, reduces the risk of data leakage

- Code reviews with security focus: Not just “does it work?” but “is it safe?”

Instinctools’ case studies show companies that integrated automated security checks into their CI/CD pipeline reduced vulnerabilities in AI-generated code by 68%. That’s not a bonus; it’s table stakes.
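As a rough illustration of what a secrets check in a pipeline does, the sketch below scans text for two patterns. It is a toy stand-in, not TruffleHog’s rule set or interface: `PATTERNS` and `find_secrets` are hypothetical names, and real scanners cover far more credential formats and also verify matches.

```python
import re

# Illustrative patterns only. The first matches the well-known AWS access key ID
# shape; the second matches a quoted value assigned to a credential-like name.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(password|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list:
    """Return (line_number, pattern_name) pairs for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


generated_config = (
    'db_host = "localhost"\n'
    'api_key = "sk_live_1234567890"\n'
    'key = "AKIAABCDEFGHIJKLMNOP"\n'
)
for lineno, kind in find_secrets(generated_config):
    print(f"line {lineno}: possible {kind}")
```

In a pipeline, a non-empty result would fail the build before merge, which is the point at which a hardcoded secret is cheapest to fix.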

Compliance Isn’t Optional: It’s Built In

Regulators aren’t waiting. The EU’s AI Act, which entered into force in August 2024 with obligations phasing in from February 2025, imposes documentation and human-oversight requirements on high-risk AI systems. The SEC is pushing public companies to disclose AI use in software development. HIPAA and PCI-DSS haven’t changed, but they apply just as strictly to AI code as to human-written code.

Healthcare providers who skipped validation ended up paying $2.1 million in fines after AI-generated code exposed patient records. Financial firms failed audits because they couldn’t prove who approved the AI’s changes.

Leading platforms are adapting:

- ServiceNow now auto-renews API keys every 30, 60, or 90 days

- Superblocks is integrating HashiCorp Vault for dynamic secrets management by Q2 2025

- Betty Blocks is building “code pedigree tracking” to satisfy SEC disclosure rules
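The rotation intervals in the first bullet come down to a simple date check. This is a minimal sketch, assuming a hypothetical `rotation_due` helper; it does not reflect ServiceNow’s actual mechanism, only the arithmetic behind a 30, 60, or 90 day policy.

```python
from datetime import date, timedelta

# The three intervals named in the text.
ALLOWED_ROTATION_DAYS = (30, 60, 90)


def rotation_due(issued_on: date, rotation_days: int, today: date) -> bool:
    """Return True once a key has reached its rotation interval."""
    if rotation_days not in ALLOWED_ROTATION_DAYS:
        raise ValueError(f"rotation_days must be one of {ALLOWED_ROTATION_DAYS}")
    return today >= issued_on + timedelta(days=rotation_days)


issued = date(2025, 1, 1)
print(rotation_due(issued, 30, date(2025, 1, 30)))  # one day before the interval ends
print(rotation_due(issued, 30, date(2025, 1, 31)))  # interval reached
```

Passing `today` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.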

If your vibe coding tool can’t generate audit logs, trace changes, or prove human oversight, it’s not enterprise-ready. Period.

Adoption Isn’t About Tools: It’s About Process

Many companies fail because they think vibe coding is a plug-and-play upgrade. It’s not. It’s a cultural shift.

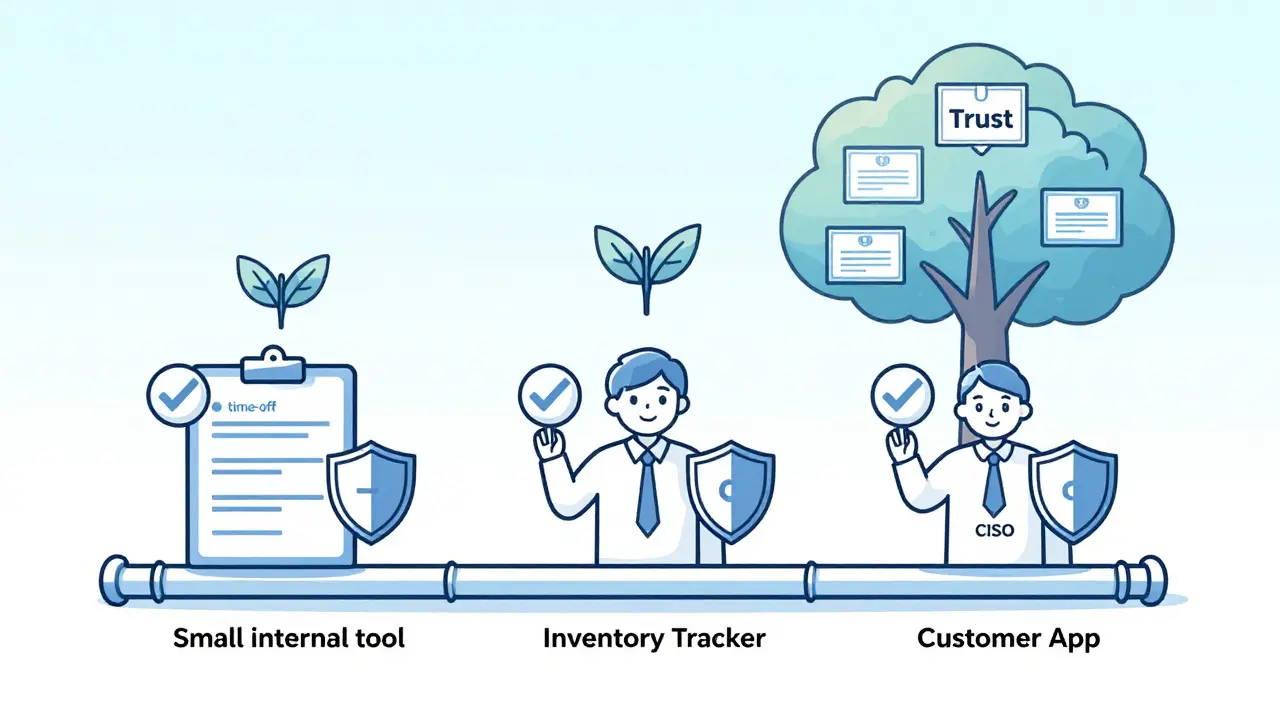

Successful implementations follow a clear path:

- Start small: Build internal tools first, such as expense reports, inventory trackers, and time-off requests

- Lock down governance: Define who can prompt, who can approve, and what’s off-limits

- Train the team: Developers need 10-15 hours to learn prompt engineering. Business analysts need 25-40 hours

- Scale gradually: Move to customer-facing apps only after 3-4 successful internal projects

- Measure outcomes: Track time saved, bugs reduced, compliance violations prevented

One Fortune 500 company spent three weeks just setting up approval workflows. They thought it was too long. But after deployment, they had zero compliance incidents in their first year. That’s the ROI.

What Happens When You Skip Governance

There are horror stories.

A healthcare provider used a public AI tool to generate a patient portal. The AI created a search function that returned full medical records for any query. No authentication. No filters. It went live for two weeks before anyone noticed. The fine: $2.1 million.

A bank deployed an AI-generated loan approval system without validation. The AI started approving loans based on zip codes, not credit scores. It approved 12% of applicants who were flagged for fraud. Losses: $8.7 million.

These aren’t edge cases. They’re predictable outcomes of skipping structure.

Who’s Winning and Who’s Falling Behind

Adoption is growing fast: 22% of Fortune 500 companies now use vibe coding, up from 5% in early 2024. Financial services lead at 32%, followed by tech at 28%. Healthcare and government lag at 15% and 9%, not because they’re slow, but because they’re cautious.

The market is split between two types of players:

- Low-code vendors expanding into AI: ServiceNow, Betty Blocks, OutSystems. These platforms already had governance, compliance, and enterprise integrations. They added AI.

- AI-native platforms: Superblocks, Clark, Windsurf. These were built for speed, but many lack built-in governance. Enterprises adopt them with heavy customization.

Pricing reflects the difference. GitHub Copilot costs $10/user/month. Enterprise vibe coding starts at $50/user/month (ServiceNow) and goes up to $75/user/month (Betty Blocks) with minimum 50-user licenses. You’re not paying for code generation. You’re paying for control.

The Future: Standardization Is Coming

The Enterprise Vibe Coding Consortium, launched in November 2024 with 47 founding members including Microsoft, Google Cloud, and Salesforce, is working on industry-wide governance standards. Think of it like PCI-DSS for AI-generated code.

By 2027, Gartner predicts 70% of enterprises will use vibe coding for at least half their internal apps. But only those with strong governance will survive. Platforms without audit trails, access controls, and compliance hooks will vanish.

The real win isn’t speed. It’s trust. When your CISO, your auditor, and your legal team all say, “We can sign off on this,” that’s when vibe coding becomes transformative rather than risky.

Is vibe coding the same as low-code or no-code?

No. Low-code and no-code platforms rely on drag-and-drop interfaces with limited customization. Vibe coding generates actual code (JavaScript, Python, SQL) that can be edited, extended, and integrated with existing systems. It offers 95% of the flexibility of hand-coded apps, compared to 70% for low-code platforms.

Can vibe coding replace developers?

No. It augments them. Developers still design architecture, handle complex logic, review security, and manage integrations. AI handles repetitive tasks such as building forms, APIs, and reports. Teams using vibe coding report 40-60% less time spent on boilerplate work, but the role of the developer becomes more strategic, not obsolete.

What’s the biggest mistake companies make with vibe coding?

Assuming the AI is safe by default. AI doesn’t understand compliance, context, or consequences. The biggest failure is deploying AI-generated code without automated scanning, human review, or audit trails. That’s how breaches happen.

How long does it take to set up governance for vibe coding?

Most enterprises take 2-4 weeks to define roles, approval workflows, and security rules. Some take longer, up to 8 weeks, if they’re in highly regulated industries like finance or healthcare. But skipping this step leads to far longer delays later, often from compliance failures or security incidents.

Is vibe coding secure if the AI runs in the cloud?

Only if your data never leaves your environment. Most enterprise platforms now offer private cloud or on-prem deployment options. Running the LLM on shared public cloud servers risks exposing proprietary data, internal schemas, or customer information. For high-security industries, local deployment isn’t optional; it’s required.

What should I look for in an enterprise vibe coding platform?

Five key features: 1) Built-in audit trails, 2) Role-based access controls, 3) Automated security scanning (Semgrep, CodeQL), 4) Integration with your existing CI/CD pipeline, and 5) Support for private cloud or on-prem deployment. If it doesn’t have these, it’s not enterprise-ready.

Next Steps for Your Team

Start with a pilot. Pick one low-risk internal tool, like a time-off request form or a vendor invoice tracker. Define your governance rules before you write a single prompt. Assign one developer to manage the AI prompts and another to review outputs. Run automated scans on every generated file. Measure how much time you save and how many bugs you catch.

Don’t rush to customer-facing apps. Build trust first with your developers, your security team, and your auditors. Vibe coding isn’t a magic button. It’s a new way of working. And like any new way of working, it only succeeds when the rules are clear, the people are trained, and the risks are managed.
