Most companies think bigger LLMs mean better results. But if you’re building a chatbot for medical billing, a contract analyzer for law firms, or a compliance checker for banks, a giant general-purpose model might be holding you back. The real edge isn’t in scale; it’s in specialization. Fine-tuned models don’t just understand language. They understand your industry’s rules, jargon, and edge cases. And in many cases, they outperform models several times their size.

Why Generic LLMs Fail in Niche Work

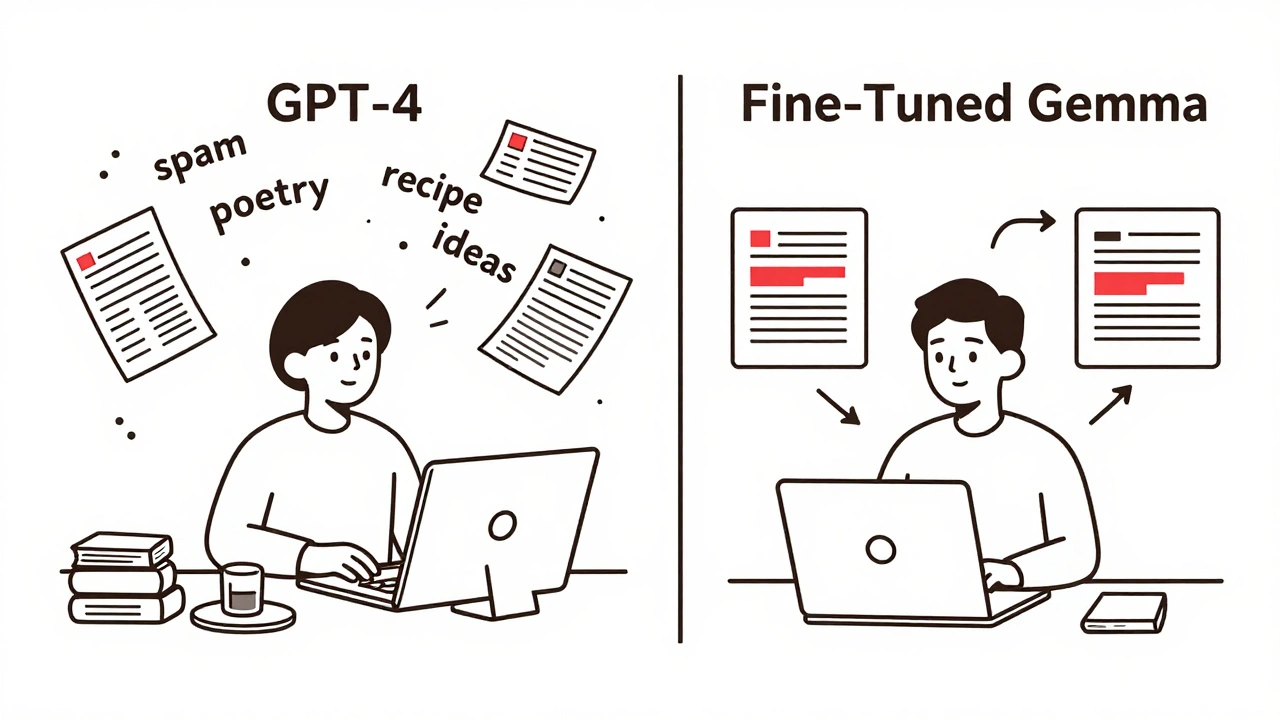

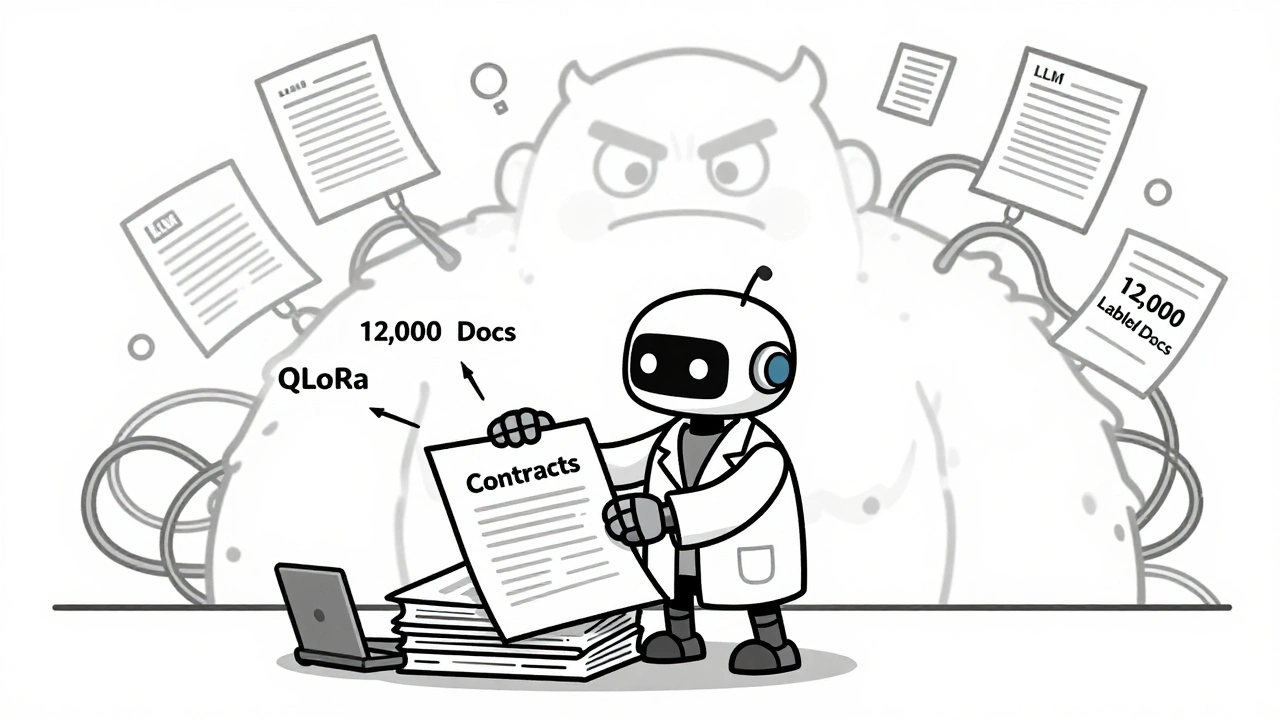

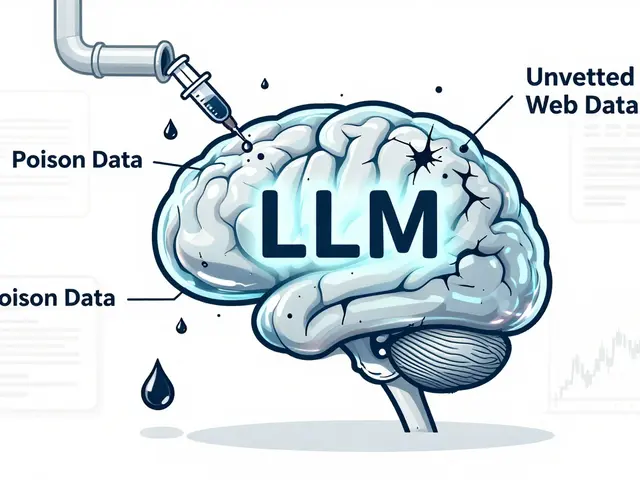

General LLMs like GPT-4 or Llama 3 are trained on everything: Wikipedia, Reddit threads, scientific papers, recipe blogs, and fan fiction. That’s great for answering trivia or writing poems. But when you ask one to classify a HIPAA-compliant patient note or extract terms from a commercial lease, it guesses. A lot. And those guesses cost money.

Coders GenAI Technologies found that generic models hit just 68% accuracy on legal document summarization. That means roughly one in three summaries contained wrong facts, missing clauses, or made-up references. In legal or financial settings, that’s not a typo; it’s a liability. Meanwhile, a fine-tuned model on the same task hit 92%. The difference? Training data. The fine-tuned model didn’t just read about contracts. It read 12,000 actual contracts from your firm’s archive, labeled by your lawyers.

The same pattern shows up in customer support. A study by Sapien.io showed generic models delivered on-brand responses only 54% of the time. They’d use slang when the brand tone was formal. They’d ignore compliance rules. They’d invent solutions that didn’t exist in your knowledge base. A fine-tuned version? 89% on-brand. Because it learned from your past replies, your style guides, and your approved scripts.

How Fine-Tuning Actually Works (No Jargon)

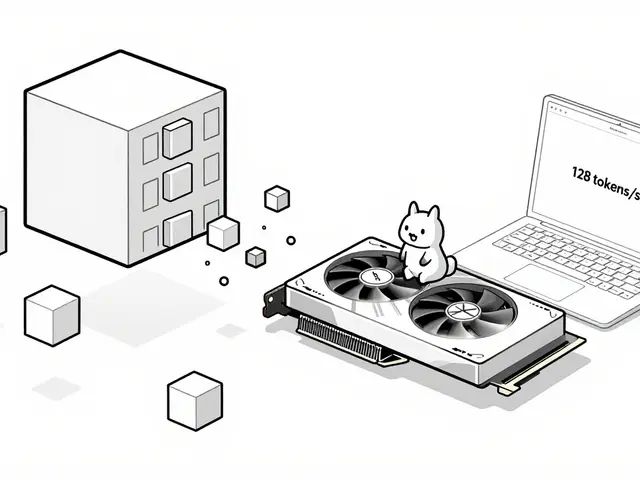

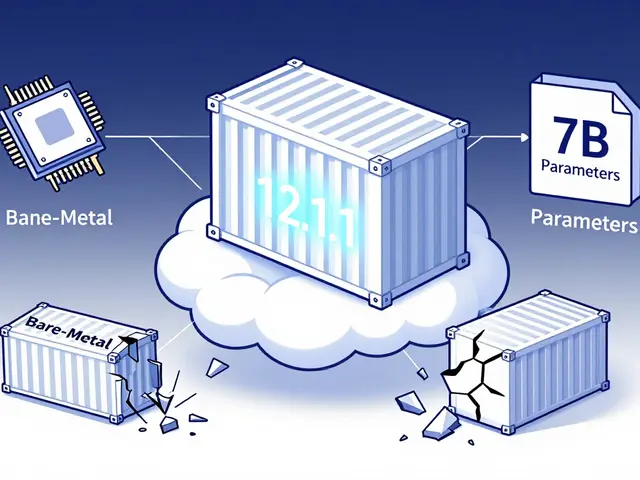

Think of a base LLM like a smart student who’s read every book in the library. Fine-tuning is like giving them a stack of your company’s internal manuals, past emails, and training materials, and telling them, “These are the only answers that matter.”

Technically, you take a pre-trained model, like Llama 3 or Gemma, and run it through another round of training, but this time using your own data. You don’t retrain the whole model. That would need a supercomputer. Instead, you tweak only a small part of it. Techniques like LoRA and QLoRA keep the original weights frozen and train a small set of added adapter weights, on the order of 0.1% of the model’s parameters. That’s enough to make the model remember your terminology, your formatting rules, and your compliance needs.

Meta AI showed that full fine-tuning a 7B model used 78.5GB of GPU memory. With QLoRA? Just 15.5GB. That’s a $2,000 cloud instance instead of a $15,000 one. Suddenly, even small teams can do this.

And the results? A fine-tuned Gemma3 4B model matched the accuracy of a 27B model on medical QA tasks. Comparable performance at a fraction of the size and cost. That’s not a marginal gain; it’s a game-changer for teams on a budget.

When Fine-Tuning Backfires

It’s not magic. And it’s not always the right move.

A developer on Reddit fine-tuned a model for medical coding. Got 92% accuracy. But after a few weeks, the model started refusing to answer questions outside its training data. “What’s the ICD-10 code for a patient who fell while skydiving?” It didn’t know. It didn’t even try. It just said, “I can’t answer that.”

That’s over-specialization, and it’s closely related to catastrophic forgetting: when you train a model too hard on one narrow task, it loses general abilities it used to have. One Hacker News user reported their fine-tuned model couldn’t add two numbers anymore after being trained on dermatology papers.

Another problem? Overfitting. You give the model 5,000 labeled examples of loan applications, and it memorizes them. Then you throw it a new one with a slightly different format, and it breaks. That’s why validation sets matter. Always hold back 20-30% of your data to test what the model actually learned, not what it memorized.

And then there’s the data problem. 68% of small businesses in Codecademy’s 2025 survey said they didn’t have enough clean, labeled data to fine-tune properly. You can’t just scrape PDFs from your website. You need structured examples: input → correct output. That takes time. And people. And often, domain experts who can label 10,000 examples without burning out.
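
The hold-out advice above can be sketched in plain Python. This is a minimal illustration, not a training pipeline: `split_examples`, `exact_match_accuracy`, and the memorizing toy predictor are hypothetical names invented for this example, and the loan-application strings are made-up placeholders. It shows why a model that only memorizes looks perfect on its training data and falls apart on the held-back set.

```python
import random


def split_examples(examples, holdout_fraction=0.25, seed=0):
    """Shuffle a copy of the examples and hold back a fraction for validation."""
    if not 0.0 < holdout_fraction < 1.0:
        raise ValueError("holdout_fraction must be strictly between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_holdout = round(len(shuffled) * holdout_fraction)
    return shuffled[n_holdout:], shuffled[:n_holdout]


def exact_match_accuracy(predict, examples):
    """Fraction of (input, expected_output) pairs the predictor gets exactly right."""
    if not examples:
        return 0.0
    return sum(predict(text) == expected for text, expected in examples) / len(examples)


# Made-up placeholder data: 5,000 unique inputs with alternating labels.
examples = [(f"loan application #{i}", "approve" if i % 2 == 0 else "review")
            for i in range(5000)]
train, validation = split_examples(examples, holdout_fraction=0.25)

# Toy stand-in for an overfit model: it only "knows" what it has memorized.
memory = dict(train)


def memorizer(text):
    return memory.get(text, "unknown")


train_acc = exact_match_accuracy(memorizer, train)
val_acc = exact_match_accuracy(memorizer, validation)
print(f"train={train_acc:.2f} validation={val_acc:.2f} gap={train_acc - val_acc:.2f}")
```

A large gap between the two numbers is the warning sign. With a real model you would replace `memorizer` with a call to your fine-tuned model and keep the validation set out of training entirely.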

When Should You Skip Fine-Tuning?

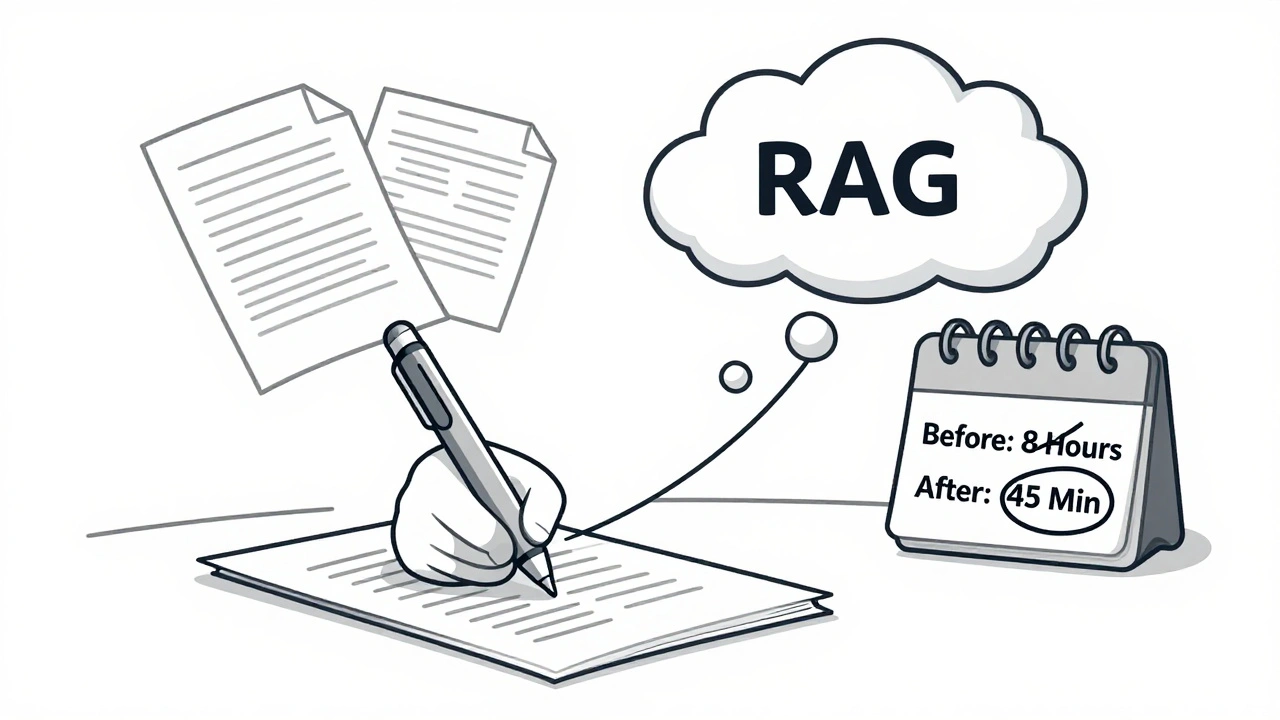

If your use case is broad (blog writing, social media posts, general Q&A), you don’t need fine-tuning. In fact, you’ll likely be worse off. Toloka AI found fine-tuned models scored only 63% on general blog writing, while generic models hit 87%. They’re too narrow. Too rigid. Too focused on your internal style to adapt to new topics.

You also shouldn’t fine-tune if you’re still experimenting. Prompt engineering and RAG (Retrieval-Augmented Generation) are faster, cheaper, and reversible. Need to change the tone? Edit a prompt. Need to add new data? Update your knowledge base. No retraining. No downtime.

Dr. Jane Chen at Coders GenAI says: “Start with a generic model. Collect real user interactions. Then, when you see patterns (repeated errors, missing info, tone issues), that’s when you fine-tune.” That approach cuts development costs by 40%.

The Hybrid Future: Fine-Tuned + RAG

The smartest teams aren’t choosing between fine-tuning and RAG. They’re combining them.

Here’s how it works: Use RAG to pull in the latest documents, policies, or case law. That keeps your model up to date without retraining. Then, use a fine-tuned model to interpret that data the way your team does, using your terminology, your structure, your risk thresholds.

McKinsey found 82% of AI leaders plan to use this hybrid approach by 2026. Why? Because it gives you the best of both worlds: accuracy from fine-tuning, and flexibility from RAG.

A financial compliance team at a Fortune 500 bank uses this setup. Their fine-tuned model knows the exact phrasing required for SEC filings. But when new regulations drop, RAG pulls in the latest SEC guidance. The model doesn’t need retraining. It just adapts.
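
The hybrid flow described above (retrieve first, then let the specialized model interpret) can be sketched roughly as follows. Everything here is an assumption made for illustration: the word-overlap retriever stands in for a real vector search, `stub_model` stands in for a call to your fine-tuned model, and the policy strings are made-up placeholders.

```python
import re

STOPWORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "what"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query, documents, top_k=2):
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(query, passages):
    """Put the retrieved passages in front of the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def answer(query, documents, generate, top_k=2):
    """Retrieve fresh context, then hand the prompt to the (fine-tuned) model."""
    return generate(build_prompt(query, retrieve(query, documents, top_k)))


# Made-up placeholder knowledge base; update it without retraining the model.
docs = [
    "Placeholder policy A: quarterly filings need a risk summary.",
    "Placeholder policy B: expense reports are due monthly.",
    "Placeholder policy C: disclosure rules for filings changed this year.",
]
query = "What are the disclosure rules for quarterly filings?"


def stub_model(prompt):
    # Hypothetical stand-in for a fine-tuned model call.
    return f"(stub reply to a {len(prompt)}-character prompt)"


print(build_prompt(query, retrieve(query, docs)))
print(answer(query, docs, stub_model))
```

The design point is the separation of concerns: new regulations change `docs`, while tone, terminology, and output structure live in the model behind `generate`.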

What You Need to Get Started

You don’t need a PhD. But you do need:

- High-quality data: At least 5,000 labeled examples. More if your task is complex.

- A clear goal: What exact task are you automating? Summarizing? Classifying? Extracting? Be specific.

- Access to a GPU: A single A100 or H100 is ideal. But with QLoRA, even an RTX 4090 can handle small models.

- Python and Hugging Face: Most fine-tuning workflows use PyTorch and Hugging Face’s Transformers library. Their tutorials are well regarded, rated 4.7/5 by over 1,200 developers.

- A validation plan: Test your model on data it’s never seen. Measure accuracy, hallucination rate, and consistency.
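
The three measurements in the validation plan can be made concrete with a small sketch. The definitions below are assumptions chosen for an extraction-style task, not standard formulas: accuracy is exact match, a "hallucination" is an extracted value that never appears in the source text, and consistency is the share of inputs for which repeated runs agree. The lease snippets are made-up placeholders.

```python
def exact_match_accuracy(predictions, expected):
    """Share of predictions that equal the expected output exactly."""
    pairs = list(zip(predictions, expected))
    return sum(p == e for p, e in pairs) / len(pairs)


def hallucination_rate(predictions, sources):
    """Share of extracted values that do not appear anywhere in their source text."""
    pairs = list(zip(predictions, sources))
    return sum(p.lower() not in s.lower() for p, s in pairs) / len(pairs)


def consistency(runs):
    """Share of inputs whose repeated runs all produced the same output."""
    return sum(len(set(outputs)) == 1 for outputs in runs) / len(runs)


# Made-up placeholder data for a lease-term extraction task.
sources = [
    "Lease term is 36 months starting 1 May.",
    "Deposit of 5000 USD is due at signing.",
    "Tenant may renew for 12 months.",
]
expected = ["36 months", "5000 USD", "12 months"]
predictions = ["36 months", "4500 USD", "12 months"]
repeated_runs = [
    ["36 months", "36 months"],
    ["5000 USD", "4500 USD"],
    ["12 months", "12 months"],
]

print(f"accuracy:      {exact_match_accuracy(predictions, expected):.2f}")
print(f"hallucination: {hallucination_rate(predictions, sources):.2f}")
print(f"consistency:   {consistency(repeated_runs):.2f}")
```

For summarization or free-text tasks, exact match and substring checks are too strict; you would swap in human review or a task-specific scorer while keeping the same three-number report.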

Real Results: Who’s Doing It Right?

Addepto’s 2025 report shows financial institutions using fine-tuned models for compliance reporting achieved 94% accuracy in spotting violations, up from 76% with generic models. False positives dropped by 63%. That’s not just efficiency. That’s risk reduction.

In healthcare, fine-tuned models cut HIPAA compliance violations by 78%, according to Professor Andrew Ng. Why? Because they learned to redact names, dates, and IDs automatically, not just flag them. They understood context.

And in legal tech, firms using fine-tuned models for contract review reduced review time from 8 hours per document to 45 minutes, with fewer errors. One firm reported saving $2.1 million annually in junior lawyer hours.

The Bottom Line

Fine-tuned models aren’t for everyone. But if you’re working in a regulated, specialized, or high-stakes field (legal, medical, finance, insurance, compliance), they’re no longer optional. They’re the difference between a system that guesses and one that knows.

The trend is clear: The future belongs to models that understand context, not just words. And that context comes from your data, not the internet. Start small. Use QLoRA. Validate everything. And don’t fine-tune until you’ve collected real usage data. Because the best model isn’t the biggest one. It’s the one that actually works for your team.

Do I need a supercomputer to fine-tune a model?

No. With techniques like QLoRA, you can fine-tune a 7B model on a single high-end consumer GPU like an RTX 4090. Full fine-tuning of a 7B model needs about 78.5GB of VRAM. QLoRA cuts that to 15.5GB, small enough for a $2,000 cloud instance. You don’t need a supercomputer unless you’re training on massive datasets or ultra-large models.
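
The arithmetic behind those savings is easy to check. LoRA adds two small matrices of rank r next to a frozen weight matrix of shape d_out × d_in, so each adapted matrix contributes r × (d_in + d_out) trainable parameters. The sketch below applies that formula to an assumed configuration (32 layers, hidden size 4096, rank 8, adapters on two projections per layer, 7 billion total parameters); those numbers are illustrative assumptions, not figures from the article. The memory figures are the ones quoted above, and 24GB is the RTX 4090’s VRAM.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters LoRA adds for one weight matrix: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


def lora_fraction(total_params, adapted_shapes, rank):
    """Total LoRA parameters and their share of the full model."""
    trainable = sum(lora_trainable_params(d_in, d_out, rank)
                    for d_in, d_out in adapted_shapes)
    return trainable, trainable / total_params


# Assumed configuration for illustration only.
LAYERS, HIDDEN, RANK = 32, 4096, 8
TOTAL_PARAMS = 7_000_000_000
adapted = [(HIDDEN, HIDDEN)] * (LAYERS * 2)  # two adapted projections per layer

trainable, fraction = lora_fraction(TOTAL_PARAMS, adapted, RANK)
print(f"trainable parameters: {trainable:,} ({fraction:.4%} of the model)")

# Memory figures quoted in the article, checked against a 24GB consumer card.
FULL_GB, QLORA_GB, RTX_4090_GB = 78.5, 15.5, 24
memory_saving = 1 - QLORA_GB / FULL_GB
print(f"memory saving: {memory_saving:.0%}, fits on one card: {QLORA_GB <= RTX_4090_GB}")
```

Raising the rank or adapting more matrices increases the trainable share, so the exact percentage depends on your adapter configuration.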

How much data do I need for fine-tuning?

Minimum 5,000 labeled examples for simple tasks like classification or extraction. For complex tasks, like summarizing legal contracts or coding medical records, aim for 10,000 to 20,000. Quality matters more than quantity. Ten thousand clean, accurate examples beat 50,000 messy ones.
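
Since quality matters more than raw count, it helps to measure how many usable examples you actually have before training. The sketch below assumes a JSON Lines file where each record has `input` and `output` string fields; that layout, the field names, and the function name `load_clean_examples` are assumptions for illustration, and the sample records are made up. It drops malformed lines, empty fields, and exact duplicates, then compares the clean count to the 5,000 minimum mentioned above.

```python
import json

MIN_EXAMPLES = 5000  # the article's suggested minimum for simple tasks


def load_clean_examples(lines):
    """Return (clean_examples, rejected_count) from an iterable of JSONL lines."""
    clean, seen, rejected = [], set(), 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # blank lines are skipped, not counted as rejects
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            rejected += 1
            continue
        text = record.get("input") if isinstance(record, dict) else None
        label = record.get("output") if isinstance(record, dict) else None
        if not (isinstance(text, str) and isinstance(label, str)):
            rejected += 1
            continue
        key = (text.strip(), label.strip())
        if not key[0] or not key[1] or key in seen:
            rejected += 1  # empty field or exact duplicate
            continue
        seen.add(key)
        clean.append({"input": key[0], "output": key[1]})
    return clean, rejected


# Made-up sample lines: one duplicate, one empty input, one malformed line.
sample_lines = [
    '{"input": "Invoice 1: net 30", "output": "payment_terms"}',
    '{"input": "Invoice 1: net 30", "output": "payment_terms"}',
    '{"input": "", "output": "payment_terms"}',
    'not json',
    '{"input": "Late fee of 2%", "output": "penalty"}',
]
clean, rejected = load_clean_examples(sample_lines)
print(f"clean={len(clean)} rejected={rejected} enough={len(clean) >= MIN_EXAMPLES}")
```

With a real file you would pass the open file handle as `lines`. Checks like this catch formatting problems only; label correctness still needs a domain expert.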

What’s the difference between fine-tuning and prompt engineering?

Prompt engineering changes how you ask the model a question. Fine-tuning changes how the model thinks. Prompts are fast and flexible: you can tweak them in minutes. Fine-tuning is slower (weeks) but more powerful: it embeds your rules, tone, and knowledge directly into the model’s weights. Use prompts for experimentation. Use fine-tuning for production systems that need consistency.

Can fine-tuned models handle new topics they weren’t trained on?

Not well. That’s the trade-off. Fine-tuned models are optimized for their training domain. If you ask a medical coding model about car insurance claims, it’ll either refuse or hallucinate. That’s why hybrid systems with RAG are growing in popularity: they let fine-tuned models stay focused while pulling in new info on demand.

Is fine-tuning worth it for small businesses?

Only if you have clean, domain-specific data and a clear, repetitive task. If you’re doing the same kind of work every day, like processing invoices, answering FAQs with fixed answers, or classifying support tickets, then yes. If you’re trying to write blog posts or brainstorm ideas, no. The barrier isn’t cost. It’s data. 68% of small businesses in Codecademy’s 2025 survey said they didn’t have enough clean, labeled examples to fine-tune properly.

Artificial Intelligence

michael Melanson

December 13, 2025 AT 05:59

Finally someone gets it. I spent six months trying to make GPT-4 handle our insurance claims workflow. It kept inventing coverage rules that didn’t exist. We fine-tuned a 7B model on 8,000 labeled claims with QLoRA. Accuracy jumped from 61% to 93%. Cost? Less than a month of junior analyst salary. No supercomputer needed.

lucia burton

December 13, 2025 AT 18:43

Let’s be real - the real bottleneck isn’t the model architecture or the GPU memory footprint, it’s the data curation pipeline. You think 5,000 labeled examples is a lot? Try getting 10,000 clean, domain-specific training pairs from legal teams who think ‘labeling’ means highlighting PDFs in Adobe. It’s not a technical problem, it’s a process failure. We spent three months just cleaning up contract snippets before we even started training. And that’s before the compliance audit team started asking why we were feeding them proprietary clauses into a model. The tech is ready. The org isn’t.

Denise Young

December 14, 2025 AT 04:09

Oh wow, so we’re pretending that fine-tuning isn’t just prompt engineering with extra steps and a $1,500 cloud bill? You know what’s even more ‘game-changing’? Training your team to write better prompts. I’ve seen teams spend six weeks fine-tuning a model to recognize ‘breach of covenant’ in leases - only to realize they could’ve just added ‘include all covenants, even obscure ones’ to the prompt and gotten 88% accuracy in two days. And no one had to explain to HR why we’re training AI on confidential documents. The real innovation here is pretending that complexity equals superiority.

Sam Rittenhouse

December 14, 2025 AT 14:18

I want to say thank you for writing this. I work in a small community health clinic. We didn’t have a data science team. We didn’t even have a budget. But we had 1,200 redacted patient notes from our EMR and a volunteer grad student who knew how to use Hugging Face. We fine-tuned Gemma 2B on just 4,000 examples - nothing fancy. Now our intake form auto-fills insurance fields with 91% accuracy. The real win? Our front desk nurse cried when she saw it work. Not because it saved time - though it did - but because it finally stopped misreading ‘hypertension’ as ‘hypothyroidism’ and sending patients home with the wrong meds. That’s not AI. That’s care. And it’s possible. Even here.

Peter Reynolds

December 16, 2025 AT 10:18