Imagine translating a marketing slogan from English to Spanish and having it land perfectly in Mexico City - but accidentally sound like a joke in Madrid. That’s the kind of mistake traditional machine translation used to make all the time. Today, large language models (LLMs) are fixing that. They don’t just swap words. They understand tone, culture, and intent. And that’s making localization not just faster, but actually accurate.

Why Context Matters More Than Words

Old-school translation tools worked like dictionaries on autopilot. They looked at each word, found its match, and stitched sentences together. No thought for whether the phrase was formal or casual. No care if "car" meant "auto" in Latin America or "coche" in Spain. That’s why you’d get translations that were technically correct but culturally weird - or worse, offensive.

LLMs changed that. Trained on billions of sentences from real human conversations, books, websites, and customer support chats, they learned patterns humans use. Not just grammar. Context. They know when to use honorifics in Japanese, when to drop pronouns in Mandarin, or when a British idiom needs to become an American one. A 2024 Smartling case study showed LLMs got this right 92% of the time for regional Spanish variations. Traditional systems? Only 41%.

It’s not magic. It’s data. And it’s architecture. LLMs use transformer models that track relationships between words across entire paragraphs, not just sentences. That means they can tell if "light" refers to weight, brightness, or alcohol content - and translate accordingly.

How LLM Translation Compares to Old Systems

Let’s break it down:

- Rule-based systems (1950s-1980s): Hard-coded grammar rules. Worked only for simple, structured text. Failed on anything with slang or ambiguity.

- Statistical machine translation (1990s-2000s): Learned from large text corpora. Better, but still word-by-word. Couldn’t handle idioms.

- Neural machine translation (NMT, 2016-present): Used deep learning to improve fluency. Still struggled with cultural nuance and long-range context.

- LLM-based translation (2022-present): Understands intent, tone, and cultural norms. Can adapt style to match brand voice.

Where LLMs Shine (and Where They Struggle)

LLMs are amazing for content that needs personality:

- Marketing campaigns - they adapt humor, tone, and references to local culture

- Customer support responses - they match the brand’s voice, whether it’s friendly or formal

- Entertainment localization - think subtitles for Netflix shows or game dialogue

- Legal and medical summaries - when fine-tuned with domain-specific data

But they struggle with:

- Highly technical engineering specs - specialized NMT beats them by 22% in accuracy

- Low-resource languages - Swahili, Bengali, or Ukrainian get far worse results than English or Spanish

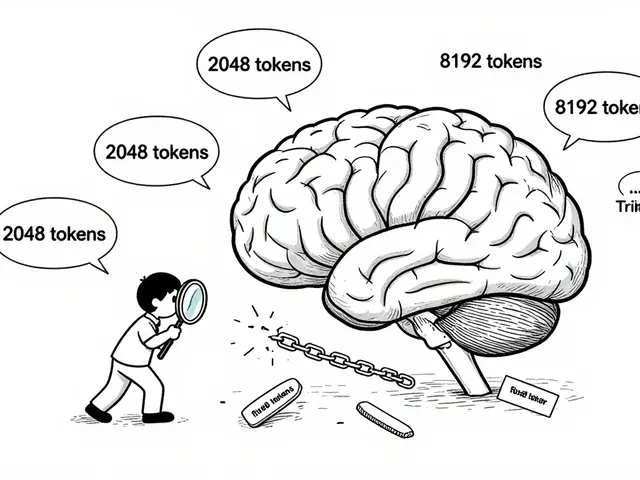

- Consistent terminology across long documents - 38% of users complain about inconsistent terms in large files
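The terminology complaint in that last item is something a team can check mechanically. Here is a minimal sketch, assuming a hypothetical glossary that maps each source term to one approved rendering, and segments already aligned as (source, target) pairs. The function name `find_term_inconsistencies` and the sample data are illustrative only and not taken from any tool mentioned in this article.

```python
from typing import Dict, List, Tuple


def find_term_inconsistencies(
    segments: List[Tuple[str, str]],
    glossary: Dict[str, str],
) -> List[Tuple[int, str, str]]:
    """Return (segment_index, source_term, approved_target) for every segment
    whose source contains a glossary term but whose target lacks the approved
    rendering. Matching is a plain case-insensitive substring test."""
    problems = []
    for index, (source, target) in enumerate(segments):
        for term, approved in glossary.items():
            term_in_source = term.lower() in source.lower()
            approved_in_target = approved.lower() in target.lower()
            if term_in_source and not approved_in_target:
                problems.append((index, term, approved))
    return problems


# Hypothetical glossary entry and aligned segments (English -> Spanish).
glossary = {"dashboard": "panel de control"}
segments = [
    ("Open the dashboard.", "Abra el panel de control."),
    ("The dashboard shows usage.", "El tablero muestra el uso."),
]

issues = find_term_inconsistencies(segments, glossary)
print(issues)  # the second segment uses "tablero" instead of the approved term
```

Substring matching is deliberately naive: it will miss inflected forms and plurals, so treat the output as a list of segments worth a second look, not as a verdict.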

What You Need to Make LLM Translation Work

You can’t just plug in GPT-4 and expect perfect translations. Here’s what actually works:

- Prompt engineering: 89% of successful teams say this is essential. You need to tell the model exactly what you want: "Translate this as if it’s for a 30-year-old tech user in Brazil. Use casual tone, avoid jargon."

- Translation memory + RAG: Retrieval Augmented Generation pulls in past approved translations to keep terminology consistent. AWS says this boosts BLEU scores by up to 20 points.

- Terminology databases: Upload your glossary. If your product is called "FlowSync," make sure it never gets translated as "Flujo Sincronizado" when you want "Flujo Sincronizado Pro."

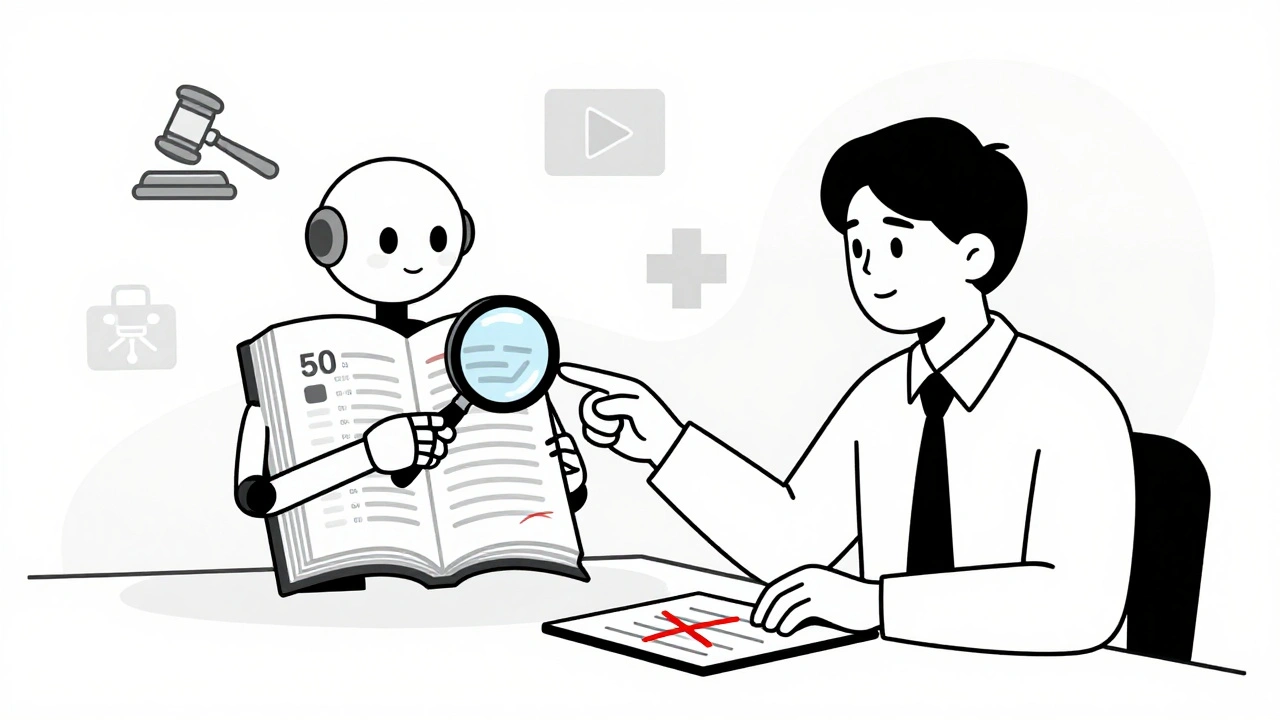

- Human oversight: MIT researchers and the EU’s 2024 AI Act both say: never fully automate critical translations. Always have a human review.
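The first three items can be combined into a single prompt before any model is called. The sketch below is one possible way to do that, not a description of how Smartling, Lokalise, or AWS implement it. It assumes a small in-memory translation memory and uses fuzzy string matching from Python's standard `difflib` as a stand-in for a real retrieval step (production RAG setups typically use embeddings). The names `retrieve_similar` and `build_prompt`, the glossary entry, and the sample sentences are all hypothetical. The model call and the human review step sit outside this sketch.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple

# Each entry is (source text, previously approved translation).
TranslationMemory = List[Tuple[str, str]]


def retrieve_similar(source: str, memory: TranslationMemory,
                     top_k: int = 2, min_ratio: float = 0.5) -> TranslationMemory:
    """Return up to top_k memory entries whose source text resembles `source`."""
    scored = []
    for past_source, past_target in memory:
        ratio = SequenceMatcher(None, source.lower(), past_source.lower()).ratio()
        if ratio >= min_ratio:
            scored.append((ratio, past_source, past_target))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(past_source, past_target) for _, past_source, past_target in scored[:top_k]]


def build_prompt(source: str, audience: str, tone: str,
                 glossary: Dict[str, str], memory: TranslationMemory) -> str:
    """Assemble audience, tone, glossary terms, and retrieved examples into one prompt."""
    lines = [
        f"Translate the text below for this audience: {audience}.",
        f"Tone: {tone}.",
    ]
    # Only include glossary terms that actually occur in the source text.
    used_terms = {term: target for term, target in glossary.items()
                  if term.lower() in source.lower()}
    if used_terms:
        lines.append("Use these approved terms exactly:")
        lines += [f"- {term} -> {target}" for term, target in used_terms.items()]
    examples = retrieve_similar(source, memory)
    if examples:
        lines.append("Previously approved translations of similar text:")
        lines += [f"- {past_source} => {past_target}" for past_source, past_target in examples]
    lines.append(f"Text: {source}")
    return "\n".join(lines)


# Hypothetical data for an English -> Brazilian Portuguese project.
glossary = {"workspace": "área de trabalho"}
memory = [("Sync your files to your workspace.",
           "Sincronize seus arquivos com sua área de trabalho.")]

prompt = build_prompt(
    source="Sync your photos to your workspace.",
    audience="a 30-year-old tech user in Brazil",
    tone="casual, no jargon",
    glossary=glossary,
    memory=memory,
)
print(prompt)
```

Keeping the prompt assembly in one function means the glossary and translation memory can grow without anyone rewriting prompts by hand, and the same template is applied to every segment.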

Real-World Results from Users

Professional translator Maria Chen on Reddit says Claude 3.5 cut her post-editing time by 60% for marketing content - but she still catches 3-5 errors per 1,000 words in technical docs. That’s the sweet spot: LLMs handle 80% of the work. You fix the rest.

G2 reviews show Smartling’s LLM-powered tool has a 4.6/5 rating. Users love the 73% drop in context errors. But on Capterra, one user said: "We almost got sued because the LLM reversed a dosage instruction." That’s why hybrid workflows are the future.

On HackerNews, an enterprise team reported 89% post-editing efficiency after building custom glossaries - up from 63% with NMT. That’s the kind of ROI that makes executives pay attention.

The Market Is Exploding - But So Are Risks

The global language services market is worth $56.18 billion. LLM translation is the fastest-growing slice: expected to jump from $1.2 billion in 2023 to $8.7 billion by 2027. That’s a 63% annual growth rate.

Tech companies lead adoption (78% use it), followed by e-commerce (63%). Healthcare? Only 29%. Why? Fear of errors. The EU’s AI Act now requires human review for any translation that could impact safety or legal rights. That affects 63% of enterprise deployments.

Big players are fighting for control: Microsoft, AWS, and Google offer LLM translation in their cloud platforms. Lokalise and Smartling target mid-sized businesses with easier tools. Lokalise’s 2024 test ranked Claude Sonnet 3.5 as the top performer across German, Polish, and Russian - beating Google Translate and DeepL.

What’s Next?

The next big leap? Document-level context. Right now, most LLMs handle about 4,000 tokens - roughly 3 pages. That’s fine for a single page. But what about a 50-page user manual? The model forgets what it said on page 1. KU Leuven researchers are building systems that track context across entire documents. Prototype tools are coming in Q2 2025. They’ll use "translation technique" guidance - like when to paraphrase, when to localize, when to add footnotes - to make output smarter.

Gartner predicts by 2027, 80% of enterprise translation workflows will use LLMs. But they’ll mostly be hybrids: LLMs for fluency and cultural fit, NMT for speed and technical precision.

The future isn’t about replacing translators. It’s about empowering them. LLMs are the best assistant a localization team has ever had. They don’t think. They don’t feel. But they remember everything - and they never get tired.

Are LLMs better than Google Translate for localization?

Yes, for context-heavy content. Google Translate still works well for quick, simple translations - like understanding a street sign or a basic email. But for marketing, legal, or user experience content, LLMs like Claude 3.5 or GPT-4 outperform it significantly. They adapt tone, regional dialects, and cultural references. A 2024 Lokalise study found Claude 3.5 delivered "good" translations 78% of the time in challenging passages, compared to Google Translate’s 62%.

Can LLMs translate low-resource languages like Swahili or Bengali well?

Not reliably. LLMs depend on training data, and languages like Swahili or Bengali have far less digital text available. Microsoft’s data shows translation quality drops by 55-68% for these languages compared to English or Spanish. For now, these languages still need human translators or hybrid systems that combine LLMs with rule-based fallbacks.

Do I need to hire a new team to use LLM translation?

No - but your team needs training. You don’t need new hires. You need upskilling. Localization teams typically need 3-5 weeks to learn prompt engineering, how to use translation memory with RAG, and how to build terminology databases. The real cost isn’t staff - it’s time spent learning the tools. Companies that skip training end up with fluent but wrong translations.

Is LLM translation safe for medical or legal documents?

Only with strict controls. LLMs can hallucinate - they might invent a term or reverse a dosage instruction. A 2024 case study showed a 2% error rate in medical instructions without proper safeguards. Use them only after fine-tuning with domain-specific data, adding terminology databases, and always having a human reviewer. The EU’s AI Act now requires this for any translation affecting safety or legal rights.
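One safeguard that is cheap to automate is a numeric check, since a reversed dosage changes the order of the numbers in a sentence. The sketch below is an illustrative pre-review filter, not a method taken from the case study or the AI Act. It assumes numbers are written as digits, and the function names are hypothetical. A pass does not mean the translation is safe, so the human reviewer stays in the loop either way. The check only decides which segments get escalated first.

```python
import re
from typing import List

# Matches integers and simple decimals written with a point or a comma.
NUMBER_PATTERN = re.compile(r"\d+(?:[.,]\d+)?")


def extract_numbers(text: str) -> List[str]:
    """Return the numbers in order of appearance, with decimal commas
    normalized to points so that "0,5" and "0.5" compare equal."""
    return [match.replace(",", ".") for match in NUMBER_PATTERN.findall(text)]


def numbers_match(source: str, target: str) -> bool:
    """True when source and target contain the same numbers in the same order.
    Order is compared on purpose: a swapped pair of numbers is exactly what a
    reversed dosage instruction looks like."""
    return extract_numbers(source) == extract_numbers(target)


source = "Take 2 tablets every 8 hours."
good_translation = "Tome 2 comprimidos cada 8 horas."
reversed_translation = "Tome 8 comprimidos cada 2 horas."

print(numbers_match(source, good_translation))      # True
print(numbers_match(source, reversed_translation))  # False, escalate to a reviewer
```

Comparing order will sometimes flag a correct translation whose grammar legitimately moves a number, and it cannot see numbers spelled out as words. For a check whose only job is to route risky segments to a human sooner, those false alarms are an acceptable trade.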

What’s the biggest mistake companies make with LLM translation?

Assuming it’s plug-and-play. Many companies throw a document into ChatGPT and call it done. That leads to inconsistent terminology, cultural blunders, and brand voice disasters. The best results come from structured workflows: prompt templates, glossaries, translation memory, and human review. Treat it like a tool, not a magic button.

Artificial Intelligence

Addison Smart

December 13, 2025 AT 14:19

Man, I've been using LLMs for localization on my marketing team for a year now and it's been a game changer. We used to spend weeks just tweaking translations for Latin America vs Spain - now we feed the model a prompt like 'translate this like it's for a 28-year-old in Mexico City who binge-watches Netflix and uses TikTok slang' and it nails it 9 out of 10 times. The real win? We cut post-editing time by 60% without losing brand voice. I used to think AI couldn't get cultural nuance, but when you pair it with a solid glossary and RAG, it's like having a native speaker who never sleeps. The only thing that trips it up is when we throw in old internal jargon - turns out LLMs don't know what 'synergy blob' means, even if we wrote it in 2017.

Also, don't skip the human review. One time we missed a regional slang shift in Brazilian Portuguese and our slogan accidentally sounded like a dating app bio. Not proud.

Bottom line: LLMs aren't replacing translators. They're making them superheroes.

David Smith

December 14, 2025 AT 12:16

Wow. So we're just gonna let some algorithm decide how to translate 'I love you' in German based on what it scraped from Reddit? Next thing you know, it'll be translating funeral eulogies using TikTok trends. I mean, come on. This isn't innovation - it's lazy. People used to study languages. Now we just throw words into a black box and pray it doesn't call your grandma 'hot' in Spanish. I've seen translations where 'serious medical warning' became 'this might make you feel kinda weird lol'. And you're celebrating this? I'm not mad, I'm just disappointed.

Lissa Veldhuis

December 15, 2025 AT 20:49

Y'all are acting like this is some revolutionary breakthrough when it's basically just ChatGPT with a fancy label. I tried it on a legal doc last month and it turned 'non-transferable liability clause' into 'you can't sell this thing and blame someone else if it blows up' - which is technically accurate but legally suicidal. And don't even get me started on how it keeps using 'du' in German B2B emails like it's texting your cousin. I've seen more cultural sensitivity in a 1998 McDonald's ad. The truth? LLMs are just really good at sounding smart while being dangerously wrong. And now companies are replacing bilingual humans with this because 'it's cheaper'. Great. Let's see how many lawsuits happen when the AI translates 'do not swallow' as 'enjoy as a snack'.

Also - why is everyone ignoring the fact that it can't handle Swahili? Are we just pretending African languages don't exist until they're profitable?

And yes I'm still salty about the time it turned 'I'm sorry' into 'I'm so sorry you exist' in Japanese. Never again.

Michael Jones

December 17, 2025 AT 19:13

What if the real revolution isn't the tech but what it reveals about us? We built these models to understand language but what we're really learning is how deeply culture is woven into every word. That moment when the AI catches the difference between 'coche' and 'auto' isn't just accuracy - it's empathy encoded in vectors. We used to think translation was about words. Now we know it's about silence between words. The hesitation before a joke. The weight behind a formal address. The unspoken rules that live in the gaps. LLMs don't know why they get it right - but they do. And maybe that's the most human thing about them. They mirror back the complexity we forgot we carried. We're not automating translation. We're finally seeing how much we never understood.

And that's beautiful. Even if it takes 45 seconds to do it.

allison berroteran

December 17, 2025 AT 23:44

I love how this conversation is going - it's so easy to get caught up in the hype or the fear, but the real story is in the middle. I work with a team that translates patient instructions for a global health nonprofit, and we use LLMs as our first pass, then human editors do the final pass. It's not perfect, but it's allowed us to reach communities we never could before - rural areas in Guatemala, refugee camps in Jordan. We still have to train the models on local dialects and medical terms, and yes, we've had scary mistakes - like one time it translated 'take with food' as 'take during a meal' and someone thought they had to wait until dinner. But we caught it. And now we have a glossary for 12 dialects.

What I've learned is that LLMs don't replace care - they multiply it. They let us do more, faster, without burning out our translators. And that's the quiet win. It's not about AI being smarter. It's about humans being less tired. Less overwhelmed. More able to focus on the parts that matter - the trust, the dignity, the quiet moments when a translated sentence helps someone feel seen. That’s the real ROI.

Also, if you're using this for legal docs without a human, please just stop. I'm begging you.