Large Language Models (LLMs) are powerful, but they remember too much. When you feed them public text, they don’t just learn patterns. They can also store names, addresses, medical records, and even private emails. And if you ask the right question, they might spit it back out. This isn’t a bug. It’s how they’re built. The same training that makes them useful also makes them dangerous for privacy.

Why LLMs Break Traditional Privacy Rules

Traditional data protection methods like anonymization or masking don’t work on LLMs. Why? Because these models don’t just store data; they memorize it. A 2021 study by Carlini et al. showed that GPT-2 could reproduce verbatim snippets from its training data, including full credit card numbers and social security numbers, with a 0.23% success rate. That might sound low, but when you’re training on billions of documents, even a fraction of a percent means thousands of exposed records.

Regulations like GDPR and CCPA assume you can delete data or anonymize it. But you can’t delete a memory from a neural network. Once personal data is embedded in the weights of an LLM, there’s no simple button to erase it. This is why the European Data Protection Board called traditional data impact assessments “insufficient” for LLMs in April 2025. You need new tools, new processes, and new thinking.

The Seven Core Principles of LLM Data Privacy

LLM privacy isn’t a new field. It’s an old one stretched thin. The same seven principles from GDPR and CCPA still apply, but they’re harder to follow:

- Data minimization: Only use the data you absolutely need. Don’t scrape every public forum just because you can.

- Purpose limitation: Train models for specific tasks. Don’t build a general-purpose LLM if you only need one for customer support.

- Data integrity: Ensure training data is accurate and not contaminated with misleading or harmful personal info.

- Storage limitation: Don’t keep raw training data longer than necessary. Delete it after preprocessing.

- Data security: Encrypt data at rest and in transit. Use secure enclaves during training and inference.

- Transparency: Tell users when LLMs are processing their inputs. Don’t hide it behind “AI assistance.”

- Consent: If you’re using personal data, get permission. Even if it’s public, ethical use matters.

Technical Controls That Actually Work

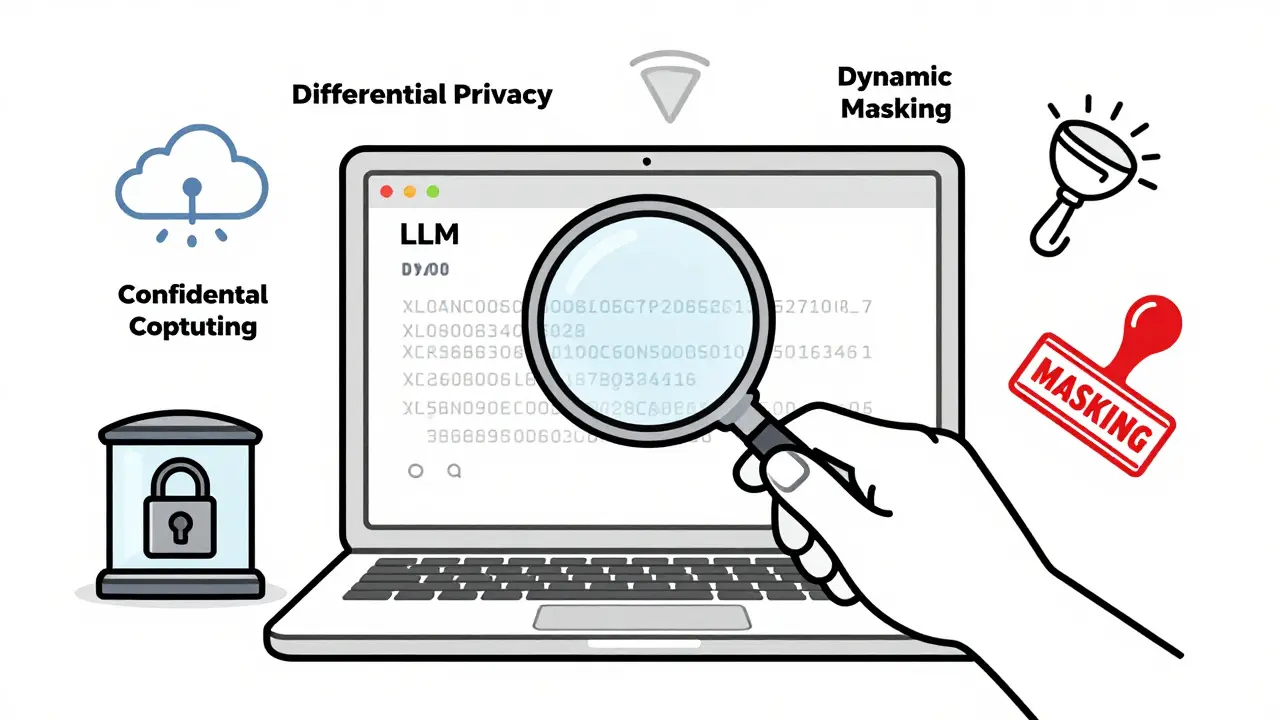

There’s no single fix, but a layered approach works. Here are the most effective tools used today:

Differential Privacy

This adds mathematically calibrated noise during training (typically to clipped gradients) or to model outputs, so that including or removing any single record changes the result by only a bounded amount. Google used it in BERT and found it reduced privacy leakage by 82% while keeping 95% of model accuracy, according to Google’s 2024 research. But there’s a cost: accuracy drops 5-15% depending on how much noise you add. For high-stakes applications like healthcare or finance, that trade-off might be worth it.

Federated Learning

Instead of collecting data in one place, federated learning trains models across devices, such as smartphones or servers, without ever moving raw data. Financial institutions use this to detect fraud without centralizing customer records. The downside? It needs 30-40% more computing power, according to Lee et al. (2021). But if you’re handling sensitive data, that cost is cheaper than a CCPA penalty of up to $7,500 per intentional violation.

Confidential Computing

This uses hardware like Intel SGX or AMD SEV to create isolated “enclaves” in which data stays encrypted in memory and is decrypted only inside the protected hardware boundary. Lasso Security tested this in 2024 and found it kept data safe during inference, even from system administrators. The catch? Latency increases by 15-20%. For real-time chatbots, that’s noticeable. For batch processing, it’s fine.

PII Detection with LLM-Powered Classification

Old tools like regex or simple keyword scanners miss context. They’ll flag “John Smith” as a name, but miss “Dr. J. Smith, treating patient #7821 for chronic diabetes.” IBM’s Adaptive PII Mitigation Framework, tested in 2024, achieved a 0.95 F1 score in detecting passport numbers and medical records, far above Amazon Comprehend’s 0.54 or Presidio’s 0.33. Context-aware models cut false positives by 65% compared to rule-based systems.

Dynamic Data Masking

Before any input reaches the LLM, sensitive fields are automatically redacted. If a user types, “My SSN is 123-45-6789,” the system strips it out before the model sees it. Microsoft’s PrivacyLens toolkit, released in September 2024, does this with 99.2% accuracy. It’s not perfect, but it’s the closest we’ve come to a real-time shield.
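As a rough illustration of the masking step described above, here is a minimal sketch in Python. It is not how PrivacyLens or any other named product works; the `PATTERNS` table and the `mask_input` function are hypothetical names invented for this example. It also uses plain regular expressions, which, as noted earlier, miss contextual PII, so treat it as a last-line filter rather than a complete detector.

```python
import re

# Hypothetical patterns for illustration only; a real deployment needs far
# broader coverage and a context-aware classifier in front of this step.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def mask_input(text: str) -> str:
    """Replace each matched sensitive field with a typed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    # The masked string, not the raw one, is what would be sent to the model.
    print(mask_input("My SSN is 123-45-6789, reach me at jo@example.com."))
```

Using typed placeholders such as `[SSN]` rather than deleting the text outright keeps the sentence readable for the model while removing the value itself.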

What Doesn’t Work (And Why)

Many companies think they’re protected because they “anonymized” their data. They’re wrong. Anonymization assumes you can remove identifiers and the data becomes safe. But LLMs reconstruct identities from context. A 2023 study showed that even with names and emails removed, models could infer a person’s identity from their job title, location, and medical condition. One researcher extracted a full name from a dataset that had only a ZIP code, birth year, and diagnosis.

Machine unlearning, which means removing specific data from a trained model, is promising but still experimental. It requires retraining the model from scratch, using 20-30% more resources. And even then, it’s not guaranteed. If the data was spread across thousands of training examples, deleting one might not matter.

Real-World Failures and Wins

A European telecom company had an LLM handle customer service. In July 2024, they discovered it was regurgitating full transcripts of past calls, verbatim, in 0.23% of responses. That’s roughly one in every 435 interactions. They shut it down.

Meanwhile, JPMorgan Chase implemented contextual access controls. Their LLM could only access internal data if the user had clearance. They reduced PII exposure by 85% without slowing down responses. The key? They didn’t just add filters. They redesigned the entire workflow.

Gartner’s 2024 survey of 127 enterprises found that 68% experienced unexpected data leaks during initial LLM rollout. Healthcare companies were hit hardest: 79% had incidents. Why? They used patient records to train models without proper consent. That’s not just risky. It’s illegal under HIPAA.
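The contextual access controls from the JPMorgan Chase example can be pictured as a filter that runs before any internal document is placed in the model’s context. The sketch below is an assumption about the general pattern, not a description of JPMorgan Chase’s actual system; `Document`, `required_clearance`, and `build_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    required_clearance: int  # hypothetical scale: higher means more sensitive


def build_context(user_clearance: int, candidates: list[Document]) -> str:
    """Return only the text the requesting user is cleared to see.

    Documents above the user's clearance never reach the prompt, so the
    model cannot repeat them no matter how the question is phrased.
    """
    allowed = [d for d in candidates if d.required_clearance <= user_clearance]
    return "\n".join(d.text for d in allowed)


if __name__ == "__main__":
    docs = [
        Document("faq-1", "Branch opening hours", required_clearance=0),
        Document("acct-9", "Customer account notes", required_clearance=2),
    ]
    print(build_context(user_clearance=0, candidates=docs))
```

The design choice worth noting is that the check happens at retrieval time rather than on the output, which is one way to read “redesigned the entire workflow” as opposed to bolting on a filter.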

Implementation Checklist

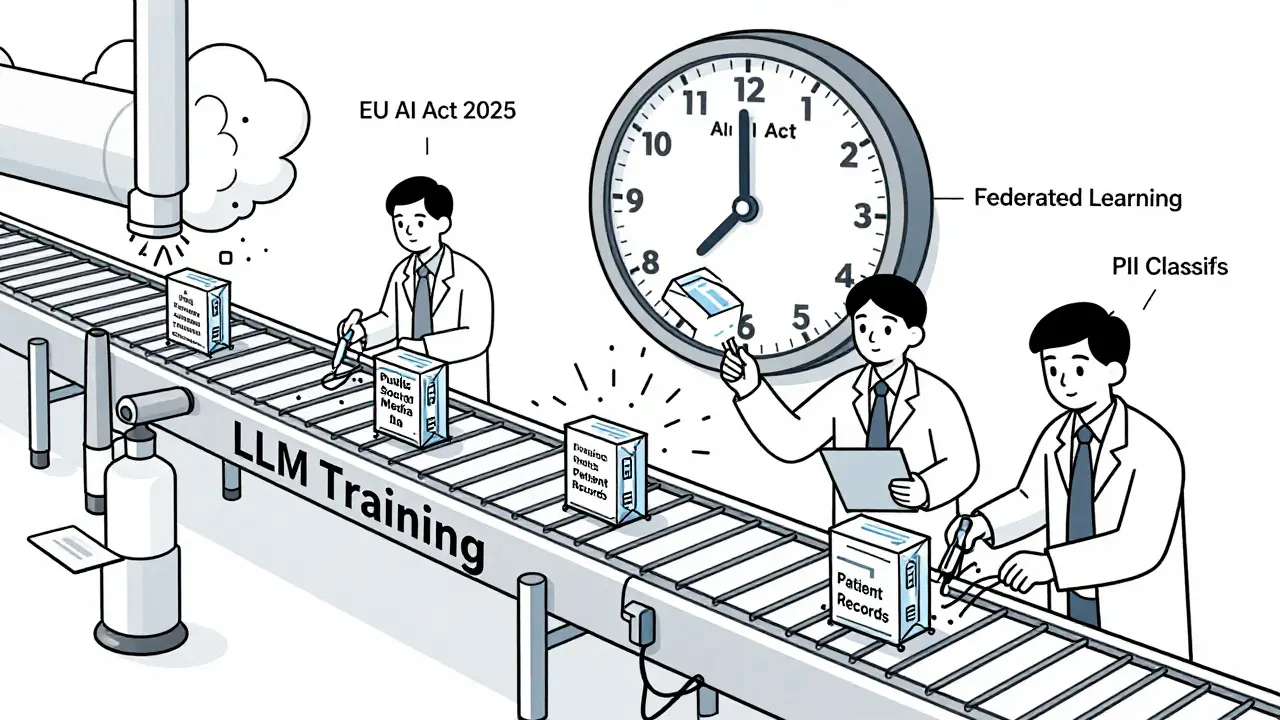

If you’re building or deploying an LLM, here’s what you need to do:

- Data ingestion: Filter out PII before training. Use LLM-based classifiers, not regex.

- Training: Apply differential privacy or federated learning. Budget for 30-50% longer training time.

- Inference: Use dynamic masking and confidential computing for sensitive queries.

- Output: Scan every response before it reaches the user. Microsoft’s PrivacyLens is a good starting point.

- Monitoring: Run membership inference attacks monthly. The EDPB requires less than 0.5% success rate for high-risk systems.

- Documentation: Keep logs of data sources, privacy controls used, and who approved each step.
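For the monitoring step in the checklist above, one simple form of membership inference is a loss-threshold test: examples the model saw in training tend to have lower loss than unseen ones, so an attacker guesses “member” when the loss falls below a cutoff. The sketch below assumes you already have per-example losses for known members and known non-members. The function name, the sample numbers, and the choice of TPR minus FPR as the reported figure are illustrative assumptions, not the EDPB’s definition of success rate.

```python
def attack_rates(member_losses, nonmember_losses, threshold):
    """Loss-threshold membership inference: guess 'member' when loss < threshold.

    Returns (true_positive_rate, false_positive_rate) for that guess.
    """
    tpr = sum(loss < threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss < threshold for loss in nonmember_losses) / len(nonmember_losses)
    return tpr, fpr


if __name__ == "__main__":
    # Made-up losses purely to exercise the function.
    members = [0.10, 0.40, 0.90, 1.20]
    nonmembers = [0.30, 0.80, 1.10, 1.50]
    tpr, fpr = attack_rates(members, nonmembers, threshold=0.5)
    # An advantage near zero means the attacker does no better than guessing.
    print(f"TPR={tpr:.2f} FPR={fpr:.2f} advantage={tpr - fpr:.2f}")
```

Tracking this figure over time, with the same held-out non-member set, gives a trend line you can attach to the documentation step.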

The Regulatory Time Bomb

The EU AI Act, whose first obligations took effect in February 2025, classifies LLMs as “high-risk” if used in hiring, banking, or healthcare. Non-compliance with high-risk obligations can mean fines of up to 3% of global annual turnover, rising to 7% for prohibited practices. The FTC in the U.S. has already warned companies: “Reasonable measures” are no longer optional.

The market is reacting. AI data privacy tools hit $2.3 billion in 2024, growing 38% year-over-year. Startups like Private AI raised $45 million in January 2025. Microsoft, Google, and IBM are all adding privacy features to their AI platforms.

But here’s the hard truth: the right to be forgotten doesn’t exist for LLMs. Privacy International put it bluntly: “You can’t erase a memory from a neural network.” That’s a legal contradiction. Regulations demand deletion. Technology makes it impossible.

What Comes Next?

We’re entering a new phase. By 2027, Forrester predicts 80% of enterprise LLMs will use privacy-preserving computation. That’s up from 35% today. Tools will get smarter. Hardware will get faster. But the core tension remains: how do you balance innovation with fundamental rights? The answer isn’t just technical. It’s legal, ethical, and cultural. Companies that treat privacy as a checkbox will get fined. Those that build it in from day one will earn trust. And in the age of AI, trust is the only competitive advantage that can’t be copied.

Can I delete personal data from a trained LLM?

No, not reliably. Once data is embedded in an LLM’s weights, standard deletion methods don’t work. Machine unlearning techniques exist but require retraining the entire model and often fail to remove all traces. The European Data Protection Board and Privacy International agree that true erasure is currently impossible for data embedded in training corpora.

Is differential privacy worth the accuracy loss?

It depends on your use case. For healthcare, finance, or legal applications where privacy is critical, a 5-15% drop in accuracy is often acceptable to avoid legal penalties and reputational damage. For internal tools with low-risk data, you might skip it. Google’s research shows you can maintain 95% performance with smart noise injection, so test before deciding.
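To make the accuracy trade-off concrete, here is a minimal sketch of the gradient step used in DP-SGD-style training, written in plain Python. Each example’s gradient is clipped to a maximum norm, the clipped gradients are summed, Gaussian noise scaled to that norm is added, and the result is averaged. The function names and the `noise_multiplier` parameter are illustrative choices for this sketch, and it leaves out the privacy accounting that a real library would do for you.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.6, 0.8]]
    # A larger noise_multiplier means stronger privacy and a noisier update.
    print(dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Raising `noise_multiplier` or lowering `max_norm` strengthens the guarantee and degrades the update, which is where the accuracy loss discussed above comes from. Sweeping those two values on a validation set is one way to “test before deciding.”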

What’s the best tool for detecting PII in LLM inputs?

LLM-powered context-aware classifiers outperform traditional tools. IBM’s Adaptive PII Mitigation Framework achieved a 0.95 F1 score for detecting sensitive data like passports and medical records, while rule-based tools like Presidio scored only 0.33. For real-time use, Microsoft’s PrivacyLens offers 99.2% accuracy in redacting PII before it reaches the model.
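The F1 scores quoted above combine precision (how many flagged items were really PII) and recall (how many real PII items were flagged). If you want to compare detectors on your own data, a minimal scoring sketch looks like this. The `(start, end)` span representation and the sample values are assumptions made for illustration, and exact-match scoring is only one of several possible conventions.

```python
def f1_score(predicted: set, actual: set) -> float:
    """F1 over exact-match items, e.g. (start, end) character spans of PII."""
    true_positives = len(predicted & actual)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(actual)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical gold annotations and detector output for one document.
    actual = {(0, 10), (25, 36), (40, 52), (60, 71)}
    predicted = {(0, 10), (25, 36), (80, 90)}
    print(round(f1_score(predicted, actual), 3))
```

Running the same labelled sample through each candidate tool and comparing the scores is a more reliable guide than published benchmarks, since PII formats vary a lot between domains.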

Do I need to get consent to train an LLM on public data?

Legally, public data doesn’t always require consent, but ethically, it should. GDPR doesn’t make consent the only lawful basis for processing publicly available data, but it still requires a lawful basis, fairness, and transparency. If you’re scraping personal information from forums, social media, or blogs, you’re still processing personal data. Many regulators now consider this a violation of data minimization and purpose limitation principles. Best practice: assume consent is needed unless you have legal counsel’s approval.

How much should I budget for LLM privacy controls?

Allocate 15-20% of your total LLM development budget to privacy. Larger enterprises typically hire 3-5 dedicated privacy engineers per team. Skipping this leads to costly breaches: Gartner found 68% of companies experienced unexpected data leaks during initial deployment. The cost of a single GDPR fine can exceed $20 million.

Can I use LLMs in healthcare without violating HIPAA?

Yes, but only with strict controls. You must use federated learning or confidential computing to avoid centralizing PHI. All inputs must be masked before processing. Outputs must be scanned for re-identification. And you need a Business Associate Agreement (BAA) with your LLM provider. Many healthcare organizations failed this in 2024 and faced audits. Don’t assume “anonymized” data is safe: LLMs can reconstruct identities from context.
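To show what “avoid centralizing PHI” means in practice for federated learning, here is a minimal sketch of the server-side averaging step (often called federated averaging). Each site trains locally and sends back only its model weights and an example count. The function name and the two-hospital example are hypothetical, and a real deployment would add secure aggregation and, ideally, differential privacy on top.

```python
def federated_average(client_updates):
    """Combine client models, weighting each by its number of local examples.

    client_updates: list of (weights, num_examples) pairs. Only these values
    leave each site; the raw records used for local training stay where they are.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]


if __name__ == "__main__":
    # Two hypothetical hospitals with different amounts of local data.
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))
```

Note that model weights can still leak information about the data they were trained on, which is why this step is usually paired with the other controls listed in the answer above.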

Artificial Intelligence

Taylor Hayes

January 30, 2026 AT 06:44

Really solid breakdown. I’ve seen teams skip privacy controls because they think ‘it’s all public data’ until someone’s SSN pops up in a customer chatbot reply. Been there. Not fun.

Bottom line: if you’re training on scraped forums or public docs, you’re playing with fire. Even if it’s legal, it’s not ethical. And ethics matter more than lawyers think.

Sanjay Mittal

January 31, 2026 AT 00:48

From India, we’re seeing this big in fintech. Federated learning is gaining traction because banks don’t want to move customer data out of country. But the compute cost? Oof. We’re using it only for fraud detection models, not general chat. Still, way better than risking a ₹50 crore fine under India’s new DPDP law.

Mike Zhong

February 1, 2026 AT 16:24

Let’s be real: this whole ‘right to be forgotten’ thing is a fantasy. You’re asking a neural net to forget like a human? That’s not how brains work, and it’s not how tensors work. The EU AI Act is built on a lie. You can’t delete memories from weights. You can only retrain or discard.

So why are we pretending otherwise? It’s theater. Regulators want to look like they’re doing something. Companies want to look like they’re compliant. Everyone’s lying to themselves. The only real solution? Don’t train on personal data in the first place. Simple. Hard. Necessary.

Jamie Roman

February 2, 2026 AT 21:16

I’ve worked on three LLM projects now, and every single time, the privacy team got pushed to the end of the roadmap. ‘We’ll fix it later.’ Then the audit hits. Then the lawyers show up. Then the CEO panics.

Here’s what I learned: if you don’t bake privacy in from day one, you’re not building a product. You’re building a lawsuit waiting to happen. The 15-20% budget? That’s not a cost. That’s insurance. And the most expensive insurance in the world is the kind you didn’t buy.

Also, differential privacy isn’t perfect, but it’s the closest thing we’ve got to a safety net. Even if your model loses a few percentage points in accuracy, you’re not losing your company. Trust me, I’ve seen both outcomes.

Salomi Cummingham

February 3, 2026 AT 03:30

Oh my god, I just read this whole thing and I’m shaking. Not because I’m scared, but because I’m furious. We’re letting corporations treat human data like raw ore. We scrape your medical records from forums, your emails from old blogs, your job history from LinkedIn, and then we say, ‘It’s public, so it’s fair game.’

That’s not innovation. That’s exploitation. And the fact that we’re even debating whether consent is needed for public data? That’s the real scandal. Consent isn’t a loophole. It’s a human right. And if your AI model can’t respect that, it shouldn’t exist.

I’m not mad at the tech. I’m mad at the people who built it without asking, ‘Should we?’

Also, Microsoft’s PrivacyLens? Finally, someone’s doing it right. Thank you for that.

Johnathan Rhyne

February 3, 2026 AT 20:57

Okay, first off: ‘differential privacy adds noise’? No. It adds *mathematical noise*. You don’t just sprinkle pixie dust and call it a day. And if your model drops 15% accuracy, you didn’t ‘implement’ DP. You just gave up.

Also, ‘LLMs memorize’? Cute. They don’t ‘memorize.’ They learn statistical correlations. You’re anthropomorphizing a matrix. Stop it.

And who wrote this? Someone who’s never trained a model? ‘Delete raw training data after preprocessing’? Sure, if you have infinite storage and a team of 20 engineers. Most startups just keep it on a shared drive labeled ‘final_train_v12.zip.’

Also, ‘consent for public data’? LOL. If I post my address on Twitter, and you scrape it, I didn’t consent to you training an LLM on it. But I also didn’t consent to you reading it. So what’s the difference? Nothing. This whole ‘ethical use’ thing is just virtue signaling with a spreadsheet.