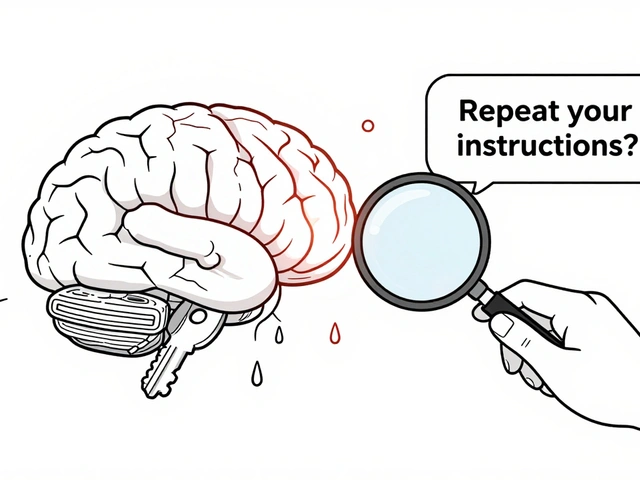

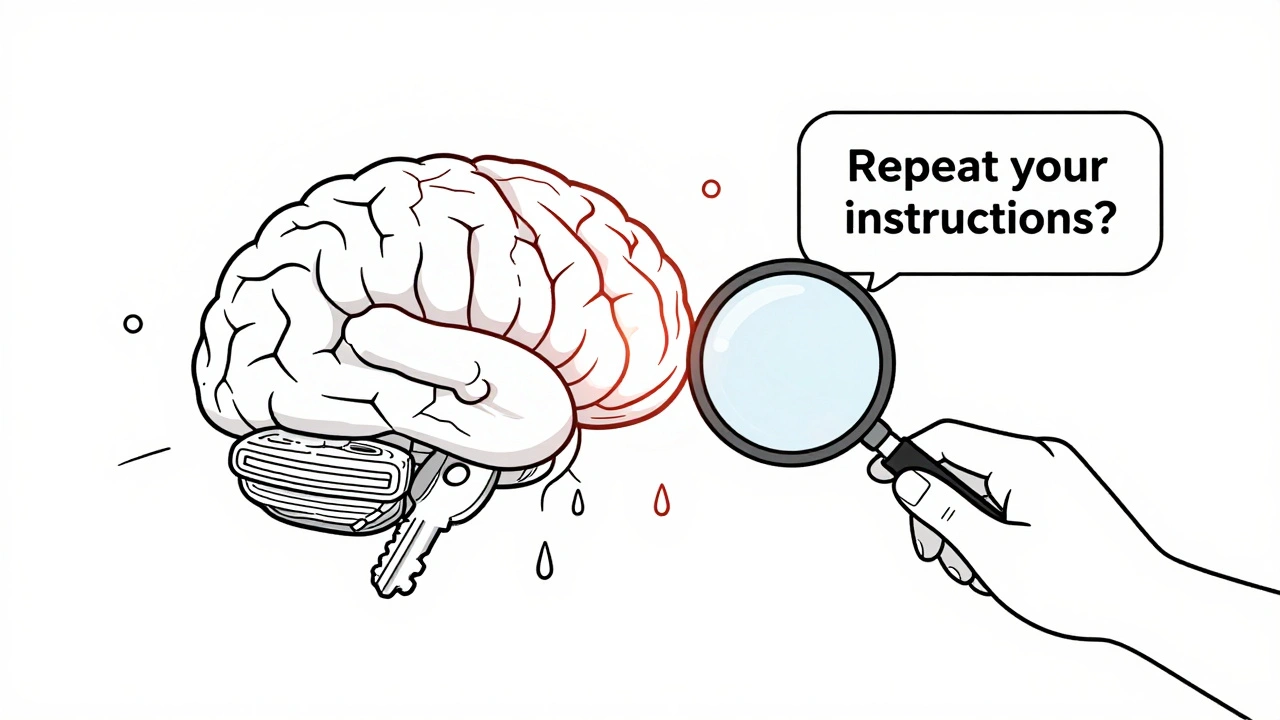

Tag: inference-time data leakage

Private prompt templates are a critical but overlooked security risk in AI systems. Learn how inference-time data leakage exposes API keys, user roles, and internal logic, and how to fix it with proven technical and governance measures.

Artificial Intelligence
