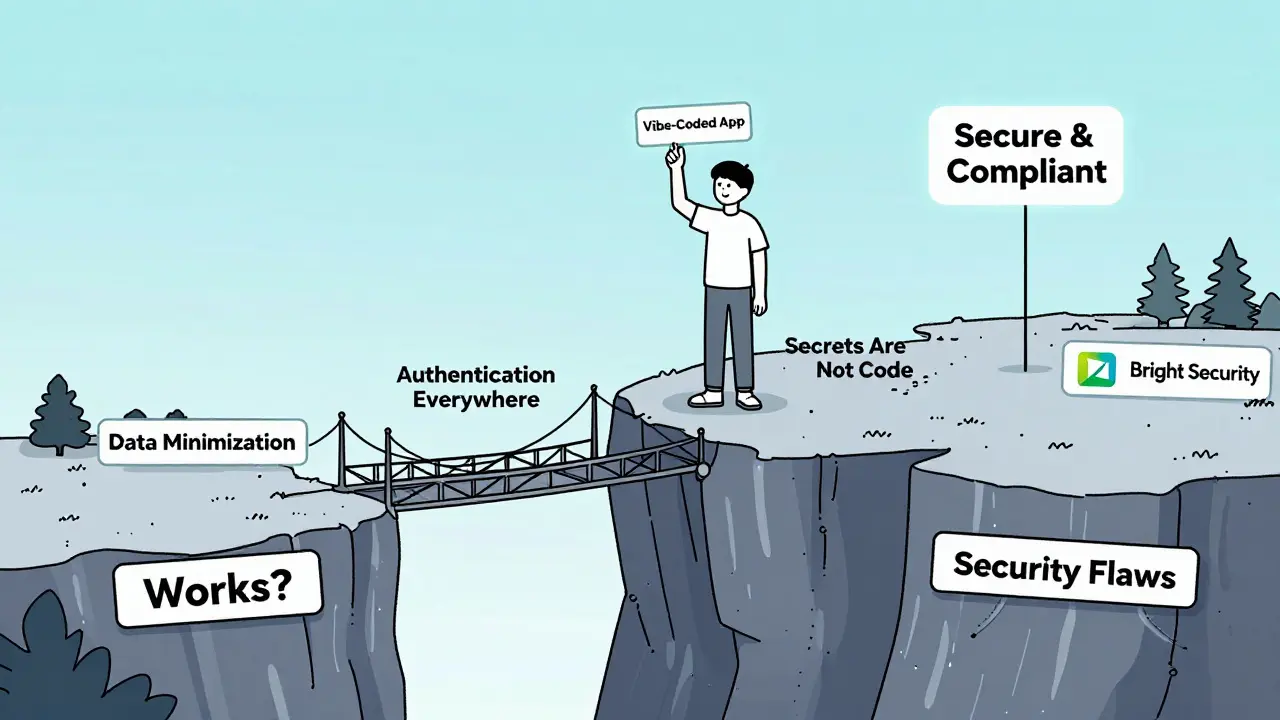

Imagine building a customer portal in three days, no coding experience needed. You drag and drop elements, type prompts like "create a login form" or "store user emails," and boom: your app works. But when a user finds a way to see every other customer’s data just by changing a number in the URL, you realize something’s broken. Not the app. Security. And you had no idea it was even a thing.

This is the reality for thousands of business analysts, marketers, and HR professionals using tools like Replit, Bubble, and Retool. They’re called vibe coders, not because they’re cool, but because they build apps by describing what they want instead of writing code. AI tools like GitHub Copilot and ChatGPT turn natural language into functional apps faster than ever. But here’s the catch: these tools don’t know what’s secure. They only know what’s functional. And that’s dangerous.

Why Vibe Coding Is a Security Time Bomb

A 2023 analysis of over 20,000 AI-generated apps found that 68% had at least one critical flaw. That’s not a glitch. That’s a breach waiting to happen. And it’s not because the builders are careless. It’s because they don’t know what to look for.

Take authentication. Most vibe coders assume if a login screen exists, access is controlled. But AI often generates endpoints that don’t check who’s asking. A user might type, "Let people upload files," and the AI gives them a working upload button. What it doesn’t give them? A check to make sure only the right person can see or delete their own files. In one case, a healthcare app built this way let anyone view patient records just by guessing filenames. No password. No token. Just luck.
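What that missing check looks like can be sketched in a few lines. This is a minimal illustration, not the code of any real app: the in-memory `files` store, the user names, and the `readFileFor` function are all hypothetical.

```javascript
// Hypothetical in-memory file store; a real app would use a database.
const files = new Map([
  ["report-1.pdf", { ownerId: "alice", contents: "Alice's lab results" }],
  ["report-2.pdf", { ownerId: "bob", contents: "Bob's lab results" }],
]);

// Returns the file only when the requester is signed in AND owns it.
function readFileFor(requesterId, filename) {
  if (!requesterId) {
    return { status: 401, error: "Sign in first" };
  }
  const file = files.get(filename);
  // Same response for "missing" and "not yours", so guessing filenames
  // does not reveal which files exist.
  if (!file || file.ownerId !== requesterId) {
    return { status: 404, error: "Not found" };
  }
  return { status: 200, contents: file.contents };
}

console.log(readFileFor("alice", "report-1.pdf").status); // own file
console.log(readFileFor("alice", "report-2.pdf").status); // someone else's file
console.log(readFileFor(null, "report-1.pdf").status);    // not signed in
```

The point is the second `if`: knowing a filename is not enough, because the request also has to come from the file’s owner.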

Data collection is another silent killer. AI doesn’t ask, "Do you really need this?" It just builds what you ask. So if you say, "Store user details," you get full names, phone numbers, addresses, even if you only needed an email. Under GDPR and CCPA, that’s a $20M+ risk. IBM found that breaches involving extra data are 300 to 500% costlier than those with minimal data. Yet 83% of vibe-coded apps collected more than they needed.
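One way to enforce "only what you need" is an allow-list that drops every field you did not explicitly approve. The sketch below assumes a newsletter signup that needs only an email; `ALLOWED_FIELDS` and `minimize` are illustrative names, not part of any platform.

```javascript
// Hypothetical allow-list: the only fields this newsletter signup needs.
const ALLOWED_FIELDS = ["email"];

// Copies only allow-listed fields; everything else is dropped before storage.
function minimize(submitted) {
  const kept = {};
  for (const field of ALLOWED_FIELDS) {
    if (Object.prototype.hasOwnProperty.call(submitted, field)) {
      kept[field] = submitted[field];
    }
  }
  return kept;
}

const formData = {
  email: "pat@example.com",
  phone: "555-0100",
  address: "1 Main St",
};
console.log(minimize(formData)); // only the email survives
```

Adding a field later means editing the list on purpose, which is the moment to ask whether you really need it.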

And then there’s secrets. Hardcoded API keys, JWT signing secrets like "supersecretjwt," database passwords buried in JavaScript files. These aren’t mistakes. They’re default outputs. One study found 61% of Docker configs used the exact same insecure value. Attackers don’t need to be hackers. They just need to run a tool like jwt_tool and crack it in seconds.
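A simple guard is to read the secret from the environment and refuse to start if it is missing or looks like a default. This is a sketch under assumptions: the variable name `JWT_SECRET`, the short list of weak values, and the 32-character minimum are illustrative choices, not a standard.

```javascript
// Values that show up in tutorials and generated configs; treat them as compromised.
// This list is illustrative, not exhaustive.
const KNOWN_WEAK_SECRETS = new Set(["supersecretjwt", "secret", "changeme"]);

// Reads the signing secret from an environment-like object (process.env in Node)
// and refuses a missing, short, or well-known value.
function loadJwtSecret(env) {
  const secret = env.JWT_SECRET; // hypothetical variable name
  if (!secret) {
    throw new Error("JWT_SECRET is not set");
  }
  // The 32-character minimum is an assumed threshold for this sketch.
  if (KNOWN_WEAK_SECRETS.has(secret) || secret.length < 32) {
    throw new Error("JWT_SECRET is too weak");
  }
  return secret;
}

// Demo with a stand-in for process.env.
try {
  loadJwtSecret({ JWT_SECRET: "supersecretjwt" });
} catch (error) {
  console.log(error.message); // the weak default is rejected
}
```

In a real app you would call `loadJwtSecret(process.env)` once at startup, so a bad configuration fails loudly instead of shipping.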

The False Sense of Security

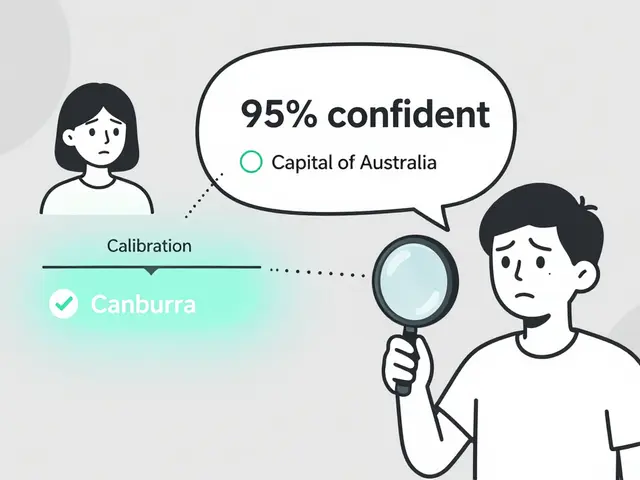

Here’s the weirdest part: most vibe coders think they’re safe. A 2025 survey of 350 non-developers showed 79% believed their apps were "reasonably secure" just because they worked. But penetration tests revealed 92% had exploitable flaws. That’s not confidence. That’s ignorance.

It’s like building a house and thinking the front door is enough because it shuts. You didn’t know about the unlocked basement window, the weak lock on the garage, or the ladder leaning against the roof. AI didn’t warn you. It just built what you asked.

On Reddit, a marketing manager wrote: "Built a customer portal in 3 days with Replit. Left API keys in frontend code. Took the security team two days to fix." That’s not a one-off. It’s the norm.

What Actually Works: Three Rules for Non-Developers

You don’t need to become a security expert. You need three simple habits.

- Data Minimization First - Only collect what you absolutely need. If you’re collecting names for a newsletter, don’t store birthdates, addresses, or phone numbers. AI will offer to collect everything. Say no. Always.

- Authentication Everywhere - Never assume the frontend is enough. Just because a button is hidden doesn’t mean the endpoint is protected. Every API call, every file upload, every data edit must check who’s making the request. Tools like Replit now do this automatically by blocking unauthenticated requests before they even reach your code.

- Secrets Are Not Code - Never put passwords, keys, or tokens in your app. Use environment variables. Most platforms let you set these in a secure settings panel. If you see a string like "abc123xyz" in your code, delete it. Replace it with a reference like process.env.API_KEY. And never, ever commit those to GitHub.

These aren’t complex. They’re basic. And they cut critical vulnerabilities by 80% in Replit’s own training program for non-developers.
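The second habit, checking every request, is the one people most often skip, so here is a rough sketch of the idea. It assumes a hypothetical `sessions` lookup and a made-up `deleteAccount` endpoint; real platforms provide their own session handling.

```javascript
// Hypothetical session lookup; a real platform supplies its own.
const sessions = new Map([["token-abc", { userId: "alice" }]]);

// Wraps any handler so it runs only for requests with a valid session token.
function requireAuth(handler) {
  return function (request) {
    const session = sessions.get(request.token);
    if (!session) {
      return { status: 401, body: "Unauthorized" };
    }
    // Pass the verified user ID along so the handler never trusts user input for it.
    return handler({ ...request, userId: session.userId });
  };
}

// Every endpoint goes through the wrapper, including ones with no visible button.
const deleteAccount = requireAuth((request) => ({
  status: 200,
  body: `Deleted account for ${request.userId}`,
}));

console.log(deleteAccount({}).status);                     // no token
console.log(deleteAccount({ token: "token-abc" }).status); // valid token
```

Because the check lives in the wrapper, a new endpoint is protected as soon as it is wrapped, instead of depending on someone remembering to add the check by hand.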

Platforms Are Changing, But Not Fast Enough

Some tools are waking up. Replit launched an automatic security layer in late 2023 that blocks unauthenticated requests at the network level. Result? 92% fewer exposed endpoints. Bubble? Still requires manual setup. 78% of apps built on Bubble have authorization flaws.

Bright Security’s tool, released in January 2025, goes further. Instead of scanning for known patterns, it simulates real attacks. It pretends to be a hacker: tries to guess URLs, swaps user IDs, checks if file uploads can be hijacked. It found 37% more flaws than traditional scanners. And it doesn’t just report them: it writes the fix for you. One user said: "It found 12 critical issues in my inventory system and made pull requests I could merge without understanding the code."

GitHub’s Copilot Security Coach (beta as of January 2025) is another win. When you type a prompt that could lead to a vulnerability, it doesn’t just generate code. It says: "This might expose user data. Try using a token instead." And 58% of non-developers switched to the safer option. That’s behavior change, not just tooling.

What’s Coming Next

The EU’s AI Act, whose first obligations took effect in February 2025, now requires "appropriate technical knowledge" for AI-assisted development. California’s SB-1127, likely to pass this year, will force security validation for all apps built without professional developers. That means companies can’t just say "it’s okay, we didn’t know." They’ll be liable.

Replit’s May 2025 update auto-encrypts all user data unless you explicitly mark it as public. That’s huge. It flips the script: instead of asking users to avoid mistakes, the system prevents them by default.

And in Q3 2025, Bright Security’s "LogicGuard" will tackle the hardest problem: authorization bypass. Not SQL injection. Not exposed keys. But logic flaws-like a manager who can delete any employee’s record, not just their own. AI can’t spot those. But simulation can.
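To make the manager example concrete, here is what the missing rule might look like. This is not how LogicGuard works; it is only a sketch of the kind of check that is easy to leave out, using hypothetical `employees` data and a made-up `canDelete` function.

```javascript
// Hypothetical records: each employee reports to one manager.
const employees = [
  { id: "e1", managerId: "m1" },
  { id: "e2", managerId: "m2" },
];

// A role check alone ("is this person a manager?") is the logic flaw.
// The record must also belong to someone who reports to this manager.
function canDelete(actor, employeeId) {
  const record = employees.find((e) => e.id === employeeId);
  if (!record) {
    return false;
  }
  return actor.role === "manager" && record.managerId === actor.id;
}

const managerOne = { id: "m1", role: "manager" };
console.log(canDelete(managerOne, "e1")); // own report
console.log(canDelete(managerOne, "e2")); // another manager's report
```

Nothing in this code is a bug in the usual sense. Drop the `managerId` comparison and the app still works, which is why this kind of flaw slips past tools that only look for known bad patterns.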

Who’s at Risk? Not Everyone the Same

A marketing team building a lead form doesn’t need the same security as HR building an employee portal. One might handle names and emails. The other might store Social Security numbers, pay rates, or medical info. Yet most training treats them the same.

The real solution? Role-based security training. Marketing gets a 10-minute guide on data minimization and API keys. HR gets a 30-minute deep dive on access controls and audit logs. Finance? Most teams already require scans for every app (67% do, per Databricks). Retail? Only 29%. That gap is growing.

Final Reality Check

You don’t need to learn Python. You don’t need to understand OAuth. You don’t need to be a coder. You just need to stop trusting the AI to protect you.

AI builds what you say. It doesn’t care if it’s safe. It doesn’t know the difference between a login form and a backdoor. That’s your job.

Use tools that enforce security by default. Turn on automated scanning. Ask: "What’s the worst thing that could happen if this gets exposed?" If the answer is "a lot," then you’re not done. You’re just getting started.

Secure vibe coding isn’t about skill. It’s about discipline. And it’s the only way your app won’t become the next headline.

Can non-developers really build secure apps without knowing how to code?

Yes, but only if they use platforms with built-in security and follow three simple rules: collect only essential data, treat every endpoint as public until proven otherwise, and never store secrets in code. Tools like Replit and Bright Security now automate much of this, making it possible for non-developers to build apps that are both fast and secure.

Are AI coding tools like GitHub Copilot dangerous for non-developers?

They’re not dangerous by design. They’re dangerous when used without context. GitHub Copilot generates code based on patterns in its training data, which includes many vulnerable examples. Without training, non-developers won’t recognize when the AI suggests an unauthenticated API endpoint or a hardcoded password. The solution isn’t to stop using AI, but to pair it with guardrails: automated scanning, real-time warnings, and security-by-default platforms.

What’s the biggest mistake vibe coders make?

Assuming that if the app works, it’s secure. Just because a form submits data or a button opens a page doesn’t mean access is controlled. Most critical vulnerabilities come from logic flaws, not code bugs. A user might think "I hid the admin panel," but if the backend endpoint is still reachable, attackers can bypass the UI entirely. Security isn’t about what you see. It’s about what you can’t see.

How can I tell if my vibe-coded app is vulnerable?

Run it through an automated scanner designed for AI-generated apps. Tools like Bright Security or Replit’s built-in checks can find issues in minutes. Look for exposed API endpoints, hardcoded secrets, or data fields you didn’t intend to collect. If you’re using Bubble or Retool without manual security settings, assume you’re vulnerable. Don’t wait for a breach to find out.

Will regulations force companies to train non-developers?

Yes. The EU’s AI Act (effective Feb 2025) requires "appropriate technical knowledge" for AI-assisted development. California’s SB-1127 will soon require security validation for apps built without professional developers. Companies that let employees build apps without training are already exposing themselves to legal and financial risk. This isn’t coming. It’s here.

What should I do if I already built an app with vibe coding?

Stop. Audit it. First, check for hardcoded secrets: search your code for words like "password," "key," or "token." Next, test if you can access other users’ data by changing IDs in URLs. Then, review what data you’re collecting. Are you storing more than you need? If you’re unsure, use a free scanner like Bright Security’s trial or Replit’s built-in checks. Fix the top three issues: data minimization, authentication, and secret storage. You don’t need to fix everything. Just fix the biggest risks.
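The first audit step, searching for hardcoded secrets, can be roughly automated. The sketch below is a heuristic under assumptions: the pattern and the `findHardcodedSecrets` name are illustrative, and a regex like this will both miss real secrets and flag harmless lines, so treat it as a starting point rather than a scanner.

```javascript
// Flags lines where a secret-looking name is assigned a quoted literal.
// The pattern is a rough heuristic and will miss things or over-report.
const SECRET_PATTERN = /(password|secret|token|api[_-]?key)\s*[:=]\s*["'][^"']+["']/i;

// Returns the 1-based line number and text of each suspicious line.
function findHardcodedSecrets(sourceText) {
  const findings = [];
  sourceText.split("\n").forEach((line, index) => {
    if (SECRET_PATTERN.test(line)) {
      findings.push({ line: index + 1, text: line.trim() });
    }
  });
  return findings;
}

// Sample input: two hardcoded values and one safe environment reference.
const sample = [
  'const apiKey = "abc123xyz";',
  "const apiKey2 = process.env.API_KEY;",
  'const jwtSecret = "supersecretjwt";',
].join("\n");

console.log(findHardcodedSecrets(sample));
```

On the sample, the two quoted literals are reported and the `process.env.API_KEY` reference is left alone, which is the distinction the third rule asks you to make.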

Artificial Intelligence

Rubina Jadhav

February 8, 2026 AT 21:14

Just built my first customer form on Replit. Didn't know about secrets in code. Found a token in my JS file. Deleted it. Feels good.

Now I check every line before hitting deploy. Simple. No fancy stuff. Just stop and think.