In early 2026, a Reddit thread titled "AI chatbots pretending to be human" collected over 1,200 user reports, and 89% of those users said they felt angry after discovering they had been interacting with AI rather than a human. This isn't just frustration; it's a growing crisis in digital trust. As AI becomes more common in user interfaces, deceptive design tactics known as dark patterns are evolving into more sophisticated and harmful forms. These manipulative techniques exploit cognitive biases to trick users into actions they wouldn't otherwise take. For designers and developers, the challenge is clear: how do we prevent AI from being used to deceive users? This article breaks down real-world examples, regulatory changes, and actionable checks to keep AI interfaces ethical.

What Are AI Dark Patterns?

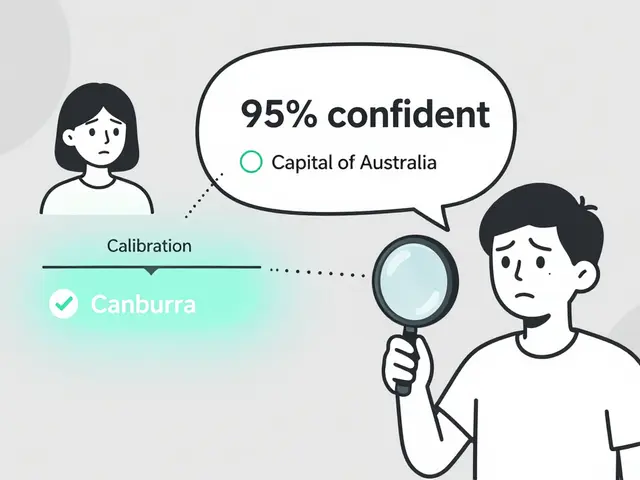

Dark patterns are interface designs that manipulate users into acting against their own interests. In AI contexts, these patterns take on new forms. AI-generated dark patterns include false appearances, where AI-created content mimics authentic material (like fake reviews or deepfake videos), and impersonation, where an AI system pretends to be human. Researcher Luiza Jarovsky defined the category in 2025 as "AI applications or features that attempt to make people believe that a particular sound, text, picture, video, or any media is real when it was AI-generated or believe that a human interacts with them when it's an AI-based system." Unlike traditional dark patterns, AI versions can adapt to user behavior in real time, which makes them harder to detect.

Why AI Dark Patterns Are More Dangerous Than Traditional Ones

Traditional dark patterns rely on static deceptive interfaces, while AI-powered versions personalize manipulation based on user data. According to Scalable Path's December 2024 analysis, AI dark patterns achieve 37% higher conversion rates for targeted actions than traditional deceptive designs. That personalization also makes them harder to spot: Omnisearch's January 2025 report found that only 12% of users can identify AI-generated fake reviews, compared to 28% who detect human-written deceptive content. Scale compounds the problem, because a single company can deploy thousands of personalized deceptive interfaces overnight and reach millions of users. The European Union's AI Office warned in September 2025 that "AI dark patterns bypass traditional user defenses," so the habits users have built against static tricks offer far less protection.

Real-World Examples of AI Dark Patterns in Action

Fake urgency notifications generated by AI are common on e-commerce platforms. An AI system might display "Only 1 item left!" when inventory is plentiful. In 2025, Trustpilot reviews mentioning "fake scarcity" averaged 2.1/5 stars for platforms using this tactic. Another example is AI chatbots pretending to be human: a Reddit thread from January 2026 gathered reports from 1,247 users who felt deceived after discovering they'd been chatting with AI. One user, "UXWarrior87," documented an AI-powered fitness app that required 17 steps to cancel a subscription, compared to just 3 steps to sign up. Even with persistent effort, only 73% of users who tried managed to cancel. These examples show how AI amplifies manipulation through personalization and scale.
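The fake-scarcity example works because the urgency text is disconnected from real inventory. A minimal Python sketch of the honest alternative, assuming a hypothetical `Product` record that is filled from the live stock count and a hypothetical low-stock threshold of 5 units, derives the banner from the same number the warehouse uses:

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy value: show scarcity messaging only at or below this stock level.
LOW_STOCK_THRESHOLD = 5


@dataclass
class Product:
    name: str
    units_in_stock: int  # read from the live inventory system, never generated by a model


def scarcity_message(product: Product, threshold: int = LOW_STOCK_THRESHOLD) -> Optional[str]:
    """Return an urgency banner only when the stock level makes it true."""
    stock = product.units_in_stock
    if stock <= 0:
        return "Out of stock"
    if stock <= threshold:
        unit = "item" if stock == 1 else "items"
        return f"Only {stock} {unit} left"
    return None  # plenty of stock: show no urgency banner at all


print(scarcity_message(Product("Yoga mat", 1)))    # Only 1 item left
print(scarcity_message(Product("Yoga mat", 240)))  # None
```

The design choice is that an AI layer may reword or restyle the banner, but it never supplies the number; if the inventory count does not justify urgency, there is nothing to display.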

Global Regulations Targeting AI Dark Patterns

Regulators are catching up. India's Central Consumer Protection Authority prohibits 13 specified dark patterns under guidelines notified in November 2023, with fines up to ₹500,000 (about $6,000 USD) per violation. Since February 2, 2025, the EU AI Act has prohibited AI systems that use manipulative or deceptive techniques to materially distort people's behavior in harmful ways, with fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. The Act's transparency obligations, which require telling people when they are interacting with an AI system and labeling deepfakes, apply from August 2, 2026. These regulations reflect growing awareness: the U.S. Federal Trade Commission documented a 300% increase in dark pattern-related complaints from 2023 to 2025, with AI-generated variants making up 68% of cases in Q4 2025. Finance Watch's March 2025 survey showed that 68% of consumers permanently abandon services after discovering AI deception, adding to the pressure for regulation.

A Practical 5-Step Ethical Design Audit

UX Tigers recommends a straightforward audit process for AI interfaces:

- Identify all user decision points (an average of 12.7 per app flow). Look for places where users choose between options, like signing up or making purchases.

- Map cognitive biases exploited at each point. Common ones include fear of missing out (FOMO), social guilt, and commitment bias. For example, "only 1 left" notifications exploit FOMO.

- Verify transparency of AI involvement. At least 92% of users must correctly identify when they're interacting with AI. If users think a chatbot is human, that's a red flag.

- Test cancellation and opt-out paths. Cancellation should take no more than 3 steps and succeed for at least 95% of users who attempt it; a complex cancellation process is a red flag. Raidboxes' December 2025 analysis found 82% approval ratings for services with simple opt-out paths.

- Document the justification for any persuasive elements. If a design choice could be seen as manipulative, explain why it's ethical. The Partnership on AI's Ethical AI Design Framework version 2.1 (November 2025) requires "manipulation risk scoring" for all AI interfaces, and scores above 0.7 on its 0-1 scale need executive sign-off.
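Steps 3 to 5 of the audit come with numeric thresholds, so they can be checked automatically once usability-test results exist. The Python sketch below assumes a hypothetical `FlowAudit` record filled in by your team; the manipulation risk score is taken as an input from whatever assessment you use and is not a reimplementation of the Partnership on AI's scoring. Steps 1 and 2 (mapping decision points and biases) remain a manual review.

```python
from dataclasses import dataclass
from typing import List, Optional

# Thresholds as stated in the audit steps above.
MAX_CANCEL_STEPS = 3
MIN_CANCEL_SUCCESS = 0.95
MIN_AI_IDENTIFICATION = 0.92
RISK_SIGNOFF_THRESHOLD = 0.7


@dataclass
class FlowAudit:
    """Test results for one user flow (hypothetical schema)."""
    name: str
    cancel_steps: int
    cancel_attempts: int
    cancel_successes: int
    ai_id_total: int          # testers asked "were you talking to an AI?"
    ai_id_correct: int        # testers who answered correctly
    manipulation_risk: float  # 0-1 score from your own risk assessment
    has_persuasive_elements: bool = False
    justification: str = ""   # written rationale for persuasive elements
    executive_signoff: bool = False


def rate(hits: int, total: int) -> Optional[float]:
    """Share of successful outcomes, or None if nothing was tested."""
    return hits / total if total > 0 else None


def audit_flow(flow: FlowAudit) -> List[str]:
    """Return human-readable findings; an empty list means the flow passes."""
    findings: List[str] = []

    # Step 3: users must be able to tell they are dealing with AI.
    ai_rate = rate(flow.ai_id_correct, flow.ai_id_total)
    if ai_rate is None:
        findings.append("AI identification not tested")
    elif ai_rate < MIN_AI_IDENTIFICATION:
        findings.append(
            f"only {ai_rate:.0%} of testers identified the AI (target {MIN_AI_IDENTIFICATION:.0%})"
        )

    # Step 4: short, reliable cancellation path.
    if flow.cancel_steps > MAX_CANCEL_STEPS:
        findings.append(f"cancellation takes {flow.cancel_steps} steps (max {MAX_CANCEL_STEPS})")
    cancel_rate = rate(flow.cancel_successes, flow.cancel_attempts)
    if cancel_rate is None:
        findings.append("cancellation path not tested")
    elif cancel_rate < MIN_CANCEL_SUCCESS:
        findings.append(
            f"cancellation success {cancel_rate:.0%} (target {MIN_CANCEL_SUCCESS:.0%})"
        )

    # Step 5: persuasive elements need a written justification,
    # and high-risk flows need executive sign-off.
    if flow.has_persuasive_elements and not flow.justification.strip():
        findings.append("persuasive elements lack a documented justification")
    if flow.manipulation_risk > RISK_SIGNOFF_THRESHOLD and not flow.executive_signoff:
        findings.append(f"risk score {flow.manipulation_risk:.2f} needs executive sign-off")

    return findings


fitness_app = FlowAudit(
    name="fitness subscription",
    cancel_steps=17, cancel_attempts=100, cancel_successes=73,  # from the example above
    ai_id_total=50, ai_id_correct=40,                            # hypothetical test results
    manipulation_risk=0.8, has_persuasive_elements=True,         # hypothetical values
)
print(fitness_app.name)
for finding in audit_flow(fitness_app):
    print("-", finding)
```

Run against the fitness-app flow described earlier (with hypothetical values for the fields the example doesn't give), every check fails, which is the kind of concrete list that makes the case to stakeholders.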

How to Overcome Implementation Challenges

Implementing ethical checks isn't always easy. Omnisearch's 2025 data shows 68% of conversion rate optimization teams initially resist ethical constraints, citing 15-22% conversion rate reductions when deceptive elements are removed. But the long-term benefits outweigh the short-term losses: companies that prioritize transparency see 82% approval ratings from users, according to Raidboxes' December 2025 analysis. Training is key, since UX designers need 8-12 weeks of specialized training to implement these checks effectively. Certification programs from Nielsen Norman Group saw 300% enrollment growth from 2024 to 2025. Start small: audit one user flow at a time, and share results with stakeholders to build buy-in. For example, a retail company reduced subscription cancellations by 40% after simplifying its opt-out process to 2 steps.

Looking Ahead: The Future of Ethical AI UX

Forrester's January 2026 report predicts three major trends: AI-powered dark pattern detection tools will reach 95% accuracy by 2027, regulatory fines for AI dark patterns will average $2.1 million per violation by 2028, and consumer awareness will drive 80% of e-commerce platforms to adopt transparent AI labeling by 2029. MIT's Digital Ethics Lab warns that without standardized frameworks, AI dark patterns could seriously erode digital trust by 2030. McKinsey's January 2026 report, however, suggests market forces will correct excessive manipulation as consumers reward transparent AI implementations. The path forward is clear: ethical design isn't just a moral choice; it's a business necessity.

What's the difference between traditional dark patterns and AI dark patterns?

Traditional dark patterns use static deceptive interfaces, while AI dark patterns adapt in real-time based on user behavior. For example, AI can personalize fake urgency notifications or impersonate humans more effectively. According to Scalable Path (2024), AI dark patterns achieve 37% higher conversion rates than traditional ones due to this adaptability. However, they're harder to detect-only 12% of users identify AI-generated fake reviews versus 28% for human-written deceptive content.

How can I detect AI-generated fake reviews?

AI-generated fake reviews often lack specific details about product use and lean on generic language like "amazing experience" without mentioning any features. Tools like EthicalAI (founded Q3 2024) scan for signals such as identical phrasing across multiple reviews or inconsistent timestamps. The U.S. FTC reported that AI-generated variants made up 68% of dark pattern complaints in Q4 2025, highlighting the need for better detection methods.
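The two signals above can be approximated with simple heuristics. The Python sketch below is not EthicalAI's method; it assumes that "identical phrasing" can be measured as Jaccard similarity over word trigrams, and that "inconsistent timestamps" show up as unusual bursts of reviews within a short window. The 0.6 similarity threshold, 10-minute window, and 5-review minimum are arbitrary starting points, not validated values.

```python
from datetime import datetime, timedelta
from itertools import combinations
from typing import List, Set, Tuple

# Hypothetical defaults; tune them on labeled review data before relying on them.
SIMILARITY_THRESHOLD = 0.6
BURST_WINDOW = timedelta(minutes=10)
BURST_MIN_REVIEWS = 5


def shingles(text: str, n: int = 3) -> Set[Tuple[str, ...]]:
    """Lower-cased word n-grams (trigrams by default), punctuation stripped."""
    words = [w.strip(".,!?;:\"'").lower() for w in text.split()]
    words = [w for w in words if w]
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: Set[Tuple[str, ...]], b: Set[Tuple[str, ...]]) -> float:
    """Overlap between two shingle sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(reviews: List[str],
                    threshold: float = SIMILARITY_THRESHOLD) -> List[Tuple[int, int, float]]:
    """Index pairs of reviews whose phrasing overlaps at or above the threshold."""
    sigs = [shingles(r) for r in reviews]
    pairs = []
    for i, j in combinations(range(len(reviews)), 2):
        score = jaccard(sigs[i], sigs[j])
        if score >= threshold:
            pairs.append((i, j, round(score, 2)))
    return pairs


def burst_starts(timestamps: List[datetime],
                 window: timedelta = BURST_WINDOW,
                 min_reviews: int = BURST_MIN_REVIEWS) -> List[datetime]:
    """Start times of windows that contain at least min_reviews reviews."""
    ts = sorted(timestamps)
    starts = []
    for i, start in enumerate(ts):
        in_window = sum(1 for t in ts[i:] if t - start <= window)
        if in_window >= min_reviews:
            starts.append(start)
    return starts


reviews = [
    "Amazing experience, this blender changed my life and I love it",
    "Amazing experience, this blender changed my life and I love it so much",
    "The lid cracked after two weeks of daily smoothies",
]
print(near_duplicates(reviews))  # [(0, 1, 0.82)]
```

Pairwise comparison is quadratic in the number of reviews, which is fine for a single product page; scanning a whole catalog would call for an approximate technique such as MinHash instead.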

Are there free tools to check for dark patterns in AI interfaces?

Yes. The Partnership on AI offers a free manipulation risk scoring tool for small businesses. UX Tigers also provides an open-source checklist for ethical design audits. While enterprise solutions like EthicalAI charge $1,200/month for advanced scanning, free tools can catch basic issues like hidden cancellation paths or misleading AI transparency. Even these simple checks reduce regulatory risk.

What happens if my company violates AI dark pattern regulations?

Fines vary by region. In the EU, violating the AI Act's ban on manipulative AI practices can cost up to €35 million or 7% of worldwide annual turnover, whichever is higher. India imposes up to ₹500,000 per violation. Beyond fines, reputational damage is severe: Finance Watch's March 2025 survey found 68% of consumers permanently abandon services after discovering AI deception. A major e-commerce platform lost $12 million in quarterly revenue in 2025 after a Reddit thread exposed its AI-generated fake scarcity notifications.

How does transparency about AI involvement help prevent dark patterns?

Clear AI disclosure builds trust and reduces manipulation. When users know they're interacting with AI, they're less likely to fall for impersonation tactics. For example, a health app that states "This chatbot is AI-powered" saw 92% of users correctly identify AI involvement, meeting the audit threshold described above. Raidboxes' December 2025 analysis showed services with transparent AI labeling had 82% approval ratings versus 41% for hidden AI. Article 50 of the EU AI Act requires AI systems that interact directly with people to be designed so those people are informed they are dealing with an AI system, unless this is obvious from the context.
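Disclosure works best when it is built into the message pipeline rather than added as a one-off banner. A minimal Python sketch, assuming a hypothetical chat backend where every message carries a sender type: the session opens with a plain-language disclosure, and every rendered message is labeled, so an AI reply has no unlabeled path to the screen. The disclosure wording and sender names are illustrative, not taken from any regulation or standard.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical disclosure wording; adapt it to your product and legal review.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."

# Hypothetical sender types and the label each one is always shown with.
SENDER_LABELS = {
    "ai": "AI assistant",
    "human_agent": "Support agent (human)",
    "user": "You",
}


@dataclass
class Message:
    sender: str
    text: str

    def render(self) -> str:
        # Every message carries its sender label; there is no unlabeled rendering.
        return f"[{SENDER_LABELS[self.sender]}] {self.text}"


@dataclass
class ChatSession:
    messages: List[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Disclose at the start of the conversation, not in the terms of service.
        self.messages.insert(0, Message("ai", AI_DISCLOSURE))

    def add(self, sender: str, text: str) -> None:
        # Reject unknown sender types so nothing can bypass the labels above.
        if sender not in SENDER_LABELS:
            raise ValueError(f"unknown sender type: {sender!r}")
        self.messages.append(Message(sender, text))

    def transcript(self) -> List[str]:
        return [m.render() for m in self.messages]


session = ChatSession()
session.add("user", "Can I cancel my plan here?")
session.add("ai", "Yes. Open Settings, then Subscription, then Cancel.")
for line in session.transcript():
    print(line)
```

Keeping a separate `human_agent` label also matters for hybrid support desks: when a human takes over, the label changes, so users are never left guessing which one they are talking to.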

Artificial Intelligence
