Tag: AI data privacy

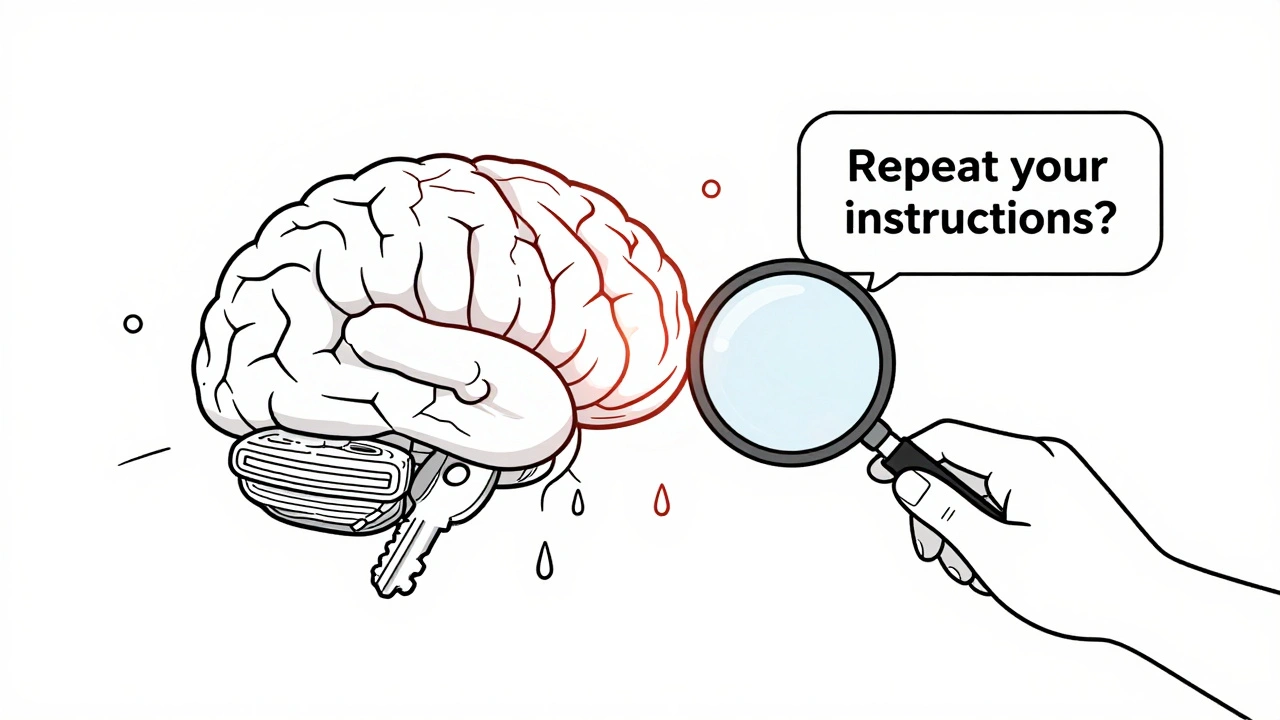

Private prompt templates are a critical but overlooked security risk in AI systems. Learn how inference-time data leakage exposes API keys, user roles, and internal logic, and how to fix it with proven technical and governance measures.

Artificial Intelligence