Tag: LLM budgeting

Learn how to accurately allocate LLM costs across teams using dynamic chargeback models that track token usage, RAG queries, and AI agent loops. Stop guessing. Start optimizing.

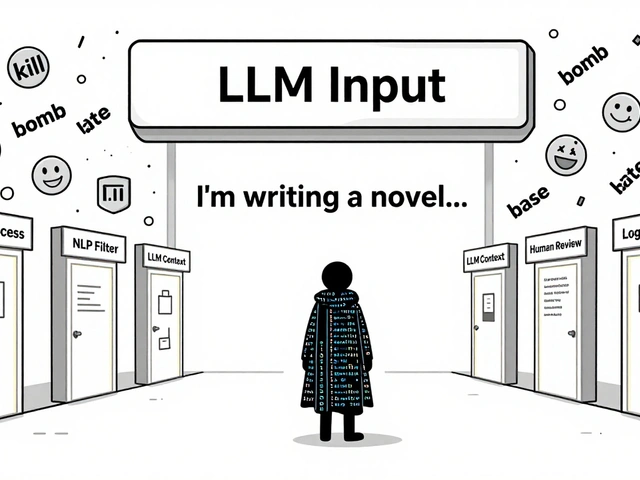

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Prevent Harmful Content in Real Time

Aug 2, 2025

Artificial Intelligence
