Tag: LLM generalization

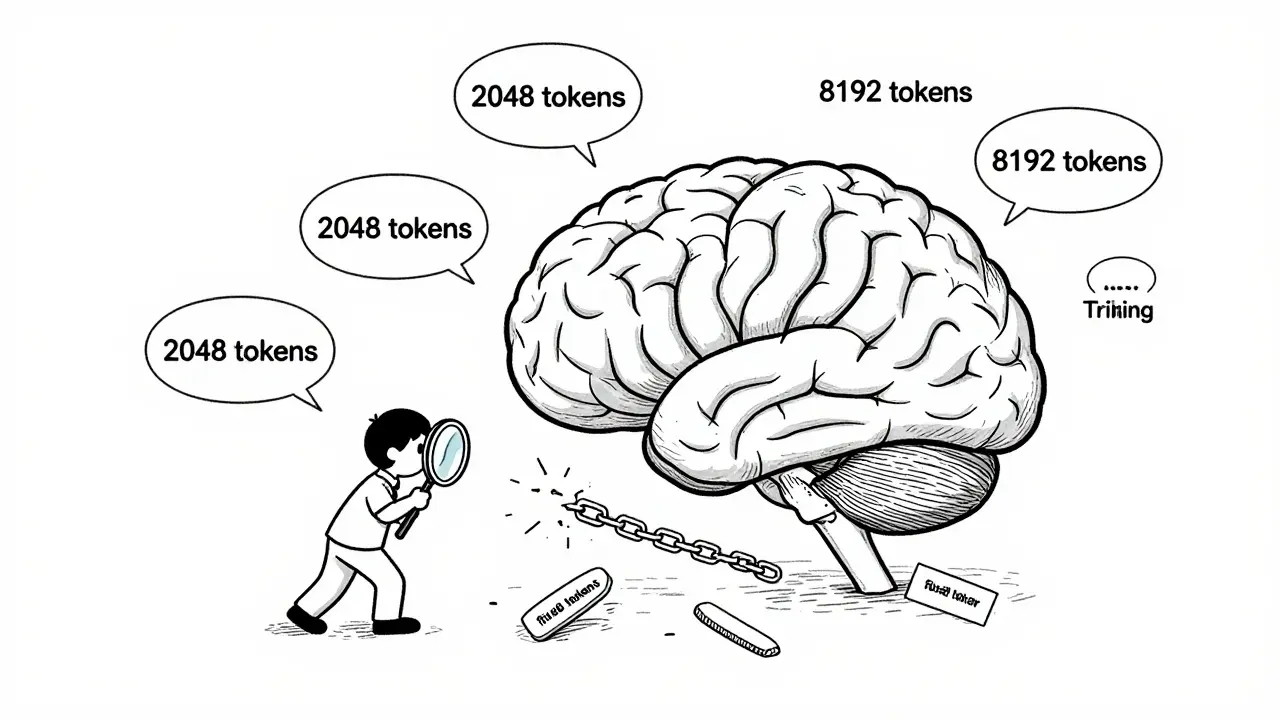

Training duration and token counts alone don't determine LLM generalization. How sequence lengths are structured during training matters more-variable-length curricula outperform fixed-length approaches, reduce costs, and unlock true reasoning ability.

Artificial Intelligence

Artificial Intelligence