Tag: RAG chunking

Chunking strategies determine how well RAG systems retrieve information from documents. Page-level chunking with 15% overlap delivers the best balance of accuracy and speed for most use cases, but hybrid and adaptive methods are rising fast.

Categories

Archives

Recent-posts

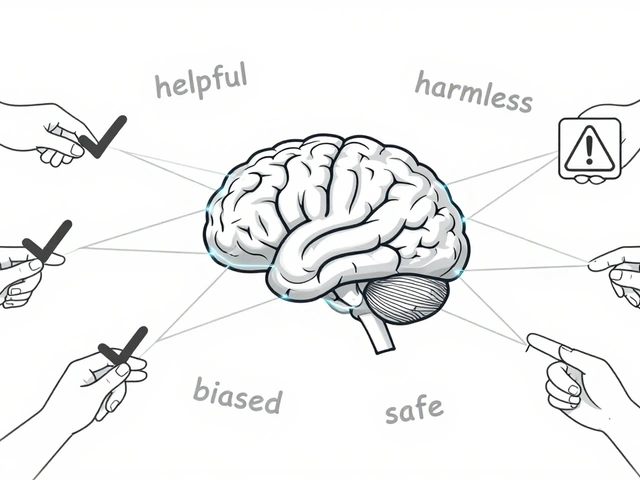

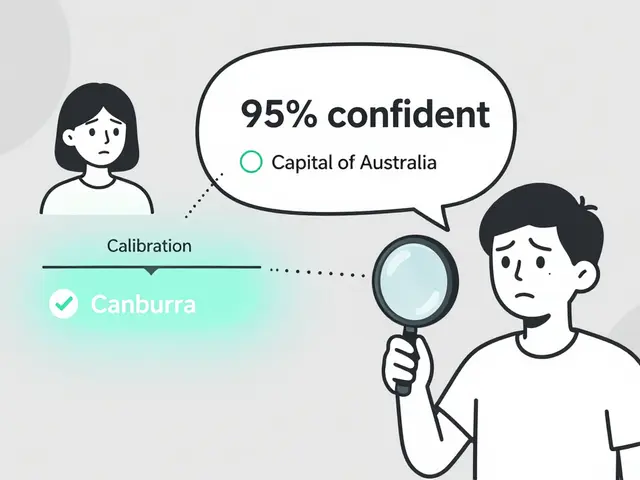

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan, 16 2026

Artificial Intelligence

Artificial Intelligence