Tag: AI uncertainty

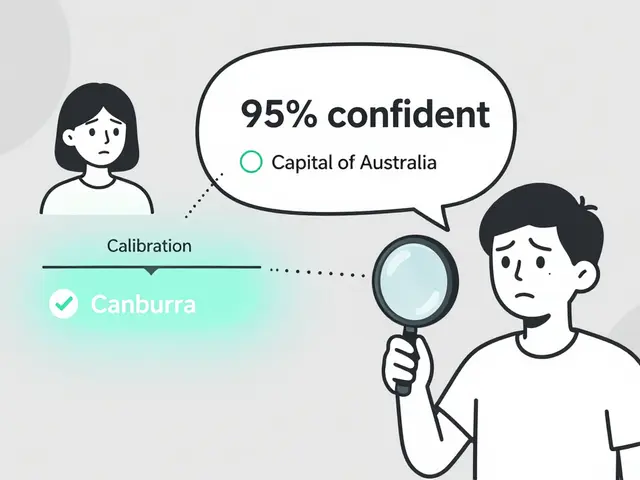

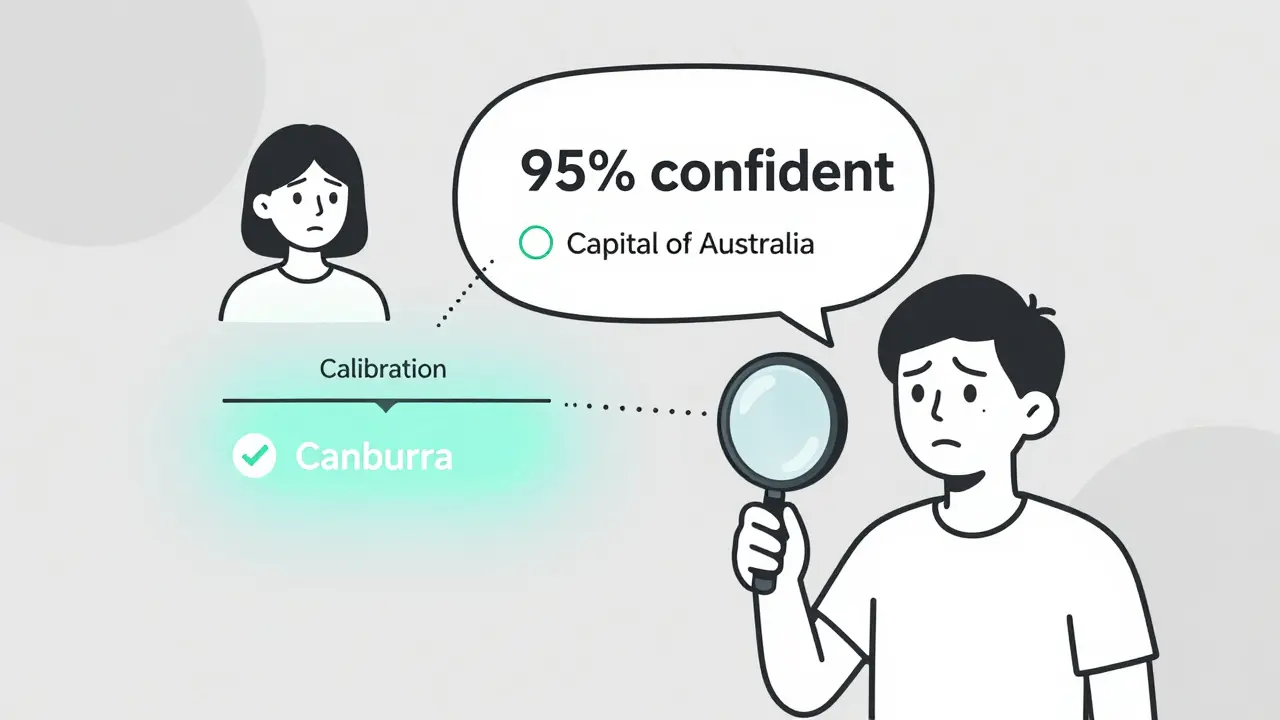

Most LLMs are overconfident in their answers. Token probability calibration fixes this by aligning confidence scores with real accuracy. Learn how it works, which models are best, and how to apply it.

Categories

Archives

Recent-posts

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan, 16 2026

Artificial Intelligence

Artificial Intelligence