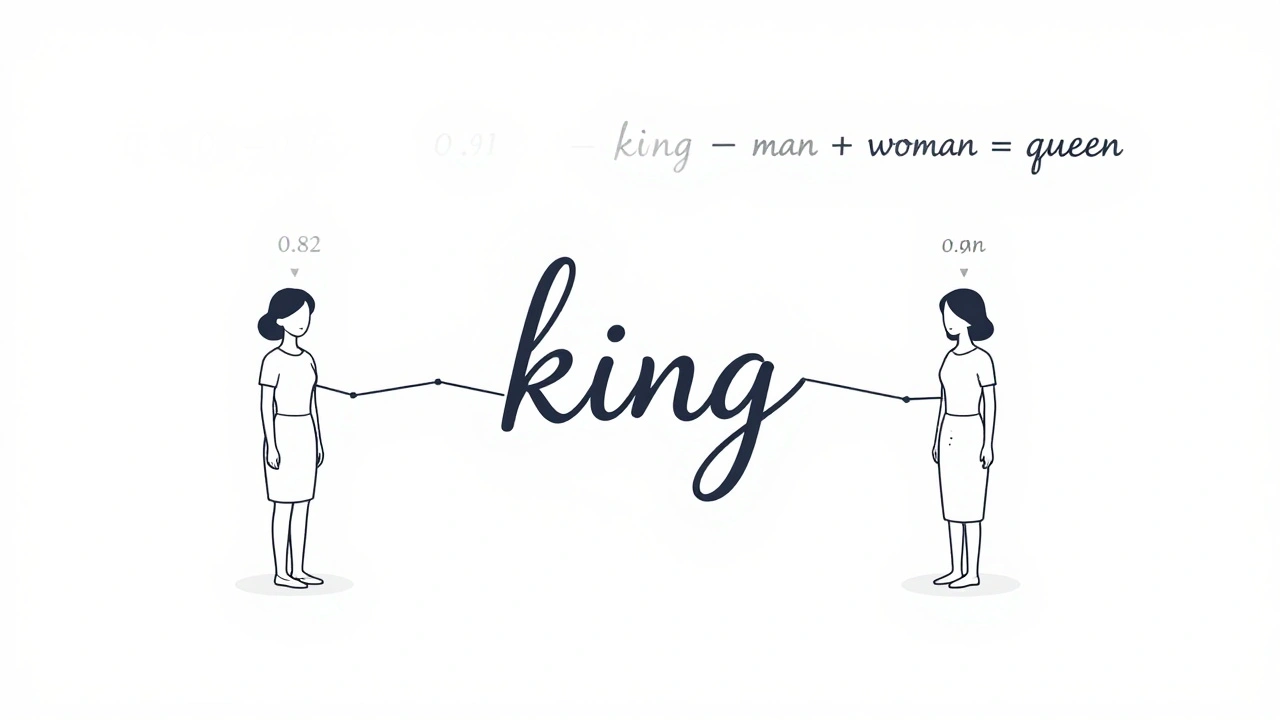

Tag: attention mechanism

Learn how embeddings, attention, and feedforward networks form the core of modern large language models like GPT and Llama. No jargon, just clear explanations of how AI understands and generates human language.

Recent posts

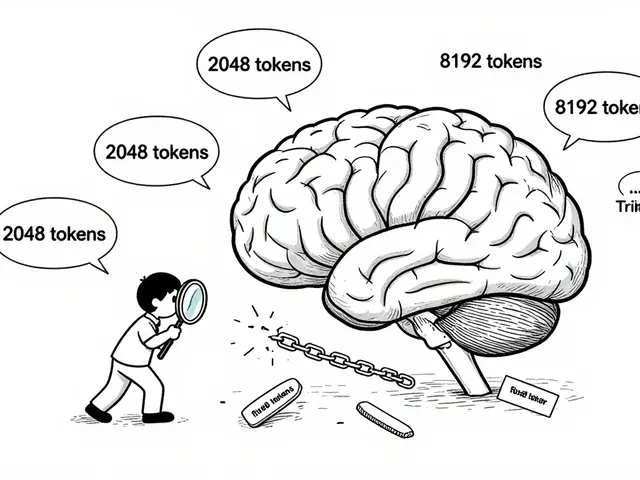

Calibration and Outlier Handling in Quantized LLMs: How to Keep Accuracy When Compressing Models

Jul 6, 2025

Artificial Intelligence
