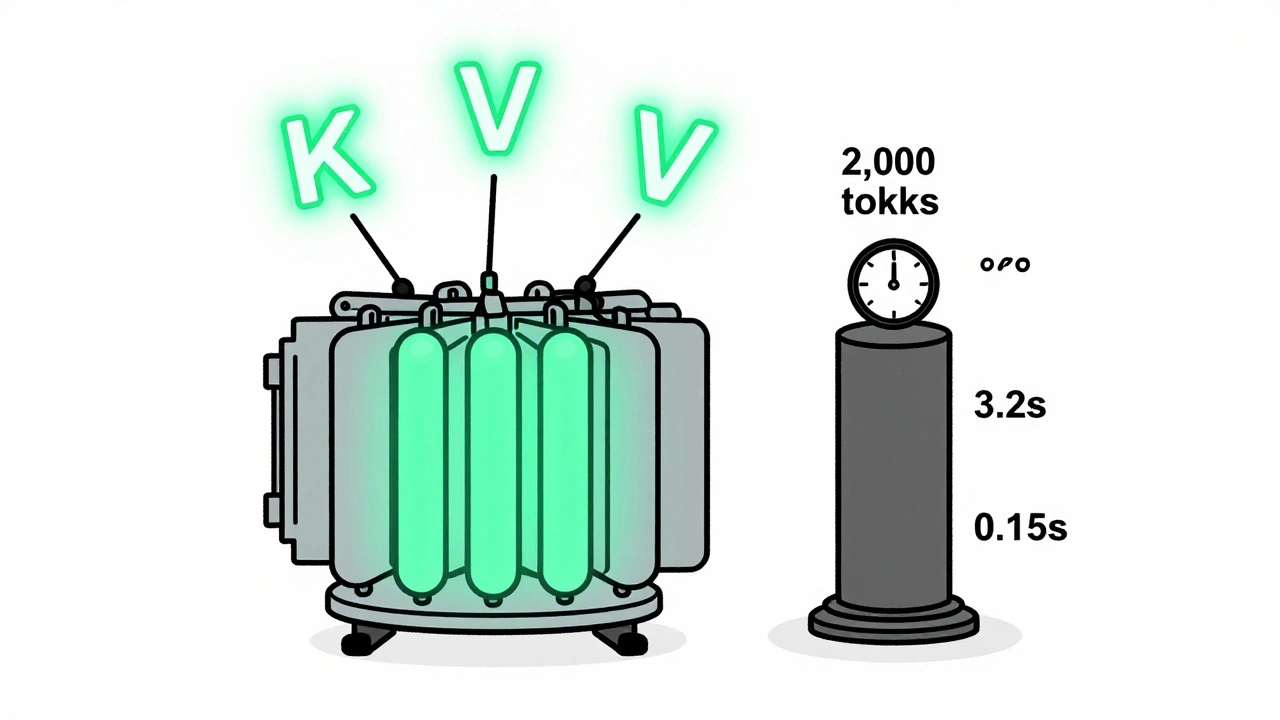

Tag: continuous batching

KV caching and continuous batching are essential for fast, affordable LLM serving. Learn how they reduce memory use, boost throughput, and enable real-world deployment on consumer hardware.

Categories

Archives

Recent-posts

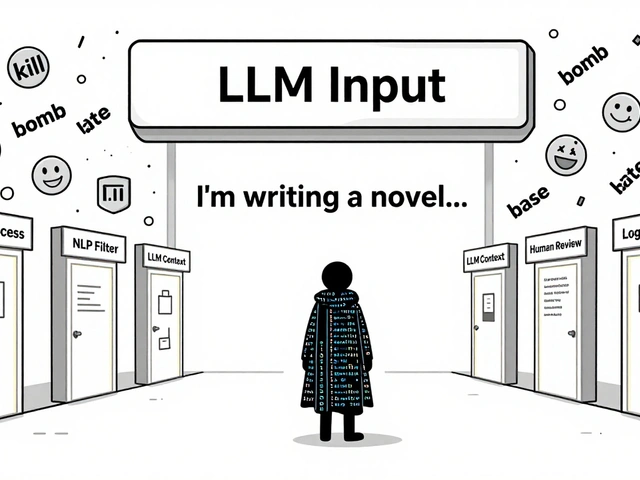

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Prevent Harmful Content in Real Time

Aug, 2 2025

Artificial Intelligence

Artificial Intelligence