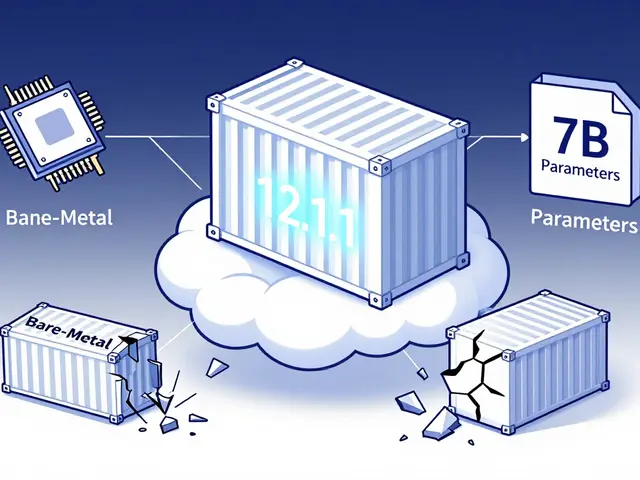

Tag: CPU offloading

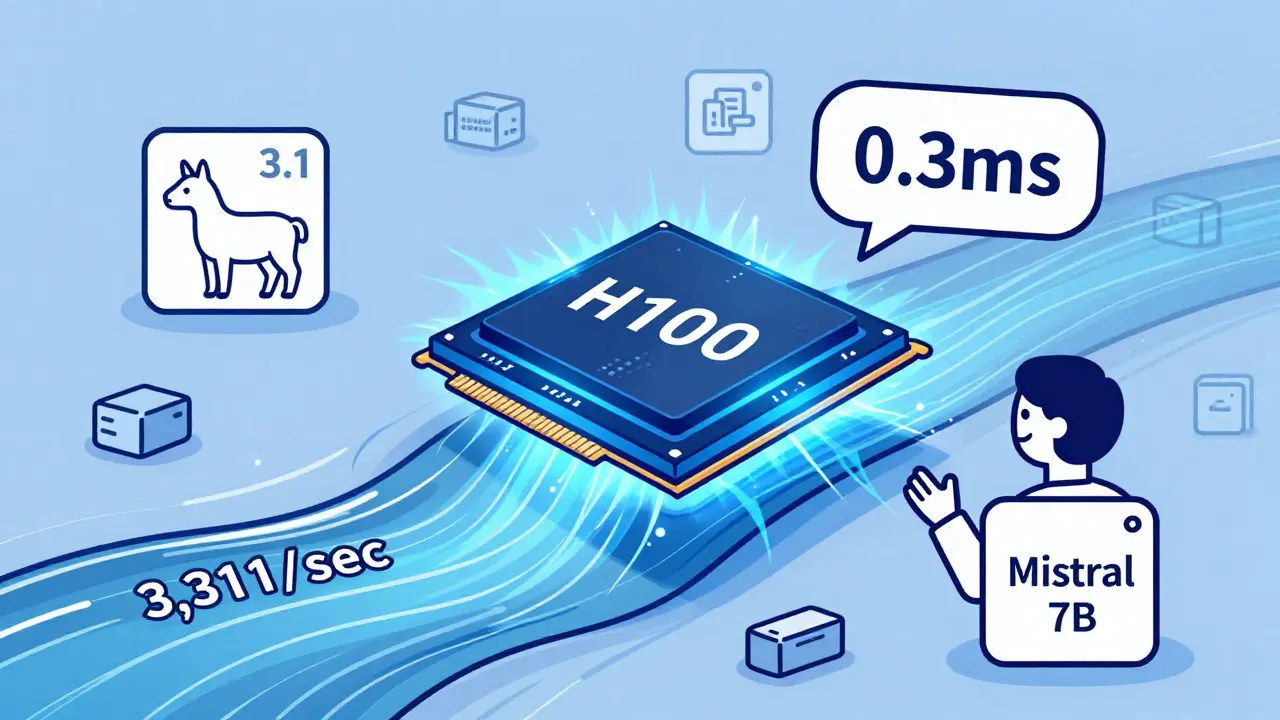

Learn how to choose between NVIDIA A100, H100, and CPU offloading for LLM inference in 2025. See real performance numbers, cost trade-offs, and which option actually works for production.

Recent posts

Key Components of Large Language Models: Embeddings, Attention, and Feedforward Networks Explained

Sep 1, 2025

Artificial Intelligence