Tag: CUDA for LLMs

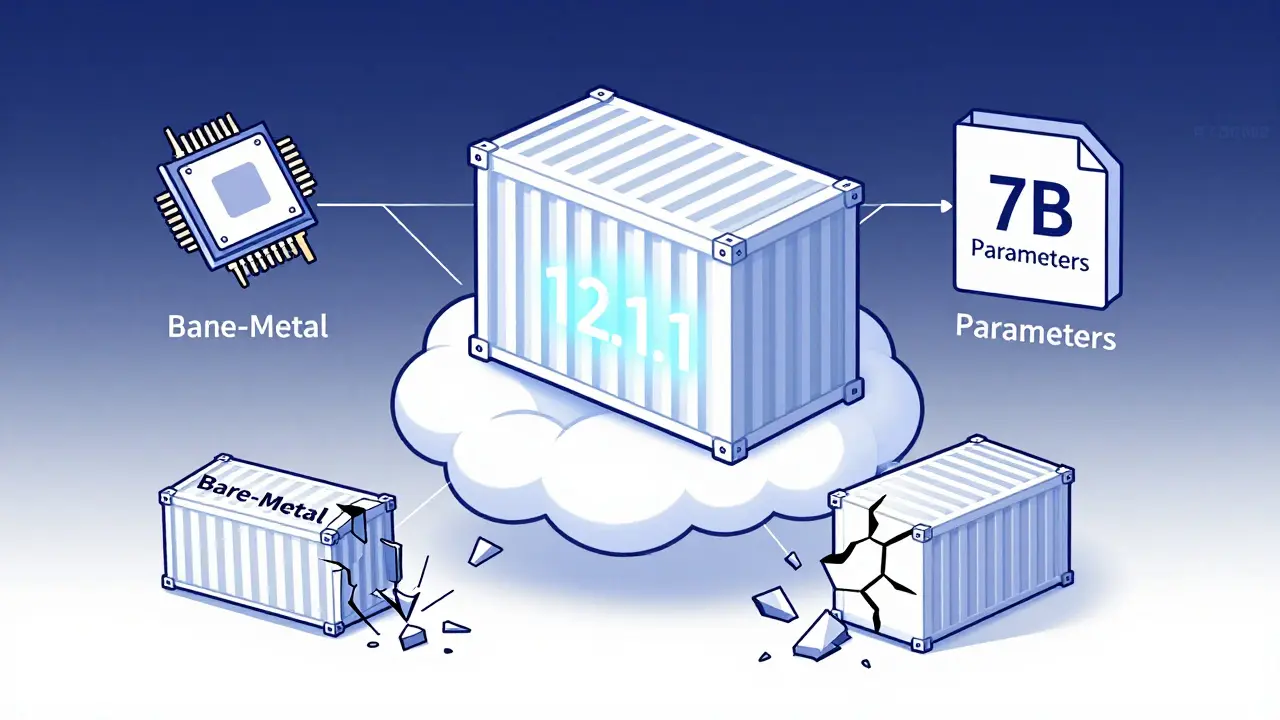

Containerizing large language models requires precise CUDA version matching, optimized Docker images, and secure model formats like .safetensors. Learn how to reduce startup time, shrink image size, and avoid the most common deployment failures.

Artificial Intelligence
