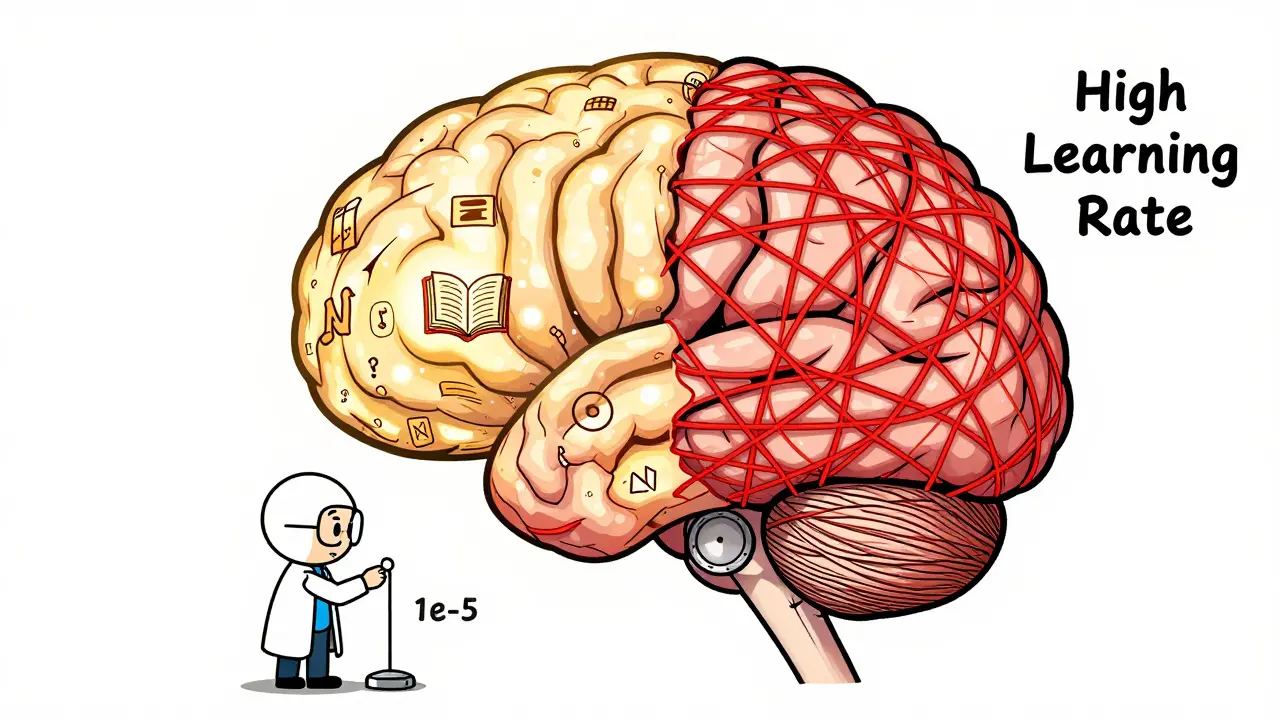

Tag: fine-tuning LLMs

Learn how to fine-tune large language models without losing their original knowledge. These posts cover hyperparameter choices, methods such as LoRA and FAPM, and the real-world trade-offs involved in keeping models accurate and reliable.

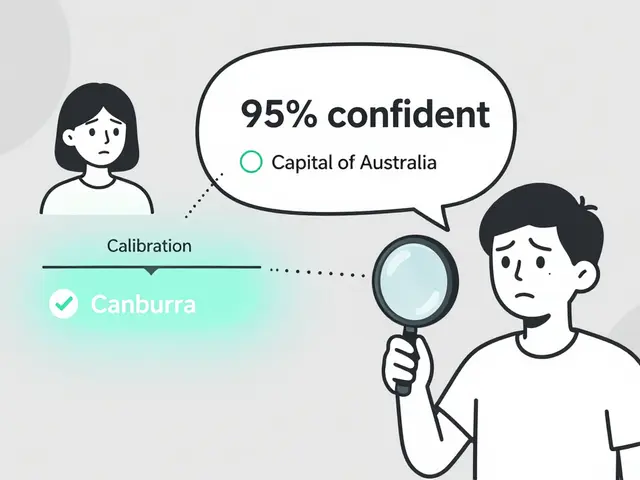

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan 16, 2026

Artificial Intelligence
