Tag: inference optimization

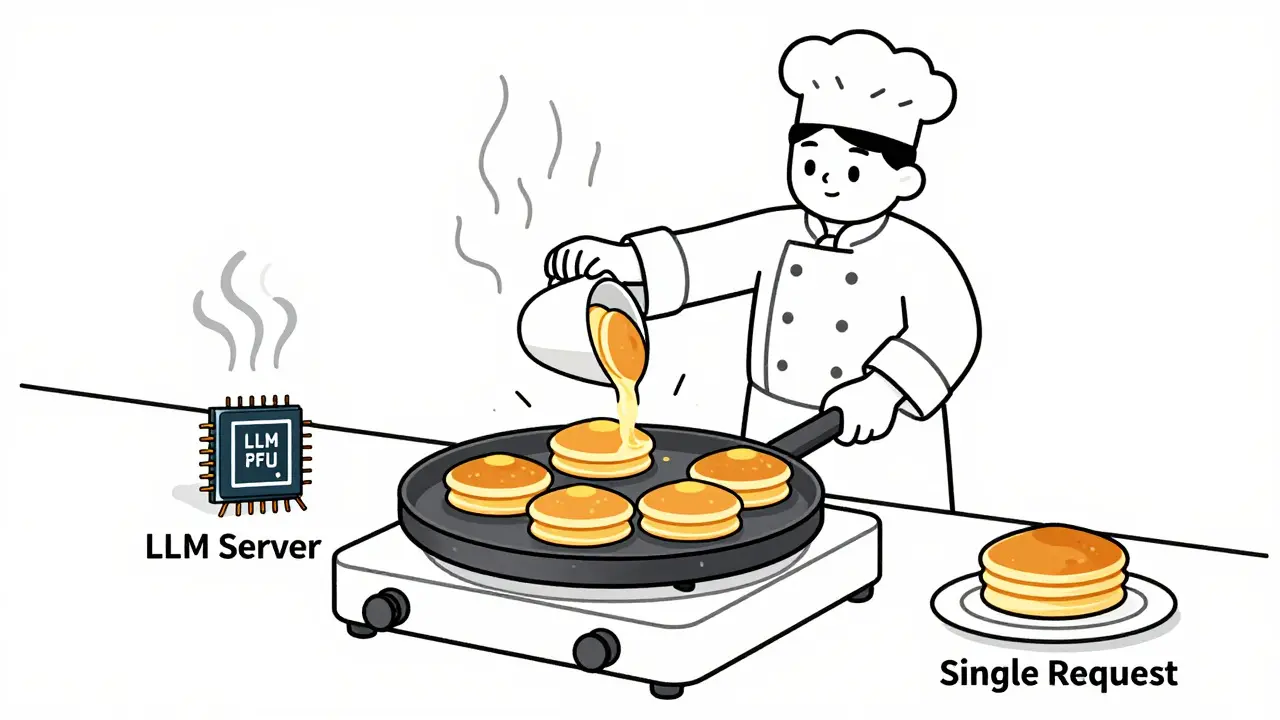

Learn how to choose optimal batch sizes for LLM serving to cut cost per token by up to 87%. Discover real-world results, batching types, hardware trade-offs, and proven techniques to reduce AI infrastructure costs.

Recent posts

Marketing Content at Scale with Generative AI: Product Descriptions, Emails, and Social Posts

Jun 29, 2025

Artificial Intelligence
