Tag: LLM chunking

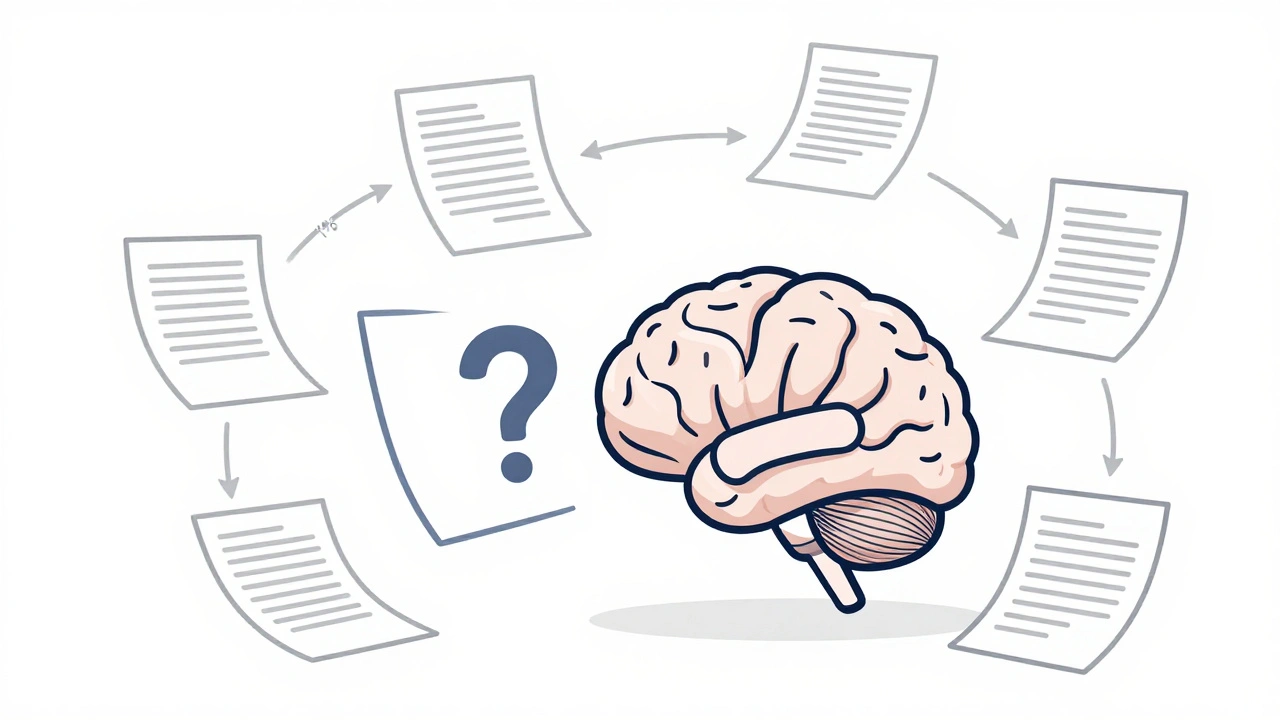

How a document is chunked largely determines how well a retrieval-augmented generation (RAG) system retrieves information from it. Page-level chunking with 15% overlap delivers the best balance of accuracy and speed for most use cases, though hybrid and adaptive methods are quickly gaining ground.
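To make "page-level chunking with 15% overlap" concrete, here is a minimal sketch. It assumes the document has already been split into pages (one string per page) and that overlap is measured in whitespace-separated words taken from the end of the previous page. The function name `chunk_pages` and the word-based measure are illustrative choices, not something the post specifies; a real pipeline might measure overlap in tokens or characters instead.

```python
def chunk_pages(pages: list[str], overlap: float = 0.15) -> list[str]:
    """Return one chunk per page, each prefixed with the tail of the previous page.

    Assumption: `overlap` is the fraction of the previous page's words
    that is carried over into the next chunk.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in the range [0, 1)")

    chunks = []
    for i, page in enumerate(pages):
        prefix = ""
        if i > 0 and overlap > 0:
            prev_words = pages[i - 1].split()
            # Number of trailing words to repeat from the previous page.
            n = round(len(prev_words) * overlap)
            if n > 0:
                prefix = " ".join(prev_words[-n:]) + " "
        chunks.append(prefix + page)
    return chunks


if __name__ == "__main__":
    demo_pages = [
        "Chunking splits a document into retrievable units before embedding.",
        "Overlap repeats a little context across chunk boundaries.",
    ]
    for chunk in chunk_pages(demo_pages):
        print(repr(chunk))
```

Repeating the tail of the previous page means a sentence that straddles a page break still appears intact in at least one chunk, at the cost of some duplicated text in the index.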
