Tag: LLM compression

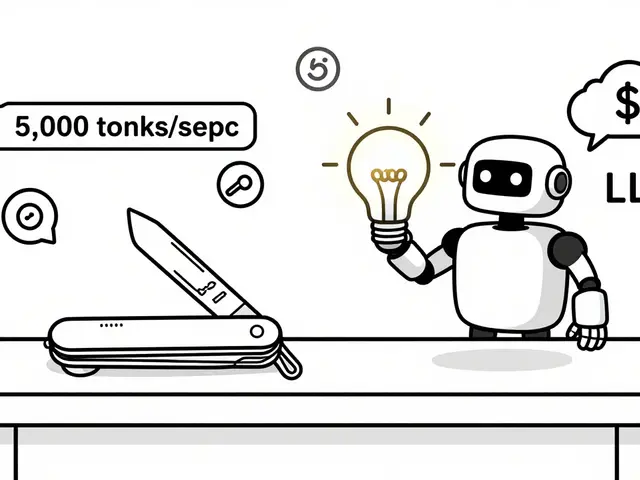

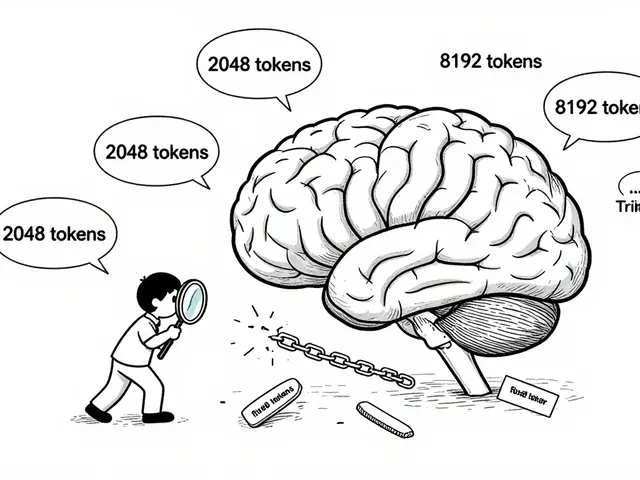

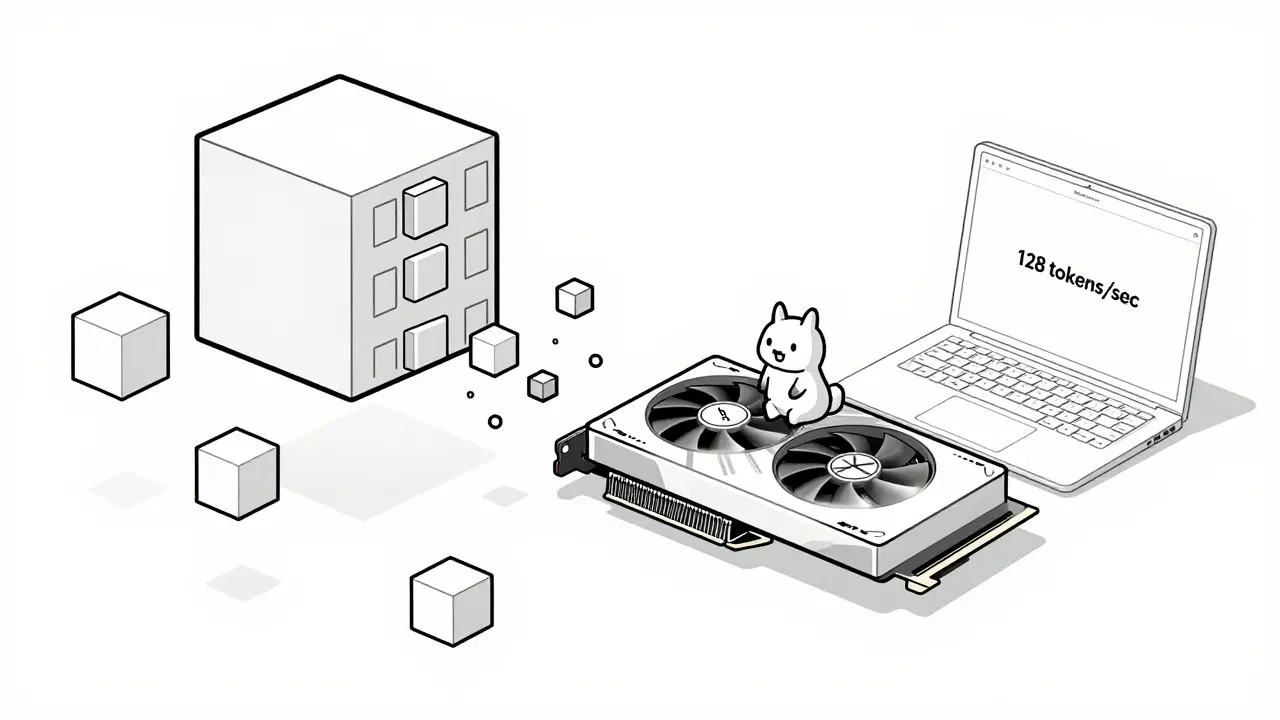

Learn how hardware-friendly LLM compression lets you run powerful AI models on consumer GPUs and CPUs. Discover quantization, sparsity, and real-world performance gains without needing a data center.

Categories

Archives

Recent-posts

How Generative AI Is Transforming Prior Authorization Letters and Clinical Summaries in Healthcare Admin

Dec, 15 2025

Artificial Intelligence

Artificial Intelligence