Tag: LLM inference cost

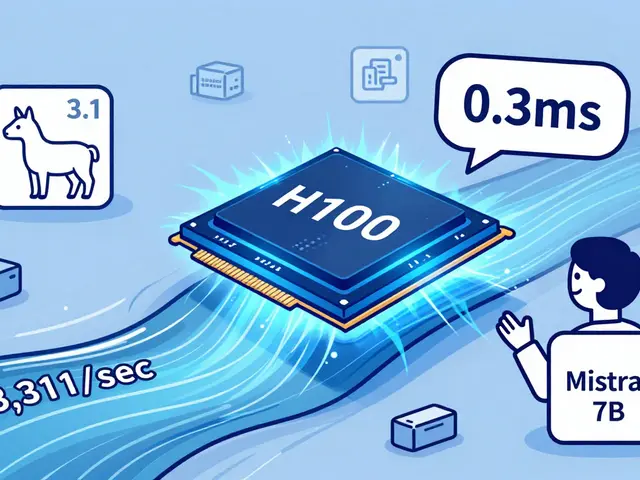

Speculative decoding and Mixture-of-Experts (MoE) are cutting LLM serving costs by up to 70%. Learn how these techniques boost speed, reduce hardware needs, and make powerful AI models affordable at scale.

Artificial Intelligence