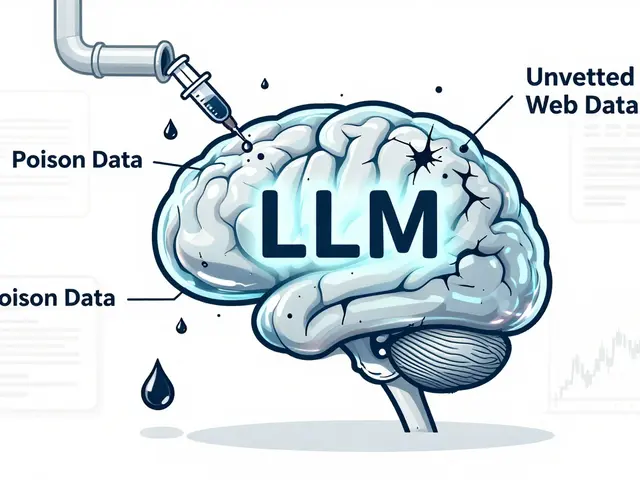

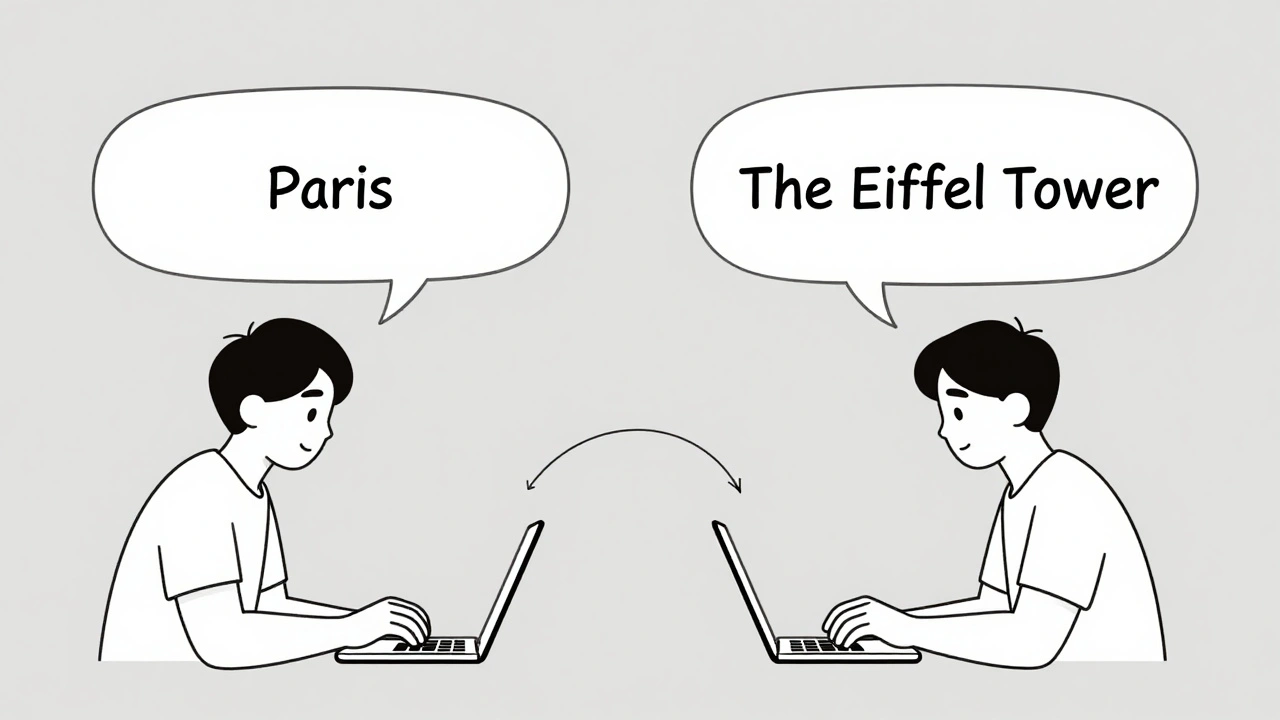

Tag: LLM output variation

Small changes in how you phrase a question can drastically alter an AI's response. Learn why prompt sensitivity makes LLMs unpredictable, how it breaks real applications, and proven ways to get consistent, reliable outputs.

Recent posts

Marketing Content at Scale with Generative AI: Product Descriptions, Emails, and Social Posts

Jun 29, 2025

Artificial Intelligence
