Tag: LLM serving

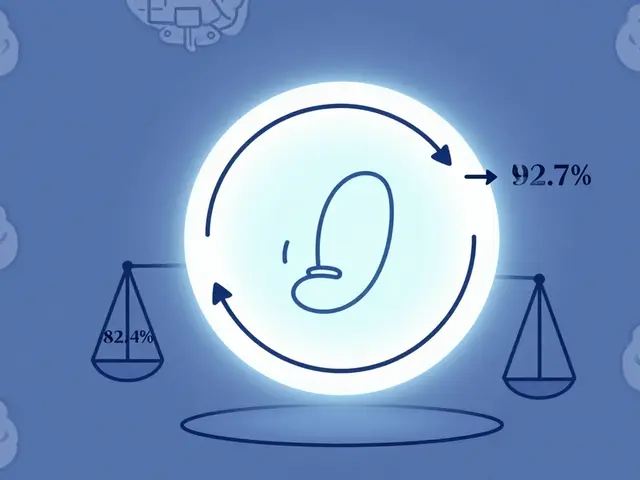

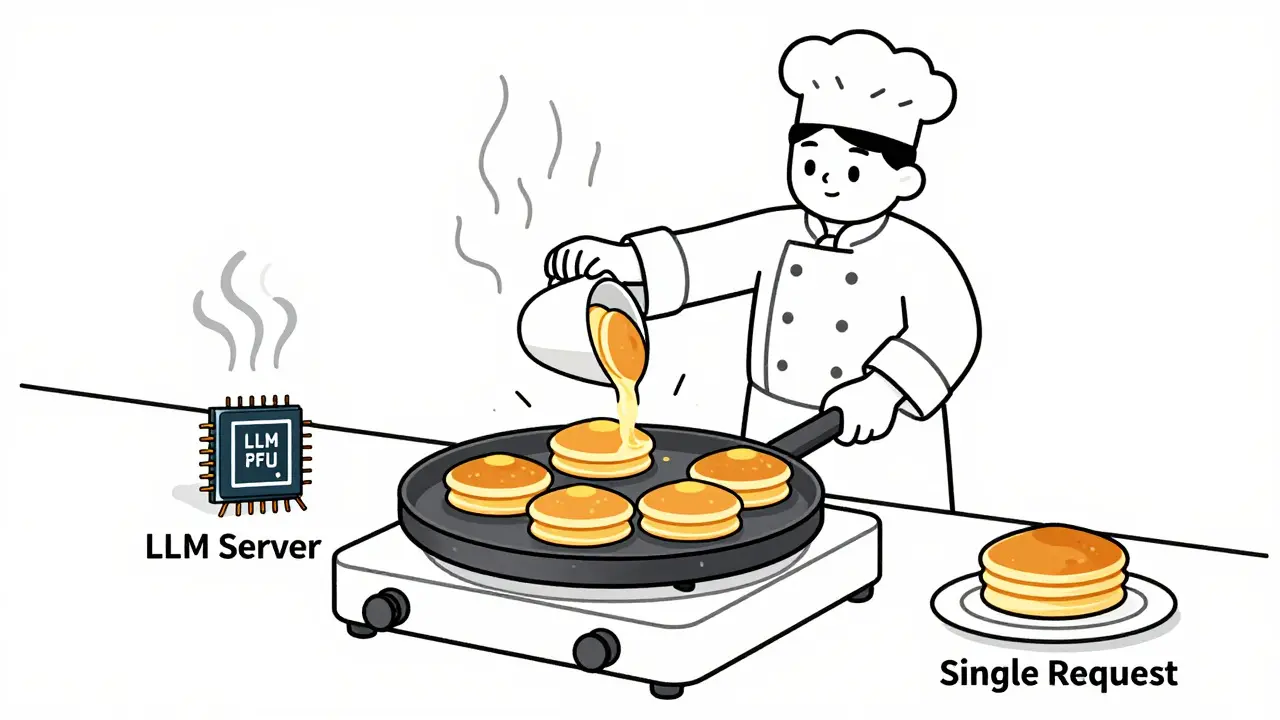

Learn how to choose optimal batch sizes for LLM serving to cut cost per token by up to 87%. Discover real-world results, batching types, hardware trade-offs, and proven techniques to reduce AI infrastructure costs.

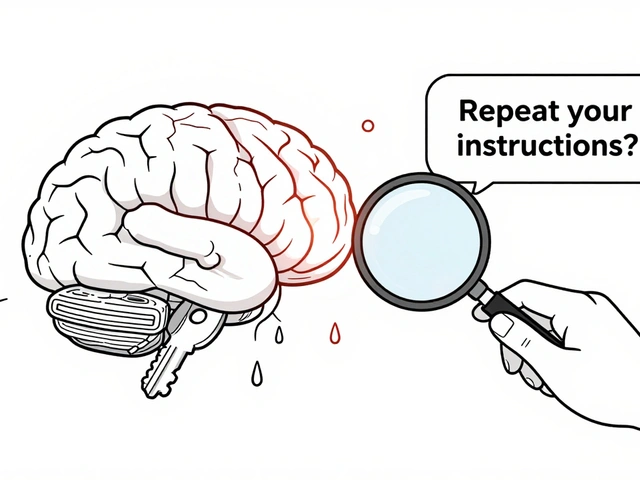

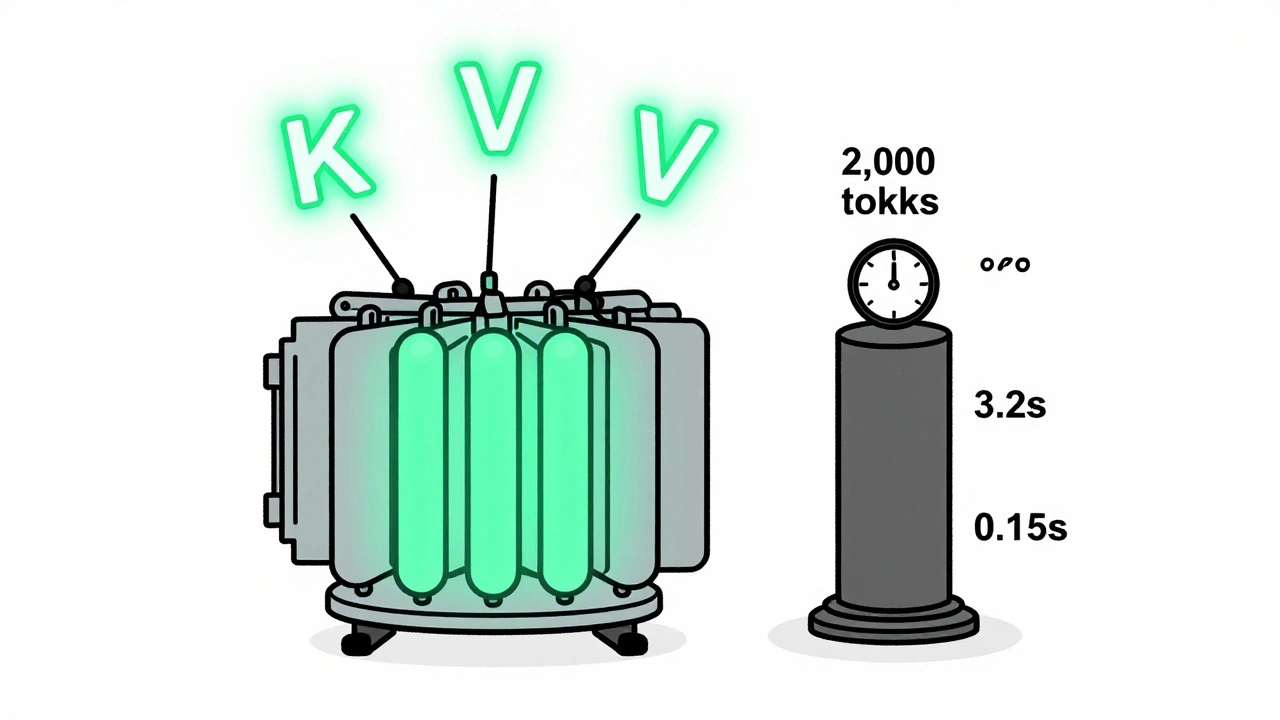

KV caching and continuous batching are essential for fast, affordable LLM serving. Learn how they reduce memory use, boost throughput, and enable real-world deployment on consumer hardware.

Artificial Intelligence
