Tag: outlier handling

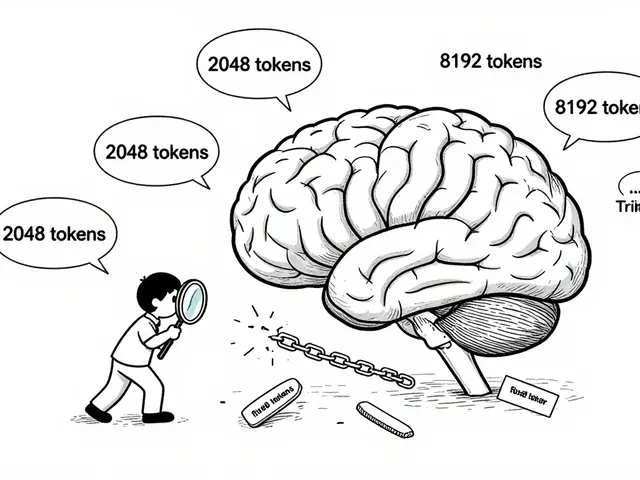

Learn how calibration and outlier handling keep quantized LLMs accurate when compressed to 4-bit. Discover which techniques work best for speed, memory, and reliability in real-world deployments.

Recent posts

How Generative AI Is Transforming Prior Authorization Letters and Clinical Summaries in Healthcare Admin

Dec 15, 2025

Artificial Intelligence
