Tag: prompt brittleness

Prompt robustness is the ability of AI models to handle typos, rephrasings, and messy inputs without their output quality breaking down. Learn how MOF, RoP, and keyword choices make LLMs more reliable in real-world use.

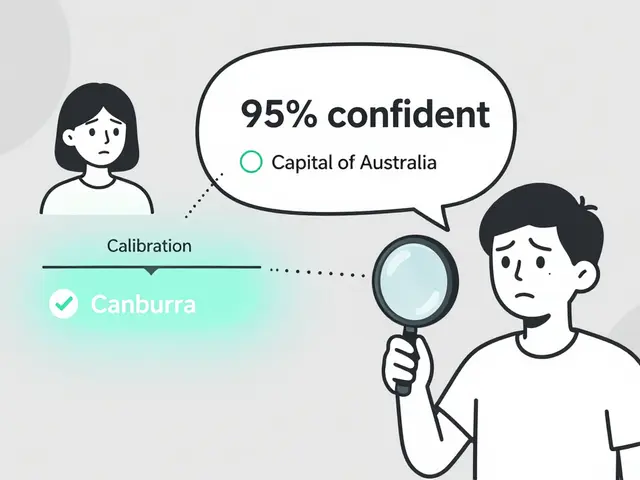

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan 16, 2026

Artificial Intelligence