Tag: prompt sensitivity

Small changes in how you phrase a question can drastically alter an AI's response. Learn why prompt sensitivity makes LLMs unpredictable, how it breaks real applications, and which proven techniques produce consistent, reliable outputs.

Categories

Archives

Recent-posts

Calibration and Outlier Handling in Quantized LLMs: How to Keep Accuracy When Compressing Models

Jul, 6 2025

Generative AI for Software Development: How AI Coding Assistants Boost Productivity in 2025

Dec, 19 2025

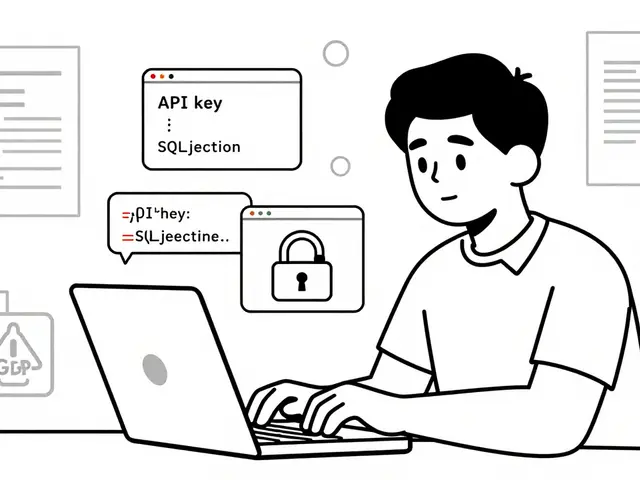

Procurement Checklists for Vibe Coding Tools: Security and Legal Terms You Can't Ignore

Jan, 21 2026

Artificial Intelligence
