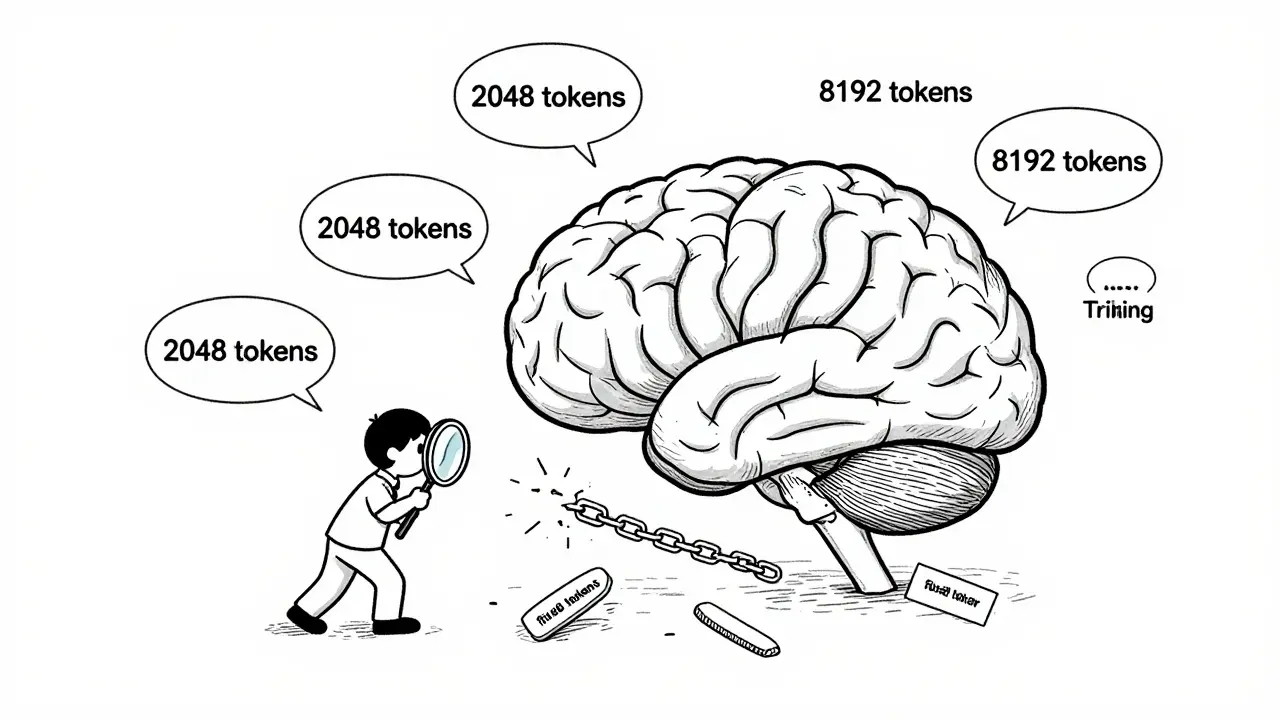

Tag: sequence length curriculum

Training duration and token counts alone don't determine LLM generalization. How sequence lengths are structured during training matters more-variable-length curricula outperform fixed-length approaches, reduce costs, and unlock true reasoning ability.

Categories

Archives

Recent-posts

How Generative AI Is Transforming Prior Authorization Letters and Clinical Summaries in Healthcare Admin

Dec, 15 2025

Artificial Intelligence

Artificial Intelligence