Tag: streaming LLM responses

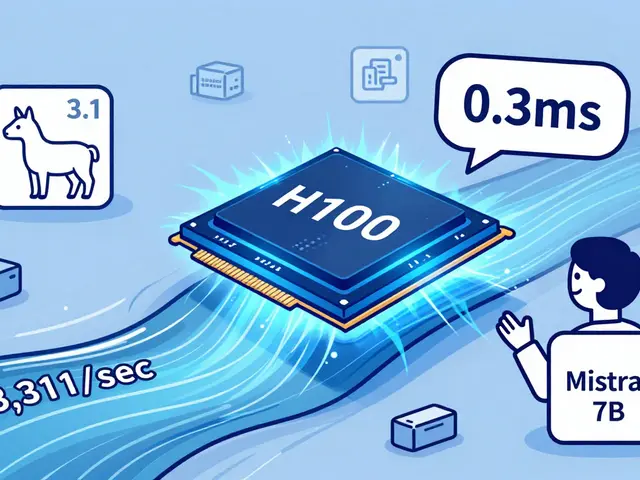

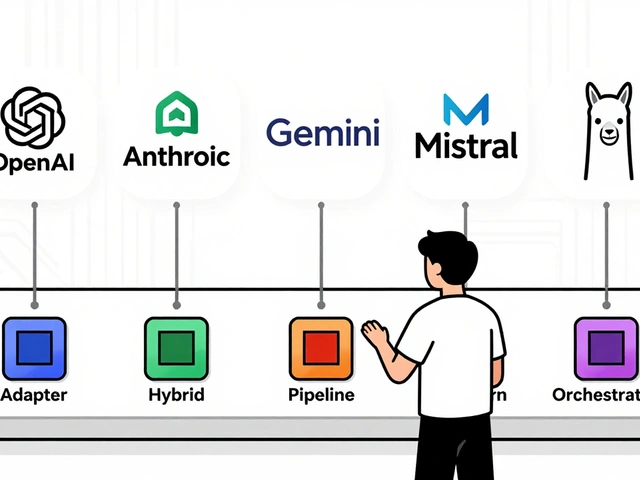

Learn how streaming, batching, and caching reduce LLM response times, with real-world techniques used by AWS, NVIDIA, and vLLM to cut latency below 200 ms while lowering costs and improving user engagement.

Recent posts

Localization and Translation Using Large Language Models: How Context-Aware Outputs Are Changing the Game

Nov 19, 2025

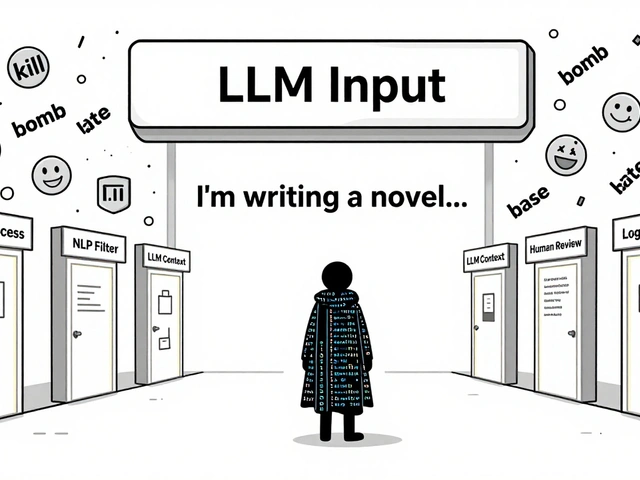

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Prevent Harmful Content in Real Time

Aug 2, 2025

Artificial Intelligence