Tag: MoE models

Modern generative AI isn't powered by bigger models anymore; it's built on smarter architectures. Discover how MoE, verifiable reasoning, and hybrid systems are making AI faster, cheaper, and more reliable in 2025.

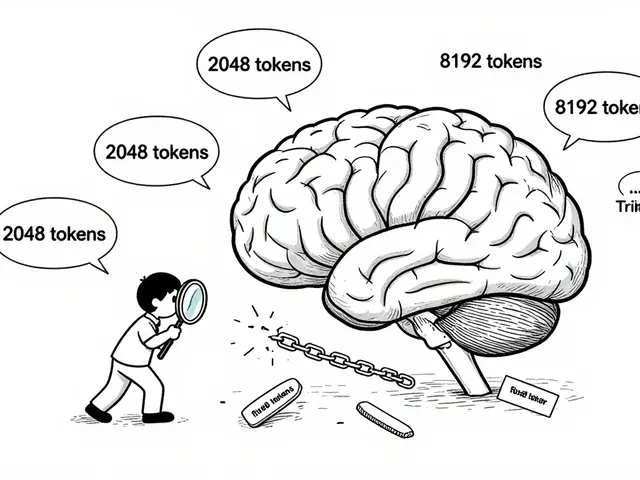

Speculative decoding and Mixture-of-Experts (MoE) are cutting LLM serving costs by up to 70%. Learn how these techniques boost speed, reduce hardware needs, and make powerful AI models affordable at scale.

Recent posts

Localization and Translation Using Large Language Models: How Context-Aware Outputs Are Changing the Game

Nov 19, 2025

Artificial Intelligence