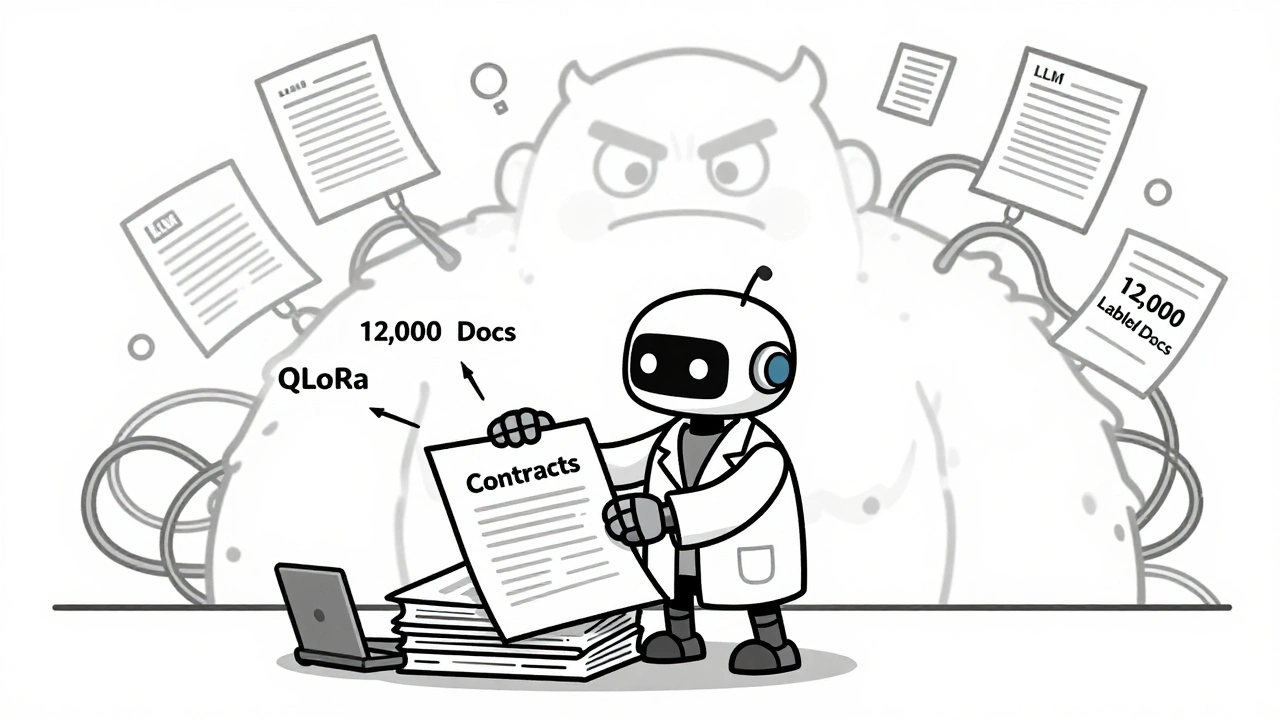

Tag: QLoRA

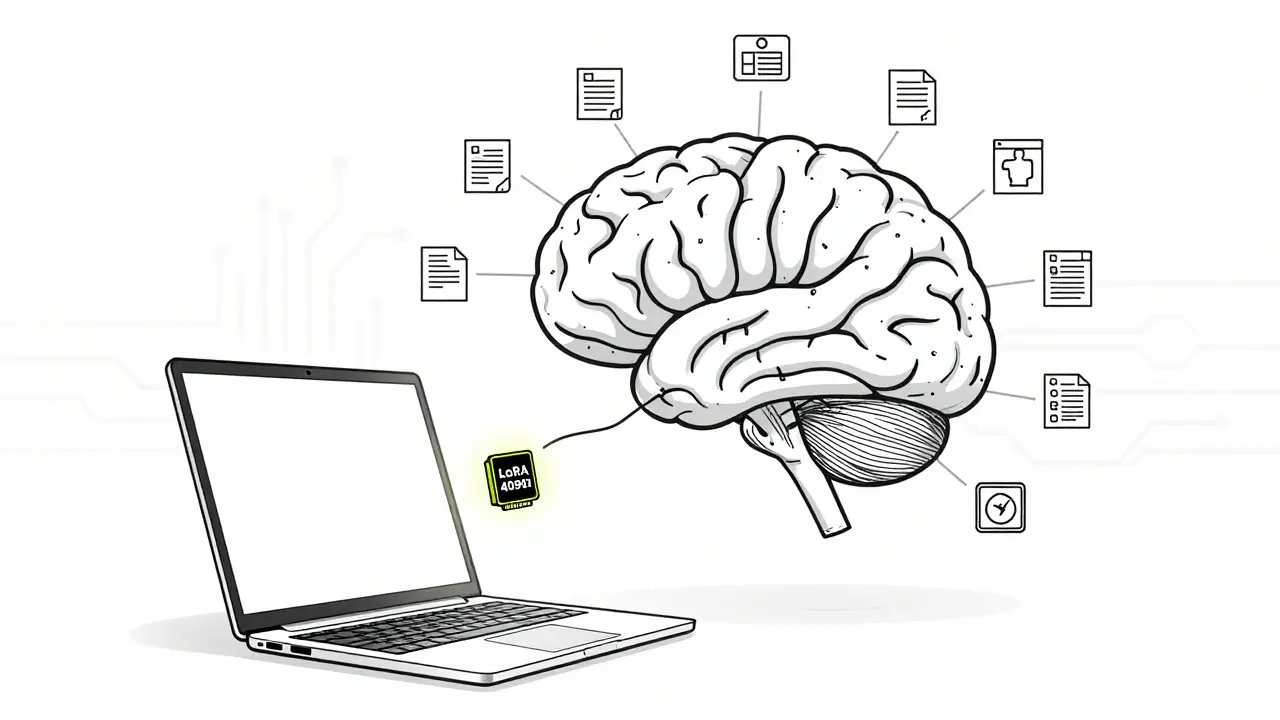

Few-shot fine-tuning lets you adapt large language models with as few as 50 examples, making AI usable in data-scarce fields like healthcare and law. Learn how LoRA and QLoRA make this possible, even on a single GPU.

Fine-tuned LLMs outperform general models in niche tasks like legal analysis, medical coding, and compliance. Learn how specialization beats scale, when to use QLoRA, and why hybrid RAG systems are the future.

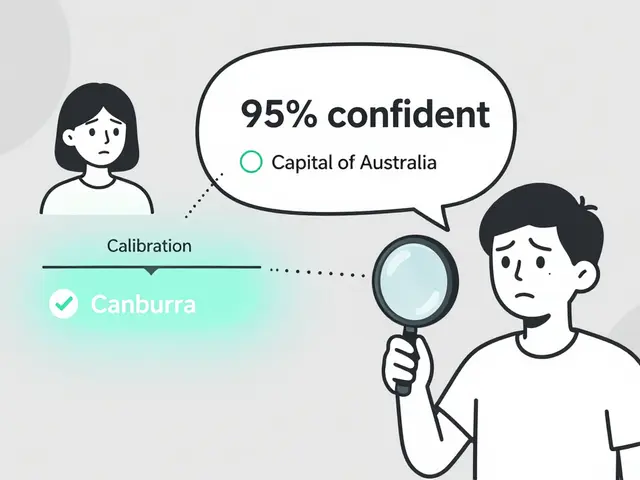

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan, 16 2026

Artificial Intelligence

Artificial Intelligence