Tag: large language models

Large language models are transforming localization by understanding context, tone, and culture, not just words. Learn how they outperform traditional translation tools and what it takes to use them safely and effectively.

Despite the rise of massive language models, tokenization remains essential for accuracy, efficiency, and cost control. Learn why subword methods like BPE and SentencePiece still shape how LLMs understand language.

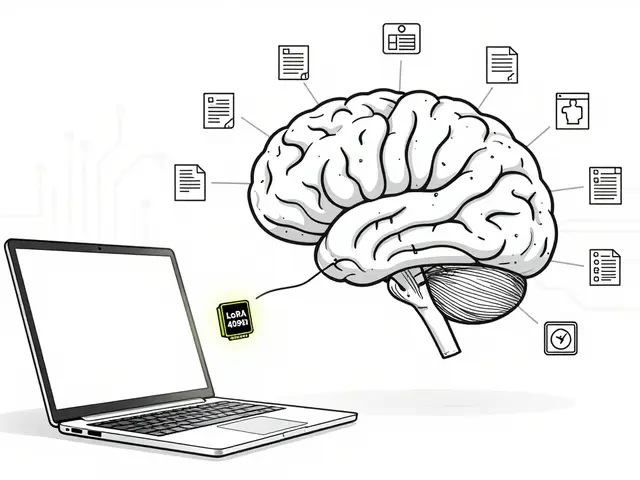

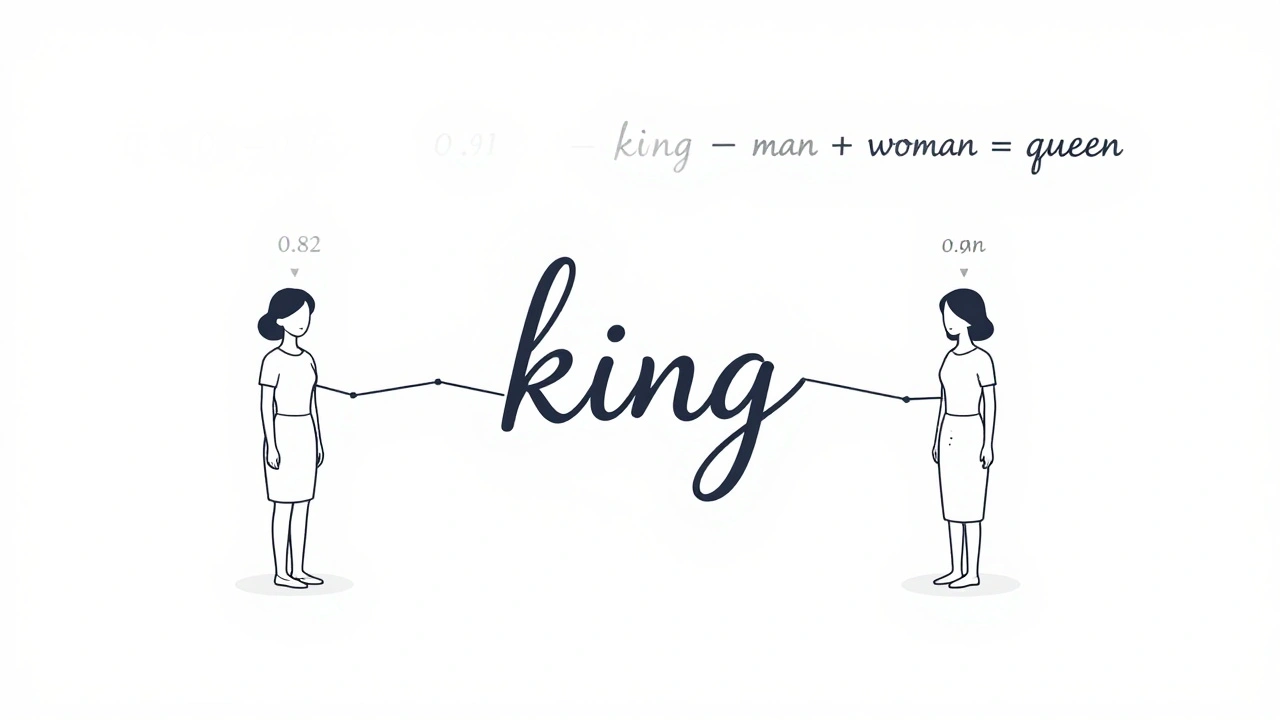

Learn how embeddings, attention, and feedforward networks form the core of modern large language models like GPT and Llama. No jargon, just clear explanations of how AI understands and generates human language.

Recent posts

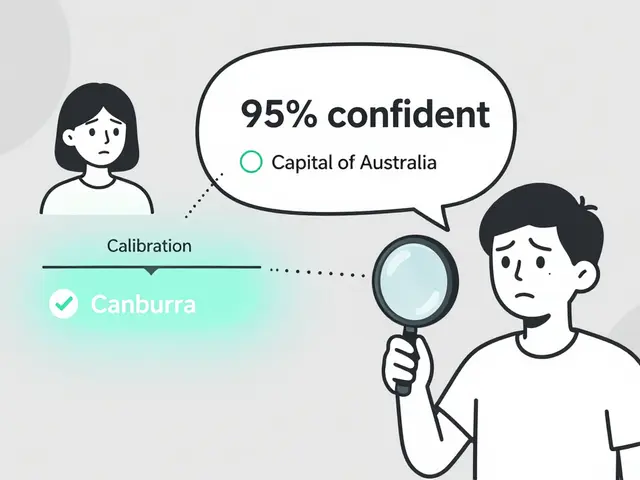

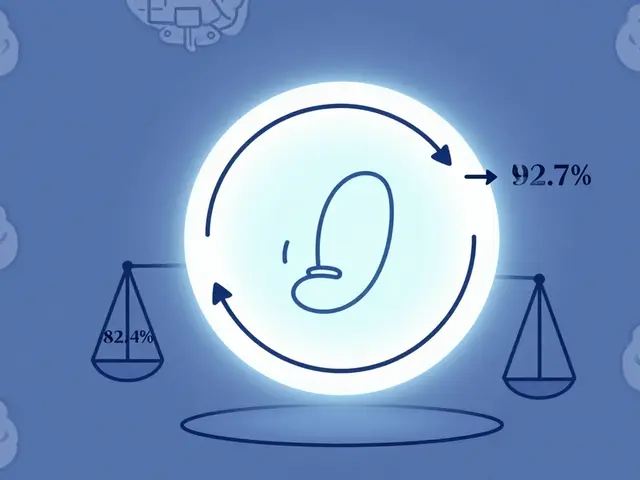

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan 16, 2026

Artificial Intelligence
