Tag: prompt engineering

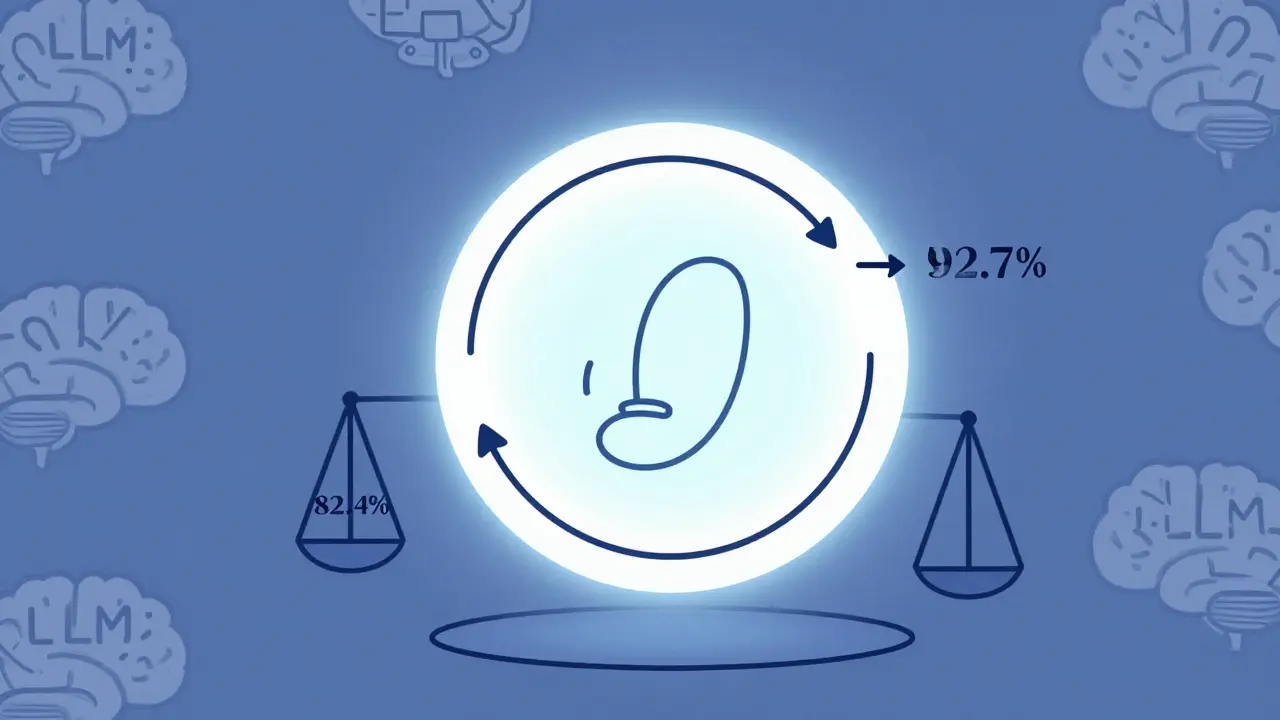

Reinforcement Learning from Prompts (RLfP) automates prompt optimization using feedback loops, boosting LLM accuracy by up to 10% on key benchmarks. Learn how PRewrite and PRL work, their real-world gains, hidden costs, and who should use them.

NLP pipelines and end-to-end LLMs aren't competitors; they're complementary. Learn when to use each for speed, cost, accuracy, and compliance in real-world AI systems.

Small changes in how you phrase a question can drastically alter an AI's response. Learn why prompt sensitivity makes LLMs unpredictable, how it breaks real applications, and proven ways to get consistent, reliable outputs.

Artificial Intelligence
