Archive: 2025/09

Learn what to allow, limit, and prohibit in AI-assisted vibe coding policies to prevent security breaches, ensure compliance, and keep your team productive in 2025.

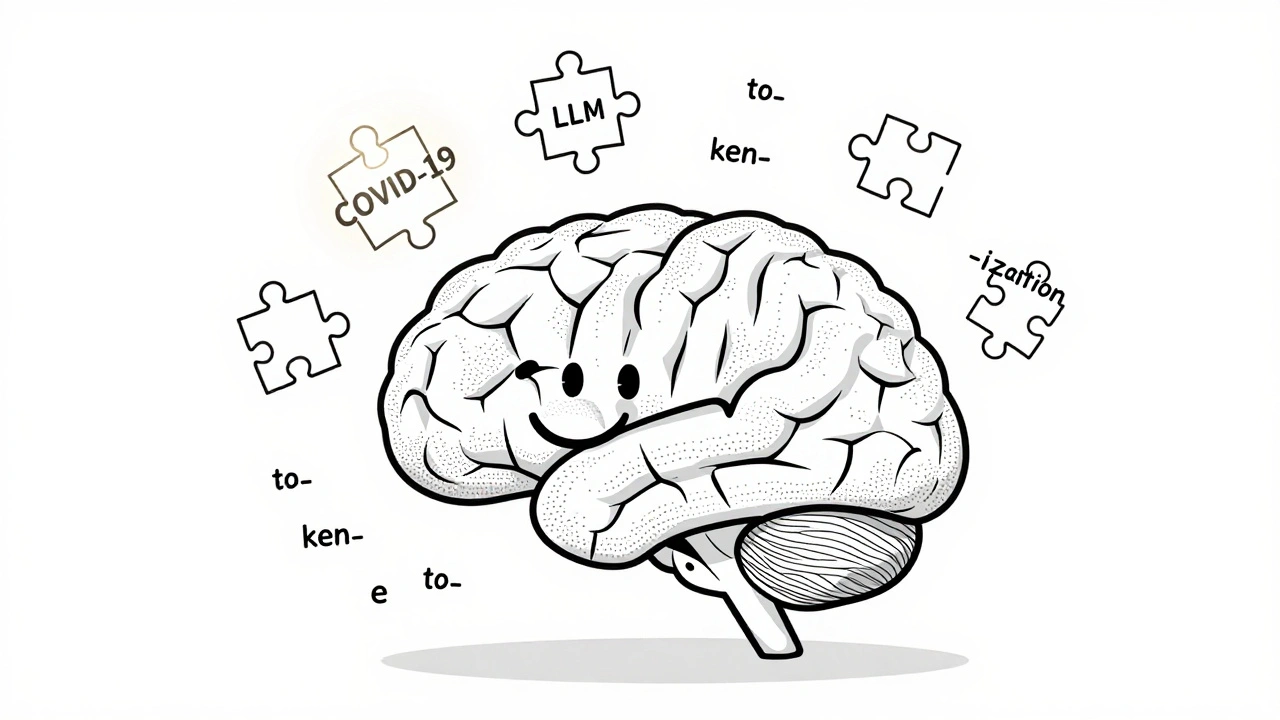

Despite the rise of massive language models, tokenization remains essential for accuracy, efficiency, and cost control. Learn why subword methods like BPE and SentencePiece still shape how LLMs understand language.

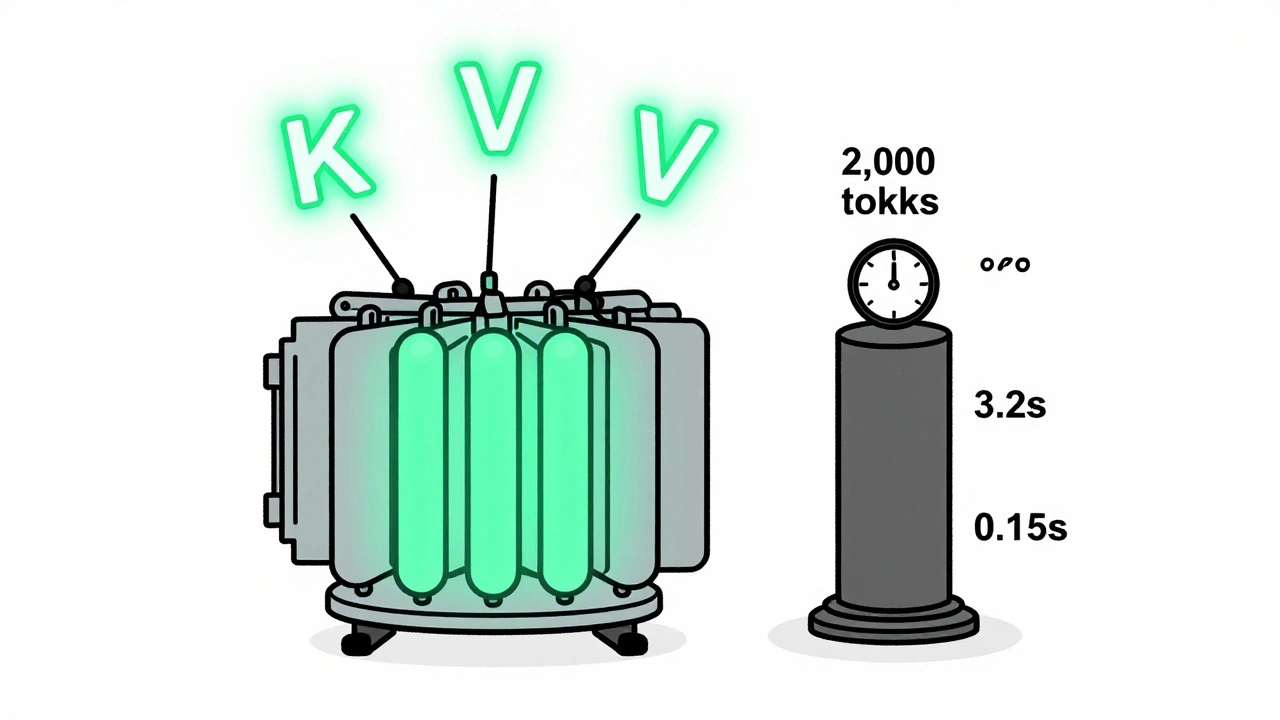

KV caching and continuous batching are essential for fast, affordable LLM serving. Learn how they reduce memory use, boost throughput, and enable real-world deployment on consumer hardware.

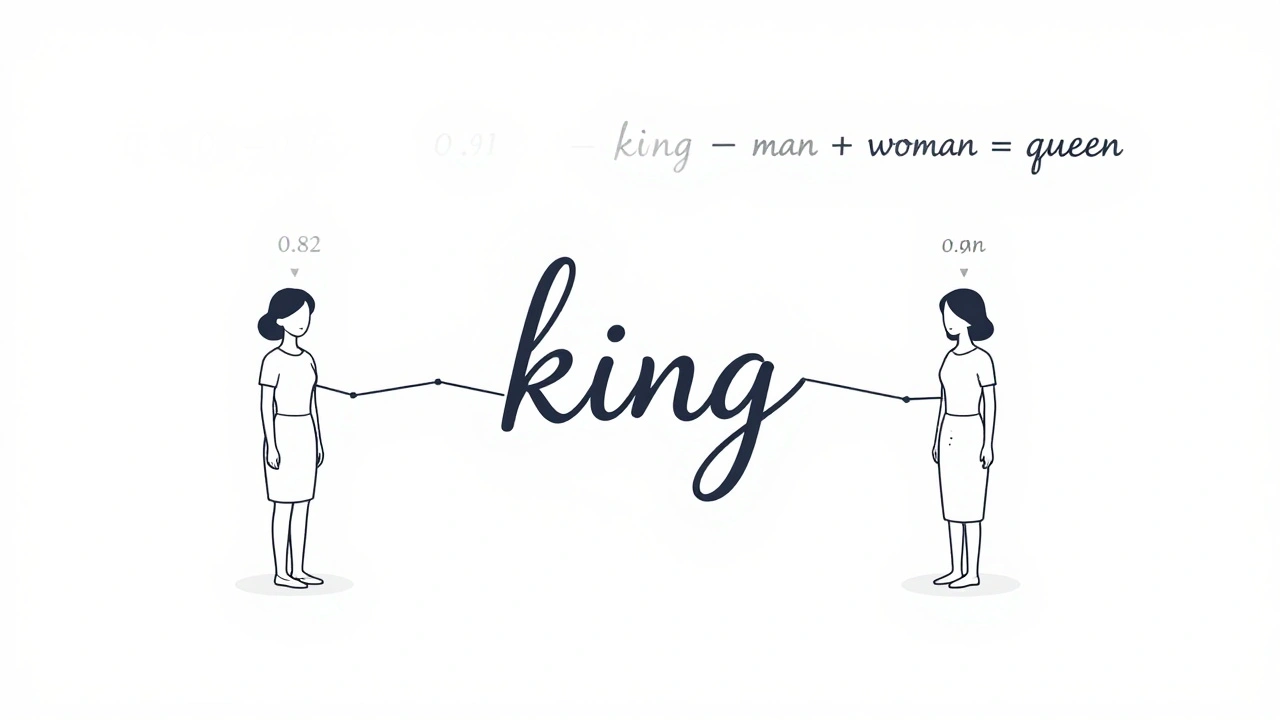

Learn how embeddings, attention, and feedforward networks form the core of modern large language models like GPT and Llama. No jargon, just clear explanations of how AI understands and generates human language.

Categories

Archives

Recent-posts

Procurement Checklists for Vibe Coding Tools: Security and Legal Terms You Can't Ignore

Jan, 21 2026

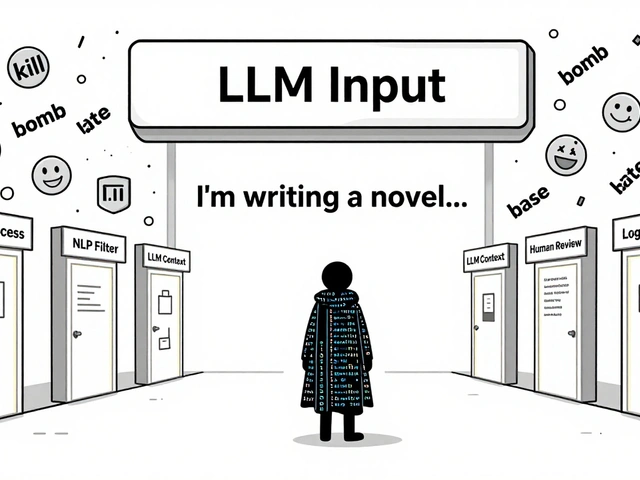

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Prevent Harmful Content in Real Time

Aug, 2 2025

Artificial Intelligence

Artificial Intelligence