Archive: 2026/01 - Page 2

Error-forward debugging lets you feed stack traces directly to LLMs to get instant, accurate fixes for code errors. Learn how it works, why it's faster than traditional methods, and how to use it safely today.

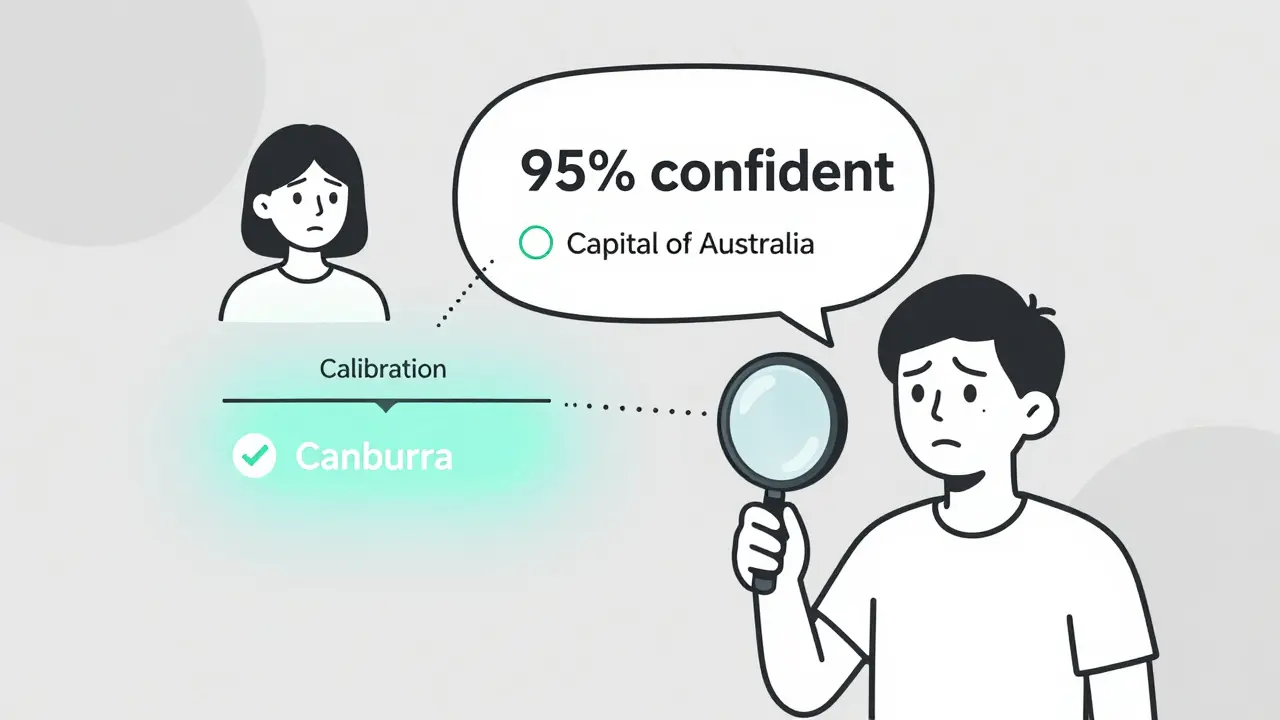

Most LLMs are overconfident in their answers. Token probability calibration fixes this by aligning confidence scores with real accuracy. Learn how it works, which models are best, and how to apply it.

LLM-powered semantic search is transforming e-commerce by understanding user intent instead of just matching keywords. See how it boosts conversions, reduces abandonment, and what you need to implement it successfully.

Pattern libraries for AI are reusable templates that guide AI coding assistants to generate secure, consistent code. Learn how they reduce vulnerabilities by up to 63% and transform vibe coding from guesswork into reliable collaboration.

Vibe coding teaches software architecture by having students inspect AI-generated code before writing their own. This method helps learners understand design patterns faster and builds deeper system-level thinking than traditional syntax-first approaches.

Performance budgets set clear limits on page weight, load time, and resource usage to keep websites fast. Learn how to define, measure, and enforce them using real tools and data to improve user experience and SEO.

Recent posts

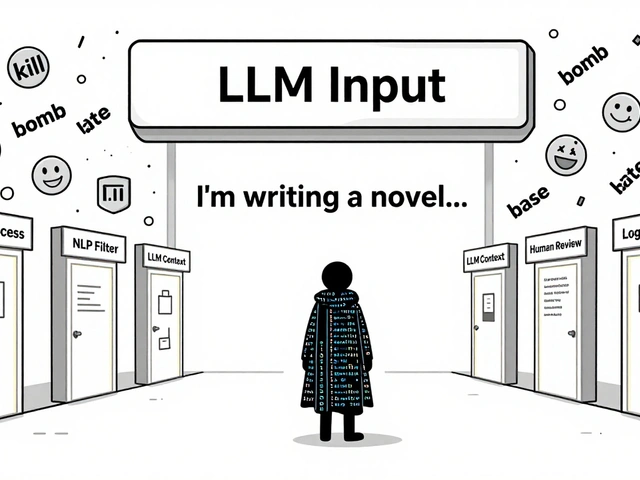

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Prevent Harmful Content in Real Time

Aug 2, 2025

Artificial Intelligence
